Von Neumann architecture

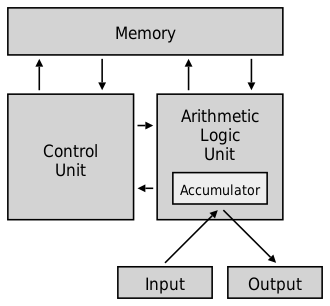

The von Neumann architecture, also known as the von Neumann model or Princeton architecture, is a computer architecture based on the one described in 1945 by the mathematician and physicist John von Neumann and others in the First Draft of a Report on the EDVAC. It describes a design for an electronic digital computer with these parts: a processing unit that contains an arithmetic logic unit and processor registers; a control unit that contains an instruction register and a program counter; a memory that stores both data and instructions; external mass storage; and input and output mechanisms. The term has evolved to mean any stored-program computer in which an instruction fetch and a data operation cannot occur at the same time, because they share a common bus. This is known as the von Neumann bottleneck, and it often limits system performance.
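
To make this description concrete, here is a minimal sketch of a stored-program machine in Python. The instruction set (LOAD, ADD, STORE, HALT), the single accumulator, and the function name `run` are hypothetical simplifications, not the EDVAC's actual design. The point is only that instructions and data live in one memory, and that the control unit repeatedly fetches, decodes, and executes the word at the program counter.

```python
# Hypothetical accumulator machine: a sketch of the stored-program idea, not the EDVAC design.
# Instructions and data share one memory (a Python list); each cell is named by its index.

def run(memory, max_steps=1000):
    """Run the program stored in `memory`, starting at address 0, and return the memory."""
    pc = 0   # program counter (control unit)
    acc = 0  # accumulator (processor register used by the arithmetic logic unit)
    for _ in range(max_steps):
        ir = memory[pc]   # fetch: copy the word at the program counter into the instruction register
        pc += 1           # by default, the next instruction is in the next memory cell
        op, addr = ir     # decode: split the instruction into opcode and address
        if op == "LOAD":  # execute
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r} at address {pc - 1}")
    raise RuntimeError("step limit reached without HALT")


# Program and data in the same memory: compute memory[6] = memory[4] + memory[5].
program = [
    ("LOAD", 4),   # address 0
    ("ADD", 5),    # address 1
    ("STORE", 6),  # address 2
    ("HALT", 0),   # address 3
    2,             # address 4: data
    3,             # address 5: data
    0,             # address 6: result
]
result = run(program)
print(result[6])  # 5
```

Because every instruction fetch and every LOAD or STORE goes through the same `memory` list, the sketch also shows why fetches and data accesses compete for a single path.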

The design of a von Neumann architecture machine is simpler than that of a Harvard architecture machine, which is also a stored-program system but has one dedicated set of address and data buses for reading and writing data in memory, and another set of address and data buses for fetching instructions.

A stored-program digital computer is one that keeps its program instructions, as well as its data, in read-write random-access memory (RAM). Stored-program computers were an advance over the program-controlled computers of the 1940s, such as Colossus and the ENIAC, which were programmed by setting switches and inserting patch cables to route data and control signals between various functional units. In the vast majority of modern computers, the same memory is used for both data and program instructions, and the von Neumann vs. Harvard distinction applies to the cache architecture, but not to main memory.

History

The first computing machines had fixed programs. Some very simple computers still use this design, either for simplicity or for training purposes. For example, a desktop calculator is (in principle) a fixed-program computer. It can do basic mathematics, but it cannot be used as a word processor or a game console. Changing the program of a fixed-program machine requires rewiring, restructuring, or redesigning the machine. The earliest computers were not so much "programmed" as "designed" for a particular task. "Reprogramming", when it was possible at all, was a laborious process that began with flowcharts and paper notes, followed by detailed engineering designs, and then the often arduous process of physically rewiring and rebuilding the machine. It could take up to three weeks to set up a program on the ENIAC and get it working.

That situation changed with the proposal of the stored-program computer. A stored-program computer includes, by design, an instruction set, and can store in memory a set of instructions (a program) that details the computation.

A stored-program design also allows for self-modifying code. One early motivation for such a facility was the need for a program to increment or otherwise modify the address portion of instructions, which in early designs had to be done manually. This became less important when index registers and addressing modes became common features of machine architecture. Another use was to embed frequently used data in the instruction stream using immediate addressing. Self-modifying code has largely fallen out of favor, since it is usually hard to understand and debug, and it is inefficient under modern processor pipelining and caching schemes.
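
The early use of self-modifying code described above, stepping through an array by rewriting an instruction's address field, can be sketched on a toy machine. The decimal word format (opcode * 100 + address), the opcode set, and the helpers `enc` and `run` are hypothetical. Because an instruction is just a number in memory, the program loads the ADD instruction at address 1 as data, adds 1 to it, and stores it back, so each pass adds the next array element.

```python
# Hypothetical word format: instruction = opcode * 100 + address (addresses 0..99).
HALT, LOAD, ADD, STORE, SUB, JUMPZ, JUMP = range(7)


def enc(op, addr=0):
    """Encode an instruction as a single integer word."""
    return op * 100 + addr


def run(mem, max_steps=10_000):
    pc, acc = 0, 0
    for _ in range(max_steps):
        op, addr = divmod(mem[pc], 100)  # decode the word at the program counter
        pc += 1
        if op == HALT:
            return mem
        elif op == LOAD:
            acc = mem[addr]
        elif op == ADD:
            acc += mem[addr]
        elif op == SUB:
            acc -= mem[addr]
        elif op == STORE:
            mem[addr] = acc
        elif op == JUMPZ:                # jump only if the accumulator is zero
            if acc == 0:
                pc = addr
        elif op == JUMP:
            pc = addr
        else:
            raise ValueError(f"unknown opcode {op} at address {pc - 1}")
    raise RuntimeError("step limit reached without HALT")


SUM, ONE, COUNT, ARR = 12, 13, 14, 15
program = [
    enc(LOAD, SUM),     # 0
    enc(ADD, ARR),      # 1: its address field is rewritten on every pass
    enc(STORE, SUM),    # 2
    enc(LOAD, 1),       # 3: read instruction 1 as ordinary data
    enc(ADD, ONE),      # 4: increment its address field
    enc(STORE, 1),      # 5: write the modified instruction back
    enc(LOAD, COUNT),   # 6
    enc(SUB, ONE),      # 7
    enc(STORE, COUNT),  # 8
    enc(JUMPZ, 11),     # 9: leave the loop when COUNT reaches 0
    enc(JUMP, 0),       # 10
    enc(HALT),          # 11
    0, 1, 3,            # 12: sum, 13: constant one, 14: element count
    10, 20, 30,         # 15-17: the array
]
mem = run(program)
print(mem[SUM])  # 60
print(mem[1])    # 218, i.e. enc(ADD, 18): the instruction now points past the array
```

An index register removes the need for steps 3 to 5: the hardware adds the register to the address at execution time, so the program text never changes.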

On a large scale, the ability to treat instructions as data is what makes assemblers, compilers, linkers, loaders, and other automated programming tools possible. It allows one to "write programs that write programs". On a smaller scale, repetitive I/O-intensive operations, such as the early BitBLT image manipulators or pixel and vertex shaders in modern 3D graphics, were considered inefficient to run without custom hardware. These operations could be accelerated on general-purpose processors with "on the fly compilation" (run-time compilation), that is, with programs that generate code, a form of self-modifying code that has remained popular.
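
As a small illustration of "programs that write programs", the sketch below builds Python source text for a function specialized to a fixed set of weights and compiles it at run time with the built-in `compile` and `exec`. This is only a high-level analogy: run-time compilers for BitBLT or shaders emit machine code, and the name `make_weighted_sum` is hypothetical.

```python
def make_weighted_sum(weights):
    """Generate, compile, and return a function specialized for `weights`, plus its source."""
    # Unroll the loop at generation time: one term per weight, constants baked in.
    terms = " + ".join(f"{w!r} * x[{i}]" for i, w in enumerate(weights)) or "0"
    source = f"def weighted_sum(x):\n    return {terms}\n"
    namespace = {}
    exec(compile(source, "<generated>", "exec"), namespace)  # the program writes a program
    return namespace["weighted_sum"], source


f, src = make_weighted_sum([2, 0.5, -1])
print(src)            # the generated function text
print(f([1, 4, 3]))   # 1.0, i.e. 2*1 + 0.5*4 + (-1)*3
```

The generated function contains no loop and no lookups into `weights`, which is the kind of specialization that makes run-time code generation worthwhile for repetitive operations.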

There are some drawbacks to the von Neumann design. Aside from the von Neumann bottleneck described below, program modifications can be quite harmful, whether by accident or by design. In some simple stored-program computer designs, a malfunctioning program can damage itself, other programs, or the operating system, possibly leading to a computer crash. Memory protection and other forms of access control can usually protect against both accidental and malicious program modification.

Development of the stored program concept

The mathematician Alan Turing, who had been alerted to a problem in mathematical logic by Max Newman's lectures at the University of Cambridge, wrote a paper in 1936 entitled On Computable Numbers, with an Application to the Entscheidungsproblem, which was published in the Proceedings of the London Mathematical Society. In it he described a hypothetical machine he called a "universal computing machine", now known as the "Universal Turing machine". The hypothetical machine had an infinite store (memory in today's terminology) that contained both instructions and data. John von Neumann became acquainted with Turing while he was a visiting professor at Cambridge in 1935, and again during Turing's PhD year at the Institute for Advanced Study in Princeton, New Jersey, in 1936-37. It is not clear when he learned of Turing's 1936 paper.

In 1936, Konrad Zuse also anticipated in two patent applications that machine instructions could be stored in the same storage used for data.

Independently, J. Presper Eckert and John Mauchly, who were developing the ENIAC at the Moore School of Electrical Engineering of the University of Pennsylvania, wrote about the stored-program concept in December 1943. In January 1944, while planning a new machine, the EDVAC, Eckert wrote that data and programs would be stored in a new addressable memory device, a delay-line memory. This was the first time the construction of a practical stored-program machine was proposed. At that time, they were not aware of Turing's work.

Von Neumann was involved in the Manhattan Project at Los Alamos National Laboratory, which required huge amounts of calculation. This drew him to the ENIAC project in the summer of 1944. There he joined the ongoing discussions on the design of the stored-program computer, the EDVAC. As part of that group, he volunteered to write up a description of it. The term "von Neumann architecture" arose from von Neumann's paper "First Draft of a Report on the EDVAC", dated June 30, 1945, which included ideas from Eckert and Mauchly. It was unfinished when his colleague Herman Goldstine circulated it with only von Neumann's name on it, to the consternation of Eckert and Mauchly. The paper was read by dozens of von Neumann's colleagues in America and Europe, and it influenced the next round of computer designs.

Von Neumann was therefore not alone in developing the idea of the stored-program architecture, and Jack Copeland considers it "historically inappropriate to refer to electronic stored-program digital computers as 'von Neumann machines'". His Los Alamos colleague Stan Frankel said of von Neumann's regard for Turing's ideas:

I know that in or about 1943 or '44 von Neumann was well aware of the fundamental importance of Turing's paper of 1936... Von Neumann introduced me to that paper and at his urging I studied it with care. Many people have acclaimed von Neumann as the "father of the computer" (in a modern sense of the term) but I am sure that he would never have made that mistake himself. He might well be called the midwife, perhaps, but he firmly emphasized to me, and to others I am sure, that the fundamental conception is owing to Turing, in so far as not anticipated by Babbage... Both Turing and von Neumann, of course, also made substantial contributions to the "reduction to practice" of these concepts but I would not regard these as comparable in importance with the introduction and explication of the concept of a computer able to store in its memory its program of activities and of modifying that program in the course of these activities.

Around the time the "First Draft" was distributed, Turing produced a detailed technical report, Proposed Electronic Calculator, describing in detail the engineering and programming of his idea for a machine he called the Automatic Computing Engine (ACE). He presented it to the British National Physical Laboratory on February 19, 1946. Although Turing knew from his wartime experience at Bletchley Park that his proposal was feasible, the secrecy surrounding the Colossus computers, which was maintained for decades, prevented him from saying so. Several successful implementations of the ACE design were produced.

Both von Neumann's and Turing's papers described stored-program computers, but von Neumann's earlier paper achieved greater circulation and impact, so the computer architecture it outlined became known as the "von Neumann architecture". In the 1953 publication Faster than Thought: A Symposium on Digital Computing Machines, a section in the chapter on Computers in America reads as follows:

The Machine of the Institute for Advanced Studies, Princeton

In 1945, Professor J. von Neumann, who was then working at the Moore School of Engineering in Philadelphia, where the ENIAC had been built, issued on behalf of a group of his co-workers a report on the logical design of digital computers. The report contained a fairly detailed proposal for the design of the machine that has since become known as the EDVAC (electronic discrete variable automatic computer). This machine has only recently been completed in America, but the von Neumann report inspired the construction of the EDSAC (electronic delay-storage automatic calculator) in Cambridge (see page 130).

In 1947, Burks, Goldstine and von Neumann published another report outlining the design of another type of machine (a parallel machine this time) that would be very fast, able to perform 20,000 operations per second. They pointed out that the outstanding problem in constructing such a machine was the development of a suitable memory whose entire contents were instantaneously accessible. At first they suggested using a special tube, called the Selectron, which had been invented by the Princeton laboratories of RCA. These tubes were expensive and difficult to make, so von Neumann decided to build a machine based on the Williams memory. This machine, completed in June 1952 in Princeton, has become known as MANIAC I. The design of this machine has inspired a dozen or more machines that are currently under construction in America.

In the same book, the first two paragraphs of a chapter on ACE read as follows:

Automatic Computation at the National Physical Laboratory

One of the most modern digital computers that embodies developments and improvements in the technique of automatic electronic computing has been demonstrated at the National Physical Laboratory, Teddington, where it has been designed and built by a small team of mathematicians and electronics research engineers on the staff of the Laboratory, assisted by a number of production engineers from the English Electric Company. The equipment so far erected at the Laboratory is only the pilot model of a much larger installation, which will be known as the Automatic Computing Engine, but although it is comparatively small in bulk and contains only 800 thermionic valves, it is an extremely rapid and versatile calculating machine.

The basic concepts and abstract principles of computation by a machine were formulated by Dr. A. M. Turing in a paper read before the London Mathematical Society in 1936, but work on such machines in Britain was delayed by the war. In 1945, an examination of the problems was made at the National Physical Laboratory by Professor J. R. Womersley. He was joined by Dr. Turing and a small team of specialists, and by 1947 the preliminary planning was sufficiently advanced to warrant the establishment of the special group already mentioned. In April 1948, the latter became the Electronics Section of the Laboratory, under the charge of Mr. F. M. Colebrook.

Formal definition

A computer has a von Neumann architecture when:

- Both programs and data are stored in a common memory. This makes it possible to perform the same operations on instructions as on data.

- Each memory cell of the machine is identified by a unique number, called its address.

- Different kinds of information (instructions and data) are used in different ways, but their type is not encoded in memory: a memory word is interpreted according to how it is used.

- Each program is executed sequentially, starting with the first instruction, unless a special instruction says otherwise. A control transfer (jump) instruction is used to change this sequence.
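
The principles above can be illustrated with a toy word format. In this sketch the 4-digit decimal encoding (first digit an opcode, last three digits an address), the opcode table, and the helper names are all hypothetical. Nothing in the memory list marks a word as instruction or data: the same value decodes as an instruction, can be changed with ordinary arithmetic, and the control unit visits cells in address order unless a JUMP transfers control.

```python
# Hypothetical word format: opcode digit followed by a 3-digit address.
OPCODES = {0: "HALT", 1: "LOAD", 2: "ADD", 3: "STORE", 4: "JUMP"}


def as_instruction(word):
    """Interpret a memory word as an (opcode name, address) pair."""
    op, addr = divmod(word, 1000)
    return OPCODES.get(op, "???"), addr


def execution_order(memory, start=0, limit=20):
    """Return the sequence of addresses the control unit would visit (control flow only)."""
    order, pc = [], start
    while len(order) < limit:
        order.append(pc)
        name, addr = as_instruction(memory[pc])
        if name == "HALT":
            break
        pc = addr if name == "JUMP" else pc + 1  # transfer of control, or simply the next cell
    return order


# Each list index is the cell's address. Cell 6 holds 1006 as a data value.
memory = [1006, 4003, 2007, 0, 0, 0, 1006, 42]

print(as_instruction(memory[0]))      # ('LOAD', 6)
print(as_instruction(memory[0] + 1))  # ('LOAD', 7): arithmetic on an instruction word
print(execution_order(memory))        # [0, 1, 3]: the JUMP at address 1 skips address 2
```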

Classical structure of von Neumann machines

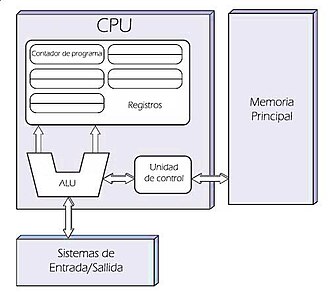

A von Neumann machine, like virtually all modern general-purpose computers, consists of four main components:

- Operation device (DO), which executes instructions from a defined set, called the instruction set (system of instructions), on portions of information held in a memory separate from the operating device (although in modern architectures the operating device has additional memory of its own, usually a register bank, in which operands are held directly during the computation for a relatively short time).

- Control unit (UC), which organizes the sequential execution of the instructions coming from memory by decoding them, responds to emergency situations, and performs general control functions for all of the computer's nodes. The DO and the UC usually form a structure called the CPU. Note that the requirement of sequential execution, in the order of the instructions in memory (the order in which the address in the program counter changes), is fundamental to instruction execution. An architecture that does not adhere to this principle is generally not considered von Neumann.

- Memory device, a set of cells with unique identifiers (addresses) containing instructions and data.

- I/O device (DES), which allows the computer to communicate with the outside world, that is, with other devices that receive its results and transmit information to it for processing.

First computers based on von Neumann architecture

The First Draft described a design that was used by many universities and companies to build their computers. Among these, only ILLIAC and ORDVAC had compatible instruction sets.

- Small-Scale Experimental Machine (SSEM), nicknamed "Baby" (University of Manchester, England), first successfully ran a stored program on June 21, 1948.

- EDSAC (University of Cambridge, England) was the first practical stored-program electronic computer (May 1949)

- Manchester Mark I (University of Manchester, England), developed from the SSEM (June 1949)

- CSIRAC (Council for Scientific and Industrial Research) Australia (November 1949)

- EDVAC (Ballistic Research Laboratory, Computing Laboratory at Aberdeen Proving Ground, 1951)

- ORDVAC (University of Illinois) at Aberdeen Proving Ground, Maryland (completed in November 1951)

- IAS machine at Princeton University (January 1952)

- MANIAC I at Los Alamos Scientific Laboratory (March 1952)

- ILLIAC at the University of Illinois (September 1952)

- AVIDAC at Argonne National Laboratory (1953)

- ORACLE at Oak Ridge National Laboratory (June 1953)

- JOHNNIAC at RAND Corporation (January 1954)

- BESK in Stockholm (1953)

- BESM-1 in Moscow (1952)

- DASK in Denmark (1955)

- PERM in Munich (1956?)

- SILLIAC in Sydney (1956)

- WEIZAC in Rehovot (1955)

Early stored-program computers

The date information in the following chronology is difficult to put in the correct order. Some dates are from the first run of a test program, some dates are from the first time the equipment was demonstrated or completed, and some dates are from the first delivery or installation.

- The IBM SSEC had the ability to treat instructions as data, and was publicly demonstrated on 27 January 1948. This ability was claimed in a US patent. However, it was partially electromechanical rather than fully electronic. In practice, instructions were read from paper tape because of its limited memory.

- The Manchester SSEM (the Baby) was the first fully electronic computer to run a stored program. It ran a factoring program for 52 minutes on 21 June 1948, after running a simple division program and a program to show that two numbers were relatively prime.

- The ENIAC was modified to run as a primitive read-only stored-program computer (using the function tables as program ROM) and was demonstrated as such on September 16, 1948, running a program by Adele Goldstine for von Neumann.

- BINAC ran some test programs in February, March and April 1949, although it was not completed until September 1949.

- The Manchester Mark I was developed from the SSEM project. An intermediate version of the Mark I was available to run programs in April 1949, but the machine was not completed until October 1949.

- The EDSAC ran its first program on 6 May 1949.

- The EDVAC was presented in August 1949, but had problems that kept it out of regular operation until 1951.

- CSIR Mark I ran its first program in November 1949.

- SEAC was demonstrated in April 1950.

- The Pilot ACE ran its first program on May 10, 1950 and was demonstrated in December 1950.

- The SWAC was completed in July 1950.

- The Whirlwind computer was completed in December 1950 and was in actual use in April 1951.

- ERA 1101 (later the commercial ERA 1101/UNIVAC 1101) was installed in December 1950.

Evolution

Throughout the 1960s and 1970s, computers generally became both smaller and faster, which led to some evolutions in their architecture. For example, memory-mapped I/O lets input and output devices be treated the same as memory. A single system bus could be used to provide a modular system at lower cost. This is sometimes called a "streamlining" of the architecture. In subsequent decades, simple microcontrollers would sometimes omit features of the model to lower cost and size, while larger computers added features for higher performance.
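
Memory-mapped I/O can be sketched as address decoding on a single bus: the CPU issues the same read and write operations for everything, and the bus routes each address either to RAM or to a device. The `Bus` class, the boundary address `0xFF00`, and the character-output device are hypothetical choices for illustration; this toy RAM only covers addresses below 256.

```python
class Bus:
    """One address space shared by RAM and a memory-mapped output device (hypothetical layout)."""

    IO_BASE = 0xFF00  # assumption: addresses at or above this belong to the device

    def __init__(self, ram_size=256):
        self.ram = [0] * ram_size
        self.output = []  # stands in for a character display

    def read(self, addr):
        if addr >= self.IO_BASE:
            return 0  # this toy device has no readable registers
        return self.ram[addr]

    def write(self, addr, value):
        if addr >= self.IO_BASE:
            self.output.append(chr(value))  # a store to this address becomes an output operation
        else:
            self.ram[addr] = value


bus = Bus()
bus.write(0x10, 72)                       # ordinary memory store
bus.write(Bus.IO_BASE, bus.read(0x10))    # the same kind of store, aimed at the device
bus.write(Bus.IO_BASE, 105)
print("".join(bus.output))                # Hi
```

Because the processor needs no separate I/O instructions, one bus and one set of load/store operations serve both memory and peripherals, which is the cost saving the paragraph describes.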

Von Neumann bottleneck

The shared bus between the CPU and memory leads to the von Neumann bottleneck: the limited throughput (data transfer rate) between the CPU and memory compared to the amount of memory. In most modern computers, the transfer rate between memory and the CPU is lower than the rate at which the CPU can work. This reduces processor performance and severely limits the effective processing speed, especially when large amounts of data must be processed, because the CPU is continually forced to wait for needed data to move to or from memory. Since CPU speed and memory size have increased much faster than the throughput between them, the bottleneck has become more of a problem, and it grows more severe with every new generation of CPUs.
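
A back-of-the-envelope model makes the bottleneck concrete. The sketch assumes, hypothetically, that every instruction needs one bus transfer to be fetched, that some instructions also make one data access, and that a bus carries one transfer per cycle. With a single shared bus the two kinds of transfer must take turns; with separate instruction and data buses, as in a Harvard design, they can overlap. The function name `bus_cycles` and the workload are illustrative assumptions.

```python
def bus_cycles(program, shared_bus=True):
    """Count bus cycles for a list of instructions.

    Each item is the number of data-memory accesses that instruction makes.
    Assumptions: every instruction also needs one fetch, and each bus moves
    one word per cycle.
    """
    cycles = 0
    for data_accesses in program:
        if shared_bus:
            cycles += 1 + data_accesses      # fetch and data transfers queue on the same bus
        else:
            cycles += max(1, data_accesses)  # separate buses: the fetch overlaps the data access
    return cycles


# For example LOAD, ADD, a register-only operation, STORE, and so on.
workload = [1, 1, 0, 1, 1, 0, 1, 1]
print(bus_cycles(workload))                    # 14
print(bus_cycles(workload, shared_bus=False))  # 8
```

Under these assumptions the shared bus needs 14 cycles for eight instructions against 8 with split buses. Real processors hide much of this gap with caches and pipelining, so the numbers illustrate the mechanism rather than any measured machine.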

The term “von Neumann bottleneck” was coined by John Backus in his 1977 ACM Turing Award Lecture. According to Backus:

"Surely there must be a less primitive way of making big changes in the store than by pushing vast numbers of words back and forth through the von Neumann bottleneck. Not only is this tube a literal bottleneck for the data traffic of a problem, but, more importantly, it is an intellectual bottleneck that has kept us tied to word-at-a-time thinking instead of encouraging us to think in terms of the larger conceptual units of the task at hand. Thus programming is basically planning and detailing the enormous traffic of words through the von Neumann bottleneck, and much of that traffic concerns not significant data itself, but where to find it."

The performance problem can be alleviated, to some extent, by several mechanisms. Providing a cache between the CPU and main memory, providing separate caches or separate access paths for data and instructions (the so-called modified Harvard architecture), using branch predictor algorithms and logic, and providing a limited CPU stack or other on-chip scratchpad memory to reduce memory accesses are four of the ways performance can be increased. The problem can also be sidestepped to some extent by using parallel computing, for example with a non-uniform memory access (NUMA) architecture, an approach commonly employed by supercomputers. It is less clear whether the intellectual bottleneck that Backus criticized has changed much since 1977. The solution Backus proposed has not had a major influence.[citation needed] Modern functional and object-oriented programming are much less geared toward "pushing vast numbers of words back and forth" than earlier languages like Fortran were, but internally, that is still what computers spend much of their time doing, even highly parallel supercomputers.
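
The first mitigation listed, a cache, can be illustrated with a small direct-mapped cache simulation. The function `count_memory_reads`, the line size, and the access trace are hypothetical choices for illustration. The sketch counts only how many reads still have to cross the CPU-memory bus, assuming a miss fetches a whole line as one memory transaction.

```python
def count_memory_reads(addresses, cache_lines=None, line_size=4):
    """Return how many reads reach main memory for a sequence of word addresses.

    cache_lines=None models a machine with no cache, where every access goes to memory.
    Otherwise a direct-mapped cache with `cache_lines` lines of `line_size` words is assumed.
    """
    if cache_lines is None:
        return len(addresses)
    tags = [None] * cache_lines  # which memory block each cache line currently holds
    misses = 0
    for addr in addresses:
        block = addr // line_size   # memory block containing this word
        index = block % cache_lines # the only cache line this block may occupy
        if tags[index] != block:    # miss: fetch the block from main memory
            tags[index] = block
            misses += 1
    return misses


# A loop that reads a 64-word array twice.
trace = list(range(64)) * 2
print(count_memory_reads(trace))                  # 128: no cache
print(count_memory_reads(trace, cache_lines=8))   # 32: the array does not fit, second pass misses again
print(count_memory_reads(trace, cache_lines=16))  # 16: the array fits, second pass hits
```

The gain depends on locality: sequential access benefits from whole-line fetches, and reuse benefits only when the working set fits in the cache.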

In 1996, a database benchmark study found that three out of four CPU cycles were spent waiting for memory. The researchers expect that increasing the number of simultaneous instruction streams with multithreading or single-chip multiprocessing will make this bottleneck even worse.

Non-von Neumann processors

The National Semiconductor COP8 was introduced in 1986; it has a modified Harvard architecture.

Perhaps the most common type of non-von Neumann structure used in modern computers is content-addressable memory (CAM).
