Turing test

The Turing test is an examination of a machine's ability to exhibit intelligent behavior similar to, or indistinguishable from, that of a human being. Alan Turing proposed that a human evaluator judge natural language conversations between a human and a machine designed to generate human-like responses. The evaluator would know that one of the participants in the conversation is a machine, and the participants would be separated from each other. The conversation would be limited to a purely textual medium such as a computer keyboard and a monitor, so the ability of the machine to render text as speech would be irrelevant. If the evaluator cannot reliably tell the machine from the human (Turing originally suggested that an average evaluator would have no more than a 70% chance of making the correct identification after 5 minutes of conversation), the machine is said to have passed the test. The test does not evaluate the machine's knowledge in terms of its ability to answer questions correctly; it only takes into account its ability to generate answers similar to those that a human would give.

Turing proposed this test in his 1950 essay "Computing Machinery and Intelligence" while working at the University of Manchester (Turing, 1950; p. 460). He begins with the words: "I propose to consider the question, 'Can machines think?'" Since the word "think" is difficult to define, Turing decides to "replace the question by another, which is closely related to it and is expressed in relatively unambiguous words." Turing's new question is: "Are there imaginable digital computers which would do well in the imitation game?" Turing believed that this question could be answered, and in the remainder of his essay he argues against the main objections to the idea that "machines can think".

Since Turing introduced it in 1950, the test has been both highly influential and widely criticized, and it has become an important concept in the philosophy of artificial intelligence.

History

Philosophical context

The question of whether machines can think has a long history, divided between the materialist and dualist perspectives of the mind. René Descartes had ideas similar to the Turing test in his Discourse on the Method (1637), where he wrote that automata are capable of reacting to human interactions but argued that such automata lack the ability to respond adequately to what is said in their presence in the way that a human could. Descartes therefore prefigures the Turing test by identifying the insufficiency of appropriate linguistic response as that which separates the human from the automaton. He does not go so far as to consider that a future automaton might produce an appropriate linguistic response, and therefore does not propose the Turing test as such, although he had already reasoned out its criteria and conceptual framework.

[H]ow many different automata or moving machines can be made by the industry of man [...] For we can easily understand a machine's being constituted so that it can utter words, and even emit some responses to action on it of a corporeal kind, which brings about a change in its organs; for instance, if touched in a particular part it may ask what we wish to say to it; if in another part it may exclaim that it is being hurt, and so on. But it never happens that it arranges its speech in various ways, in order to reply appropriately to everything that may be said in its presence, as even the lowest type of man can do.

In his Pensées philosophiques, Denis Diderot formulates a criterion resembling that of the Turing test:

"If you find a parrot that can respond to everything, it would be considered intelligent without a doubt."

This does not mean that he agrees but it shows that it was already a common argument used by materialists at the time.

According to dualism, the mind does not have a physical state (or at least has non-physical properties) and therefore cannot be explained in strictly physical terms. According to materialism, the mind can be explained physically, which opens the possibility for the creation of artificial minds.

In 1936, the philosopher Alfred Ayer considered the standard philosophical question of other minds: how do we know that other people have the same conscious experiences that we do? In his book Language, Truth and Logic, Ayer proposed a method to distinguish between a conscious man and an unconscious machine: "The only ground I can have for asserting that an object which appears to be conscious is not really a conscious being, but only a dummy or a machine, is that it fails to satisfy one of the empirical tests by which the presence or absence of consciousness is determined." In other words, something is not conscious if it fails a consciousness test. The proposal is very similar to the Turing test, but it focuses on consciousness rather than intelligence. It is not known whether Turing was familiar with Ayer's philosophical classic.

Alan Turing

"Machine intelligence" had been a topic pursued by researchers in the United Kingdom for up to ten years before the field of artificial intelligence (AI) research was founded in 1956. It was commonly discussed by members of the Ratio Club, an informal group of British cybernetics and electronics researchers that included Alan Turing.

Turing, in particular, had been working with the concept of machine intelligence since at least 1941, and one of the earliest known mentions of "computer intelligence" was made by him in 1947. In his report "Intelligent Machinery", he investigated "the question of whether or not it is possible for machinery to show intelligent behaviour" and, as part of that investigation, proposed what can be considered a predecessor of his later tests:

It is not difficult to devise a paper machine which will play a not very bad game of chess. Now get three men A, B and C as subjects for the experiment. A and C are to be rather poor chess players, B is the operator who works the paper machine. ... Two rooms are used with some arrangement for communicating moves, and a game is played between C and either A or the paper machine. C may find it quite difficult to tell which he is playing.

The first published text written by Turing and focused entirely on machine intelligence was "Computing Machinery and Intelligence". Turing begins this text by saying "I propose to consider the question, 'Can machines think?'" Turing notes that the traditional approach would be to start with definitions of the terms "machine" and "think"; he chooses to set this aside and instead replaces the question with a new one, "which is closely related to it and is expressed in relatively unambiguous words". In essence, he proposes changing the question from "Can machines think?" to "Can machines do what we (as thinking entities) can do?" The advantage of the new question is that it "draws a fairly sharp line between the physical and intellectual capacities of a man."

To demonstrate this approach, Turing proposes a test inspired by a party game known as the "Imitation Game", in which a man and a woman go into separate rooms and the other players try to tell them apart by writing a series of questions and reading the typewritten answers sent back. The objective of the game is for each participant in the rooms to convince the other players that they are the other person. (Huma Shah argues that Turing includes the explanation of this game to introduce the reader to the question-and-answer test between human and machine.) Turing describes his version of the game as follows:

We now ask the question, "What will happen when a machine takes the part of A in this game?" Will the interrogator decide wrongly as often when the game is played like this as he does when the game is played between a man and a woman? These questions replace our original, "Can machines think?"

Later in the text, a similar version is proposed in which a judge converses with only a computer and a man. Although neither of these versions matches the test as it is generally known today, Turing proposed a third, which he discussed in a BBC radio broadcast: a jury asks questions of a computer, and the computer's goal is to make a significant proportion of the jury believe that it is human.

Turing's text considered nine putative objections, which include all the major arguments against artificial intelligence that have been raised in the years since its publication (see "Computing Machinery and Intelligence").

ELIZA and PARRY

In 1966, Joseph Weizenbaum created a program that appeared to pass the Turing test. The program, known as ELIZA, worked by scanning the words typed by the user for keywords. If a keyword was found, a rule that transformed the user's comment was applied and the resulting sentence was returned. If no keyword was found, ELIZA would either give a generic answer or repeat one of the previous comments. In addition, Weizenbaum designed ELIZA to replicate the behavior of a Rogerian psychotherapist, which allowed ELIZA to "take on the role of someone who knows nothing of the real world." The program was able to fool some people into thinking they were talking to a real person, and some subjects were even "very difficult to convince that ELIZA was not human." As a result, ELIZA is hailed as one of the programs (probably the first) to pass the Turing test, although this is highly controversial (see below).
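The keyword-and-rule mechanism described above can be illustrated with a minimal sketch. It is not Weizenbaum's program: the rules, the reply templates, the names `RULES`, `GENERIC_REPLY` and `respond`, and the fallback policy (repeat the most recent earlier comment if there is one, otherwise give a generic answer) are all assumptions made for illustration.

```python
import re

# Hypothetical keyword rules, invented for illustration only;
# they are not taken from Weizenbaum's original ELIZA script.
RULES = [
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bmother\b", re.IGNORECASE), "Tell me more about your family."),
]
GENERIC_REPLY = "Please go on."


def respond(comment, history):
    """Return a reply to `comment`; `history` holds the user's earlier comments."""
    cleaned = comment.strip().rstrip(".!?")
    reply = None
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            # Keyword found: transform the user's comment with the rule.
            reply = template.format(*match.groups())
            break
    if reply is None:
        # No keyword: repeat an earlier comment if there is one,
        # otherwise fall back to a generic answer (assumed policy).
        reply = f'Earlier you said: "{history[-1]}"' if history else GENERIC_REPLY
    history.append(cleaned)
    return reply


if __name__ == "__main__":
    history = []
    for line in ["I am sad.", "The weather is nice", "I feel tired"]:
        print(respond(line, history))
```

Nothing in the sketch models meaning: every reply is produced by pattern matching and string substitution, which is the feature of ELIZA that later critics of the test point to.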

PARRY was created by Kenneth Colby in 1972. It was a program described as "ELIZA with character". It attempted to simulate the behavior of a paranoid schizophrenic using an approach similar to, though probably more advanced than, Weizenbaum's. To validate the work, PARRY was tested using a variation of the Turing test. A group of experienced psychiatrists analyzed a mix of real patients and computers running the PARRY program through teletypes. Another group of 33 psychiatrists were shown transcripts of the conversations. Both groups were asked to indicate which patients were human and which were computers. The psychiatrists were able to answer correctly only 48% of the time, a value consistent with random guessing.

Into the 21st century, versions of these programs (called “chatbots”) continued to fool people. "CyberLover", a malware program, lured users by convincing them to "disclose information about their identities or enter a website that would introduce malware to their computers". The program emerged as a "Valentine's Day risk", flirting with people "seeking relationships online to gather personal information."

The Chinese Room

The 1980 text "Minds, Brains, and Programs" by John Searle proposed the "Chinese room" thought experiment and argued that the Turing test could not be used to determine whether a machine could think. Searle observed that software (such as ELIZA) could pass the Turing test simply by manipulating symbols that it did not understand. Without understanding, such programs cannot really be described as "thinking" in the way humans do; Searle therefore concluded that the Turing test cannot prove that a machine can think. Like the Turing test itself, Searle's argument has been both widely criticized and widely supported.

Arguments like Searle's in the philosophy of mind sparked a more intense debate about the nature of intelligence, the possibility of intelligent machines, and the value of the Turing test that continued through the 1980s and 1990s. The physicalist philosopher William Lycan acknowledged the advances in artificial intelligence, with machines beginning to behave as if they had minds. Lycan uses the thought experiment of a humanoid robot named Harry who can converse, play golf, play the viola and write poetry, and who thus manages to pass among people as a person with a mind. If Harry were human, it would be perfectly natural to think that he has thoughts or feelings, which suggests that Harry may actually have thoughts or feelings even though he is a robot. For Lycan, "there is no problem with or objection to qualitative experience in machines that is not equally a dilemma for such experience in humans" (see Problem of other minds).

Loebner Prize

The Loebner Prize provides an annual platform for practical Turing tests, with the first competition held in November 1991. The prize was created by Hugh Loebner; the Cambridge Center for Behavioral Studies in Massachusetts, United States, organized the awards up to and including 2003. Loebner said that one reason the competition was created was to advance the state of AI research, at least in part because no attempt had been made to implement the Turing test after 40 years of discussion.

The first Loebner Prize competition, in 1991, led to renewed discussion in the press and in academia about the feasibility of the Turing test and the value of continuing to pursue it. The first competition was won by a mindless program with no identifiable intelligence that managed to fool naive interrogators. This exposed many of the shortcomings of the Turing test (discussed below): the program won because it was able to "imitate human typing errors"; "unsophisticated interrogators were easily fooled"; and some AI researchers felt that the test is a distraction from more fruitful investigations.

The silver (text only) and gold (audio and visual) awards have never been won. However, the competition has awarded the bronze medal each year to the computer system that, in the judges' opinion, demonstrates the "most human" conversational behavior among that year's entries. A.L.I.C.E. (Artificial Linguistic Internet Computer Entity) has won the bronze award three times in recent years (2000, 2001 and 2004). The learning AI Jabberwacky won in 2005 and again in 2006.

The Loebner Prize evaluates conversational intelligence; the winners are typically chatbots. The rules of the early competitions restricted the conversations: each participating program and each hidden human conversed on a single topic, and the interrogators were limited to a single question per interaction with the entity. The restriction on conversations was lifted for the 1995 competition. The length of the interaction between the evaluator and the entity has varied between instances of the Loebner Prize. At the 2003 Loebner Prize at the University of Surrey, each interrogator was allowed to interact with each entity, whether human or machine, for 5 minutes. Between 2004 and 2007, the allowed interaction was more than 20 minutes. In 2008, the allowed interaction was 5 minutes per pair, because the organizer, Kevin Warwick, and the coordinator, Huma Shah, considered that this should be the duration of any test, as Turing put it in his 1950 text: "... making the right identification after five minutes of questioning." They felt that the lengthy tests previously implemented were inappropriate for the state of artificial conversational technologies. Ironically, the 2008 competition was won by Elbot of Artificial Solutions, which did not simulate a human personality but that of a robot, and still managed to convince three human judges that it was a human acting as a robot.

During the 2009 competition, held in Brighton, UK, the allowed interaction was 10 minutes per round: 5 minutes to talk to the machine and 5 minutes to talk to the human. It was implemented this way to test the alternative reading of Turing's prediction, namely that the 5-minute interaction should be with the computer. For the 2010 competition, the sponsor increased the interrogator-system interaction time to 25 minutes.

Competition at the University of Reading 2014

A Turing test competition organized by Kevin Warwick and Huma Shah for the 60th anniversary of Turing's death was held on June 7, 2014 at the Royal Society in London. It was won by the Russian chatbot Eugene Goostman. Over a series of 5-minute conversations, the bot managed to convince 33% of the contest judges that it was human. The judges included John Sharkey, a sponsor of the Turing pardon bill, AI professor Aaron Sloman and Red Dwarf actor Robert Llewellyn.

The organizers of the competition believed that the test had been "passed for the first time" at the event, saying: "Some will say that the test has already been passed. The words 'Turing Test' have been applied to similar competitions around the world. However, this event involved more simultaneous comparison tests than ever before, was independently verified and, crucially, the conversations were unrestricted. A true Turing test does not set the questions or topics of the conversations in advance."

The competition has faced criticism. First, only a third of the judges were fooled by the computer. Second, the computer's persona was that of a 13-year-old Ukrainian boy who had learned English as a second language. The award required 30% of the judges to be fooled, which is consistent with Turing's text "Computing Machinery and Intelligence". Joshua Tenenbaum, an expert in mathematical psychology at MIT, called the result unimpressive.
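The link between the 30% requirement and Turing's figure of a 70% chance of correct identification, quoted elsewhere in this article, is simple arithmetic. The sketch below spells it out under one stated assumption: every judge either identifies the machine correctly or is fooled, with no abstentions. The function names are hypothetical, and integer percentages are used to avoid floating-point rounding at the boundary.

```python
def fooled_threshold_percent(max_correct_percent=70):
    """Minimum share of judges that must be fooled, in whole percent.

    Assumes each judge is either correct or fooled, so the two shares sum to 100.
    """
    return 100 - max_correct_percent


def meets_threshold(fooled_percent, max_correct_percent=70):
    """True if the share of fooled judges reaches the threshold."""
    return fooled_percent >= fooled_threshold_percent(max_correct_percent)


if __name__ == "__main__":
    print(fooled_threshold_percent())  # threshold implied by the 70% figure
    print(meets_threshold(33))         # the 33% reported for the 2014 event
```

Under this reading the 33% result clears the bar only narrowly, which is the substance of the first criticism.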

Versions of the Turing test

Saul Traiger argues that there are at least three primary versions of the Turing test, two of which are proposed in "Computing Machinery and Intelligence" and one that he describes as the "standard interpretation". Although there is controversy as to whether this "standard interpretation" was described by Turing or is based on a misreading of his text, the three versions are not regarded as equivalent, and their strengths and weaknesses differ.

Huma Shah points out that Turing himself was concerned with whether a machine could think and was providing a simple method of examining this through question-and-answer sessions between human and machine. Shah argues that there is one imitation game, which Turing described as implementable in two different ways: a) a one-on-one test between the interrogator and the machine, or b) a simultaneous comparison between a human and a machine interrogated in parallel by the same interrogator. Because the Turing test assesses indistinguishability in performance ability, the verbal version naturally generalizes to all human ability, verbal and nonverbal (robotic).

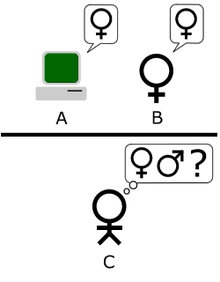

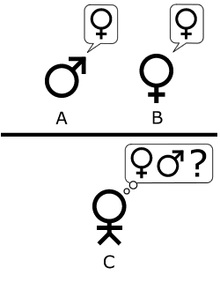

Imitation game

The original game described by Turing was a party game involving three players. Player A is a man, Player B is a woman, and Player C (who has the role of interrogator) may be of either gender. In the game, Player C cannot see either of the other players and can communicate with them only through written notes. By asking the players questions, Player C tries to determine which of the two is the man and which is the woman. Player A tries to trick the interrogator into choosing wrongly, while Player B tries to help the interrogator choose correctly.

Sterrett refers to this game as the "Original Imitation Game Test". Turing proposed that the role of player A be filled by a computer, so that the computer would have to pretend to be a woman and try to guide the questioner to the incorrect answer. The success of the computer would be determined by comparing the outcome of the game when player A is the computer with the outcome of the game when player A is a man. Turing stated that if "the interrogator decide[s] wrongly as often when the game is played [with the computer] as he does when the game is played between a man and a woman", it can be argued that the computer is intelligent.
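The frequency comparison in this version can be sketched as follows. The outcome lists are invented sample data, not experimental results, and reading "as often" as "at least as often" is an assumption of this sketch; the function names `wrong_rate` and `machine_does_as_well` are likewise hypothetical.

```python
def wrong_rate(wrong_verdicts):
    """Fraction of games in which the interrogator decided wrongly.

    `wrong_verdicts` is a list of booleans, one per game (True = wrong verdict).
    """
    if not wrong_verdicts:
        raise ValueError("at least one game is required")
    return sum(wrong_verdicts) / len(wrong_verdicts)


def machine_does_as_well(man_as_a, machine_as_a):
    """Compare the two conditions: is the interrogator wrong at least as often
    when the machine plays A as when a man plays A?"""
    return wrong_rate(machine_as_a) >= wrong_rate(man_as_a)


if __name__ == "__main__":
    # Invented outcomes for illustration only.
    man_as_a = [True, False, False, True]        # wrong in 2 of 4 games
    machine_as_a = [True, False, True, False]    # wrong in 2 of 4 games
    print(machine_does_as_well(man_as_a, machine_as_a))
```

The criterion is a comparison of rates across many games rather than the result of any single round, which is why the sketch takes lists of outcomes for both conditions.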

The second version appeared later in Turing's 1950 text. Similar to the Original Imitation Game Test, the role of player A would be performed by a computer. However, the role of player B would be performed by a man and not a woman.

"Let us fix our attention on one particular digital computer C. Is it true that by modifying this computer to have an adequate storage, suitably increasing its speed of action, and providing it with an appropriate programme, C can be made to play satisfactorily the part of A in the imitation game, the part of B being taken by a man?"

In this version both player A (the computer) and player B will try to guide the questioner to the wrong answer.

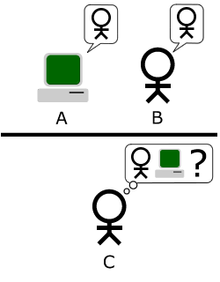

Standard interpretation

The common understanding is that the purpose of the Turing test is not specifically to determine whether a computer can fool an interrogator into believing that it is human, but rather whether a computer can imitate a human. Although there is some dispute as to whether Turing intended this interpretation, Sterrett believes that he did and therefore conflates the second version with it, while others, such as Traiger, do not. It has nevertheless come to be regarded as the "standard interpretation". In this version, player A is a computer and the gender of player B is irrelevant. The interrogator's goal is not to determine which of them is male and which is female, but which is the computer and which is the human. The fundamental problem with the standard interpretation is that the interrogator cannot differentiate which respondent is human and which is machine. There are also issues with duration, but the standard interpretation generally treats this limitation as something that should be reasonable.

Imitation game vs. standard Turing test

There has been controversy over which of the alternative formulations Turing intended. Sterrett argues that two distinct tests can be extracted from Turing's 1950 text and that, contrary to Turing's own remark, they are not equivalent. The test that uses the party game and compares frequencies of success is referred to as the "Original Imitation Game Test", while the test in which a human judge converses with a human and a machine is referred to as the "Standard Turing Test"; note that Sterrett equates this with the "standard interpretation" rather than treating it as the second version of the imitation game. Sterrett agrees that the Standard Turing Test (STT) has the problems its critics cite, but feels that, in contrast, the Original Imitation Game Test (OIG Test) is immune to many of them because of one crucial difference: unlike the STT, it does not make similarity to human performance the criterion, although it does use human performance in setting a criterion for machine intelligence. A human can fail the OIG Test, but it is argued that this is a virtue in a test of intelligence, since failure indicates a lack of resourcefulness. The OIG Test requires the resourcefulness associated with intelligence and not just "simulation of human conversational behavior." The general structure of the OIG Test can also be used with non-verbal versions of imitation games.

Other writers continue to interpret Turing's proposal as the imitation game itself, without specifying how to take into account Turing's statement that the test he proposed using the party version of the imitation game is based on a criterion of comparative frequency of success in the game, rather than on the ability to win a single round.

Saygin suggested that the original game is probably a way of proposing a less biased experimental design, since it hides the involvement of the computer. The imitation game includes a "social trick" not found in the standard interpretation, since in the game both the computer and the man are required to pretend to be someone they are not.

Should the interrogator know about the computer?

A vital piece of any laboratory test is the existence of a control. Turing never makes it clear whether the questioner in his tests is aware that one of the participants is a computer. However, if there were a machine that had the potential to pass the Turing test, it would be best to assume that a double-blind check is necessary.

Returning to the Original Imitation Game, Turing states only that player A is to be replaced with a machine, not that player C is to be made aware of this change. When Colby, FD Hilf, S Weber, and AD Kramer tested PARRY, they did so by assuming that the interrogators did not need to know that one or more of the interviewees was a computer. As Ayse Saygin, Peter Swirski and others have highlighted, this makes a big difference to the implementation and outcome of the test. In an experimental study examining violations of Grice's conversational maxims, using transcripts of the one-to-one (interrogator and hidden interlocutor) Loebner Prize contests between 1994 and 1999, Ayse Saygin observed significant differences between the responses of participants who knew computers were involved and those who did not.

Huma Shah and Kevin Warwick, who organized the 2008 Loebner Prize at the University of Reading, which hosted simultaneous comparison tests (one judge and two hidden interlocutors), showed that knowing or not knowing about the interlocutors did not make a significant difference to some judges' determinations. The judges were not explicitly told the nature of the pairs of hidden interlocutors they were going to question. They were able to differentiate between human and machine, including when they faced control pairs of two machines or two humans embedded among the machine-human pairs. Spelling errors gave away the hidden humans; machines were identified by their speed of response and lengthier utterances.

Strengths of the test

Tractability and simplicity

The power and appeal of the Turing test derive from its simplicity. Philosophy of mind, psychology, and modern neuroscience have been unable to provide definitions of "intelligence" and "thinking" that are precise and general enough to apply to machines. Without these definitions, the central questions of the philosophy of artificial intelligence cannot be answered. The Turing test, while imperfect, at least provides something that can be measured, and as such it is a pragmatic solution to a difficult philosophical question.

Variety of topics

The format of the test allows the interrogator to give the machine a wide variety of intellectual tasks. Turing wrote that "the question and answer method seems to be suitable for introducing almost any one of the fields of human endeavour that we wish to include." John Haugeland added: "understanding the words is not enough; you have to understand the topic as well."

To pass a properly designed Turing test, the machine must use natural language, reason, have knowledge, and learn. The test can be extended to include video input, along with a "hatch" through which objects can be passed; this would force the machine to demonstrate its vision and robotics abilities as well. Together these represent almost all the major problems that artificial intelligence research would like to solve.

The Feigenbaum test is designed to take advantage of the broad range of topics available to a Turing test. It is a limited form of Turing's question-and-answer game which pits the machine against the abilities of experts in specific fields such as literature and chemistry. IBM's Watson machine achieved success on Jeopardy!, a television quiz show that puts general knowledge questions to human and machine contestants alike and at the same time.

Emphasis on emotional and aesthetic intelligence

As a Cambridge honors graduate in mathematics, Turing might have been expected to propose a test of computational intelligence requiring expert knowledge of some highly technical field, thereby anticipating a more recent approach to the subject. Instead, the test he described in his 1950 text only requires that the computer be able to compete successfully in a common party game, that is, to perform as well as a typical man in answering a series of questions so as to appear to be the female participant.

Given the status of human sexual dimorphism as one of the most ancient of subjects, it is implicit in the above scenario that the questions asked will involve neither specialized factual knowledge nor information processing techniques. The challenge for the computer will instead be to exhibit empathy for the role of the woman as well as a characteristic aesthetic sensibility, both of which are on display in this excerpt of dialogue imagined by Turing:

- Interrogator: Will X please tell me the length of his or her hair?

- Contestant: My hair is shingled, and the longest strands are about nine inches long.

When Turing introduces some specialized knowledge to his imaginary dialogues, the subject is not mathematics or electronics but poetry:

- Interrogator: In the first line of your sonnet which reads, "Shall I compare thee to a summer's day," would not "a spring day" do as well or better?

- Witness: It wouldn't scan.

- Interrogator: How about "a winter's day"? That would scan all right.

- Witness: Yes, but nobody wants to be compared to a winter's day.

Turing thus again demonstrates his interest in empathy and aesthetic sensibility as components of artificial intelligence, and in light of growing concerns about AI running amok, it has been suggested that this focus may represent a critical insight on Turing's part, namely that emotional and aesthetic intelligence will play a key role in creating a "friendly AI". However, it has also been observed that whatever inspiration Turing can offer in this direction depends on the preservation of his original vision; that is, the promulgation of a "standard interpretation" of the Turing test (one that focuses only on discursive intelligence) must be regarded with caution.

Weaknesses of the test

Turing did not explicitly state that the Turing test could be used as a measure of intelligence, or of any other human quality. He wanted to provide a clear and understandable alternative to the word "think", which could then be used to respond to criticism of the possibility of "thinking machines" and to suggest ways in which research could be advanced. Nevertheless, the Turing test has been proposed as a measure of a machine's "ability to think" or "intelligence". This proposal has received criticism from philosophers and computer scientists. It assumes that an interrogator can determine whether a machine is "thinking" by comparing its behavior to that of a human. Each element of this assumption has been questioned: the reliability of the interrogator's judgment, the value of comparing behavior alone, and the value of comparing the machine to a human. Because of these assumptions and other considerations, some AI researchers question the relevance of the Turing test to the field.

Human intelligence vs. General Intelligence

The Turing test does not directly assess whether a computer is behaving intelligently, only whether it is behaving like a human. Since human behavior and intelligent behavior are not exactly the same, the test can err in precisely measuring intelligence in two ways:

Certain human behaviors are not intelligent

- The Turing test requires the machine to be able to exhibit all human behaviors, regardless of whether they are intelligent. It even tests for behaviors that we may not consider intelligent at all, among them susceptibility to insults, the temptation to lie or, simply, typing errors. If a machine fails to imitate these unintelligent behaviors, it fails the test. This objection was raised in an article in The Economist entitled "Artificial Stupidity", published in 1992, shortly after the first Loebner Prize competition. The article noted that the winner of the competition succeeded, in part, because of its ability to "imitate human typing errors." Turing himself had suggested that programs add errors to their output so as to be better "players" of the game.

Certain intelligent behaviors are inhuman

- The Turing test does not examine highly intelligent behaviors such as the ability to solve difficult problems or to come up with original ideas. In fact, it specifically requires deception on the part of the machine: if the machine is more intelligent than a human, it must deliberately avoid appearing "too" intelligent. If it were to solve a computational problem that is practically impossible for a human being, the interrogator would know that it is not human, and as a result the machine would fail the test.

- Since it cannot measure intelligence beyond that of humans, the test cannot be used to build or evaluate systems that are more intelligent than humans. This is why several alternative tests have been proposed to evaluate superintelligent systems.

Real intelligence vs. simulated intelligence

The Turing test is concerned strictly with how the subject behaves (that is, the external behavior of the machine). In this regard, it takes a behaviorist or functionalist approach to the study of intelligence. The example of ELIZA suggests that a machine passing the test could simulate human conversational behavior by following a simple (but long) list of mechanical rules, without thinking or having a mind at all.

John Searle has argued that external behavior cannot be used to determine whether a machine is "really" thinking or just "simulating thinking". His Chinese room argument is intended to show that, even if the Turing test is a good operational definition of intelligence, it does not indicate whether the machine has a mind, consciousness, or intentionality. (Intentionality is a philosophical term for the power of thoughts to be "about" something.)

Turing anticipated this criticism in his original text, writing:

I do not wish to give the impression that I think there is no mystery about consciousness. There is, for instance, something of a paradox connected with any attempt to localise it. But I do not think these mysteries necessarily need to be solved before we can answer the question with which we are concerned in this paper.

Interrogator naivety and the anthropomorphic fallacy

In practice, test results can easily be dominated not by the intelligence of the computer but by the attitudes, skill, or naivety of the interrogator.

Turing does not specify the skills and knowledge required of the interrogator in his description of the test, but he did use the term "average interrogator": "[the] average interrogator will not have more than 70 per cent chance of making the right identification after five minutes of questioning."
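Turing's criterion quoted above can be read as a simple threshold on the interrogators' success rate. The following is a minimal sketch under that reading; the function names and the list of verdicts are hypothetical, and treating the 70% figure as a hard pass mark is an interpretation, not a formal rule stated by Turing.

```python
from typing import Sequence


def identification_rate(verdicts: Sequence[bool]) -> float:
    """Fraction of sessions in which the interrogator identified the machine correctly.

    Each entry in `verdicts` (a hypothetical record of test sessions) is True
    when the interrogator made the right identification.
    """
    if not verdicts:
        raise ValueError("at least one session is required")
    return sum(verdicts) / len(verdicts)


def meets_threshold(verdicts: Sequence[bool], max_correct: float = 0.70) -> bool:
    """True when interrogators were right no more than `max_correct` of the time."""
    return identification_rate(verdicts) <= max_correct


# Illustrative data only: 10 five-minute sessions, 6 correct identifications.
sessions = [True] * 6 + [False] * 4
print(identification_rate(sessions))  # 0.6
print(meets_threshold(sessions))      # True
```

Under this reading, a 70% ceiling on correct identifications corresponds to the machine being mistaken for a human in at least 30% of sessions.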

Shah and Warwick (2009b) showed that even experts can be deceived, and that the interrogator's strategy ("power" vs. "solidarity") affects correct identification, with the latter being more successful.

Chatbots like ELIZA have repeatedly fooled people into believing they are communicating with humans. In these cases, the people involved were not even aware of the possibility that they were interacting with a computer. To pass as human in such circumstances, the machine needs no intelligence at all, only a superficial resemblance to human behavior.

Early Loebner Prize competitions used unsophisticated questioners who were easily fooled by machines. Since 2004, Loebner Prize organizers have deployed philosophers, computer scientists, and journalists among the questioners. However, some of these experts have been fooled by the machines.

Michael Shermer points out that human beings consistently regard non-human objects as human whenever given the opportunity to do so, an error called the "anthropomorphic fallacy": they talk to their cars, attribute desires and intentions to natural forces (e.g. "nature abhors a vacuum"), and worship the Sun as a human-like being with intelligence. If the Turing test were applied to religious objects, Shermer argues, then inanimate statues, rocks, and places would have consistently passed the test throughout history. This human tendency toward anthropomorphism effectively lowers the bar for the Turing test unless interrogators are trained to avoid it.

Errors in human identification

An interesting feature of the Turing test is the frequency with which interrogators mistake human participants for machines. It has been suggested that this is because interrogators look for the responses they expect from a human rather than for typical human responses. As a result, some individuals are incorrectly categorized as machines, which can work in favor of a competing machine.

Irrelevance and impracticability: Turing and AI research

Prominent AI researchers argue that trying to pass the Turing test is a distraction from more fruitful research. The test is not an active focus of academic research or commercial effort. As Stuart Russell and Peter Norvig wrote: "AI researchers have devoted little attention to passing the Turing test." There are several reasons for this.

First, there are easier ways to test a program. Most research in AI-related fields is devoted to more specific and modest goals such as automated planning, object recognition, or logistics. To test the intelligence of programs that perform these tasks, researchers simply give them the task directly. Russell and Norvig suggest an analogy with the history of flight: airplanes are tested on how well they fly, not by comparing them to birds. Aeronautical engineering texts, they write, "do not define the goal of their field as 'making machines that fly so exactly like pigeons that they can fool other pigeons.'"

Second, creating lifelike simulations of humans is a difficult problem that does not need to be solved to meet the basic goals of AI research. Believable human characters may be interesting in a work of art, a game, or a sophisticated user interface, but they are not part of the science of creating intelligent machines, that is, machines that solve problems using intelligence.

Turing wanted to provide a clear and understandable example to aid the discussion of the philosophy of artificial intelligence. John McCarthy observes that the philosophy of AI is "unlikely to have any more effect on the practice of AI research than philosophy of science generally has on the practice of science."

Variants of the Turing test

Numerous versions of the Turing test, including those mentioned above, have been debated over the years.

Reverse Turing test and CAPTCHA

A modification of the Turing test in which the roles or goals of machines and humans are swapped is called a reverse Turing test. An example is implied in the work of the psychoanalyst Wilfred Bion, who was particularly fascinated by the "storm" that results from the encounter of one mind with another. In his 2000 book, among other original ideas on the Turing test, Peter Swirski discusses in detail what he calls the Swirski test (essentially the reverse Turing test). He argues that it overcomes all the common objections to the standard version.

R.D. Hinshelwood developed this idea further by describing the mind as an "apparatus for recognizing minds". The challenge would be for the computer to determine whether it is interacting with a human or with another computer. This is an extension of the original question that Turing was trying to answer, and it probably sets a high enough standard for deciding whether a machine can "think" in a way that we would describe as human.

CAPTCHA is a form of reverse Turing test. Before being allowed to perform an action on a website, the user is presented with a series of alphanumeric characters in a distorted image and asked to type them into a text field. The purpose is to prevent automated systems from being used to abuse the site. The rationale is that software sophisticated enough to read and reproduce the distorted image accurately does not yet exist (or is not available to the average user), so any system capable of passing the test must be human.

Software capable of solving CAPTCHAs with some accuracy by analyzing patterns in the generating engine is being actively developed. Optical character recognition (OCR) is also under development as a workaround for the inaccessibility of CAPTCHA schemes to people with disabilities.

Subject matter expert Turing test

Another variation is the subject matter expert Turing test, in which a machine's answer cannot be distinguished from an answer given by an expert in the field in question. It is also known as the Feigenbaum test and was proposed by Edward Feigenbaum in a 2003 paper.

Total Turing test

The "total Turing test" is a variation that adds requirements to the traditional test. The interrogator also assesses the subject's perceptual abilities (requiring computer vision) and the subject's ability to manipulate objects (requiring robotics).

Minimum Intelligent Signal Test

The Minimum Intelligent Signal Test was proposed by Chris McKinstry as the "maximum abstraction of the Turing test", in which only binary responses (true/false or yes/no) are allowed, in order to focus solely on the capacity for thought. It eliminates problems of text conversation such as anthropomorphic bias, and it does not require the simulation of unintelligent human behaviors, which allows it to accommodate systems that exceed human intelligence. The questions must each stand on their own, however, which makes it more like an IQ test than an interrogation. It is typically used to collect statistical data against which the performance of AI programs can be measured.
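Because every answer is binary, scoring such a test reduces to counting agreements with a reference answer and comparing the result with chance. A minimal sketch, assuming a hypothetical list of (proposition, expected answer) pairs and a hypothetical `respond` callable; the z-score against coin-flipping is an illustrative statistic chosen here, not a detail taken from McKinstry's proposal.

```python
import math
from typing import Callable, Sequence, Tuple

Item = Tuple[str, bool]  # (proposition, expected yes/no answer)


def score_binary_test(
    items: Sequence[Item], respond: Callable[[str], bool]
) -> Tuple[float, float]:
    """Return (accuracy, z_score) for a responder on yes/no propositions.

    The z-score measures how far the number of correct answers lies from
    what random guessing (p = 0.5) would produce on average.
    """
    n = len(items)
    if n == 0:
        raise ValueError("at least one item is required")
    correct = sum(1 for proposition, expected in items if respond(proposition) == expected)
    accuracy = correct / n
    # Under random guessing: mean n/2, standard deviation sqrt(n)/2.
    z_score = (correct - n / 2) / (math.sqrt(n) / 2)
    return accuracy, z_score


# Hypothetical items and a trivial responder that always answers "yes".
items = [
    ("Is water wet?", True),
    ("Is the Sun a star?", True),
    ("Is ice hotter than steam?", False),
    ("Do birds have wings?", True),
]
accuracy, z = score_binary_test(items, lambda proposition: True)
print(accuracy, z)  # 0.75 1.0
```

With only four items a responder that always says "yes" scores well above 50%, which illustrates why a large number of independent items is needed before the statistic means anything.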

Hutter Prize

The organizers of the Hutter Prize believe that compressing natural language text is a hard problem for artificial intelligence, equivalent to passing the Turing test.

The data compression test has certain advantages over most versions of the Turing test including:

- It gives a single number that can be used directly to compare which of two machines is more intelligent.

- It doesn't require the computer to lie to the judge.

The main disadvantages of this test are:

- It is not possible to evaluate humans in this way.

- It is unknown what particular score on this test, if any, is equivalent to passing the Turing test.
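The "single number" in a compression test is the compressed size of a text. A minimal sketch of the idea, using Python's standard `zlib` module purely as a stand-in compressor; the sample string and function names are hypothetical, and a general-purpose compressor like this says nothing about intelligence by itself.

```python
import zlib


def compressed_size(text: str, level: int = 9) -> int:
    """Number of bytes after compressing the UTF-8 encoding of `text` with zlib."""
    return len(zlib.compress(text.encode("utf-8"), level))


def compression_ratio(text: str) -> float:
    """Compressed size divided by original size; lower means better compression."""
    raw = text.encode("utf-8")
    if not raw:
        raise ValueError("text must not be empty")
    return compressed_size(text) / len(raw)


# Hypothetical sample: highly repetitive text shrinks to a small fraction of its size.
repetitive = "the cat sat on the mat. " * 200
print(compression_ratio(repetitive) < 0.1)  # True
```

Two compressors, or two language models driving a compressor, can then be compared by running them on the same text and comparing the resulting sizes.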

Other tests based on compression or Kolmogorov complexity

A related approach, which appeared much earlier than the Hutter Prize in the late 1990s, is the inclusion of compression problems in an extended Turing test, or tests derived entirely from Kolmogorov complexity. Other related tests have been presented by Hernandez-Orallo and Dowe.

Algorithmic IQ, or AIQ, is an attempt to convert Legg and Hutter's theoretical Universal Intelligence Measure (based on Solomonoff's inductive inference) into a working practical test of machine intelligence.

Two of the biggest advantages of these tests are their applicability to non-human intelligences and the absence of the need for human interrogators.

Ebert test

The Turing test inspired the Ebert test, proposed in 2011 by film critic Roger Ebert, which assesses whether a computer-synthesized voice has sufficient skill in intonation, inflection, timing, and so forth to make people laugh.

Predictions

Turing predicted that machines would eventually pass the test. In fact, he estimated that by the year 2000, machines with about 100 MB of storage would be able to fool 30% of human judges in a five-minute test, and that people would no longer consider the phrase "thinking machine" contradictory. (In practice, from 2009 to 2012, the chatbots that took part in the Loebner Prize managed to fool a judge only once, and only because the human participant was pretending to be a chatbot.) Turing also predicted that machine learning would be an essential part of building powerful machines, a claim now considered plausible by AI researchers.

In a 2008 paper submitted to the 19th Midwest Conference on Artificial Intelligence and Cognitive Science, Dr. Shane T. Mueller predicted that a variant of the Turing test called the "Cognitive Decathlon" could be accomplished within five years.

By extrapolating the exponential growth of technology over several decades, futurist Ray Kurzweil predicted that machines capable of passing the Turing test would be built in the near future. In 1990, Kurzweil placed this near future around the year 2020; by 2005, he had revised his estimate to 2029.

The "Long Bet Project Nr. 1" is a $20,000 bet between Mitch Kapor (pessimist) and Ray Kurzweil (optimist) on whether a machine will pass the Turing test by the year 2029. In the Long Now Turing test, each of the three judges will interview each of the four participants (i.e. the computer and three humans) for two hours each, for a total of eight hours of interviews per judge. The bet specifies the conditions in detail.

Conferences

Turing Colloquium

The year 1990 marked the 40th anniversary of the first publication of Turing's text “Computing Machinery and Intelligence” and interest in it was revived. Two important events were held that year, the first being the Turing Colloquium held at the University of Sussex in April, which brought together academics and researchers from different disciplines to discuss the Turing Test in terms of its past, present and future; the second event was the annual Loebner Prize competition.

Blay Whitby listed four key points in the history of the Turing test: the publication of "Computing Machinery and Intelligence" in 1950, the announcement of ELIZA by Joseph Weizenbaum in 1966, the creation of PARRY by Kenneth Colby, and the 1990 Turing Colloquium.

2005 Conversational Systems Colloquium

In November 2005, the University of Surrey hosted a meeting of artificial conversational entity developers, attended by Loebner Prize winners Robby Garner, Richard Wallace, and Rollo Carpenter. Guest speakers included David Hamill, Hugh Loebner (sponsor of the Loebner Prize), and Huma Shah.

AISB Turing Test Symposium 2008

In parallel with the 2008 Loebner Prize held at the University of Reading, the Society for the Study of Artificial Intelligence and the Simulation of Behaviour (AISB) hosted a symposium dedicated to discussing the Turing test, organized by John Barnden, Mark Bishop, Huma Shah, and Kevin Warwick. Speakers included Royal Institution Director Susan Greenfield, Selmer Bringsjord, Andrew Hodges (Turing's biographer), and Owen Holland (consciousness scientist). No agreement was reached on a canonical Turing test, but Bringsjord noted that a larger prize would result in the Turing test being passed sooner.

AISB Turing Test Symposium 2010

Sixty years after its introduction, continued debate over Turing's question "Can machines think?" led to its reconsideration at the 21st-century AISB Convention, held from March 29 to April 1, 2010 at De Montfort University, UK. The AISB is the Society for the Study of Artificial Intelligence and the Simulation of Behaviour.

The Year of Turing and Turing100 in 2012

Throughout 2012, a considerable number of events to celebrate Turing's life and scientific impact took place. The Turing100 group supported these events and organized a special Turing Test at Bletchley Park on June 23, 2012 to celebrate the 100th anniversary of Turing's birth.

AISB/IACAP Turing Test Symposium 2012

The latest discussions on the Turing test were held in a symposium with 11 speakers, organized by Vincent C. Müller (ACT and Oxford) and Aladdin Ayesh (De Montfort), with Mark Bishop, John Barnden, Alessio Plebe, and Pietro Perconti.
