Synthesizer

A synthesizer is an electronic musical instrument that uses circuits to generate electrical signals, which are then converted into audible sound. One feature that distinguishes the synthesizer from other electronic instruments is that its sounds can be created and modified. Synthesizers can imitate other instruments or generate new timbres. They are usually played through a keyboard. Synthesizers without a built-in controller are called "sound modules" and are controlled through MIDI or control voltage. A sound module (a synthesizer without a keyboard, governed by a MIDI input) should not be confused with a modular synthesizer, which is usually a complete analog instrument divided into separate modules, often mounted in a rack.

Synthesizers use various methods to generate and process a signal, which can be analog or digital. Among the most popular synthesis techniques are additive synthesis, subtractive synthesis, frequency modulation, physical modeling, phase modulation, and sample-based synthesis. Less common forms of synthesis (see Types of synthesis) include subharmonic synthesis, a variant of additive synthesis built on subharmonics (used by the Mixtur-Trautonium), and granular synthesis, a sample-based technique that works on small grains of sound and generally results in soundscapes.

First electrical instruments

One of the first electrical instruments, the "musical telegraph", was invented by the electrical engineer Elisha Gray, who applied for a patent on January 27, 1876. He accidentally discovered that a self-vibrating electromagnetic circuit could generate sound, and from this invented a basic single-note oscillator. The musical telegraph used steel reeds whose oscillations were created by electromagnets and transmitted over a telegraph line. Gray also built a simple loudspeaker into later models. It consisted of a diaphragm that vibrated in a magnetic field, making the oscillator audible.

This was a remote electromechanical musical instrument that used telegraphy and an electric buzzer to generate a fixed timbre. However, it lacked any function for arbitrary sound synthesis. Some have mistakenly called it "the first synthesizer".

Early additive synthesizers – tonewheel organs

In 1897, Thaddeus Cahill invented the Telharmonium (or Dynamophone), which used dynamos (early electrical generators) and was capable of additive synthesis, like the Hammond organ invented in 1934. However, Cahill's business was not successful for various reasons (the size of the system, the rapid evolution of electronics, crosstalk on the telephone line, etc.), and similar but more compact instruments, such as tonewheel organs, were subsequently developed.

Emergence of electronics and the first electronic instruments

In 1906, American engineer Lee De Forest ushered in the "electronic age" by inventing the first amplifier tube, called the Audion. This led to the development of new entertainment technologies, including radio and talkies. These new technologies also influenced the music industry, and several of the first electronic musical instruments used tubes, including:

- Audion Piano by Lee De Forest in 1915

- Theremin by Léon Theremin in 1920

- Ondes Martenot by Maurice Martenot in 1928

- Trautonium by Friedrich Trautwein in 1929

Most early instruments used "heterodyne circuits" to produce audio frequencies and were limited in their synthesis capabilities. The Ondes Martenot and the Trautonium were developed continuously for several decades, eventually acquiring qualities similar to those of later synthesizers.

Graphical sound

In the 1920s, Arseny Avraamov developed various graphical sound systems, and similar systems were developed around the world (Holzer, 2010). In 1938, the Soviet engineer Yevgeny Murzin designed a composition tool called ANS, one of the first real-time additive synthesizers using optoelectronics. Although his idea of reconstructing a sound from its image was very simple, the instrument was not realized until 20 years later, in 1958, as Murzin was "an engineer who worked in areas not related to music" (Kreichi, 1997).

Subtractive synthesis and polyphonic synthesizers

By the 1930s and 1940s, the basic elements required for modern analog subtractive synthesis (oscillators, filters, envelope controls, and various effects units) had already appeared and were implemented in various electronic instruments.

The first polyphonic synthesizers were developed in Germany and the United States. The Warbo Formant Orgel, developed by Harald Bode in Germany in 1937, was a four-voice key-assignment keyboard with two formant filters and a dynamic envelope controller, and was possibly manufactured commercially by a factory in Dachau, according to 120 Years of Electronic Music. The Hammond Novachord, released in 1939, was an electronic keyboard that used twelve sets of oscillators with octave dividers to generate sound, complete with vibrato, a resonator filter bank, and a dynamic envelope controller. During the three years that Hammond manufactured this model, 1,069 units were shipped, but production was stopped at the start of World War II. Both instruments were forerunners of electronic organs and polyphonic synthesizers.

Monophonic Electronic Keyboards

During the 1940s and 1950s, before electronic organs became popular and combo organs were introduced, a number of portable monophonic instruments with small keyboards were developed and marketed. These small instruments consisted of an electronic oscillator, a vibrato effect, passive filters, etc., and most of them (with the exception of the Clavivox) were designed for use with conventional ensembles, rather than as experimental instruments for the electronic music studios that would later contribute to the development of modern synthesizers. Some of these small instruments are:

- Solovox (1940) by the Hammond company: a monophonic add-on keyboard instrument consisting of a large tone cabinet and a small keyboard unit, intended to accompany a piano with a monophonic lead voice of organ or orchestral sound.

- Multimonica (1940) designed by Harald Bode and produced by Hohner: an instrument with two keyboards, consisting of an electrically blown harmonium at the bottom and a monophonic sawtooth-wave synthesizer at the top.

- Ondioline (1941) designed by Georges Jenny in France.

- Clavioline (1947) designed by Constant Martin and produced by Selmer, Gibson and others. This instrument was featured on several popular recordings of the 1960s, including "Runaway" (1961) by Del Shannon, "Telstar" (1962) by The Tornados and "Baby, You're a Rich Man" (1967) by The Beatles.

- Clavivox (1952) by Raymond Scott.

Other innovations

In the late 1940s, Canadian inventor and composer Hugh Le Caine invented the Electronic Sackbut, one of the first instruments to allow three aspects of sound (volume, pitch and timbre) to be controlled in real time, corresponding to today's pressure-sensitive keyboards and pitch and modulation controllers. The controllers were initially implemented as a "multidimensional pressure-sensitive keyboard" in 1945, then changed to a group of dedicated left-hand controllers in 1948.

In Japan, as early as 1935, Yamaha released the Magna Organ, a multi-timbral keyboard instrument based on electrically blown free reeds with pickups. It was similar to the electrostatic reed organs developed by Frederick Albert Hoschke in 1934 and manufactured by Everett and Wurlitzer until 1961.

However, at least one Japanese composer was not satisfied with the situation at the time. In 1949, Minao Shibata discussed the "concept of a musical instrument with a high level of performance that could synthesize any type of sound waves" and that "...was operated in a simple" manner, predicting that with such an instrument "...the music scene would change drastically".

Electronic music studios as sound synthesizers

After World War II, electronic music, including electroacoustic music and musique concrète, was created by contemporary composers, and numerous "electronic music studios" were established all over the world, especially in Bonn, Cologne, Paris and Milan. These studios were often packed with electronic equipment including oscillators, filters, tape recorders, audio consoles, etc., and the entire studio functioned as a single sound synthesizer.

Origin of the term "sound synthesizer"

Between 1951 and 1952, RCA produced a machine called the "Electronic Music Synthesizer"; however, it was more accurately a "composition machine", as it did not produce sounds in real time. RCA then developed the first "programmable sound synthesizer", the RCA Mark II Sound Synthesizer, and installed it at the Columbia-Princeton Electronic Music Center in 1957. Prominent composers including Vladimir Ussachevsky, Otto Luening, Milton Babbitt, Halim El-Dabh, Bülent Arel, Charles Wuorinen and Mario Davidovsky used the RCA synthesizer in several of their compositions.

From the modular synthesizer to popular music

Between 1959 and 1960, Harald Bode developed a modular synthesizer and sound processor, and in 1961 he wrote a paper exploring the concept of a portable modular synthesizer using new transistor technology. His ideas were taken up at the same time by Donald Buchla and Robert Moog in the United States and Paolo Ketoff in Italy; among them, Moog is known as the first synthesizer designer to popularize "voltage control" in analog musical instruments. In parallel with the work of Moog, Buchla and Ketoff, other electronic instrument makers were developing inventions around this idea in different parts of the world. In the mid-1960s, Raúl Pavón Sarrelangue developed an analog synthesizer in Mexico, which he named the "Omnifón". In his book, written decades later, the author explained that this synthesizer had "a sine, square and ramp wave oscillator, continuously variable between 10 Hz and 20 kHz, with a wave purity that I have not seen to date in any commercial synthesizer". It also had "an envelope generator, a series of timbral filters, a white noise generator and other facilities". However, the Omnifón never became more than an isolated invention developed for personal use. Pavón Sarrelangue bitterly explains the fate of the instrument: "My gadgets? There is no other engineer more unsuccessful than me, with older instruments. They also represent one more industry that died without being born, thanks to our traditional apathy and indifference (manufacturing was offered to various manufacturers of electronic equipment). Thus, the electronic keyboard returned to its primitive muteness before the powerful voices of Moog, Buchla, EMS... and all those who burst forth at every moment with new ideas and with capital and support for their manufacture and commercialization, which will surely find a warm acceptance in more progressive environments than ours".

Robert Moog built his first prototype between 1963 and 1964 and was then commissioned by the Alwin Nikolais Dance Theater in New York, while Donald Buchla was commissioned by Morton Subotnick. In the late 1960s and early 1970s, the development of small solid-state components allowed synthesizers to become portable instruments, as Harald Bode had proposed in 1961. By the early 1980s, synthesizer companies were selling low-priced, compact versions of their instruments to the public. This, along with the development of the Musical Instrument Digital Interface (MIDI) protocol, made it easier to integrate and synchronize synthesizers and other electronic instruments for use in musical composition. In the 1990s, synthesizer emulators known as soft synthesizers began to appear for computers. Subsequently, VST and other plugins were able to emulate vintage synthesizer hardware to some degree.

The synthesizer had a considerable effect on 20th-century music. Micky Dolenz of The Monkees bought one of the early Moog synthesizers. The band was the first to release an album featuring the Moog synthesizer, Pisces, Aquarius, Capricorn & Jones Ltd. (1967), which reached number one on the charts. The album The In Sound From Way Out! by Perrey and Kingsley, made with the Moog and tape, was released in 1966. A few months later, the Rolling Stones' "2000 Light Years from Home" and the title track of The Doors' album Strange Days (1967) also included a Moog, played by Brian Jones and Paul Beaver respectively. In the same year, Bruce Haack built a homemade synthesizer that he demonstrated on Mister Rogers' Neighborhood. The synthesizer included a sampler that recorded, stored, played, and repeated sounds controlled by switches, light sensors, and contact with human skin. Switched-On Bach (1968) by Wendy Carlos, recorded using Moog synthesizers, influenced many musicians of the time and is one of the most popular classical music recordings, along with the records of Isao Tomita (particularly Snowflakes Are Dancing, 1974), who in the early 1970s used synthesizers to create new artificial sounds instead of using real instruments. The Moog sound reached the mass market with Bookends (1968) by Simon and Garfunkel and Abbey Road by The Beatles the following year; hundreds of subsequent popular recordings used synthesizers, frequently the Minimoog. Electronic music albums by Beaver and Krause, Tonto's Expanding Head Band, The United States of America and White Noise reached a sizeable audience, and progressive rock musicians such as Richard Wright of Pink Floyd and Rick Wakeman of Yes began to use the new portable synthesizers extensively. Stevie Wonder and Herbie Hancock also contributed to the popularity of synthesizers in African-American music. Early adopters included Keith Emerson of Emerson, Lake & Palmer, Todd Rundgren, Pete Townshend, and Vincent Crane of The Crazy World of Arthur Brown. In Europe, the first number-one single to prominently feature a Moog was Chicory Tip's "Son of My Father" (1972).

Polyphonic Keyboards and the Digital Revolution

In 1978, the success of the Prophet-5, a polyphonic, microprocessor-controlled keyboard synthesizer, helped move synthesizers away from large modular units and toward smaller keyboard instruments. This shift, begun by the Minimoog and later the ARP Odyssey, helped accelerate the integration of synthesizers into popular music. The first polyphonic electronic instruments of the 1970s began as string synthesizers before advancing to multi-synthesizers incorporating monophonic synthesizer functions and more; they gradually fell out of favor as new synthesizer models appeared that allowed note assignment. These polyphonic synthesizers were mainly manufactured in the United States and Japan from the mid-1970s to the early 1980s, and include the Yamaha CS-80 (1976), the Oberheim Polyphonic and Oberheim OB-X (1975 and 1979), the Prophet-5 (1978), and the Roland Jupiter-4 and Jupiter-8 (1978 and 1981).

By the late 1970s, digital synthesizers and samplers had arrived on the world market (and are still sold today) as a result of extensive research and development. Compared with the sounds of analog synthesizers, the digital sounds produced by these new instruments had a number of different characteristics: clear attack and well-defined sounds, a tonal quality with inharmonic content, and complex control of sound texture, among others. Although these new instruments were expensive, their features were quickly adopted by musicians, especially in the United Kingdom and the United States. This led to a trend toward music production using digital sounds and set the stage for the development of popular low-cost digital instruments over the next decade. Relatively successful instruments, each selling hundreds of units per series, included the NED Synclavier (1977), Fairlight CMI (1979), E-mu Emulator (1981), and PPG Wave (1981).

Some of the first successful digital synthesizers and digital samplers introduced in the late 1970s and early 1980s (each selling hundreds of units per series) are:

- NED Synclavier (1977-1992) by New England Digital, based on research on the Dartmouth Digital Synthesizer since 1973.

- Fairlight CMI (1979-1988, more than 300 units) in Sydney, based on the early development of the Qasar M8 by Tony Furse in Canberra since 1972.

- Yamaha GS-1, GS-2 (1980, about 100 units) and CE20, CE25 (1982) in Hamamatsu, based on John Chowning's research on frequency modulation synthesis between 1967 and 1973 and on Yamaha's early development of the TRX-100 and the Programmable Algorithm Music Synthesizer (PAMS) between 1973 and 1979 (Yamaha, 2014).

- E-mu Emulator (1981-2000s) in California, loosely based on the notion of wavetable synthesis seen in the MUSIC-N programming languages in the 1960s.

- PPG Wave (1981-1987, about 1000 units) in Hamburg, based on the wavetable synthesis previously implemented in the PPG Wavecomputer 360, 340 and 380 in 1978.

Descendants of most of the products on this list were still being sold in the 21st century, e.g. the Yamaha DX200 in 2001, the E-mu Emulator X in 2009, the Fairlight CMI 30A in 2011, and Waldorf's wavetable synthesis products as new versions of the PPG Wave.

The history of additive synthesis is also an important line of research in digital synthesis, although it does not appear in the list above because of its lack of commercial success; most of the products in the list above, and even Yamaha's Vocaloid (2003, using EpR based on Spectral Modeling Synthesis), were influenced by it.

In 1983, however, Yamaha's revolutionary DX7 digital synthesizer swept through popular music, leading to the adoption and development of digital synthesizers in many forms throughout the 1980s and to the rapid decline of analog synthesizer technology. In 1987, Roland released the D-50 synthesizer, which combined existing sample-based synthesis with built-in digital effects, while the popular Korg M1 (1988) heralded the era of workstation synthesizers, which use ROM sound samples to compose and sequence complete songs instead of traditional sound synthesis.

Throughout the 1990s, the popularity of dance music using analog sounds, the advent of digital analog-modeling synthesizers that recreate these sounds, and the development of the Eurorack modular synthesizer system (initially introduced with the Doepfer A-100 and since adopted by other manufacturers) all contributed to a revival of interest in analog technology. The turn of the new millennium saw further improvements in technology that led to the popularity of software synthesizers. In the 2010s, new analog synthesizers, in both keyboard and modular form, were released alongside digital instruments.

Impact on popular music

During the 1970s, Jean-Jacques Perrey, Jean-Michel Jarre, Gary Numan, and Vangelis released hit albums in which synthesizers were prominent. Over time, this helped influence the rise of synthpop, a subgenre of new wave music, in the late 1970s. The work of German electronic music bands such as Kraftwerk (1970) and Tangerine Dream, British artists such as Gary Numan and David Bowie, and the Japanese band Yellow Magic Orchestra was influential in the development of the genre. The hits "Are 'Friends' Electric?" and "Cars" (1979) by Gary Numan made heavy use of synthesizers. "Enola Gay" (1980) by OMD used distinctive electronic percussion and a synthesized melody. Soft Cell used a synthesized melody on their 1981 hit "Tainted Love". Nick Rhodes, keyboardist for Duran Duran, used various synthesizers including the Roland Jupiter-4 and Jupiter-8.

Chart hits include "Just Can't Get Enough" (1981) by Depeche Mode, "Don't You Want Me" by The Human League and "Flashdance... What a Feeling" (1983) by Giorgio Moroder for Irene Cara. Other notable groups within synthpop are New Order, Visage, Japan, Ultravox, Spandau Ballet, Culture Club, Eurythmics, Yazoo, Thompson Twins, A Flock of Seagulls, Heaven 17, Erasure, Soft Cell, Blancmange, Pet Shop Boys, Queen, Bronski Beat, Kajagoogoo, ABC, Naked Eyes, Devo, and the early material of Tears for Fears and Talk Talk. Giorgio Moroder, Howard Jones, Kitaro, Stevie Wonder, Peter Gabriel, Thomas Dolby, Kate Bush, Dónal Lunny, Deadmau5, Frank Zappa and Grimes also used synthesizers. The synthesizer became one of the most important instruments in the music industry.

Types of synthesis

Additive synthesis builds sounds by summing waves (which are usually harmonically related). The earliest analog examples of additive synthesis are the Telharmonium and the Hammond organ. For real-time additive synthesis, wavetable synthesis is useful because it reduces the hardware and processing power required; it is commonly implemented in simple MIDI instruments (such as educational keyboards) and sound cards.
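The summing of harmonically related waves can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `additive_tone`, the sample rate and the choice of amplitudes are assumptions made for the example, not part of any particular instrument.

```python
import math

def additive_tone(fundamental_hz, harmonic_amps, duration_s=0.01, sample_rate=44100):
    """Sum sine waves at integer multiples of the fundamental.

    harmonic_amps[k] is the amplitude of harmonic number k + 1.
    """
    n_samples = round(duration_s * sample_rate)
    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        value = sum(
            amp * math.sin(2 * math.pi * fundamental_hz * (k + 1) * t)
            for k, amp in enumerate(harmonic_amps)
        )
        samples.append(value)
    return samples

# Amplitudes 1, 1/2, 1/3, ... give a bright, sawtooth-like timbre.
saw_like = additive_tone(220.0, [1 / k for k in range(1, 9)])
```

Changing the list of amplitudes changes the timbre without changing the pitch, which is the essence of the technique.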

Subtractive synthesis is based on filtering waves that are rich in harmonic content. Because of its simplicity, it is the basis of early synthesizers such as the Moog synthesizer. Subtractive synthesizers employ a simple acoustic model that assumes an instrument can be approximated by a signal generator (producing sawtooth waves, square waves, etc.) followed by a filter. The combination of simple modulations (such as pulse-width modulation or oscillator sync) with exaggerated low-pass filters is responsible for the "classic synth" sound commonly associated with "analog synthesis", a term that is often misused when referring to software synthesizers using subtractive synthesis.
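The "signal generator followed by a filter" model can be sketched as follows. The code assumes a naive sawtooth and a first-order digital low-pass filter, both chosen for brevity; the names `sawtooth` and `one_pole_lowpass` are hypothetical, and real analog filters are usually steeper and resonant.

```python
import math

def sawtooth(freq_hz, n_samples, sample_rate=44100):
    """Naive (non-band-limited) sawtooth in the range [-1, 1)."""
    return [2.0 * ((freq_hz * n / sample_rate) % 1.0) - 1.0 for n in range(n_samples)]

def one_pole_lowpass(samples, cutoff_hz, sample_rate=44100):
    """First-order low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out

raw = sawtooth(110.0, 4410)                      # harmonically rich source
filtered = one_pole_lowpass(raw, cutoff_hz=500)  # upper harmonics attenuated
```

Lowering `cutoff_hz` removes more of the upper harmonics and makes the tone duller; raising it lets the bright sawtooth through.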

Frequency modulation synthesis (also known as FM synthesis) is a process that involves at least two signal generators (sine wave generators, commonly called "operators" on FM-only synthesizers) to create and modify a voice. Usually, this is done by generating an analog or digital signal that modulates the tonal or amplitude characteristics of a carrier signal. FM synthesis began with John Chowning, who patented the idea and sold it to Yamaha. Unlike the exponential relationship between voltage and frequency, and the multiple waveforms, of classical one-volt-per-octave oscillators, the synthesis created by Chowning employs a linear relationship between voltage and frequency and uses sine wave oscillators. The resulting complex waveform can be composed of various frequencies, and there is no requirement that they share a harmonic relationship. More sophisticated FM synthesizers such as the Yamaha DX7 series can have six operators per voice. Some FM synthesizers also have filters and different types of variable amplifiers to shape the signal into a voice that can mimic acoustic instruments or create unique sounds. FM synthesis is particularly suited to recreating percussive sounds such as bells, timpani or other types of percussion.
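A two-operator voice can be sketched with the textbook formulation sin(2πf_c t + I sin(2πf_m t)), where one sine operator modulates another. The function name `fm_tone`, the frequencies and the modulation index are illustrative assumptions, not settings from any specific instrument.

```python
import math

def fm_tone(carrier_hz, modulator_hz, index, n_samples, sample_rate=44100):
    """Two-operator FM: sin(2*pi*fc*t + index * sin(2*pi*fm*t))."""
    out = []
    for n in range(n_samples):
        t = n / sample_rate
        modulator = math.sin(2 * math.pi * modulator_hz * t)
        out.append(math.sin(2 * math.pi * carrier_hz * t + index * modulator))
    return out

# A non-integer carrier-to-modulator ratio tends to give bell-like partials.
bell_like = fm_tone(carrier_hz=200.0, modulator_hz=280.0, index=5.0, n_samples=44100)
# With index 0 the modulator has no effect and the result is a plain sine.
plain_sine = fm_tone(200.0, 280.0, 0.0, 44100)
```

Raising `index` spreads energy into more sidebands around the carrier, so a single parameter moves the sound from a pure tone to a dense, metallic spectrum.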

Phase distortion synthesis is a method implemented in Casio CZ synthesizers. It is similar to FM synthesis but avoids infringing Chowning's patent. It can be categorized both as modulation synthesis (along with FM synthesis) and as distortion synthesis (along with waveshaping synthesis and discrete summation formulas).

Granular synthesis is a type of synthesis based on the manipulation of small sound samples.

Physical modeling synthesis is the synthesis of sound using equations and algorithms to simulate a real instrument or some other physical source of sound. This involves modeling the components of musical objects and creating systems that define action, filters, envelopes, and other parameters over time. Several models can be combined, for example a violin with the characteristics of a steel guitar and the hammer action of a piano. When an initial set of parameters is run through the physical simulation, the simulated sound is generated. Although physical modeling was not a new concept in acoustics and sound synthesis, it was not until the development of the Karplus-Strong algorithm and the increase in digital signal processing power in the late 1980s that commercial implementations became affordable. The quality and speed of physical modeling on computers improves with increased processing power.
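The Karplus-Strong algorithm mentioned above is short enough to sketch directly. The version below is a common textbook variant (a noise burst recirculating through an averaged delay line), not a reconstruction of any commercial implementation; the function name, the decay factor of 0.996 and the seed are assumptions made for the example.

```python
import random

def karplus_strong(freq_hz, n_samples, sample_rate=44100, decay=0.996, seed=0):
    """Plucked-string sketch: a noise burst circulating in an averaged delay line."""
    rng = random.Random(seed)
    period = max(2, round(sample_rate / freq_hz))  # delay length sets the pitch
    delay_line = [rng.uniform(-1.0, 1.0) for _ in range(period)]
    out = []
    for n in range(n_samples):
        i = n % period
        current = delay_line[i]
        following = delay_line[(i + 1) % period]
        out.append(current)
        # Averaging adjacent samples acts as a gentle low-pass filter,
        # so high harmonics die away faster than low ones.
        delay_line[i] = decay * 0.5 * (current + following)
    return out

pluck = karplus_strong(220.0, 44100)
```

The initial noise burst plays the role of the pluck, and the delay line plays the role of the string: each pass around the loop is one period of vibration, slightly quieter and duller than the last.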

Sample-based synthesis involves recording an actual instrument as a digitized waveform and playing it back at different speeds (pitches) to produce different notes. This technique is referred to as "sampling". Most samplers designate a part of the sample for each component of the ADSR envelope and repeat that section while changing the volume according to the envelope. This allows samplers to vary the envelope while playing the same note. See also wavetable synthesis and vector synthesis.
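Playing a recording back at a different speed amounts to reading it with a different step size. The sketch below assumes linear interpolation between neighbouring samples; the function name `resample` and the tiny hand-made "recording" are purely illustrative.

```python
def resample(sample, speed):
    """Play a recorded waveform at a different speed using linear interpolation.

    speed=2.0 reads twice as fast (one octave up, half as long);
    speed=0.5 reads half as fast (one octave down, twice as long).
    """
    out = []
    position = 0.0
    last = len(sample) - 1
    while position <= last:
        i = int(position)
        frac = position - i
        following = sample[min(i + 1, last)]
        out.append(sample[i] * (1.0 - frac) + following * frac)
        position += speed
    return out

recorded = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5, 0.0]  # one triangle cycle
octave_up = resample(recorded, 2.0)
octave_down = resample(recorded, 0.5)
```

Because pitch and duration change together, samplers typically loop a section of the sample so that a note can be held regardless of how fast it is read.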

Analysis/resynthesis is a form of synthesis that uses a series of filters or Fourier transforms to analyze the harmonic content of a sound. The results are used to "resynthesize" the sound using a series of oscillators. Vocoders, linear predictive coding and other forms of speech synthesis are based on analysis/resynthesis.
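The analyze-then-rebuild idea can be sketched with a naive discrete Fourier transform followed by a bank of cosine oscillators. This is a minimal sketch for short signals only (it runs in quadratic time); the function names `analyze` and `resynthesize` are hypothetical, and practical systems use a fast Fourier transform over overlapping windows.

```python
import cmath
import math

def analyze(signal):
    """Naive DFT: return (amplitude, phase) for bins 0..N/2 of a real signal."""
    n = len(signal)
    partials = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal))
        # Bins other than DC and Nyquist also stand for their mirrored twin.
        scale = 1.0 if k == 0 or (n % 2 == 0 and k == n // 2) else 2.0
        partials.append((scale * abs(coeff) / n, cmath.phase(coeff)))
    return partials

def resynthesize(partials, n):
    """Rebuild the signal with one cosine oscillator per analyzed partial."""
    return [
        sum(amp * math.cos(2 * math.pi * k * i / n + phase)
            for k, (amp, phase) in enumerate(partials))
        for i in range(n)
    ]

signal = [math.sin(2 * math.pi * 3 * i / 32) + 0.5 * math.cos(2 * math.pi * 5 * i / 32)
          for i in range(32)]
partials = analyze(signal)
rebuilt = resynthesize(partials, len(signal))
```

Between the two steps the list of amplitudes and phases can be edited, which is what makes the approach a synthesis method rather than just a round trip.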

ESSynth is a mathematical model for interactive sound synthesis based on evolutionary computation; it uses genetic operators and fitness functions to create the sound.

Imitative synthesis

Sound synthesis can be used to imitate acoustic sound sources. Generally, a sound that does not change over time consists of a fundamental partial, or harmonic, and any number of further partials. Synthesis may attempt to imitate the amplitude and pitch of the partials of an acoustic sound source.

When natural sounds are analyzed in the frequency domain (as on a spectrogram), their spectra exhibit amplitude peaks at each harmonic of the fundamental, corresponding to the resonant properties of the instrument (these spectral peaks are also known as formants). Some harmonics may have greater amplitudes than others. The set of harmonics and their relative amplitudes is known as the harmonic content. A convincing synthesized imitation requires accurate reproduction of the original sound in both the frequency and time domains. A sound does not necessarily have the same harmonic content throughout its duration. Normally, high-frequency harmonics decay faster than low-frequency harmonics.

In most synthesizers, for re-synthesis purposes, recordings of real instruments are composed of several components representing the acoustic responses of different parts of the instrument, the sounds produced by the instrument during different parts of a performance, or the behavior of the instrument under different playing conditions (pitch, intensity, strumming, etc.).

Components

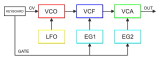

Synthesizers generate sound through analog and digital techniques. Early synthesizers had analog hardware, but modern synthesizers use a combination of digital signal processing software and hardware, or software alone. Digital synthesizers commonly emulate classic analog synthesizer designs. The sound is controllable by an operator through circuits or virtual stages such as:

- Electronic oscillators – create sounds with a timbre that depends on the generated waveform. Voltage-controlled oscillators (VCOs) and digital oscillators can be used. Sounds built by harmonic additive synthesis from pure sine waves somewhat resemble organs, while frequency modulation and phase distortion synthesis use one oscillator to modulate another. Subtractive synthesis depends on filtering an oscillator signal with rich harmonic content. Sample-based synthesis and granular synthesis use one or more recorded sounds instead of an oscillator.

- Voltage-controlled filter (VCF) – shapes the sound generated by the oscillators in the frequency domain, usually under the control of an envelope or an LFO. Filters are essential to subtractive synthesis.

- Voltage-controlled amplifier (VCA) – after the signal generated by one or several VCOs has been modified by filters and LFOs, and its waveform has been shaped by an ADSR envelope generator, it passes through one or more voltage-controlled amplifiers (VCAs). A VCA is a preamplifier that boosts the electronic signal before it reaches a built-in or external amplifier, and it controls the amplitude (volume) using an attenuator. The gain of a VCA is set by a control voltage (CV) that comes from an envelope generator, an LFO, the keyboard or any other source.

- ADSR envelopes – provide envelope modulation to shape the volume or harmonic content of the note in the time domain, using the parameters attack, decay, sustain and release. They are used in most forms of synthesis. ADSR control is provided by envelope generators.

- Low-frequency oscillator (LFO) – an oscillator of adjustable frequency that can be used to modulate the sound rhythmically, for example to create tremolo or vibrato, or to control the frequency at which a filter operates. LFOs are used in several types of synthesis.

- Other sound processing effects, such as ring modulators, may also be found.

Filter

Electronic filters are particularly important in subtractive synthesis, as they are designed to pass some regions of the frequency spectrum while attenuating (subtracting) others. The low-pass filter is the most commonly used, but band-pass and high-pass filters are sometimes available.

The filter can be controlled by a second ADSR envelope. On many synthesizers with filter envelopes, an "envelope modulation" parameter determines how much the envelope affects the filter. If it is turned all the way down, the filter produces a flat sound with no envelope. As it is turned up, the envelope becomes more noticeable, expanding the minimum and maximum range of the filter.
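The role of the envelope modulation amount can be sketched as a simple scaling of the envelope before it is added to the filter's base cutoff. The linear mapping in hertz, the function name `filter_cutoff` and the 8000 Hz sweep range are assumptions for illustration; actual instruments often scale the cutoff exponentially.

```python
def filter_cutoff(base_cutoff_hz, envelope_value, env_mod, max_sweep_hz=8000.0):
    """Map an envelope value in [0, 1] to a cutoff frequency.

    env_mod in [0, 1] scales how far the envelope pushes the cutoff
    above its base setting; 0 leaves the cutoff static.
    """
    return base_cutoff_hz + env_mod * envelope_value * max_sweep_hz

static = filter_cutoff(500.0, envelope_value=1.0, env_mod=0.0)   # 500.0 Hz
swept = filter_cutoff(500.0, envelope_value=1.0, env_mod=0.5)    # 4500.0 Hz
```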

ADSR Envelope

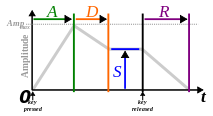

When an acoustic musical instrument produces a sound, the volume and spectral content of that sound change over time in ways that vary from instrument to instrument. The "attack" and "decay" of a sound have a large effect on the sonic qualities of an instrument. Sound synthesis techniques commonly employ an "envelope generator", which controls the parameters of the sound at different points in its duration. The most common form is the ADSR envelope (attack, decay, sustain, release), which can be applied to amplitude, filter frequency, etc. The envelope can be a discrete circuit or a module implemented in software. The behavior of an ADSR envelope is specified by four parameters:

- Attack time: the time taken to go from an initial value to the peak value, starting when the key is pressed.

- Decay time: the time taken, after the attack, to fall from the peak to the designated sustain level.

- Sustain level: the level held during the main part of the sound, until the key is released.

- Release time: the time taken, after the key is released, for the sound to decay from the sustain level to zero.
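The four parameters can be turned into a level-versus-time function as in the sketch below. Linear segments are assumed for simplicity (many instruments use exponential curves), and the function name `adsr` and its argument order are choices made for this example.

```python
def adsr(t, note_off, attack, decay, sustain, release):
    """Envelope level in [0, 1] at time t (seconds) for a key held until note_off."""
    def held_level(time):
        if time < attack:
            return time / attack                      # rise to the peak
        if time < attack + decay:
            return 1.0 - (1.0 - sustain) * (time - attack) / decay
        return sustain                                # hold while the key is down

    if t < 0.0:
        return 0.0
    if t < note_off:
        return held_level(t)
    # Release starts from whatever level the envelope had reached at key-up.
    start = held_level(note_off)
    elapsed = t - note_off
    if elapsed >= release:
        return 0.0
    return start * (1.0 - elapsed / release)

# Key held for 1 s with attack 0.1 s, decay 0.2 s, sustain 0.5, release 0.4 s.
levels = [adsr(t, 1.0, 0.1, 0.2, 0.5, 0.4) for t in (0.05, 0.2, 0.5, 1.2, 2.0)]
```

Note that attack, decay and release are times while sustain is a level, which is why the sustain segment has no fixed duration: it lasts for as long as the key is held.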

One of the earliest implementations of ADSR is found in the Hammond Novachord of 1938 (which predates the Moog synthesizer by 25 years). A seven-position knob controls the attack, decay and sustain settings for all 72 notes, and a pedal controls the release time. The notion of ADSR was specified by Vladimir Ussachevsky (then director of the Columbia-Princeton Electronic Music Center) in 1965 while suggesting improvements to Bob Moog's work on synthesizers. However, the original notation for the parameters was (T1, T2, Esus, T3); it was later simplified to the current form (attack time, decay time, sustain level, release time) by ARP Instruments.

Some electronic instruments allow the ADSR envelope to be inverted, so that it behaves in the opposite way to a normal ADSR envelope. During the attack phase, the modulated sound parameter falls from its maximum amplitude to zero, and during the decay phase it rises to the value specified by the sustain parameter. After the key has been released, the sound parameter rises from the sustain amplitude back to the maximum amplitude.

A common variation of ADSR on some synthesizers, such as the Korg MS-20, is ADSHR (attack, decay, sustain, hold, release). The added "hold" parameter allows notes to be held at the sustain level for a set period of time before decaying. The General Instrument AY-3-8912 integrated circuit included only a "hold" parameter; the sustain level was not programmable. Another common variation is the AHDSR (attack, hold, decay, sustain, release) envelope, in which "hold" controls how long the envelope stays at full volume before entering the decay phase. Multiple attack, decay and release settings can be found on more sophisticated models.

Some synthesizers also allow a delay parameter before the attack. Modern synthesizers such as Dave Smith Instruments' Prophet '08 have DADSR (delay, attack, decay, sustain, release) envelopes. The delay parameter determines the length of the silence between the pressing of a key and the start of the attack. Some software synthesizers, such as Image-Line's 3xOSC (included in the FL Studio DAW), have DAHDSR (delay, attack, hold, decay, sustain, release) envelopes.

LFO

A low-frequency oscillator (LFO) generates an electrical signal, usually below 20 Hz. LFO signals provide a periodic control signal or sweep, commonly used for vibrato, tremolo, and other effects. In certain genres of electronic music, the LFO signal controls the cutoff frequency of a VCF to produce a rhythmic wah-wah sound or the wobble bass of dubstep.
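As a rough illustration, an LFO can be modelled as a slow sine wave whose output is scaled and added to another parameter. The sketch below assumes a sine shape and uses made-up function names and values; it shows one mapping to amplitude (tremolo) and one to filter cutoff (wobble).

```python
import math


def lfo(t, rate_hz):
    """Sine LFO output in the range -1.0 to 1.0 at time t (seconds)."""
    return math.sin(2.0 * math.pi * rate_hz * t)


def tremolo_gain(t, rate_hz, depth):
    """Gain that swings between 1.0 and (1.0 - depth) at the LFO rate."""
    return 1.0 - depth * 0.5 * (1.0 + lfo(t, rate_hz))


def wobble_cutoff(t, rate_hz, base_hz, depth_hz):
    """Filter cutoff that sweeps depth_hz above and below base_hz."""
    return base_hz + depth_hz * lfo(t, rate_hz)
```

With a 5 Hz LFO, a base cutoff of 800 Hz and a depth of 600 Hz, the cutoff sweeps between 200 Hz and 1400 Hz five times per second.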

Patch

A synthesizer patch (some manufacturers use the term program) is a sound setting. Moog synthesizers used cables ("patch cords") to connect the different sound modules. Because these machines had no memory in which to store settings, musicians wrote down the locations of the connected cables and the positions of the knobs on a sheet (which usually showed a diagram of the synthesizer). Since then, an overall sound setting on any type of synthesizer has been known as a patch.

In the late 1970s, patch memory (which allows "patches" or "programs" to be saved and loaded) began to appear on synthesizers such as the Oberheim Four-voice (1975/1976), the Sequential Circuits Model 700 Programmer (1977) and the Prophet-5 (1977/1978). After MIDI was introduced in 1983, more and more synthesizers could import or export patches via MIDI System Exclusive (SysEx) messages. When a synthesizer patch is transferred to a computer that has patch-editing software installed, the user can modify the patch's parameters and send the patch back to the synthesizer. Because there is no standard patch language, a patch created on one synthesizer rarely works on a different model. However, some manufacturers have designed families of synthesizers that are compatible with one another.
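The following sketch shows the general idea of moving a patch as a SysEx message. Only the framing is standard: a message starts with 0xF0, ends with 0xF7, carries a manufacturer ID, and contains 7-bit data bytes. The parameter list and its order are hypothetical, invented for this example, which is exactly why real patches are not portable between models. The ID 0x7D is the one reserved for non-commercial use.

```python
SYSEX_START = 0xF0
SYSEX_END = 0xF7
NON_COMMERCIAL_ID = 0x7D  # manufacturer ID reserved for non-commercial use

# Hypothetical patch layout; every real synthesizer defines its own.
PARAM_ORDER = ("attack", "decay", "sustain", "release", "cutoff", "resonance")


def patch_to_sysex(patch):
    """Pack a dict of 7-bit parameter values into a SysEx message."""
    data = []
    for name in PARAM_ORDER:
        value = patch[name]
        if not 0 <= value <= 127:
            raise ValueError(f"{name} must fit in 7 bits, got {value}")
        data.append(value)
    return bytes([SYSEX_START, NON_COMMERCIAL_ID, *data, SYSEX_END])


def sysex_to_patch(message):
    """Unpack a message produced by patch_to_sysex back into a dict."""
    if len(message) < 3 or message[0] != SYSEX_START or message[-1] != SYSEX_END:
        raise ValueError("not a complete SysEx message")
    if message[1] != NON_COMMERCIAL_ID:
        raise ValueError("unexpected manufacturer ID")
    values = message[2:-1]
    if len(values) != len(PARAM_ORDER):
        raise ValueError("unexpected patch length")
    return dict(zip(PARAM_ORDER, values))
```

A patch editor would, in this simplified picture, call `sysex_to_patch` on the received bytes, change some values, and send the result of `patch_to_sysex` back to the instrument.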

Control interfaces

Modern synthesizers often look like small pianos, some with additional knobs and buttons. These are integrated controllers, in which the sound-synthesis electronics are built into the same unit as the controller. However, several of the first synthesizers were modular and had no keyboard, while most modern synthesizers can be controlled via MIDI, which allows other means of playing, such as:

- Fingerboards and touchpads

- Electronic wind instruments

- Guitar-style interfaces

- Drum pads

- Music sequencers

- Contactless interfaces such as theremins

- Tangible interfaces such as the Reactable and AudioCubes

- Various auxiliary input devices, such as pitch-bend and modulation wheels, expression and sustain pedals, breath controllers and light-beam controllers

Fingerboard

A ribbon controller or any violin-like interface can be used to control the parameters of a synthesizer. The idea dates back to Léon Theremin's concept of 1922 and his Fingerboard Theremin and Keyboard Theremin, the Ondes Martenot (1928) by Maurice Martenot (a sliding metal ring) and the Trautonium (1929) by Friedrich Trautwein (finger pressure), and it was later used by Robert Moog. The ribbon controller has no moving parts; instead, a finger presses on the ribbon and moves along it, creating an electrical contact at some point on the thin, flexible strip, whose electrical potential varies from one end to the other. Older fingerboards used a long wire pressed against a resistive plate. A ribbon controller is similar to a touchpad, but it registers only linear movement. Although it can be used to operate any parameter that responds to voltage control, a ribbon controller is most commonly associated with pitch bending.
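Because the potential varies along the strip, the finger position can be read as a voltage and mapped to a control value. The sketch below is an illustration under stated assumptions: the 0 to 5 V range and both function names are invented, and the second function maps the position to a standard 14-bit MIDI pitch-bend message (status byte 0xE0, value 8192 at the center), which is one possible use rather than how any specific ribbon works.

```python
def ribbon_voltage(position, v_low=0.0, v_high=5.0):
    """Voltage picked up at a finger position between 0.0 and 1.0.

    The ribbon is treated as an ideal linear voltage divider; the
    0 to 5 V range is an assumption for this example.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0.0 and 1.0")
    return v_low + (v_high - v_low) * position


def ribbon_to_pitch_bend(position, channel=0):
    """Map a finger position (0.0 to 1.0) to a MIDI pitch-bend message."""
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0.0 and 1.0")
    if not 0 <= channel <= 15:
        raise ValueError("channel must be between 0 and 15")
    value = int(position * 16383 + 0.5)   # 14-bit value, 8192 = no bend
    lsb = value & 0x7F                    # low 7 bits are sent first
    msb = value >> 7                      # then the high 7 bits
    return bytes([0xE0 | channel, lsb, msb])
```

A finger at the middle of the ribbon then corresponds to 2.5 V and to a centered pitch bend.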

Instruments controlled with a fingerboard include the Trautonium (1929), the Hellertion (1929), the Heliophon (1936), the Electro-Theremin (Tannerin, late 1950s), the Persephone (2004), and the Swarmatron (2004).

A ribbon controller is also used as an additional controller on the Yamaha CS-80 and CS-60, the Korg Prophecy and Korg Trinity series, Kurzweil synthesizers and Moog synthesizers, among others.

Rock musician Keith Emerson used one with the Moog modular synthesizer from 1970 onward. In the late 1980s, keyboards in the Berklee College of Music Synthesis Lab were equipped with ribbon controllers made of a thin membrane that generated MIDI data. They functioned as MIDI controllers, with their programming language printed on the surface, and as performance or expression tools. Designed by Jeff Tripp of Perfect Fretworks Co., they were known as Tripp Strips. Such ribbon controllers can serve as the main MIDI controller in place of a keyboard, as on the Continuum.

Breath Controllers

Electronic wind instruments (and wind synthesizers) are convenient for woodwind players, because they are designed to imitate wind instruments. They are usually either analog or MIDI controllers, and sometimes include their own sound modules (synthesizers). In addition to following key arrangements and fingerings, the controllers have breath-operated pressure transducers and may have velocity and bite sensors. Saxophone-like controllers include the Lyricon and products from Yamaha, Akai and Casio. Their mouthpieces range from clarinet to saxophone sizes. The Eigenharp, a bassoon-like controller, was released by Eigenlabs in 2009. Melodica-like controllers include the Martinetta (1975), the Variophon (1980), and Joseph Zawinul's Korg Pepe. A harmonica-like interface was the Millionizer 2000 (1983).

Trumpet-like controllers include products from Steiner/Crumar/Akai and Yamaha. Breath controllers can also be used to control conventional synthesizers, for example the Crumar Steiner Masters Touch, the Yamaha Breath Controller and compatible products.

Accordion controllers use pressure transducers on the bellows for articulation.

Others

Other controllers include the theremin, the touch buttons (touche d'intensité) on the Ondes Martenot, and various types of foot pedals. Envelope-following systems, the most sophisticated being the vocoder, are controlled by the energy or amplitude of an input audio signal. The talk box allows a sound to be shaped with the voice, although it is rarely categorized as a synthesizer.

MIDI control

Synthesizers became easier to integrate and synchronize with other electronic instruments and controllers with the introduction of the Musical Instrument Digital Interface (MIDI) in 1983. First proposed in 1981 by engineer Dave Smith of Sequential Circuits, the MIDI standard was developed by a consortium now known as the MIDI Manufacturers Association. MIDI is an opto-isolated serial interface and communications protocol. It allows information to be transmitted from one device or instrument to another in real time. This information includes note events, commands for selecting instrument presets (for example sounds, programs, or patches previously saved in the instrument's memory), control of performance parameters such as volume and effect levels, as well as timing, transport control and other types of data. MIDI interfaces are ubiquitous on musical equipment today and are commonly available on personal computers (PCs).
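The kinds of information listed above travel as short byte sequences: a status byte that names the message type and the channel, followed by one or two 7-bit data bytes. The sketch below builds a few common channel messages; the status values (0x80, 0x90, 0xB0, 0xC0) come from the MIDI standard, while the helper names are only illustrative.

```python
def _check(channel, *data):
    """Validate the channel (0-15) and the 7-bit data bytes (0-127)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be between 0 and 15")
    for byte in data:
        if not 0 <= byte <= 127:
            raise ValueError("data bytes must be between 0 and 127")


def note_on(channel, note, velocity):
    """Note event: start sounding a note."""
    _check(channel, note, velocity)
    return bytes([0x90 | channel, note, velocity])


def note_off(channel, note, velocity=0):
    """Note event: stop sounding a note."""
    _check(channel, note, velocity)
    return bytes([0x80 | channel, note, velocity])


def program_change(channel, program):
    """Select a stored preset (sound, program or patch)."""
    _check(channel, program)
    return bytes([0xC0 | channel, program])


def control_change(channel, controller, value):
    """Set a performance parameter; controller 7 is channel volume."""
    _check(channel, controller, value)
    return bytes([0xB0 | channel, controller, value])
```

For instance, `note_on(0, 60, 100)` yields the three bytes 0x90, 0x3C, 0x64, which ask the first channel to play middle C at a moderately strong velocity.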

The General MIDI (GM) software standard was devised in 1991 as a consistent way of describing a set of more than 200 sounds (including percussion) available on PCs for the playback of musical scores. For the first time, a given MIDI preset consistently produced a specific instrumental sound on any GM-compatible device. The Standard MIDI File (SMF) format (extension .mid) combined MIDI events with delta times (a form of time stamp) and became a popular standard for sharing musical scores between computers. When an SMF is played back on a built-in synthesizer (as in computers or cell phones), the hardware component of the MIDI interface design is often unnecessary.
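In a Standard MIDI File, each delta time is stored as a variable-length quantity: the value is split into 7-bit groups, most significant group first, and every byte except the last has its top bit set. The following is a minimal sketch of that encoding, with illustrative function names.

```python
def encode_delta_time(ticks):
    """Encode a delta time (in ticks) as an SMF variable-length quantity."""
    if not 0 <= ticks <= 0x0FFFFFFF:
        raise ValueError("delta time must fit in 28 bits")
    groups = [ticks & 0x7F]              # last byte: top bit clear
    ticks >>= 7
    while ticks:
        groups.append((ticks & 0x7F) | 0x80)   # earlier bytes: top bit set
        ticks >>= 7
    return bytes(reversed(groups))


def decode_delta_time(data):
    """Decode a variable-length quantity from the start of data.

    Returns (ticks, number_of_bytes_consumed).
    """
    value = 0
    for index, byte in enumerate(data):
        value = (value << 7) | (byte & 0x7F)
        if not byte & 0x80:              # top bit clear marks the last byte
            return value, index + 1
    raise ValueError("truncated variable-length quantity")
```

A delta time of 480 ticks, for example, is stored as the two bytes 0x83 0x60, while any value up to 127 takes a single byte.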

Open Sound Control (OSC) is another music data specification, designed for use over networks. In contrast to MIDI, OSC allows hundreds of synthesizers or computers to share musical performance data.

Typical Roles

Synth lead

In popular music, a synth lead is generally used to play the main melody of a song, but it is also used to create rhythmic or bass effects. Although most commonly heard in dance music, synth leads have been used extensively in hip-hop since the 1980s and in rock songs since the 1970s. Much modern music uses a synth lead as the musical hook that holds the listener's interest throughout the song.

Synth pad

A synth pad is a sustained chord or tone generated by a synthesizer. It is commonly used to create background harmony or atmosphere, in the same way that a string section is used in acoustic music. Typically a synth pad is played in long, sustained notes, and it sometimes holds the same note while the lead voice plays an entire musical phrase. The sounds used for synth pads commonly have a timbre similar to organs, strings or voices. Synth pads were used in much of the popular music of the 1980s, the era of polyphonic synthesizers, as well as in the then-new styles of smooth jazz and new-age music. One of the best-known songs of the era to incorporate a synth pad is "West End Girls" by Pet Shop Boys, who were noted users of the technique.

The main characteristic of a synth pad is its long attack and decay times with extended sustains. In some cases pulse-width modulation (PWM) of a square-wave oscillator is added to give a "vibrating" sound.
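Pulse-width modulation can be sketched as a pulse wave whose duty cycle (the fraction of each cycle spent at the high level) is slowly varied by an LFO. The code below is a naive, non-band-limited illustration; the function names, the sine-shaped LFO and the default sample rate are assumptions made for the example.

```python
import math


def pulse(phase, duty):
    """Pulse wave: +1.0 for the first `duty` fraction of a cycle, else -1.0.

    phase is measured in cycles, so 0.25 is a quarter of the way through.
    """
    return 1.0 if (phase % 1.0) < duty else -1.0


def pwm_samples(freq_hz, lfo_hz, depth, seconds, sample_rate=8000):
    """Generate a pulse wave whose width is modulated by a sine LFO.

    The duty cycle swings between 0.5 - depth and 0.5 + depth, so depth
    should stay below 0.5. A depth of 0.0 gives a plain square wave.
    """
    samples = []
    phase = 0.0
    for n in range(int(seconds * sample_rate)):
        t = n / sample_rate
        duty = 0.5 + depth * math.sin(2.0 * math.pi * lfo_hz * t)
        samples.append(pulse(phase, duty))
        phase += freq_hz / sample_rate   # advance by one sample
    return samples
```

As the duty cycle drifts away from 0.5, the harmonic content of the wave changes continuously, which is what gives the pad its animated quality.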

Synth bass

The "bass synth" (or "synth bass") is used to create sounds in the bass range, from simulations of an electric bass or double bass to distorted artificial sounds, by generating and combining signals of different frequencies. Synth bass patches can incorporate a range of sounds and tones, including analog and wavetable-style synthesis, FM-generated sounds, delay effects, distortion effects, and envelope filters. Modern digital synthesizers use a microprocessor-based frequency synthesizer to generate signals of different frequencies. While most bass synths are controlled by electronic keyboards or pedalboards, some players use an electric bass with a MIDI interface to trigger the bass synth.

During the late 1970s, miniaturized solid-state components made portable instruments possible, such as the Moog Taurus, a 13-note pedal keyboard played with the feet. The Moog Taurus was used in live performances in the pop, rock, and blues genres. One of the earliest uses of a bass synthesizer can be heard on Whistle Rymes (1972), a solo album by John Entwistle, bassist for The Who. Mike Rutherford, bassist for the band Genesis, used a "Mister Bassman" for the recording of the album Nursery Cryme in August 1971. Stevie Wonder introduced bass synthesizers to a wider audience in the early 1970s, most notably with "Superstition" (1972) and "Boogie On Reggae Woman" (1974). In 1977 the funk band Parliament used a bass synthesizer on the song "Flash Light". Lou Reed, considered a pioneer of electric guitar-generated textures, used a bass synth on the song "Families" from his album The Bells (1979).

When sequencers became widely available in the 1980s (such as the Synclavier), bass synths were often used to create fast, complex, syncopated bass lines. Bass synth patches incorporated a range of sounds and tones, including wavetable and analog synthesis, sounds generated by frequency modulation, delay effects, distortion effects, and envelope filters. A particularly influential bass synthesizer was the Roland TB-303, which followed the Firstman SQ-01. Released at the end of 1981, it included a sequencer and would later be associated with acid house music. The instrument gained popularity after Phuture used it on the single "Acid Tracks" in 1987.

During the 2000s, various manufacturers such as BOSS and Akai produced bass synth effects pedals for electric basses, which simulate the sounds of an analog or digital bass synthesizer. With these devices, a bass player can use the electric bass to generate synth bass sounds. The BOSS SYB-3 was one of the first pedals to emulate an analog bass synthesizer, using digital signal processing to produce sawtooth, square, or pulse waves with an adjustable filter cutoff. The Akai Bass Synth Pedal uses four oscillators with user-selectable parameters (attack, decay, envelope depth, dynamics, cutoff, and resonance). Bass synth software allows MIDI to be used to integrate bass sounds with other synthesizers or drum machines. Bass synths often include samples of vintage bass synths from the 1970s and 1980s. Some bass synths are built into pedalboards.

Arpeggiator

An arpeggiator is a feature available on various synthesizers that automatically steps through a sequence of notes based on an input chord and thereby generates an arpeggio. The notes can normally be transmitted to a MIDI sequencer for recording and later editing. An arpeggiator may have controls for the speed, range, and order in which notes are played: ascending, descending or in a random order. More advanced arpeggiators allow the user to step through complex pre-programmed sequences of notes or play multiple arpeggios at once. Some allow a pattern to be sustained after the keys have been released; in this way, a sequence of arpeggio patterns can be built up over time by pressing different keys one after the other. Arpeggiators are also commonly found in software sequencers. Some arpeggiators/sequencers have expanded functions such as a phrase sequencer, which allows the user to trigger multiple complex sets of sequenced data from a keyboard or input device, usually synchronized to the tempo of a master clock.
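The basic behavior can be sketched as a function that takes the held chord as MIDI note numbers and returns the notes in playing order. This is a simplified illustration with hypothetical parameter names: `mode` selects the order, `octaves` extends the range upward, and `steps` sets how many notes to produce. Timing and note lengths are left out.

```python
import random


def arpeggiate(chord, mode="up", octaves=1, steps=None, seed=None):
    """Return a list of MIDI note numbers stepping through a chord.

    chord   -- iterable of MIDI note numbers currently held
    mode    -- "up", "down" or "random"
    octaves -- how many octaves the pattern spans (range control)
    steps   -- number of notes to produce (default: one pass)
    seed    -- optional seed so that random mode is repeatable
    """
    notes = sorted(set(chord))
    pool = sorted(n + 12 * octave for octave in range(octaves) for n in notes)
    if not pool:
        return []
    count = len(pool) if steps is None else steps

    if mode == "random":
        rng = random.Random(seed)
        return [rng.choice(pool) for _ in range(count)]
    if mode == "up":
        pattern = pool
    elif mode == "down":
        pattern = pool[::-1]
    else:
        raise ValueError(f"unknown mode: {mode}")

    # Cycle through the pattern for as many steps as requested.
    return [pattern[i % len(pattern)] for i in range(count)]
```

Holding a C minor chord (60, 63, 67) in "up" mode over two octaves gives 60, 63, 67, 72, 75, 79 and then starts again from 60.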

Arpeggiators appear to have grown out of the accompaniment systems of electric organs from the mid-1960s to the 1970s, and possibly out of the hardware sequencers of the mid-1960s, such as the 8/16-step analog sequencer on modular synthesizers (the Buchla 100 series (1964/1966)). They were also fitted to keyboard instruments in the late 1970s and early 1980s. Examples can be found in the RMI Harmonic Synthesizer (1974), Roland Jupiter-8, Oberheim OB-8, Roland SH-101, Sequential Circuits Six-Trak and Korg Polysix. A famous example can be heard in the song "Rio" by Duran Duran, in which the arpeggiator of a Roland Jupiter-4 plays a C minor chord in random mode. Their popularity waned in the late 1980s and early 1990s, and they were absent from the most popular synthesizers of the day, but the revival of analog synthesizers during the 1990s and the use of fast arpeggios in various dance songs brought them back.

Further reading

- Gorges, Peter (2005). Programming Synthesizers. Bremen, Germany: Wizoobooks. ISBN 978-3-934903-48-7.

- Schmitz, Reinhard (2005). Analog Synthesis. Bremen, Germany: Wizoobooks. ISBN 978-3-934903-01-2.

- Shapiro, Peter (2000). Modulations: A History of Electronic Music: Throbbing Words on Sound. ISBN 1-891024-06-X.
