Scalar product

In mathematics, the scalar product, also known as the inner product or dot product, is an algebraic operation that takes two vectors and returns a scalar, and which satisfies certain conditions.

Of all the products that can be defined on different vector spaces, the most relevant is the so-called usual (or standard) scalar product on the Euclidean space $\mathbb{R}^n$. Given two vectors $\mathbf{u} = (u_1, u_2, \ldots, u_n)$ and $\mathbf{v} = (v_1, v_2, \ldots, v_n)$, their scalar product is defined as

$$\mathbf{u} \cdot \mathbf{v} = u_1 v_1 + u_2 v_2 + \cdots + u_n v_n,$$

that is, the sum of the component-by-component products. This expression is equivalent to the matrix product of a row matrix and a column matrix, so the usual scalar product can also be written as

$$\mathbf{u} \cdot \mathbf{v} = \mathbf{u}^T \mathbf{v},$$

where the vectors are written as columns by convention and $\mathbf{u}^T$ denotes the transpose of $\mathbf{u}$.
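As a minimal sketch in plain Python (no external libraries; the helper names `dot` and `matmul` are illustrative, not taken from any particular library), both forms can be computed side by side: the sum of component-by-component products, and the product of a $1 \times n$ row matrix by an $n \times 1$ column matrix, which yields a $1 \times 1$ matrix holding the same number.

```python
def dot(u, v):
    """Usual scalar product: sum of component-by-component products."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    return sum(ui * vi for ui, vi in zip(u, v))


def matmul(a, b):
    """Naive matrix product of a (p x q) and b (q x r), both given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


u = [1, 2, 3]
v = [4, -5, 6]

row_u = [u]                    # u^T as a 1 x n row matrix
col_v = [[vi] for vi in v]     # v as an n x 1 column matrix

print(dot(u, v))               # 12, since 1*4 + 2*(-5) + 3*6 = 12
print(matmul(row_u, col_v))    # [[12]], a 1 x 1 matrix holding the same scalar
```

In practice a numerical library would normally be used; the point here is only that the two formulas agree.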

The numerical value of the scalar product is equal to the product of the magnitudes (moduli) of the two vectors and the cosine of the angle between them, which allows the scalar product to be used to study typical concepts of Euclidean geometry in two and three dimensions, such as lengths, angles and orthogonality. The scalar product can also be defined in Euclidean spaces of dimension greater than three, and in general in real and complex vector spaces, to which these same geometric concepts can be extended. Vector spaces endowed with an inner product are called pre-Hilbert spaces.

The name dot product derives from the symbol used to denote this operation («·»). The name scalar product emphasizes the fact that the result is a scalar rather than a vector, unlike, for example, the vector (cross) product. Both names are usually reserved for the usual scalar product, while in the general case the term inner product is more common.

General definition

In a vector space, an inner product is a map

$$\langle \cdot, \cdot \rangle : V \times V \longrightarrow \mathbb{K}, \qquad (x, y) \longmapsto a = \langle x, y \rangle$$

where $V$ is a vector space and $\mathbb{K}$ is the field over which it is defined. The binary operation $\langle \cdot, \cdot \rangle$ (which takes two elements of $V$ as arguments and returns an element of the field $\mathbb{K}$) must satisfy the following conditions:

- Linearity on the left: $\langle ax + by, z \rangle = a\langle x, z \rangle + b\langle y, z \rangle$, and conjugate linearity on the right: $\langle x, ay + bz \rangle = \overline{a}\langle x, y \rangle + \overline{b}\langle x, z \rangle$.

- Hermiticity: $\langle x, y \rangle = \overline{\langle y, x \rangle}$.

- Positive definiteness: $\langle x, x \rangle \geq 0$, and $\langle x, x \rangle = 0$ if and only if $x = 0$,

where $x, y, z \in V$ are vectors, $a, b \in \mathbb{K}$ are scalars, and $\overline{c}$ denotes the complex conjugate of a scalar $c$.

Consequently, an inner product on a vector space is a sesquilinear, Hermitian, positive-definite form. If the underlying field $\mathbb{K}$ is real (e.g., $\mathbb{R}$), conjugation has no effect, so sesquilinearity reduces to bilinearity and Hermiticity reduces to symmetry. Therefore, on a real vector space, an inner product is a symmetric, positive-definite bilinear form.
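The conditions above can be spot-checked numerically. The sketch below assumes the standard inner product on $\mathbb{C}^n$, $\langle x, y \rangle = \sum_i x_i \overline{y_i}$ (one of the canonical examples listed later in this article), and tests each condition on random complex vectors; the helpers `inner`, `close` and `rand_vec` are illustrative names. Passing such a check on a few samples is evidence, not a proof.

```python
import cmath
import random


def inner(x, y):
    """Standard inner product on C^n: linear in x, conjugate-linear in y."""
    return sum(xi * yi.conjugate() for xi, yi in zip(x, y))


def close(p, q, tol=1e-9):
    """Compare two complex numbers up to rounding."""
    return cmath.isclose(p, q, rel_tol=tol, abs_tol=tol)


def rand_vec(n):
    return [complex(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(n)]


random.seed(0)
n = 4
x, y, z = rand_vec(n), rand_vec(n), rand_vec(n)
a, b = complex(2, -1), complex(0.5, 3)

# Linearity on the left: <ax + by, z> = a<x, z> + b<y, z>
left = inner([a * xi + b * yi for xi, yi in zip(x, y)], z)
assert close(left, a * inner(x, z) + b * inner(y, z))

# Conjugate linearity on the right: <x, ay + bz> = conj(a)<x, y> + conj(b)<x, z>
right = inner(x, [a * yi + b * zi for yi, zi in zip(y, z)])
assert close(right, a.conjugate() * inner(x, y) + b.conjugate() * inner(x, z))

# Hermiticity: <x, y> = conj(<y, x>)
assert close(inner(x, y), inner(y, x).conjugate())

# Positive definiteness: <x, x> is real and positive for x != 0, and <0, 0> = 0
xx = inner(x, x)
assert abs(xx.imag) < 1e-12 and xx.real > 0
assert inner([0j] * n, [0j] * n) == 0
print("all conditions hold for this sample")
```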

Alternatively, the operation is often denoted by a dot ($\cdot$), so that the product of the vectors $\mathbf{u}$ and $\mathbf{v}$ is written $\mathbf{u} \cdot \mathbf{v}$.

A vector space over the field $\mathbb{R}$ or $\mathbb{C}$ endowed with an inner product is called a pre-Hilbert space or unitary space. If it is also complete with respect to the norm induced by the inner product, it is called a Hilbert space. If the dimension is finite and the field is that of the real numbers, it is called a Euclidean space.

Every inner product induces a quadratic form, $x \mapsto \langle x, x \rangle$, which is positive definite and is given by the product of a vector with itself. Likewise, it induces a vector norm as follows:

$$\|x\| := \sqrt{\langle x, x \rangle},$$

that is, the norm of a vector is the square root of the image of that vector in the associated quadratic form.

Inner Product Examples

The following list gives some inner products commonly studied in the theory of pre-Hilbert spaces. All of these products, called canonical, are just a few of the infinitely many inner products that can be defined on their respective spaces.

- On the vector space $\mathbb{R}^n$, the inner product is usually defined (and called, in this particular case, the dot product) by:

- $\mathbf{A} \cdot \mathbf{B} = (a_1, a_2, \ldots, a_n) \cdot (b_1, b_2, \ldots, b_n) = a_1 b_1 + a_2 b_2 + \cdots + a_n b_n = \sum_{i=1}^{n} a_i b_i.$

- On the vector space $\mathbb{C}^n$, the inner product is usually defined by:

- $\mathbf{A} \cdot \mathbf{B} = (a_1, a_2, \ldots, a_n) \cdot (b_1, b_2, \ldots, b_n) = a_1 \overline{b_1} + a_2 \overline{b_2} + \cdots + a_n \overline{b_n} = \sum_{i=1}^{n} a_i \overline{b_i},$

- where $\overline{b_i}$ denotes the complex conjugate of $b_i$.

- On the vector space of $m \times n$ matrices with real entries:

- $\mathbf{A} \cdot \mathbf{B} = \operatorname{tr}(A^T B),$

- where $\operatorname{tr}(M)$ is the trace of the matrix $M$ and $A^T$ is the transpose of $A$.

- On the vector space of $m \times n$ matrices with complex entries:

- $\mathbf{A} \cdot \mathbf{B} = \operatorname{tr}(A^* B),$

- where $\operatorname{tr}(M)$ is the trace of the matrix $M$ and $A^*$ is the conjugate transpose of $A$.

- On the vector space of continuous complex-valued functions on the interval $[a, b]$, denoted $\mathcal{C}[a, b]$:

- $\mathbf{f} \cdot \mathbf{g} = \int_a^b f(x)\, \overline{g(x)}\, \mathrm{d}x.$

- On the vector space of polynomials of degree less than or equal to $n$, given $n + 1$ real numbers $x_1, x_2, \ldots, x_{n+1}$ such that $x_1 < x_2 < \cdots < x_n < x_{n+1}$:

- $\mathbf{p} \cdot \mathbf{q} = p(x_1)q(x_1) + p(x_2)q(x_2) + \cdots + p(x_n)q(x_n) + p(x_{n+1})q(x_{n+1}) = \sum_{i=1}^{n+1} p(x_i)\, q(x_i).$
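Several of these canonical products are straightforward to compute directly. The following plain-Python sketch (function names are illustrative) evaluates the real matrix product $\operatorname{tr}(A^T B)$ both literally and as the equivalent sum of entrywise products, the polynomial product on a set of distinct nodes, and the function product on $\mathcal{C}[a, b]$. The last one is only approximated, using a midpoint-rule quadrature chosen here for simplicity.

```python
import cmath
import math


def trace_inner(A, B):
    """<A, B> = tr(A^T B) for real m x n matrices given as lists of rows.
    Since (A^T B)_jj = sum_i A_ij B_ij, the trace is the sum of entrywise products."""
    return sum(a * b for row_a, row_b in zip(A, B) for a, b in zip(row_a, row_b))


def trace_inner_explicit(A, B):
    """The same product computed literally: transpose, multiply, take the trace."""
    m, n = len(A), len(A[0])
    At = [[A[i][j] for i in range(m)] for j in range(n)]            # n x m
    AtB = [[sum(At[r][k] * B[k][c] for k in range(m)) for c in range(n)]
           for r in range(n)]                                        # n x n
    return sum(AtB[k][k] for k in range(n))


def poly_inner(p, q, nodes):
    """<p, q> = sum_i p(x_i) q(x_i) over n + 1 distinct nodes (deg p, q <= n)."""
    return sum(p(x) * q(x) for x in nodes)


def func_inner(f, g, a, b, steps=10_000):
    """Midpoint-rule approximation of the integral of f(x) * conj(g(x)) over [a, b]."""
    h = (b - a) / steps
    total = 0j
    for k in range(steps):
        x = a + (k + 0.5) * h
        total += f(x) * complex(g(x)).conjugate()
    return total * h


A = [[1, 2, 3], [4, 5, 6]]
B = [[0, 1, 0], [2, 0, -1]]
print(trace_inner(A, B), trace_inner_explicit(A, B))    # 4 4

nodes = [-1, 0, 1]                                      # n + 1 = 3 nodes, degree <= 2
print(poly_inner(lambda x: x, lambda x: x ** 2, nodes))  # 0

print(func_inner(lambda x: cmath.exp(1j * x),
                 lambda x: cmath.exp(1j * x), 0, 2 * math.pi))  # approximately 2*pi
```

With the nodes $-1, 0, 1$, the polynomials $x$ and $x^2$ turn out to be orthogonal, since $(-1)(1) + 0 + (1)(1) = 0$.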

Inner Product Properties

Let $\mathbf{A}$, $\mathbf{B}$ and $\mathbf{C}$ be vectors, and let $\alpha$ and $\beta$ be scalars:

- It is linear in the first argument:

$$\langle \alpha\mathbf{A} + \beta\mathbf{B}, \mathbf{C} \rangle = \alpha\langle \mathbf{A}, \mathbf{C} \rangle + \beta\langle \mathbf{B}, \mathbf{C} \rangle$$

- It is a Hermitian form:

$$\langle \mathbf{A}, \mathbf{B} \rangle = \overline{\langle \mathbf{B}, \mathbf{A} \rangle}.$$

- In a real vector space, this condition reduces to

$$\mathbf{A} \cdot \mathbf{B} = \mathbf{B} \cdot \mathbf{A},$$

- so in this case the inner product is commutative (or symmetric).

- Combined, the two previous properties mean that the inner product is sesquilinear, that is, conjugate-linear in the second argument:

$$\langle \mathbf{A}, \alpha\mathbf{B} + \beta\mathbf{C} \rangle = \overline{\alpha}\langle \mathbf{A}, \mathbf{B} \rangle + \overline{\beta}\langle \mathbf{A}, \mathbf{C} \rangle$$

- again, in the real case, this property reduces to linearity in the second argument.

- Distributivity with respect to addition:

$$\langle \mathbf{A}, \mathbf{B} + \mathbf{C} \rangle = \langle \mathbf{A}, \mathbf{B} \rangle + \langle \mathbf{A}, \mathbf{C} \rangle$$

$$\langle \mathbf{A} + \mathbf{B}, \mathbf{C} \rangle = \langle \mathbf{A}, \mathbf{C} \rangle + \langle \mathbf{B}, \mathbf{C} \rangle$$

- Positivity: in real or complex vector spaces, the inner product of a vector with itself is a non-negative real number:

$$\langle \mathbf{A}, \mathbf{A} \rangle \geq 0.$$

Consequently, it is possible to define a vector norm $\|\mathbf{A}\| = \sqrt{\langle \mathbf{A}, \mathbf{A} \rangle}$, which is defined for every vector of the space and satisfies all the norm axioms. Therefore, every pre-Hilbert space is a normed space. For the usual scalar product, the associated norm equals the length of the vector in Euclidean space.

- Cauchy-Bunyakovsky-Schwarz inequality:

$$|\langle \mathbf{A}, \mathbf{B} \rangle| \leq \|\mathbf{A}\| \cdot \|\mathbf{B}\|.$$

- As a result, in a real vector space, any two non-zero vectors $\mathbf{A}$ and $\mathbf{B}$ satisfy the inequalities:

$$-1 \leq \frac{\langle \mathbf{A}, \mathbf{B} \rangle}{\|\mathbf{A}\| \cdot \|\mathbf{B}\|} \leq 1.$$

For the usual scalar product, this quotient equals the cosine of the angle between the two vectors. This motivates defining the angle between two non-zero vectors of an arbitrary real pre-Hilbert space as the angle $\theta \in [0, \pi]$ such that

$$\cos\theta = \frac{\langle \mathbf{A}, \mathbf{B} \rangle}{\|\mathbf{A}\| \cdot \|\mathbf{B}\|}.$$
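A small sketch of this definition, written so that the inner product is passed in as a function (an illustrative design choice, not a standard API): it spot-checks the Cauchy-Bunyakovsky-Schwarz inequality on random vectors of $\mathbb{R}^5$ with the usual product, then reuses the same `angle` helper with the polynomial product from the examples above, on the illustrative nodes $-1, 0, 1$. The quotient is clamped to $[-1, 1]$ before taking the arc cosine, because rounding can push it marginally outside that interval.

```python
import math
import random


def norm(a, inner):
    """Norm induced by an inner product: sqrt(<a, a>)."""
    return math.sqrt(inner(a, a))


def angle(a, b, inner):
    """Angle in [0, pi] between non-zero vectors of a real inner product space."""
    na, nb = norm(a, inner), norm(b, inner)
    if na == 0 or nb == 0:
        raise ValueError("the angle is undefined for the null vector")
    c = inner(a, b) / (na * nb)
    # Rounding can push c marginally outside [-1, 1]; clamp before acos.
    return math.acos(max(-1.0, min(1.0, c)))


def dot(u, v):
    """Usual scalar product on R^n."""
    return sum(ui * vi for ui, vi in zip(u, v))


def poly_dot(p, q, nodes=(-1, 0, 1)):
    """Polynomial product on the nodes -1, 0, 1 (polynomials of degree <= 2)."""
    return sum(p(x) * q(x) for x in nodes)


# Spot-check Cauchy-Bunyakovsky-Schwarz on random vectors of R^5 (not a proof)
random.seed(1)
for _ in range(1000):
    a = [random.uniform(-10, 10) for _ in range(5)]
    b = [random.uniform(-10, 10) for _ in range(5)]
    assert abs(dot(a, b)) <= norm(a, dot) * norm(b, dot) + 1e-9

print(angle([1, 0], [0, 1], dot))                        # pi / 2
print(angle(lambda x: 1, lambda x: x + 1, poly_dot))     # acos(3 / sqrt(15))
```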

The usual dot product in real Euclidean space

Analytic expression

Let $\mathbf{U} = (U_1, U_2, U_3)^T$ and $\mathbf{V} = (V_1, V_2, V_3)^T$ be vectors in three-dimensional Euclidean space $\mathbb{R}^3$. The scalar product of $\mathbf{U}$ and $\mathbf{V}$ is defined as the matrix product:

$$\mathbf{U} \cdot \mathbf{V} = \mathbf{U}^T \mathbf{V} = \begin{bmatrix} U_1 & U_2 & U_3 \end{bmatrix} \begin{bmatrix} V_1 \\ V_2 \\ V_3 \end{bmatrix} = U_1 V_1 + U_2 V_2 + U_3 V_3$$

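If the vectors are expressed through their coordinates with respect to a basis $\mathcal{B} = \{\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3\}$, say $\mathbf{U} = u_1\mathbf{e}_1 + u_2\mathbf{e}_2 + u_3\mathbf{e}_3$ and $\mathbf{V} = v_1\mathbf{e}_1 + v_2\mathbf{e}_2 + v_3\mathbf{e}_3$, then applying the properties of the scalar product gives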

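$$\begin{aligned}
\mathbf{U} \cdot \mathbf{V} &= (u_1\mathbf{e}_1 + u_2\mathbf{e}_2 + u_3\mathbf{e}_3) \cdot (v_1\mathbf{e}_1 + v_2\mathbf{e}_2 + v_3\mathbf{e}_3) \\
&= u_1 v_1\, \mathbf{e}_1 \cdot \mathbf{e}_1 + u_2 v_1\, \mathbf{e}_2 \cdot \mathbf{e}_1 + u_3 v_1\, \mathbf{e}_3 \cdot \mathbf{e}_1 \\
&\quad + u_1 v_2\, \mathbf{e}_1 \cdot \mathbf{e}_2 + u_2 v_2\, \mathbf{e}_2 \cdot \mathbf{e}_2 + u_3 v_2\, \mathbf{e}_3 \cdot \mathbf{e}_2 \\
&\quad + u_1 v_3\, \mathbf{e}_1 \cdot \mathbf{e}_3 + u_2 v_3\, \mathbf{e}_2 \cdot \mathbf{e}_3 + u_3 v_3\, \mathbf{e}_3 \cdot \mathbf{e}_3
\end{aligned}$$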

The above expression can be condensed in matrix form as

$$\mathbf{U} \cdot \mathbf{V} = \begin{bmatrix} u_1 & u_2 & u_3 \end{bmatrix} \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix} \begin{bmatrix} v_1 \\ v_2 \\ v_3 \end{bmatrix} = U_{\mathcal{B}}^T A\, V_{\mathcal{B}},$$

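where $A$ is the Gram matrix of the product, whose entries are the scalar products of the basis vectors, $a_{ij} = \mathbf{e}_i \cdot \mathbf{e}_j$, and $U_{\mathcal{B}}$ and $V_{\mathcal{B}}$ are the columns of coordinates of $\mathbf{U}$ and $\mathbf{V}$ in the basis $\mathcal{B}$. In the particular case where the basis is orthonormal, the Gram matrix is the identity matrix.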

The previous expressions generalize to spaces of $n$ dimensions. If $\mathbf{U}$ and $\mathbf{V}$ are vectors in $\mathbb{R}^n$, then:

$$\mathbf{U} \cdot \mathbf{V} = U_1 V_1 + U_2 V_2 + \cdots + U_n V_n.$$

Similarly, given the coordinates of the vectors with respect to a basis $\mathcal{B} = \{\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_n\}$, the product is given by

$$\mathbf{U} \cdot \mathbf{V} = U_{\mathcal{B}}^T A\, V_{\mathcal{B}},$$

where the Gram matrix $A$ is of order $n \times n$.
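To illustrate the Gram-matrix form, the sketch below picks a non-orthonormal basis of $\mathbb{R}^3$ (an arbitrary illustrative choice), builds its Gram matrix from the pairwise scalar products of the basis vectors, and checks that $U_{\mathcal{B}}^T A V_{\mathcal{B}}$ computed from coordinates agrees with the ordinary component formula applied to the same vectors written in Cartesian components. The helper names are hypothetical.

```python
def dot(u, v):
    """Usual scalar product from Cartesian components."""
    return sum(ui * vi for ui, vi in zip(u, v))


def gram_matrix(basis):
    """A[i][j] = e_i . e_j for basis vectors given in Cartesian components."""
    return [[dot(ei, ej) for ej in basis] for ei in basis]


def bilinear(u, A, v):
    """u^T A v = sum_i sum_j u_i A_ij v_j."""
    n = len(u)
    return sum(u[i] * A[i][j] * v[j] for i in range(n) for j in range(n))


def from_coords(coords, basis):
    """Cartesian components of sum_k coords[k] * basis[k]."""
    return [sum(c * e[k] for c, e in zip(coords, basis)) for k in range(len(basis[0]))]


basis = [(1, 0, 0), (1, 1, 0), (0, 1, 2)]   # not orthonormal (illustrative choice)
A = gram_matrix(basis)
u_B, v_B = (1, 2, -1), (3, 0, 1)           # coordinates with respect to this basis

print(A)                                                        # [[1, 1, 0], [1, 2, 1], [0, 1, 5]]
print(bilinear(u_B, A, v_B))                                    # 6
print(dot(from_coords(u_B, basis), from_coords(v_B, basis)))    # 6, the same value
```

With the standard (orthonormal) basis, `gram_matrix` returns the identity matrix and `bilinear` reduces to `dot`.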

Vector modulus

The dot product of a vector with itself is equal to the sum of the squares of its components:

$$\mathbf{U} \cdot \mathbf{U} = U_1^2 + U_2^2 + \cdots + U_n^2.$$

Since it is a sum of squares, all the terms are non-negative, and it is zero only when all the components are zero, that is, when $\mathbf{U}$ is the null vector. Otherwise it is a positive real number that, by repeated application of the Pythagorean theorem, equals the square of the distance between the origin of coordinates and the endpoint of the vector $\mathbf{U}$. In summary:

$$\mathbf{U} \cdot \mathbf{U} = \|\mathbf{U}\|^2,$$

In other words, the magnitude (or norm) of the vector U is the square root of the scalar product of U with itself.

A vector whose magnitude equals one is called a unit vector, and it is usually denoted with a circumflex accent, as $\hat{\mathbf{u}}$. A unit vector in the direction of any non-zero vector $\mathbf{v}$ can always be obtained by multiplying it by the reciprocal of its norm, $\hat{\mathbf{v}} = \mathbf{v} / \|\mathbf{v}\|$. This process is called normalization of the vector $\mathbf{v}$.
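The norm and the normalization step translate directly into code. This is a minimal plain-Python sketch (the names `norm` and `normalize` are illustrative); it refuses the null vector, for which no direction is defined.

```python
import math


def norm(v):
    """Euclidean norm: square root of the scalar product of v with itself."""
    return math.sqrt(sum(vi * vi for vi in v))


def normalize(v):
    """Unit vector in the direction of a non-zero vector v, i.e. v / ||v||."""
    n = norm(v)
    if n == 0:
        raise ValueError("the null vector cannot be normalized")
    return [vi / n for vi in v]


v = [3, 4, 12]
print(norm(v))            # 13.0, since 9 + 16 + 144 = 169
v_hat = normalize(v)
print(v_hat)              # 3/13, 4/13 and 12/13 as floats
print(norm(v_hat))        # 1.0 up to rounding
```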

Angle between two vectors

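Applying the law of cosines to the triangle formed by two vectors $\mathbf{u}$ and $\mathbf{v}$, whose third side is $\mathbf{v} - \mathbf{u}$ and where $\theta$ is the angle between $\mathbf{u}$ and $\mathbf{v}$, we have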

$$\|\mathbf{v} - \mathbf{u}\|^2 = \|\mathbf{u}\|^2 + \|\mathbf{v}\|^2 - 2\,\|\mathbf{u}\|\,\|\mathbf{v}\|\cos\theta,$$

which can be rewritten as

$$2\,\|\mathbf{u}\|\,\|\mathbf{v}\|\cos\theta = \|\mathbf{u}\|^2 + \|\mathbf{v}\|^2 - \|\mathbf{v} - \mathbf{u}\|^2.$$

The square of the norm of each of these vectors is:

$$\|\mathbf{u}\|^2 = \mathbf{u} \cdot \mathbf{u} = u_1^2 + u_2^2 + \cdots + u_n^2,$$

$$\|\mathbf{v}\|^2 = \mathbf{v} \cdot \mathbf{v} = v_1^2 + v_2^2 + \cdots + v_n^2,$$

$$\|\mathbf{v} - \mathbf{u}\|^2 = (\mathbf{v} - \mathbf{u}) \cdot (\mathbf{v} - \mathbf{u}) = (v_1 - u_1)^2 + (v_2 - u_2)^2 + \cdots + (v_n - u_n)^2.$$

Substituting into the previous expression and using $(v_i - u_i)^2 = v_i^2 - 2u_i v_i + u_i^2$, all the terms of the form $u_i^2$ and $v_i^2$ cancel, leaving

$$2\,\|\mathbf{u}\|\,\|\mathbf{v}\|\cos\theta = 2u_1 v_1 + 2u_2 v_2 + \cdots + 2u_n v_n = 2\,\mathbf{u} \cdot \mathbf{v},$$

from which, if both vectors have non-zero norm, we can solve for $\cos\theta$:

$$\cos\theta = \frac{\mathbf{u} \cdot \mathbf{v}}{\|\mathbf{u}\|\,\|\mathbf{v}\|} = \frac{\mathbf{u} \cdot \mathbf{v}}{\sqrt{\mathbf{u} \cdot \mathbf{u}}\,\sqrt{\mathbf{v} \cdot \mathbf{v}}}.$$

The denominator of this expression is always positive, so the sign of the scalar product coincides with that of the cosine of the angle $\theta$. The scalar product is positive when this angle is acute (less than a right angle), zero when it is a right angle, and negative when it is obtuse (greater than a right angle).
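The derivation can be replayed numerically: the sketch below (plain Python, illustrative names) computes $\cos\theta$ once from the three side lengths via the law of cosines and once from the final dot-product formula, and classifies the angle by the sign of $\mathbf{u} \cdot \mathbf{v}$. The sign test compares against exact zero, which is fine for the integer inputs used here; with floating-point data a tolerance would be advisable.

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def cos_from_sides(u, v):
    """Law of cosines on the triangle with sides ||u||, ||v|| and ||v - u||."""
    a2, b2 = dot(u, u), dot(v, v)
    diff = [vi - ui for ui, vi in zip(u, v)]
    c2 = dot(diff, diff)
    return (a2 + b2 - c2) / (2 * math.sqrt(a2) * math.sqrt(b2))


def cos_from_dot(u, v):
    """cos(theta) = u.v / (sqrt(u.u) sqrt(v.v)), for non-zero u and v."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))


def classify(u, v):
    """The sign of u.v matches the sign of cos(theta)."""
    p = dot(u, v)
    if p > 0:
        return "acute"
    if p < 0:
        return "obtuse"
    return "right"


u, v = [2, 1, -1], [1, 3, 2]
print(cos_from_sides(u, v), cos_from_dot(u, v))   # equal up to rounding
print(math.degrees(math.acos(cos_from_dot(u, v))))
print(classify(u, v), classify([1, 0], [0, 1]), classify([1, 0], [-1, 1]))  # acute right obtuse
```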

Geometric definition

The above expression for the cosine of the angle between two vectors can be used to give an alternative definition of the scalar product. This geometric definition does not depend on the coordinate system, and therefore not on the basis chosen for the vector space. Nevertheless, it is equivalent to the analytic definition given above in terms of the components of the vectors with respect to an orthonormal basis.

The scalar product of two vectors in a Euclidean space, usually denoted $\mathbf{A} \cdot \mathbf{B}$, is geometrically defined as the product of their magnitudes and the cosine of the angle $\theta$ that they form:

$$\mathbf{A} \cdot \mathbf{B} = \|\mathbf{A}\|\,\|\mathbf{B}\|\cos\theta = AB\cos\theta$$

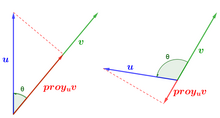
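As a numerical illustration of this equivalence (a check, not a proof), the sketch below builds two plane vectors from a chosen magnitude and direction, so that the angle between them is known by construction to be $60^\circ$. It compares $\|\mathbf{A}\|\,\|\mathbf{B}\|\cos\theta$ with the component formula, both in the original axes and after rotating both vectors by the same arbitrary angle, which amounts to switching to another orthonormal coordinate system. All values and helper names are illustrative choices.

```python
import math


def from_polar(r, phi):
    """Plane vector with magnitude r and direction phi (radians)."""
    return (r * math.cos(phi), r * math.sin(phi))


def dot(u, v):
    """Component formula for the usual scalar product in R^2."""
    return u[0] * v[0] + u[1] * v[1]


def rotate(u, alpha):
    """Rotate a plane vector by alpha radians (an orthonormal change of axes)."""
    c, s = math.cos(alpha), math.sin(alpha)
    return (c * u[0] - s * u[1], s * u[0] + c * u[1])


# Magnitudes 3 and 2, directions 20 and 80 degrees: the angle between them is 60 degrees
A = from_polar(3.0, math.radians(20))
B = from_polar(2.0, math.radians(80))

geometric = 3.0 * 2.0 * math.cos(math.radians(60))   # ||A|| ||B|| cos(theta)
analytic = dot(A, B)                                   # sum of component products
rotated = dot(rotate(A, 1.234), rotate(B, 1.234))     # same vectors, rotated axes
print(geometric, analytic, rotated)                    # all approximately 3.0
```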

Projection of one vector onto another

The orthogonal projection of a vector $\mathbf{u}$ onto (the direction of) a non-zero vector $\mathbf{v}$ is defined as the vector parallel to $\mathbf{v}$ that results from projecting $\mathbf{u}$ orthogonally onto the subspace spanned by $\mathbf{v}$. Informally, it is the shadow that one vector casts on the other.

The length of the projection of $\mathbf{u}$ onto the direction of $\mathbf{v}$, taken with a sign (negative when $\theta$ is obtuse), is $\|P_{\mathbf{v}}(\mathbf{u})\| = \|\mathbf{u}\|\cos\theta$, so that

$$\mathbf{u} \cdot \mathbf{v} = \|P_{\mathbf{v}}(\mathbf{u})\| \cdot \|\mathbf{v}\|,$$

that is, the scalar product of two vectors can also be defined as the product of the magnitude of one of them by the projection of the other onto it.

Consequently, the projection can be computed as the scalar product of the two vectors, divided by the squared magnitude of $\mathbf{v}$, multiplied by the vector $\mathbf{v}$:

- $P_{\mathbf{v}}(\mathbf{u}) = \dfrac{\langle \mathbf{u}, \mathbf{v} \rangle}{\|\mathbf{v}\|} \cdot \dfrac{\mathbf{v}}{\|\mathbf{v}\|} = \dfrac{\langle \mathbf{u}, \mathbf{v} \rangle}{\langle \mathbf{v}, \mathbf{v} \rangle}\, \mathbf{v}.$

For example, in two-dimensional Euclidean space, the projection of the vector $\mathbf{u} = (4, 5)$ onto the vector $\mathbf{v} = (5, -2)$ is the vector

- $P_{\mathbf{v}}(\mathbf{u}) = \dfrac{4 \cdot 5 + 5 \cdot (-2)}{5^2 + (-2)^2}\,(5, -2) = \dfrac{10}{29}\,(5, -2) = \left(\dfrac{50}{29}, -\dfrac{20}{29}\right).$
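The same computation as a short Python sketch, using exact rational arithmetic from the standard `fractions` module so the result matches the fractions above exactly; `project` is an illustrative helper that assumes integer (or `Fraction`) components. It also checks that the residual $\mathbf{u} - P_{\mathbf{v}}(\mathbf{u})$ is orthogonal to $\mathbf{v}$, which is what makes the projection orthogonal.

```python
from fractions import Fraction


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def project(u, v):
    """Orthogonal projection of u onto the direction of a non-zero vector v:
    P_v(u) = (<u, v> / <v, v>) v, computed exactly with Fractions."""
    vv = dot(v, v)
    if vv == 0:
        raise ValueError("cannot project onto the null vector")
    k = Fraction(dot(u, v), vv)
    return tuple(k * vi for vi in v)


u, v = (4, 5), (5, -2)
p = project(u, v)
print(p)                          # (Fraction(50, 29), Fraction(-20, 29))

# The residual u - P_v(u) is orthogonal to v
residual = tuple(ui - pi for ui, pi in zip(u, p))
print(dot(residual, v))           # 0
```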

In the particular case of projecting onto the direction of a unit vector $\mathbf{e}$, the expression for the projection of a vector $\mathbf{u}$ onto $\mathbf{e}$ takes the simplified form

$$P_{\mathbf{e}}(\mathbf{u}) = \langle \mathbf{u}, \mathbf{e} \rangle\, \mathbf{e}.$$

The above expressions are used in the Gram-Schmidt method to obtain an orthonormal basis.
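A minimal sketch of the classical Gram-Schmidt process in plain Python, built only from the simplified projection $P_{\mathbf{e}}(\mathbf{u}) = \langle \mathbf{u}, \mathbf{e} \rangle\, \mathbf{e}$ onto unit vectors: from each input vector, the projections onto the unit vectors already found are subtracted, and the remainder is normalized. The function name, the absolute tolerance used to detect linear dependence (suited to vectors of moderate size), and the sample vectors are assumptions for illustration.

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def gram_schmidt(vectors, tol=1e-12):
    """Classical Gram-Schmidt: from each vector u, subtract its projections
    P_e(u) = <u, e> e onto the unit vectors e found so far, then normalize.
    Raises ValueError if the input vectors are linearly dependent."""
    basis = []
    for u in vectors:
        w = list(u)
        for e in basis:
            c = dot(u, e)                                # <u, e>, with ||e|| = 1
            w = [wi - c * ei for wi, ei in zip(w, e)]    # subtract P_e(u)
        n = math.sqrt(dot(w, w))
        if n < tol:
            raise ValueError("the vectors are linearly dependent")
        basis.append([wi / n for wi in w])               # normalize
    return basis


E = gram_schmidt([[1, 1, 0], [1, 0, 1], [0, 1, 1]])
for ei in E:
    print([round(dot(ei, ej), 12) for ej in E])          # approximately rows of the identity
```

In floating point, the modified variant (taking the scalar product with the running remainder `w` instead of the original `u`) is generally more stable; the classical form is kept here because it mirrors the formula most directly.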

Orthogonal Vectors

Two vectors are orthogonal, or perpendicular, when they form a right angle. Two non-zero vectors are orthogonal if and only if their scalar product is zero:

$$\mathbf{A} \cdot \mathbf{B} = 0 \qquad \Leftrightarrow \qquad \mathbf{A} \perp \mathbf{B}$$

since $\cos\frac{\pi}{2} = 0$.
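In floating-point code, testing for an exactly zero product is fragile, so the sketch below (illustrative names, plain Python) compares $|\mathbf{A} \cdot \mathbf{B}|$ with a small tolerance relative to $\|\mathbf{A}\|\,\|\mathbf{B}\|$; the tolerance value is an arbitrary choice. With this test the null vector counts as orthogonal to every vector, matching $\mathbf{0} \cdot \mathbf{B} = 0$.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def is_orthogonal(u, v, tol=1e-12):
    """True when u . v is zero up to a tolerance relative to ||u|| ||v||."""
    scale = (dot(u, u) * dot(v, v)) ** 0.5
    return abs(dot(u, v)) <= tol * scale


# In the plane, (a, b) and (-b, a) are always perpendicular: a*(-b) + b*a = 0
print(is_orthogonal((3, 7), (-7, 3)))           # True
print(is_orthogonal((1, 2, 3), (3, 0, -1)))     # True: 3 + 0 - 3 = 0
print(is_orthogonal((1, 2, 3), (1, 1, 1)))      # False: 1 + 2 + 3 = 6
```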

Vectors parallel or in the same direction

Two vectors are parallel or have the same direction if the angle they make is 0 radians (0 degrees) or π radians (180 degrees).

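When two vectors form a zero angle, the cosine equals one, so the scalar product equals the product of their magnitudes; when the angle is π, the cosine equals −1 and the scalar product equals minus that product. Hence, for non-zero vectors: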

$$\mathbf{A} \cdot \mathbf{B} = AB\cos\theta, \qquad |\mathbf{A} \cdot \mathbf{B}| = AB \;\Leftrightarrow\; |\cos\theta| = 1 \;\Leftrightarrow\; \mathbf{A} \parallel \mathbf{B}$$
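Using this equality case, a sketch of a parallelism test in plain Python (the function name and tolerance are illustrative assumptions) compares $|\mathbf{A} \cdot \mathbf{B}|$ with $\|\mathbf{A}\|\,\|\mathbf{B}\|$ and, when they agree, uses the sign of the product to tell $\theta = 0$ from $\theta = \pi$.

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def parallel_direction(u, v, tol=1e-12):
    """For non-zero u, v: return 'same' if theta = 0, 'opposite' if theta = pi,
    and None if they are not parallel, by testing |u . v| = ||u|| ||v||."""
    p = dot(u, v)
    nn = math.sqrt(dot(u, u)) * math.sqrt(dot(v, v))
    if nn == 0:
        raise ValueError("this test is meant for non-zero vectors")
    if abs(abs(p) - nn) > tol * nn:
        return None
    return "same" if p > 0 else "opposite"


print(parallel_direction((1, 2, 3), (2, 4, 6)))      # same
print(parallel_direction((1, 2, 3), (-3, -6, -9)))   # opposite
print(parallel_direction((1, 2, 3), (1, 2, 4)))      # None
```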

Generalizations

Quadratic forms

Given a symmetric, positive-definite bilinear form $B(\cdot, \cdot)$ defined on a vector space $V = \mathbb{R}^n$, a different scalar product can be defined by the formula:

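$$(\mathbf{u}, \mathbf{v})_B = \begin{bmatrix} u_1 & \cdots & u_n \end{bmatrix} \begin{bmatrix} B_{11} & \cdots & B_{1n} \\ \vdots & \ddots & \vdots \\ B_{n1} & \cdots & B_{nn} \end{bmatrix} \begin{bmatrix} v_1 \\ \vdots \\ v_n \end{bmatrix} = \sum_{i=1}^{n} \sum_{j=1}^{n} B_{ij}\, u_i v_j$$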

Where:

- $B_{ij} := B(\mathbf{e}_i, \mathbf{e}_j)$

- $\{\mathbf{e}_1, \ldots, \mathbf{e}_n\}$ is a basis of the vector space $V$

It can be verified that the operation $(\cdot, \cdot)_B : V \times V \to \mathbb{R}$ defined above satisfies all the properties of a scalar product.
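A plain-Python sketch of $(\mathbf{u}, \mathbf{v})_B$ with the matrix of the form given in a basis, together with checks of the two hypotheses that make it a scalar product. Symmetry is checked entrywise; positive definiteness is tested here by attempting a Cholesky factorization $B = LL^T$, a standard criterion for symmetric matrices that is swapped in for illustration and is not part of the text above. The matrices and function names are illustrative.

```python
import math


def form(u, B, v):
    """(u, v)_B = sum_i sum_j B[i][j] u_i v_j."""
    n = len(u)
    return sum(B[i][j] * u[i] * v[j] for i in range(n) for j in range(n))


def is_symmetric(B):
    n = len(B)
    return all(B[i][j] == B[j][i] for i in range(n) for j in range(n))


def is_positive_definite(B):
    """Attempt a Cholesky factorization B = L L^T; for a symmetric matrix it
    succeeds (every pivot positive) exactly when B is positive definite."""
    n = len(B)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = B[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0:
                    return False
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return True


B = [[2, 1], [1, 3]]          # symmetric and positive definite (illustrative choice)
u, v = [1, -1], [2, 5]
print(is_symmetric(B), is_positive_definite(B))   # True True
print(form(u, B, v), form(v, B, u))               # -8 -8, equal by symmetry
print(is_positive_definite([[1, 2], [2, 1]]))     # False: (x, x)_B = -2 for x = (1, -1)
```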

Metric Tensors

It is also possible to define and work with non-Euclidean spaces, or more precisely Riemannian manifolds, that is, spaces that need not be flat (their curvature tensor may be non-zero), in which lengths, angles and volumes can also be defined. In these more general spaces, the notion of geodesic replaces that of a straight segment for defining the shortest distance between points, and the operational definition of the usual scalar product is modified slightly by introducing a metric tensor $g : \mathcal{M} \times T\mathcal{M} \times T\mathcal{M} \to \mathbb{R}$ such that its restriction to a point $x$ of the Riemannian manifold is a bilinear form $g_x(\cdot, \cdot) = g(x; \cdot, \cdot)$.

Thus, given two vectors $\mathbf{u}$ and $\mathbf{v}$ of the tangent space to the Riemannian manifold at a point $x$, their inner (or scalar) product is defined as:

$$\langle \mathbf{u}, \mathbf{v} \rangle = g_x(\mathbf{u}, \mathbf{v}) = \sum_i \sum_j g_{ij}(x)\, u_i v_j$$
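To make the dependence on the point concrete, the sketch below assumes a specific example not taken from the text: the unit sphere in coordinates $(\theta, \varphi)$, whose metric is $\mathrm{d}s^2 = \mathrm{d}\theta^2 + \sin^2\theta\, \mathrm{d}\varphi^2$, that is, $g(x) = \operatorname{diag}(1, \sin^2\theta)$. The same coordinate vector then has different lengths at different points. The function names are hypothetical.

```python
import math


def sphere_metric(x):
    """Metric g_ij(x) of the unit sphere in coordinates x = (theta, phi):
    ds^2 = d theta^2 + sin(theta)^2 d phi^2 (an illustrative choice)."""
    theta, _ = x
    return [[1.0, 0.0], [0.0, math.sin(theta) ** 2]]


def metric_inner(g, x, u, v):
    """<u, v> = g_x(u, v) = sum_i sum_j g_ij(x) u_i v_j for tangent vectors at x."""
    G = g(x)
    n = len(u)
    return sum(G[i][j] * u[i] * v[j] for i in range(n) for j in range(n))


u = (0.0, 1.0)   # one unit of change in the coordinate phi
# The same coordinate vector has different lengths at different points:
print(math.sqrt(metric_inner(sphere_metric, (math.pi / 2, 0.0), u, u)))  # 1.0 on the equator
print(math.sqrt(metric_inner(sphere_metric, (math.pi / 6, 0.0), u, u)))  # about 0.5 at theta = pi/6
```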

The length of a rectifiable curve $C$ between two points $A$ and $B$, parametrized by $s \in [s_a, s_b]$, can be defined from its tangent vector $\mathbf{T} = \mathrm{d}\mathbf{x}/\mathrm{d}s$ as follows:

$$L_C = \int_{s_a}^{s_b} \sqrt{g(\mathbf{x}; \mathbf{T}, \mathbf{T})}\, \mathrm{d}s = \int_{s_a}^{s_b} \sqrt{\sum_{i,j} g_{ij}(\mathbf{x})\, \frac{\mathrm{d}x^i}{\mathrm{d}s} \frac{\mathrm{d}x^j}{\mathrm{d}s}}\, \mathrm{d}s$$
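The length formula can be approximated numerically. Assuming, as an illustrative example not taken from the text, the unit-sphere metric $g(x) = \operatorname{diag}(1, \sin^2\theta)$ in coordinates $(\theta, \varphi)$, this sketch integrates $\sqrt{g(\mathbf{x}; \mathbf{T}, \mathbf{T})}$ with a midpoint rule (a quadrature chosen here for simplicity) along the circle of latitude $\theta = \pi/3$, whose exact length is $2\pi\sin(\pi/3) = \pi\sqrt{3}$. The curve, its tangent and all names are illustrative.

```python
import math


def sphere_metric(x):
    """Unit-sphere metric in coordinates x = (theta, phi): diag(1, sin(theta)^2)."""
    theta, _ = x
    return [[1.0, 0.0], [0.0, math.sin(theta) ** 2]]


def curve_length(g, curve, tangent, s_a, s_b, steps=10_000):
    """Midpoint-rule approximation of L = integral of sqrt(g(x; T, T)) ds,
    where curve(s) gives the coordinates x(s) and tangent(s) gives T = dx/ds."""
    h = (s_b - s_a) / steps
    total = 0.0
    for k in range(steps):
        s = s_a + (k + 0.5) * h
        G, t = g(curve(s)), tangent(s)
        n = len(t)
        gtt = sum(G[i][j] * t[i] * t[j] for i in range(n) for j in range(n))
        total += math.sqrt(gtt)
    return total * h


theta0 = math.pi / 3


def latitude_circle(s):
    return (theta0, s)          # phi runs from 0 to 2*pi as s does


def latitude_tangent(s):
    return (0.0, 1.0)           # dx/ds = (d theta/ds, d phi/ds)


L = curve_length(sphere_metric, latitude_circle, latitude_tangent, 0.0, 2 * math.pi)
print(L, 2 * math.pi * math.sin(theta0))   # both approximately 5.441
```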
