Reduced instruction set computing

In computer architecture, RISC (Reduced Instruction Set Computer) is a type of CPU design generally used in microprocessors and microcontrollers, with the following fundamental characteristics:

- Fixed-size instructions, presented in a small number of formats.

- Only load and store instructions access data memory.

In addition, these processors usually have many general-purpose registers.

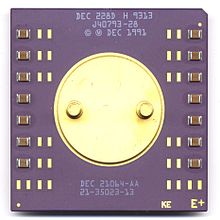

The objective of designing machines with this architecture is to enable pipelining and parallelism in the execution of instructions and to reduce memory accesses. RISC machines are at the forefront of the current trend in microprocessor design. PowerPC, DEC Alpha, MIPS, ARM, and SPARC are some examples.

RISC is a CPU design philosophy that favors small, simple instruction sets whose instructions take less time to execute. The type of processor most commonly used in desktop computers, x86, is based on CISC rather than RISC, although newer versions translate the CISC-style x86 instructions into simpler RISC-style instructions for internal use before execution.

The idea was inspired by the fact that many of the features included in traditional CPU designs to increase speed were being ignored by the programs that ran on them. In addition, the speed of the processor relative to the memory it accessed was growing steadily. This led to numerous techniques for streamlining processing within the CPU, as well as for reducing the total number of memory accesses.

Modern terminology refers to such designs as load-store architectures.

Design philosophy before RISC

One of the basic design principles for all processors is to add speed by providing them with some very fast memory in which to store information temporarily; these memories are known as registers. For example, every CPU includes an instruction to add two numbers. The basic operation of a CPU would be to load those two numbers into registers, add them together, store the result in another register, and finally take the result from that register and return it to main memory.
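The load, add, store sequence described above can be sketched as a toy interpreter. This is only an illustration: the mnemonics `LOAD`, `ADD`, and `STORE`, the register names, and the dictionary-based memory are assumptions made for the example, not the instruction set of any real CPU.

```python
# Toy load-store machine. Mnemonics and register names are hypothetical.
def run(program, memory):
    """Execute a list of (op, *args) tuples against a dict-based memory."""
    regs = {}
    for op, *args in program:
        if op == "LOAD":        # LOAD rd, addr: memory -> register
            rd, addr = args
            regs[rd] = memory[addr]
        elif op == "ADD":       # ADD rd, ra, rb: operates on registers only
            rd, ra, rb = args
            regs[rd] = regs[ra] + regs[rb]
        elif op == "STORE":     # STORE rs, addr: register -> memory
            rs, addr = args
            memory[addr] = regs[rs]
        else:
            raise ValueError(f"unknown operation: {op}")
    return memory


memory = {0: 7, 1: 35, 2: 0}
program = [
    ("LOAD", "r1", 0),          # first operand into a register
    ("LOAD", "r2", 1),          # second operand into a register
    ("ADD", "r3", "r1", "r2"),  # add them, result in a third register
    ("STORE", "r3", 2),         # return the result to main memory
]
run(program, memory)
print(memory[2])  # 42
```

Only `LOAD` and `STORE` touch `memory`; the arithmetic instruction sees registers alone.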

However, registers have the drawback of being somewhat complex to implement. Each one is built from transistors on the chip, whereas main memory tends to be much simpler and cheaper. Registers also add complexity to the wiring, because the central processing unit needs to be connected to each and every register in order to use them equally.

As a result, many CPU designs limit register usage in one way or another. Some include few registers, although this limits their speed. Others dedicate their registers to specific tasks to reduce complexity; for example, one register might be able to perform operations on one or more of the other registers, while the result might be stored in any one of them.

In the microcomputer world of the 1970s, this was even more of a factor in CPU design, since processors were then too slow; in fact, there was a tendency for the processor to be slower than the memory it communicated with. In those cases it made sense to eliminate almost all registers and then provide the programmer with a number of ways of dealing with memory to make the job easier.

Given the addition example, most CPU designs focused on creating an instruction that could do all the work automatically: fetch the two numbers to be added, add them, and store the result directly in memory. Another version could read the two numbers from memory but store the result in a register. Another could read one from memory and the other from a register and store the result in memory again. And so on.

The overall goal at the time was to provide every possible addressing mode for every instruction, a principle known as orthogonality. This led to a complex CPU, but one that could in theory be configured for each possible instruction individually, making the design faster than if the programmer used simple instructions.

The ultimate expression of this type of design can be seen in two designs at opposite ends of the scale: the MOS 6502 on one side and the VAX on the other. The $25 6502 chip effectively had only one general-purpose register, and with careful tuning of the memory interface it was able to outperform designs running at higher clock speeds (such as the 4 MHz Zilog Z80). The VAX was a minicomputer that initially required three hardware cabinets for a single CPU, and it was notable for the amazing variety of memory access styles it supported and for the fact that each of them was available for every instruction.

RISC Design Philosophy

In the late 1970s, research at IBM (and similar projects elsewhere) showed that most of these orthogonal addressing modes were ignored by most programs. This was a side effect of the increasing use of compilers to generate programs, as opposed to writing them in assembly language. Compilers of the time tended to be quite unsophisticated in the features they used, a side effect of trying to keep them small. The market was moving towards even more widespread use of compilers, further diluting the usefulness of the orthogonal modes.

Another discovery was that because those operations were rarely used, they actually tended to be slower than a small number of simpler operations doing the same thing. This apparent paradox was a side effect of the time spent designing CPUs: designers simply did not have time to optimize every possible instruction and instead optimized only the most frequently used ones. A famous example was the VAX INDEX instruction, which executed more slowly than a loop implementing the same operation.

About the same time, CPUs began to run at faster speeds than the memory they communicated with. Even in the late 1970s, it was apparent that this disparity would continue to widen for at least the next decade, by which time CPUs could be hundreds of times faster than memory. This meant that advances to optimize any addressing mode would be completely overwhelmed by the very slow speeds at which they were carried out.

Another part of RISC design came from practical measurements of real-world programs. Andrew Tanenbaum collected many of these, showing that most processors were over-designed. For example, he showed that 98% of all constants in a program would fit in 13 bits, even though every CPU design dedicated some multiple of 8 bits to storing them, typically 8, 16, or 32 bits, a whole word. Taking this into account, he suggested that a machine should allow constants to be stored in the unused bits of the instruction word, decreasing the number of memory accesses. Instead of being loaded from memory or registers, the constants would be right there when the CPU needed them, and the process would therefore be much faster. However, this required that the operation code itself be very small; otherwise there would not be enough free space in a 32-bit instruction to hold constants of a reasonable size.
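To make the idea of carrying a constant inside the instruction concrete, here is a minimal sketch of packing a signed 13-bit constant into a 32-bit instruction word. The field layout (7-bit opcode, two 6-bit register fields, 13-bit constant) is a hypothetical choice made so that the fields add up to 32 bits; it does not correspond to any particular real instruction set.

```python
# Hypothetical 32-bit layout (an assumption for illustration, not a real ISA):
# | opcode: 7 bits | rd: 6 bits | rs: 6 bits | imm: 13 bits |
OPCODE_BITS, REG_BITS, IMM_BITS = 7, 6, 13
IMM_MASK = (1 << IMM_BITS) - 1


def encode(opcode, rd, rs, imm):
    """Pack the fields into one 32-bit word; imm is a signed 13-bit constant."""
    if not -(1 << (IMM_BITS - 1)) <= imm < (1 << (IMM_BITS - 1)):
        raise ValueError("constant does not fit in 13 bits")
    if not 0 <= opcode < (1 << OPCODE_BITS):
        raise ValueError("opcode out of range")
    if not (0 <= rd < (1 << REG_BITS) and 0 <= rs < (1 << REG_BITS)):
        raise ValueError("register number out of range")
    word = opcode
    word = (word << REG_BITS) | rd
    word = (word << REG_BITS) | rs
    word = (word << IMM_BITS) | (imm & IMM_MASK)  # two's complement in 13 bits
    return word


def decode_imm(word):
    """Recover the signed constant from the low 13 bits of the word."""
    raw = word & IMM_MASK
    return raw - (1 << IMM_BITS) if raw & (1 << (IMM_BITS - 1)) else raw


word = encode(opcode=5, rd=1, rs=2, imm=-100)
print(f"{word:032b}", decode_imm(word))
```

The larger the opcode and register fields, the fewer bits remain for the constant, which is the trade-off the paragraph above describes.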

It was the small number of modes and instructions that gave rise to the term reduced instruction set. This is not an accurate description, since RISC designs can have very large instruction sets of their own. The real difference is the philosophy of doing everything in registers and using explicit instructions to load data into them and store it back to memory. This is why the more accurate name for this design is load-store. Over time the older design techniques became known as Complex Instruction Set Computer, or CISC, although this was largely just to give them a different name for comparison purposes.

The RISC philosophy was therefore to create small instructions, which implied that there were few of them, hence the name reduced instruction set. Code was implemented as a series of these simple instructions rather than as a single complex instruction that gave the same result. This left more space within the instruction to carry data, reducing the need to use registers or memory. At the same time the interface with memory was considerably simpler, allowing it to be optimized.

However, RISC also had its drawbacks. Because a series of instructions is needed to complete even simple tasks, the total number of instructions to be read from memory is larger, and reading them therefore takes longer. At the time it was unclear whether there would be a net performance gain given this limitation, and there was an almost continuous battle in the press and the design world over RISC concepts.

Multitasking

Due to the redundancy of microinstructions, the operating systems designed for these microprocessors provided for the ability to subdivide a microprocessor into several, reducing the number of redundant instructions for each instance. With an optimized software architecture, the graphical environments developed for these platforms provided for the possibility of executing several tasks in the same clock cycle. Likewise, RAM paging was dynamic and a sufficient amount was assigned to each instance, so that there was a kind of 'symbiosis' between the power of the microprocessor and the RAM dedicated to each instance of it.

Multitasking within the CISC architecture has never been real in the way it is in RISC designs. In CISC, the microprocessor as a whole is designed around so many complex and different instructions that subdivision is not possible, at least at the logical level. Multitasking is therefore only apparent and ordered by priority. Each clock cycle tries to service a task that has been instantiated in RAM and is waiting to be serviced. With one service queue per task, FIFO for data generated by the processor and LIFO for user interrupts, these systems tried to prioritize the tasks that the user triggered. The appearance of multitasking in a traditional CISC comes from scalar data models, converting the flow into a vector with different stages and creating pipeline technology.

Today's microprocessors, being hybrids, allow for some real multitasking. The layer presented to the user behaves like a traditional CISC, while for the tasks that the user leaves pending, depending on idle time, the system translates the instructions from CISC to RISC (the software must be compatible with this) and passes execution of the task to a low level, where resources are handled according to the RISC philosophy. Since users attend to only one task at a time because of their attention span, the remaining pending tasks that are not compatible with the CISC/RISC translation model are handled by the traditional pipeline, as are low-level tasks such as disk defragmentation, data integrity checking, formatting, graphics tasks, or intensive math tasks.

Instead of trying to subdivide a single microprocessor, a second microprocessor, identical to the first, was added. The drawback is that RAM had to be handled at the hardware level, and modules designed for uniprocessor platforms were either not compatible with multiprocessor platforms or not as efficient on them. Another drawback was the fragmentation of the word into bytes. In a traditional RISC, bytes are occupied as follows: if the word is 32 bits (four 8-bit bytes, two 16-bit halves, or one 32-bit unit), then depending on the size of the data carried, parts of other instructions and data were packed within the same byte. Now, with two separate microprocessors, each used independent registers with its own memory accesses (on these platforms the ratio of RAM to processors is 1:1). Initially, the solutions resembled improvised patchwork: each motherboard incorporated a solution validated only for the chipset used and the drivers that accompanied it. Fragmentation had always been a nuisance that was tolerated out of laziness, like a buzzing mosquito that is allowed to bite, but there came a time when the buzz was impossible to ignore. That time came with 64-bit platforms.

History

While the RISC design philosophy was taking shape, new ideas were beginning to emerge with a single goal: to drastically increase CPU performance.

In the early 1980s it was thought that existing designs were reaching their theoretical limits. Future speed improvements would come mainly from improved processes, that is, smaller features on the chip. Chip complexity could continue as before, but a smaller size could yield better performance by operating at higher clock speeds. A great deal of effort was put into designing chips for parallel computing, with built-in communication links. Instead of making the chips faster, a large number of chips would be used, dividing the problem among them. However, history showed that these fears did not come true, and a number of ideas drastically improved performance in the late 1980s.

One idea was to include a pipeline, which breaks instructions into steps and works on a different step of many different instructions at the same time. A normal processor might read an instruction, decode it, fetch the source operands from memory, perform the operation, and then write the results back. The key to the pipeline is that the processor can start reading the next instruction as soon as it has finished reading the previous one, meaning that two instructions are now being worked on (one is being read, the other is starting to be decoded), and in the next cycle there will be three. While a single instruction would not complete any faster, the next instruction would complete immediately after it. The illusion was that of a much faster system. This technique is known today as pipelining.
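The effect of overlapping the steps can be worked out with a small sketch. It assumes an idealized five-stage pipeline in which every stage takes exactly one cycle and nothing ever stalls; the stage names are illustrative and follow the five steps listed above.

```python
# Idealized pipeline model: one cycle per stage, no stalls (an assumption).
STAGES = ["read", "decode", "operands", "execute", "writeback"]


def cycles_unpipelined(n, stages=len(STAGES)):
    """Each instruction runs through all stages before the next one starts."""
    return n * stages


def cycles_pipelined(n, stages=len(STAGES)):
    """The first instruction fills the pipe; each later one finishes 1 cycle after."""
    return stages + (n - 1) if n else 0


def schedule(n):
    """For each cycle, list which instruction index occupies each stage."""
    table = []
    for cycle in range(cycles_pipelined(n)):
        row = []
        for stage in range(len(STAGES)):
            i = cycle - stage              # instruction i enters stage s at cycle i + s
            row.append(i if 0 <= i < n else None)
        table.append(row)
    return table


print(cycles_unpipelined(10))  # 50
print(cycles_pipelined(10))    # 14
for row in schedule(3):
    print(row)
```

Each instruction still needs five cycles from start to finish, but once the pipeline is full one instruction completes every cycle.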

Yet another solution was to use several processing elements within the processor and run them in parallel. Instead of working on a single instruction to add two numbers, these superscalar processors could look at the next instruction in the pipeline and try to execute it at the same time on an identical unit. This was not easy to do, however, since some instructions depended on the results of other instructions.
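The dependency problem can be illustrated with a minimal sketch of a two-wide, in-order issue check. The `Instr` type, the register names, and the greedy pairing rule are assumptions made for the example; real superscalar processors use considerably more elaborate logic.

```python
from typing import NamedTuple, Tuple


class Instr(NamedTuple):
    """Hypothetical instruction: one destination register, some source registers."""
    dest: str
    srcs: Tuple[str, ...]


def can_dual_issue(first, second):
    """True if `second` may execute in the same cycle as `first`."""
    reads_result = first.dest in second.srcs   # second needs first's result
    same_dest = first.dest == second.dest      # both write the same register
    return not (reads_result or same_dest)


def issue_cycles(program):
    """Greedy in-order issue of at most two instructions per cycle."""
    cycles, i = 0, 0
    while i < len(program):
        if i + 1 < len(program) and can_dual_issue(program[i], program[i + 1]):
            i += 2   # both instructions go to parallel units
        else:
            i += 1   # dependency (or end of program): issue one only
        cycles += 1
    return cycles


independent = [Instr("r1", ("r2", "r3")), Instr("r4", ("r5", "r6"))]
dependent = [Instr("r1", ("r2", "r3")), Instr("r4", ("r1", "r6"))]
print(issue_cycles(independent), issue_cycles(dependent))
```

The independent pair issues in a single cycle, while the dependent pair needs two because the second instruction reads `r1`, which the first one produces.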

Both techniques were based on increasing speed by adding complexity to the basic design of the CPU, as opposed to the instructions that were executed on it. With chip space being finite, something else would have to be removed to make room for all those features. RISC was well placed to take advantage of these techniques, because its core CPU logic was considerably simpler than that of CISC designs. Even so, early RISC designs offered very little performance improvement, but they were able to add new features, and by the late 1980s they had left their CISC counterparts far behind. Over time, process improvements reached the point where all of this could be added to CISC designs and still fit on a single chip, but this took almost a decade, from the late 1980s to the early 1990s.

Features

In short, for any given performance level a RISC chip will typically have fewer transistors dedicated to its core logic. This gives designers considerable flexibility; they can, for example:

- Increase the size of the register set.

- Increase the speed of instruction execution.

- Implement measures to increase internal parallelism.

- Add huge caches.

- Add other features such as I/O and timers for microcontrollers.

- Build chips on older production lines that would otherwise not be usable.

- Add nothing at all, and so offer the chip for low-power or size-limited applications.

The characteristics that are generally found in RISC designs are:

- Uniform instruction encoding (e.g., the opcode is always in the same bit position in each instruction, and each instruction is always one word long), which allows faster decoding.

- A homogeneous register set, allowing any register to be used in any context and thus simplifying compiler design (although there are many ways of separating the integer and floating-point register files).

- Simple addressing modes, with more complex modes replaced by sequences of simple arithmetic instructions.

- Few data types supported in hardware (for example, some CISC machines have instructions for handling byte strings; these are not found in a RISC machine).

RISC designs also tend to feature a Harvard memory model, where the instruction stream and the data stream are conceptually separated. This means that modifying the memory where code is held might not have any effect on the instructions executed by the processor (because the CPU has separate instruction and data caches), at least until a special synchronization instruction is issued. On the other hand, this allows both caches to be accessed simultaneously, which can sometimes improve performance.

Many of the early RISC designs also shared an unfriendly feature, the branch delay slot. A branch delay slot is the instruction space immediately following a jump or branch. The instruction in this space is executed regardless of whether the branch is taken (in other words, the effect of the branch is delayed). This instruction keeps the CPU's ALU busy for the extra time normally needed to perform a branch. To use it, responsibility falls on the compiler to reorder instructions so that the code remains correct when executed with this feature. Nowadays the branch delay slot is considered an unfortunate side effect of one particular strategy for implementing some RISC designs. This is why modern RISC designs, such as ARM, PowerPC, and newer versions of SPARC and MIPS, generally omit it.
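The behavior of a delay slot can be seen in a toy simulator. This is a simplified sketch: it models only unconditional branches, assumes the slot never contains another branch, and uses a made-up tuple encoding for instructions.

```python
# Toy model of a branch delay slot. The instruction encoding is hypothetical:
# ("op", label) is an ordinary instruction, ("branch", index) jumps to index.
def run(program, delay_slot=True):
    """Return the trace of executed instructions as a list of labels."""
    trace, pc = [], 0
    while pc < len(program):
        kind, arg = program[pc]
        if kind == "branch":
            trace.append(f"branch->{arg}")
            if delay_slot and pc + 1 < len(program):
                slot_kind, slot_label = program[pc + 1]
                if slot_kind == "branch":
                    raise ValueError("this sketch does not allow a branch in the slot")
                trace.append(slot_label)   # slot instruction runs before the jump lands
            pc = arg
        else:
            trace.append(arg)
            pc += 1
    return trace


program = [
    ("op", "a"),
    ("branch", 4),
    ("op", "slot"),      # sits in the delay slot
    ("op", "skipped"),
    ("op", "target"),
]
print(run(program, delay_slot=True))
print(run(program, delay_slot=False))
```

With the delay slot enabled, the instruction labeled `slot` appears in the trace even though the branch jumps past it; a compiler targeting such a machine has to place a useful (or harmless) instruction there.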

Early RISC Designs

The first system that would today be considered RISC was not called that at the time; it was the CDC 6600 supercomputer, designed in 1964 by Seymour Cray.

Cray designed it as a CPU for large-scale computing (with 74 opcodes, compared with the 400 of an 8086), plus 12 simple computers to handle the I/O processes (most of the operating system ran on one of these).

The CDC 6600 had a load/store architecture with only two addressing modes. There were eleven pipelined functional units for arithmetic and logic, plus five load units and two store units (the memory had multiple banks so that all load/store units could operate at the same time). The basic clock cycle/instruction issue rate was 10 times faster than the memory access time.

The best-known RISC designs, however, were the results of university research programs funded by the DARPA VLSI program. The VLSI program, virtually unknown today, led to a number of advances in chip design, manufacturing, and even computer graphics.

One of the first load/store machines was the Data General Nova minicomputer, designed in 1968 by Edson de Castro. It had an almost pure RISC instruction set, very similar to that of today's ARM processors; however, it has not been cited as having influenced the ARM designers, although these machines were in use at the University of Cambridge Computer Laboratory in the early 1980s.

The UC Berkeley RISC project began in 1980 under the direction of David A. Patterson, based on gaining performance through the use of pipelines and aggressive use of registers known as register windows. In a normal CPU with a small number of registers, a program can use any register at any time. In a CPU with register windows, there are a large number of registers (138 on the RISC-I), but only a small number of these (32 on the RISC-I) can be used by programs at any one time.

A program that limits itself to 32 registers per procedure can make very fast procedure calls: the call and the return simply move the current 32-register window, the call to clear enough work space for the subroutine and the return to restore the caller's values.
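A rough sketch of the register-window mechanism follows. It is deliberately simplified: the windows here do not overlap (the Berkeley designs overlapped adjacent windows to pass parameters), the total of 128 registers is a hypothetical figure chosen to divide evenly into 32-register windows, and running out of windows simply raises an error instead of spilling to memory.

```python
class WindowedRegisterFile:
    """Simplified, non-overlapping register windows (an illustrative assumption)."""

    def __init__(self, total=128, window=32):
        self.regs = [0] * total
        self.window = window
        self.base = 0  # index of the first physical register in the current window

    def _index(self, r):
        if not 0 <= r < self.window:
            raise IndexError("only the registers of the current window are visible")
        return self.base + r

    def read(self, r):
        return self.regs[self._index(r)]

    def write(self, r, value):
        self.regs[self._index(r)] = value

    def call(self):
        """Procedure call: slide to a fresh window; nothing is copied to memory."""
        if self.base + 2 * self.window > len(self.regs):
            raise OverflowError("out of windows; a real CPU would spill to memory")
        self.base += self.window

    def ret(self):
        """Procedure return: slide back; the caller's registers are intact."""
        if self.base == 0:
            raise RuntimeError("return without a matching call")
        self.base -= self.window


rf = WindowedRegisterFile()
rf.write(5, 111)          # caller uses its register 5
rf.call()
rf.write(5, 222)          # callee's register 5 is a different physical register
rf.ret()
print(rf.read(5))         # 111
```

The call and return only adjust `base`, which is why no registers have to be saved to or restored from memory in the common case.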

The RISC project delivered the RISC-I processor in 1982. Consisting of just 44,420 transistors (compared with an average of about 100,000 in CISC designs of the time), RISC-I had only 32 instructions, and yet it outperformed any other single-chip design. This trend continued: RISC-II, in 1983, had 40,760 transistors and 39 instructions, and ran three times as fast as RISC-I.

Around the same time, in 1981, John Hennessy started a similar project called MIPS at Stanford University. MIPS focused almost entirely on the pipeline, making sure it could be run as full as possible. Although pipelining had already been used in other designs, several features of the MIPS chip made its pipeline much faster. The most important, and perhaps most annoying, of these features was the requirement that all instructions be able to complete in a single cycle. This requirement allowed the pipeline to run at much higher speeds (there was no need for induced delays) and is responsible for much of the processor's speed. However, it also had the negative side effect of eliminating many potentially useful instructions, such as multiply and divide.

The first attempt to build a CPU based on the RISC concept was made at IBM, beginning in 1975 and so preceding both of the projects above. Named after the building where the project ran, the work led to the IBM 801 family of processors, which was widely used within IBM hardware. The 801 was eventually produced in single-chip form as the ROMP in 1981, short for Research (Office Products Division) Mini Processor. As the name implies, this CPU was designed for small tasks, and when IBM released a design based on it, the IBM RT-PC, in 1986, its performance was not acceptable. Despite this, the 801 inspired several research projects, including new ones within IBM that would eventually lead to the IBM POWER architecture.

In the early years, the RISC efforts were well known but largely confined to the university labs that had created them. Berkeley's effort became so well known that its name eventually became the name for the entire concept. Many in the computer industry argued that the performance benefits were unlikely to translate into real-world results because of the memory inefficiency of using multiple instructions, and that this was the reason no one was using them. But beginning in 1986, all the RISC research projects started delivering products. In fact, almost all modern RISC processors are direct copies of the RISC-II design.

Modern RISC

Berkeley's research was not directly commercialized, but the RISC-II design was used by Sun Microsystems to develop the SPARC, by Pyramid Technology to develop its midrange multiprocessor machines, and by almost every other company a few years later. It was Sun's use of a RISC chip in its new machines that demonstrated that the benefits of RISC were real, and its machines quickly displaced the competition and essentially took over the entire workstation market.

John Hennessy left Stanford to commercialize the MIPS design, starting a company known as MIPS Computer Systems Inc. Its first design was the second-generation MIPS-II chip known as the R2000. MIPS designs went on to become some of the most widely used chips when they were included in the Nintendo 64 and PlayStation game consoles. Today they are among the embedded processors most commonly used in high-end applications by Silicon Graphics.

IBM learned from the RT-PC failure and went on to design the RS/6000 based on its then-new IBM POWER architecture. It then moved its S/370 mainframes to IBM POWER-based chips and was surprised to find that even the very complex instruction set (which had been part of the S/360 since 1964) ran considerably faster. The result was the new System/390 series, which is still marketed today as the zSeries. The IBM POWER design has also been scaled down to produce the PowerPC design, which eliminated many of the IBM-only instructions and created a single-chip implementation. The PowerPC was used in all Apple Macintosh computers until 2006 and is starting to be used in automotive applications (some vehicles have more than 10 of them inside). The next-generation video game consoles (PlayStation 3, Wii, and Xbox 360) are also PowerPC based.

Almost all other vendors quickly joined in. Similar efforts in the UK resulted in the INMOS Transputer, the Acorn Archimedes, and the Advanced RISC Machine line, which is highly successful today. Existing companies with CISC designs also joined the revolution. Intel released the i860 and i960 in the late 1980s, although they were not very successful. Motorola built a new design, but it did not see much use and the company eventually dropped it, joining IBM to produce the PowerPC. AMD released its 29000 family, which became the most popular RISC design in the early 1990s.

Today RISC CPUs and microcontrollers represent the vast majority of all CPUs in use. The RISC design technique offers power even in small sizes, and it has come to dominate the market for low-power embedded CPUs completely. Embedded CPUs are by far the most common processors on the market: consider that a family with one or two personal computers may own several dozen devices with embedded processors. RISC also completely took over the workstation market: after the release of the Sun SPARCstation, other vendors rushed to compete with their own RISC-based solutions, although around 2006-2010 workstations moved to the x86-64 architecture from Intel and AMD. Even the world of mainframe computers is now based entirely on RISC.

This is surprising given the dominance of Intel's x86 and x86-64 in the markets for desktop PCs (and now workstations), laptops, and low-end servers, a dominance that held even though RISC was able to scale up in speed very quickly and cheaply.

RISC designs have led a large number of platforms and architectures to success. Some of the largest are:

- The MIPS Technologies Inc. line, which was used in most Silicon Graphics computers up to 2006 and in the Nintendo 64, PlayStation, and PlayStation 2. It is currently used in the PlayStation Portable and in some routers.

- The IBM POWER series, used mainly by IBM in servers and supercomputers.

- The PowerPC from Motorola and IBM (a version of the IBM POWER series), used in AmigaOne computers and in Apple Macintosh computers such as the iMac, eMac, Power Mac, and later models (up to 2006). It is currently used in many embedded systems in cars, routers, and so on, as well as in many video game consoles, such as the PlayStation 3, Xbox 360, and Wii.

- The SPARC and UltraSPARC processors from Sun Microsystems and Fujitsu, found in their latest server models (and, up to 2008, also in workstations).

- Hewlett-Packard's PA-RISC (HP/PA), now discontinued.

- The DEC Alpha, used in HP AlphaServer servers and AlphaStation workstations, now discontinued.

- ARM: the hardware needed to translate x86 instructions into RISC operations becomes significant in terms of area and energy for mobile and embedded devices. ARM processors therefore dominate in Palm devices, the Nintendo DS, the Game Boy Advance and many PDAs, Apple iPods, the Apple iPhone and iPod Touch (Samsung ARM1176JZF, ARM Cortex-A8, Apple A4), the Apple iPad (Apple A4 ARM-based SoC), and video game consoles such as the Nintendo DS (ARM7TDMI, ARM946E-).

- The Atmel AVR used in a variety of products, from Xbox controllers to BMW cars.

- Hitachi's SuperH platform, originally used in the Sega Super 32X, Saturn, and Dreamcast consoles, is now at the heart of many consumer electronics devices. SuperH is the base platform of the Mitsubishi-Hitachi group. The two groups, which merged in 2002, set aside Mitsubishi's own RISC architecture, the M32R.

- The XAP processors used in many of CSR's low-power wireless chips (Bluetooth, Wi-Fi).
