Probability distribution

In probability theory and statistics, the probability distribution of a random variable is a function that assigns to each event defined on the variable the probability that the event occurs. The probability distribution is defined on the set of all events, each of which is a range of values of the random variable. It is closely related to frequency distributions: a probability distribution can be understood as a theoretical frequency distribution, since it describes how outcomes are expected to vary.

The probability distribution is completely specified by the distribution function, whose value at each real x is the probability that the random variable is less than or equal to x.

Types of variables

- Random variable: a variable whose value is the outcome of a random event or experiment.

- Discrete random variable: one that takes only certain (frequently integer) values and usually results from counting.

- Continuous random variable: one that usually results from measurement and can take any value within a given interval.

Division of distributions

This division depends on the type of variable under study. The two main classes (from which all the others derive) are:

a) If the variable is discrete (for example, integer-valued), it has a discrete distribution. Examples are:

- Binomial distribution (independent events).

- Poisson distribution (independent events).

- Hypergeometric distribution (dependent events).

b) If the variable is continuous (real-valued), it has a continuous distribution. Examples are:

- Normal or Gaussian distribution.

- Cauchy distribution.

- Exponential distribution.

In addition, the Poisson distribution can be used as an approximation of the binomial distribution when the sample is large and the probability of success is small. Combining the two types of distributions above (a and b) gives the normal distribution as an approximation of the binomial and Poisson distributions.
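As a rough illustration of the Poisson approximation to the binomial, the following sketch compares the two probability mass functions for a large number of trials and a small success probability. The values of `n` and `p` and the helper names are assumptions chosen only for illustration.

```python
import math

def binomial_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k, lam):
    """P(X = k) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam**k / math.factorial(k)

# Illustrative values (assumptions): many trials, small success probability.
n, p = 1000, 0.003
lam = n * p  # Poisson rate chosen to match the binomial mean

# Largest pointwise gap between the two mass functions over k = 0..20.
max_gap = max(abs(binomial_pmf(k, n, p) - poisson_pmf(k, lam)) for k in range(21))
print(f"largest difference for k in 0..20: {max_gap:.2e}")
```

Increasing `p` while keeping `n` fixed should make the gap grow, which is one way to see why the approximation is reserved for small success probabilities.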

Definition of distribution function

Given a random variable $X$, its distribution function $F_X(x)$ is

$$F_X(x) = \mathrm{Prob}(X \leq x) = \mu_P\{\omega \in \Omega \mid X(\omega) \leq x\}$$

For simplicity, when there is no room for confusion, the subscript $X$ is usually omitted and one writes simply $F(x)$. In the previous formula:

- $\mathrm{Prob}$ is the probability defined on a probability space, that is, a measure of total mass one on the sample space.

- $\mu_P$ is the measure on the σ-algebra of sets associated with the probability space.

- $\Omega$ is the sample space, or set of all possible random outcomes, on which the probability space in question is defined.

- $X:\Omega \to \mathbb{R}$ is the random variable in question, that is, a function defined on the sample space with values in the real numbers.
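The definition can be made concrete on a small finite probability space. The sketch below assumes a toy example that is not part of the article: one roll of a fair six-sided die, with the random variable equal to the face shown. The names `omega`, `mu`, `X` and `F` mirror the symbols above.

```python
from fractions import Fraction

# Assumed toy probability space: one roll of a fair six-sided die.
omega = [1, 2, 3, 4, 5, 6]                  # sample space
mu = {w: Fraction(1, 6) for w in omega}     # probability measure on outcomes

def X(w):
    """Random variable: here simply the face shown by the die."""
    return w

def F(x):
    """Distribution function F_X(x) = mu{w in omega : X(w) <= x}."""
    return sum(mu[w] for w in omega if X(w) <= x)

for x in (0, 1, 3.5, 6, 10):
    print(x, F(x))
```

For instance, `F(3.5)` evaluates to 1/2, because exactly three of the six equally likely outcomes satisfy X(ω) ≤ 3.5.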

Properties

As an almost immediate consequence of the definition, the distribution function:

- Is right-continuous.

- Is monotonically non-decreasing.

In addition, it satisfies

$$\lim_{x \to -\infty} F(x) = 0, \qquad \lim_{x \to +\infty} F(x) = 1$$

For two real numbers $a$ and $b$ such that $a < b$, the events $(X \leq a)$ and $(a < X \leq b)$ are mutually exclusive and their union is the event $(X \leq b)$, so we have:

- $P(X \leq b) = P(X \leq a) + P(a < X \leq b)$

- $P(a < X \leq b) = P(X \leq b) - P(X \leq a)$

and finally

- $P(a < X \leq b) = F(b) - F(a)$

Therefore, once the distribution function $F(x)$ is known for all values $x$ of the random variable, the probability distribution of the variable is fully known.
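The interval identity P(a < X ≤ b) = F(b) − F(a) can be checked with any known distribution function. The sketch below assumes a standard normal variable and uses `statistics.NormalDist` from the Python standard library; the helper `interval_probability` is a hypothetical name introduced for illustration.

```python
from statistics import NormalDist

# Assumed example distribution: standard normal (mean 0, standard deviation 1).
Z = NormalDist(mu=0.0, sigma=1.0)

def interval_probability(cdf, a, b):
    """P(a < X <= b) computed from a distribution function as F(b) - F(a)."""
    return cdf(b) - cdf(a)

p = interval_probability(Z.cdf, -1.0, 1.0)
print(f"P(-1 < Z <= 1) = {p:.4f}")
```

The same helper works unchanged for any other distribution function passed as `cdf`, which reflects the point that the distribution function alone determines all interval probabilities.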

For calculations it is more convenient to work with the distribution function, whereas for a graphical representation of the probability it is more practical to use the density function.

Distributions of discrete variables

A discrete distribution is one whose probability function takes positive values only on a finite or countably infinite set of values of $X$. This function is called the probability mass function. In this case the distribution function is the cumulative sum of the mass function, so we have:

- $F(x) = P(X \leq x) = \sum_{k=-\infty}^{x} f(k)$

As follows from the definition of the distribution function, this expression is the sum of all the probabilities from $-\infty$ up to the value $x$.
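A minimal sketch of this cumulative sum, assuming a binomial variable with illustrative parameters n = 4 and p = 1/2 (these values are not from the article). Exact fractions are used so that the final value is exactly 1.

```python
from fractions import Fraction
from itertools import accumulate
from math import comb

# Illustrative parameters (assumptions): 4 trials, success probability 1/2.
n, p = 4, Fraction(1, 2)

# Probability mass function f(k) for k = 0..n.
pmf = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]

# Distribution function F(k): running sum of the mass function.
cdf = list(accumulate(pmf))

for k, (f_k, F_k) in enumerate(zip(pmf, cdf)):
    print(k, f_k, F_k)
```

The last entry of `cdf` equals 1, matching the limit of the distribution function at +∞.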

Types of discrete variable distributions

Defined on a finite domain

- The binomial distribution, which describes the number of successes in a series of n independent experiments with binary ("yes" or "no") outcomes, each with success probability p and failure probability q = 1 − p.

- The Bernoulli distribution, the special case of the binomial with a single experiment (n = 1), which takes the value "1" with probability p or "0" with probability q = 1 − p (Bernoulli trial).

- The Rademacher distribution, which takes the values "1" or "-1" with probability 1/2 each.

- The beta-binomial distribution, which describes the number of successes in a series of n independent "yes" or "no" experiments, each with a success probability that varies according to a beta distribution.

- The degenerate distribution at x0, in which X takes the value x0 with probability 1. Although it does not appear to be a random variable, it meets all the requirements to be considered one.

- The discrete uniform distribution, in which all the values of a finite set are equally probable. This distribution describes, for example, the random behavior of a fair coin, a fair die, or a balanced (unbiased) casino roulette wheel.

- The hypergeometric distribution, which gives the probability of obtaining x (0 ≤ x ≤ d) elements of a certain class of d elements within a population of N elements, when a sample of n elements is drawn from the population without replacement.

- Fisher's non-central hypergeometric distribution.

- Wallenius' non-central hypergeometric distribution.

- Benford's law, which describes the frequency of the first digit of a set of numbers in decimal notation.
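As an illustration of one entry in this list, the hypergeometric probabilities can be computed directly from binomial coefficients. The sketch below uses the same symbols as the description (N, d, n, x); the card-deck numbers are an assumed example, and `hypergeometric_pmf` is a hypothetical helper name.

```python
from fractions import Fraction
from math import comb

def hypergeometric_pmf(x, N, d, n):
    """P(exactly x elements of the class) when drawing n of N without replacement,
    where the class contains d elements."""
    return Fraction(comb(d, x) * comb(N - d, n - x), comb(N, n))

# Assumed example: 5 cards drawn from a 52-card deck that contains 4 aces.
N, d, n = 52, 4, 5
probabilities = [hypergeometric_pmf(x, N, d, n) for x in range(min(d, n) + 1)]

for x, prob in enumerate(probabilities):
    print(f"P({x} aces) = {float(prob):.5f}")
```

Because the draws are made without replacement, successive draws are dependent, which is what distinguishes this distribution from the binomial.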

Defined on an infinite domain

- The negative binomial distribution or Pascal distribution, which describes the number of independent Bernoulli trials needed to obtain n successes, given a constant individual success probability p.

- The geometric distribution, which describes the number of attempts needed to obtain the first success.

- The beta negative binomial distribution, which describes the number of "yes/no" experiments needed to obtain n successes when the success probability of each attempt follows a beta distribution.

- The extended negative binomial distribution.

- The Boltzmann distribution, important in statistical mechanics, which describes the occupation of discrete energy levels in a system in thermal equilibrium. Several special cases are:

  - The Gibbs distribution.

  - The Maxwell-Boltzmann distribution.

- The asymmetric elliptic distribution.

- Parabolic fractal distribution.

- Extended hypergeometric distribution.

- The logarithmic distribution.

- The generalized logarithmic distribution.

- The Poisson distribution, which describes the number of individual events that occur in a period of time. There are several variants, such as the displaced Poisson distribution, the hyper-Poisson distribution, the Poisson binomial distribution and the Conway-Maxwell-Poisson distribution, among others.

- The Polya-Eggenberger distribution.

- The Skellam distribution, which describes the difference of two independent random variables with Poisson distributions of different expected values.

- The Yule-Simon distribution.

- The zeta distribution, which uses the Riemann zeta function to assign a probability to each natural number.

- Zipf's law, which describes the frequency of use of the words of a language.

- The Zipf-Mandelbrot law, a more precise version of the previous one.
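The geometric distribution from this list is easy to simulate: repeat a Bernoulli trial until the first success and count the attempts. The sketch below assumes a success probability of 0.25 and a fixed random seed, both chosen only for illustration, and compares the sample mean with the known expected value 1/p.

```python
import random

def attempts_until_first_success(p, rng):
    """Count Bernoulli(p) trials up to and including the first success."""
    attempts = 1
    while rng.random() >= p:   # rng.random() < p counts as a success
        attempts += 1
    return attempts

rng = random.Random(0)         # fixed seed so the run is repeatable
p = 0.25                       # illustrative success probability (assumption)
samples = [attempts_until_first_success(p, rng) for _ in range(50_000)]

sample_mean = sum(samples) / len(samples)
print(f"sample mean = {sample_mean:.3f}, expected 1/p = {1 / p:.3f}")
```

Unlike the distributions on a finite domain, there is no upper limit on the number of attempts, although large values become exponentially unlikely.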

Distributions of continuous variables

A continuous variable is one that can take any of the infinitely many values within an interval. For a continuous variable, the distribution function is the integral of the density function, so we have:

- $F(x) = P(X \leq x) = \int_{-\infty}^{x} f(t)\,dt$
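The relation between density and distribution function can be checked numerically. The sketch below assumes an exponential density with an illustrative rate `lam = 1.5`, integrates it with a simple midpoint rule, and compares the result with the closed form 1 − e^(−λx). The function names are hypothetical.

```python
import math

lam = 1.5  # illustrative rate parameter (assumption)

def density(t):
    """Exponential density: lam * exp(-lam * t) for t >= 0, zero otherwise."""
    return lam * math.exp(-lam * t) if t >= 0 else 0.0

def cdf_numeric(x, steps=10_000):
    """Midpoint-rule approximation of the integral of the density up to x.
    The density is zero for negative t, so integration starts at 0."""
    if x <= 0:
        return 0.0
    h = x / steps
    return h * sum(density((i + 0.5) * h) for i in range(steps))

x = 2.0
exact = 1 - math.exp(-lam * x)
print(f"numeric F({x}) = {cdf_numeric(x):.6f}, exact = {exact:.6f}")
```

The midpoint rule is only one of many possible quadrature choices; it is used here because it needs no external library.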

Types of continuous variable distributions

Distributions defined in a bounded interval

- The arcsine distribution, defined on the interval [a, b].

- The beta distribution, defined on the interval [0, 1], which is useful when estimating probabilities.

- The raised cosine distribution, on the interval [μ − s, μ + s].

- The degenerate distribution at x0, in which X takes the value x0 with probability 1. It can be considered both a discrete and a continuous distribution.

- The Irwin-Hall distribution or uniform sum distribution, which is the distribution of the sum of n i.i.d. random variables distributed as U(0, 1).

- The Kent distribution, defined on the surface of a unit sphere.

- The Kumaraswamy distribution, as versatile as the beta but with simpler CDF and PDF.

- The continuous logarithmic distribution.

- The logit-normal distribution on (0, 1).

- The truncated normal distribution, on the interval [a, b].

- The reciprocal distribution, a type of inverse distribution.

- The triangular distribution, defined on [a, b], a particular case of which is the distribution of the sum of two independent uniformly distributed variables (the convolution of two uniform distributions).

- The continuous uniform distribution, defined on the closed interval [a, b], in which the probability density is constant.

- The rectangular distribution, the particular case of the continuous uniform distribution on the interval [-1/2, 1/2].

- The U-quadratic distribution, defined on [a, b], used to model symmetric bimodal processes.

- The von Mises distribution, also called the circular normal distribution or Tikhonov distribution, defined on the unit circle.

- The von Mises-Fisher distribution, a generalization of the previous one to an N-dimensional sphere.

- The Wigner semicircle distribution, important in the study of random matrices.
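The statement that the sum of two independent uniform variables follows a triangular distribution can be checked by simulation. The sketch below assumes two U(0, 1) variables, so the sum lies in [0, 2] with mode 1; the seed, sample size and function name are illustrative choices.

```python
import random

rng = random.Random(42)     # fixed seed so the run is repeatable
n_samples = 100_000         # illustrative sample size (assumption)

# Each sample is the sum of two independent U(0, 1) draws, so it lies in [0, 2].
sums = [rng.random() + rng.random() for _ in range(n_samples)]

def triangular_cdf(x):
    """CDF of the symmetric triangular distribution on [0, 2] with mode 1."""
    if x <= 0:
        return 0.0
    if x <= 1:
        return x * x / 2
    if x < 2:
        return 1 - (2 - x) ** 2 / 2
    return 1.0

x = 0.5
empirical = sum(s <= x for s in sums) / n_samples
print(f"empirical P(X <= {x}) = {empirical:.4f}, triangular CDF = {triangular_cdf(x):.4f}")
```

Adding more uniform terms to each sum would give samples from the Irwin-Hall distribution mentioned in the same list.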

Defined on a semi-infinite interval, usually [0,∞)

- The beta prime distribution.

- The Birnbaum-Saunders distribution, also called the fatigue life distribution, used to model failure times.

- The chi distribution.

- The non-central chi distribution.

- The χ² distribution or Pearson distribution, which is the distribution of the sum of squares of n independent standard Gaussian random variables. It is a special case of the gamma distribution, used in goodness-of-fit problems.

- The inverse chi-square distribution.

- The scaled inverse chi-square distribution.

- The non-central chi-square distribution.

- The Dagum distribution.

- The exponential distribution, which describes the time between two consecutive events in a memoryless process.

- The F distribution, which is the distribution of the ratio of two independent variables $\chi_n^2$ and $\chi_m^2$, each divided by its number of degrees of freedom. It is used, among other things, for analysis of variance through the F test.

- The non-central F distribution.

- The Fréchet distribution.

- The gamma distribution, which describes the waiting time until a given number of events have occurred in a memoryless process.

- The Erlang distribution, the special case of the gamma with an integer parameter k, developed to predict waiting times in queueing systems.

- The inverse gamma distribution.

- The gamma-Gompertz distribution, which is used in models to estimate life expectancy.

- The Gompertz distribution.

- The shifted Gompertz distribution.

- The type-2 Gumbel distribution.

- The Lévy distribution.

- Distributions in which the logarithm of a random variable follows a standard distribution:

  - The log-Cauchy distribution.

  - The log-gamma distribution.

  - The log-Laplace distribution.

  - The log-logistic distribution.

  - The log-normal distribution.

- The Mittag-Leffler distribution.

- The Nakagami distribution.

- Variants of the normal or Gaussian distribution:

  - The folded normal distribution.

  - The half-normal distribution.

  - The inverse Gaussian distribution, also known as the Wald distribution.

- The Pareto distribution and the generalized Pareto distribution.

- The Pearson type III distribution.

- The bi-exponential phase-type distribution, commonly used in pharmacokinetics.

- The bi-Weibull phase-type distribution.

- The Rayleigh distribution.

- The Rayleigh mixture distribution.

- The Rice distribution.

- Hotelling's T² distribution.

- The Weibull distribution or Rosin-Rammler distribution, used to describe the size distribution of certain particles.

- Fisher's Z distribution.
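The "memory-free" property attributed to the exponential distribution in this list means that P(X > s + t | X > s) = P(X > t). The simulation below is a sketch under assumed values (rate 1, s = 1, t = 0.5, fixed seed) and uses `random.expovariate` from the standard library.

```python
import math
import random

rng = random.Random(7)            # fixed seed so the run is repeatable
lam, s, t = 1.0, 1.0, 0.5         # illustrative rate and time offsets (assumptions)
samples = [rng.expovariate(lam) for _ in range(200_000)]

# Keep only the waiting times that have already exceeded s ...
survivors = [x for x in samples if x > s]
# ... and estimate the chance that they last at least t longer.
conditional = sum(x > s + t for x in survivors) / len(survivors)

unconditional = math.exp(-lam * t)   # exact P(X > t) for the exponential
print(f"P(X > s+t | X > s) ~ {conditional:.4f}, P(X > t) = {unconditional:.4f}")
```

The two values agree up to sampling noise: having already waited a time s does not change the distribution of the remaining waiting time.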

Defined on the complete real line

- The distribution of Behrens-Fisher, which arises in the Behrens-Fisher problem.

- The Cauchy distribution, an example of a distribution that has neither expectation nor variance. In physics it is called the Lorentz function and is associated with several processes.

- Chernoff's distribution.

- The stable distribution or Lévy skew alpha-stable distribution, a family of distributions used in a multitude of fields. The normal, Cauchy, Holtsmark, Landau and Lévy distributions belong to this family.

- The geometric stable distribution.

- The Fisher-Tippett distribution or generalized extreme value distribution.

- The Gumbel or log-Weibull distribution, a special case of the Fisher-Tippett distribution.

- The type-1 Gumbel distribution.

- The distribution of Holtsmark, an example of a distribution with finite expectation but infinite variance.

- Hyperbolic distribution.

- The hyperbolic secant distribution.

- Johnson's SU distribution.

- Landau's distribution.

- Laplace's distribution.

- Linnik's distribution.

- The logistic distribution, described by the logistic function.

- The generalized logistic distribution.

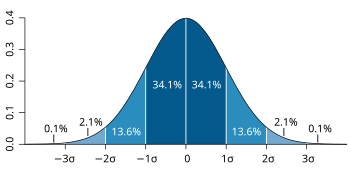

- Map-Airy distribution.

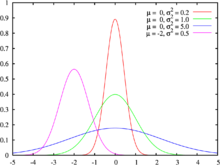

- The normal distribution, also called the Gaussian distribution or Gaussian bell curve. It appears in many natural phenomena because of the central limit theorem: any random variable that can be modeled as the sum of many independent and identically distributed variables with finite expectation and variance is approximately normal.

- The generalized normal distribution.

- The skew normal distribution.

- The exponentially modified Gaussian distribution, the convolution of a normal with an exponential.

- The normal-exponential-gamma distribution.

- The Gaussian minus exponential distribution, the convolution of a normal distribution with a (negative) exponential distribution.

- The Voigt distribution, or Voigt profile, the convolution of a normal distribution and a Cauchy distribution. It is mainly used in spectroscopy.

- The Pearson type IV distribution.

- Student's t distribution, useful for estimating unknown means of a Gaussian population.

- The non-central t distribution.
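The central limit theorem mentioned for the normal distribution can be observed in a small simulation. The sketch below assumes sums of 30 independent U(0, 1) variables (an arbitrary illustrative choice), standardizes them, and compares the fraction falling within one standard deviation with the corresponding normal probability.

```python
import random
from statistics import NormalDist

rng = random.Random(1)        # fixed seed so the run is repeatable
n_terms = 30                  # variables added per sum (assumption)
n_sums = 20_000               # number of simulated sums (assumption)

mean = n_terms * 0.5          # a U(0, 1) variable has mean 1/2
std = (n_terms / 12) ** 0.5   # and variance 1/12

def standardized_sum():
    """Sum n_terms uniform draws, then subtract the mean and divide by the std."""
    total = sum(rng.random() for _ in range(n_terms))
    return (total - mean) / std

z_values = [standardized_sum() for _ in range(n_sums)]
within_one = sum(-1 < z <= 1 for z in z_values) / n_sums

Z = NormalDist()              # standard normal for comparison
normal_value = Z.cdf(1) - Z.cdf(-1)
print(f"simulated: {within_one:.4f}, normal: {normal_value:.4f}")
```

The uniform terms are not themselves bell-shaped, yet their standardized sum already behaves much like a standard normal variable.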

Defined in a variable domain

- The Fisher-Tippett distribution or generalized extreme value distribution may be defined on the whole real line or on a bounded interval, depending on its parameters.

- The generalized Pareto distribution is defined on a domain that may be bounded below or bounded at both ends.

- Tukey's lambda distribution may be defined on the whole real line or on a bounded interval, depending on its parameters.

- Wakeby's distribution.

Discrete/continuous mixed distributions

- The rectified Gaussian distribution, a normal distribution in which negative values are replaced by a discrete value at zero.
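A mixed distribution of this kind has both a point mass and a continuous part. The sketch below assumes a standard normal variable whose negative values are replaced by zero, so roughly half of the probability should sit exactly at zero; the seed and sample size are illustrative.

```python
import random

rng = random.Random(3)     # fixed seed so the run is repeatable

# Replace every negative draw of a standard normal by exactly 0.0.
samples = [max(0.0, rng.gauss(0.0, 1.0)) for _ in range(100_000)]

# Discrete part: probability mass concentrated at the single point zero.
mass_at_zero = sum(x == 0.0 for x in samples) / len(samples)
# Continuous part: the strictly positive values keep their normal-shaped density.
positive = [x for x in samples if x > 0.0]

print(f"mass at zero ~ {mass_at_zero:.4f}, positive samples: {len(positive)}")
```

The distribution function of such a variable jumps at zero and then increases continuously, which is why it fits neither the purely discrete nor the purely continuous class.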

Multivariate distributions

- The Dirichlet distribution, a generalization of the beta distribution.

- The Ewens sampling formula or multivariate Ewens distribution, the probability distribution over all the partitions of an integer n, used in population genetics.

- The Balding-Nichols model, used in population genetics.

- The multinomial distribution, a generalization of the binomial distribution.

- The multivariate normal distribution, a generalization of the normal distribution.

- The negative multinomial distribution, a generalization of the negative binomial distribution.

- The generalized multivariate log-gamma distribution.
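To illustrate a multivariate case, the sketch below draws from a Dirichlet distribution using the standard construction of normalizing independent gamma variables. The concentration parameters `alpha`, the seed and the helper name `dirichlet_sample` are assumptions made for this example; with only two parameters the first component would follow a beta distribution.

```python
import random

rng = random.Random(5)        # fixed seed so the run is repeatable
alpha = (2.0, 3.0, 5.0)       # illustrative concentration parameters (assumption)

def dirichlet_sample(alpha, rng):
    """Draw one Dirichlet vector by normalizing independent Gamma(a, 1) draws."""
    gammas = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(gammas)
    return [g / total for g in gammas]

samples = [dirichlet_sample(alpha, rng) for _ in range(20_000)]

# The mean of component i is alpha[i] / sum(alpha); check the first component.
mean_first = sum(s[0] for s in samples) / len(samples)
print(f"mean of first component ~ {mean_first:.4f}, expected {alpha[0] / sum(alpha):.4f}")
```

Every sampled vector has non-negative components that add up to one, so each sample can itself be read as a probability distribution over three categories.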

Matrix distributions

- The Wishart distribution

- The inverse Wishart distribution

- The matrix normal distribution

- The tripartite distribution

Non-numeric distributions

- The categorical distribution

Miscellaneous distributions

- The Cantor distribution

- The phase-type distribution
