Parallel computing

Parallel computing is a form of computing in which many instructions are executed simultaneously, operating on the principle that large problems can often be broken down into smaller ones, which are then solved simultaneously (in parallel). There are several different forms of parallel computing: bit-level parallelism, instruction-level parallelism, data parallelism, and task parallelism. Parallelism has been used for many years, primarily in high-performance computing, but interest in it has recently grown due to physical limitations that prevent increased frequency. Such as power consumption—and consequently heat generation—of computers is a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, primarily in the form of multicore processors.

Parallel computers can be classified according to the level of parallelism their hardware supports: computers with multicore and multi-processor processors that have multiple processing elements within a single machine, and clusters, MPPS, and grids that use multiple computers to work on the same task. Many times, to speed up specific tasks, specialized parallel computing architectures are used alongside traditional processors.

Parallel computer programs are more difficult to write than sequential ones, because concurrency introduces new types of software bugs, race conditions being the most common. Communication and synchronization between different subtasks are some of the biggest obstacles to getting good performance from the parallel program.

The maximum possible speedup of a program as a result of parallelization is known as Amdahl's law.

Basics

Traditionally, computer programs have been written for serial computing. To solve a problem, an algorithm is built and implemented as a serial stream of instructions. These instructions are executed in a central processing unit in a computer. Only one instruction can be executed at a time, and some time after the instruction has finished, the next one is executed.

Parallel computing, by contrast, uses multiple processing elements simultaneously to solve a problem. This is achieved by dividing the problem into independent parts so that each processing element can execute its part of the algorithm simultaneously with the others. Processing elements are diverse and include resources such as a multi-processor computer, multiple networked computers, specialized hardware, or any combination of the above.

Increased frequency was the dominant reason for improvements in computer performance from the mid-1980s to 2004. The execution time of a program is equal to the number of instructions multiplied by the average time per instruction. Holding everything else constant, increasing the clock frequency reduces the average time it takes for an instruction to execute, thus increasing the frequency reduces the execution time of computer programs.

However, the power consumption of a chip is given by the equation P = C × V2 × F, where P is the power, C is the capacitance change per clock cycle —proportional to the number of transistors whose inputs change—, V is the voltage, and F is the processor's frequency (cycles per second). An increase in frequency increases the amount of power used in a processor. Increased processor power consumption led Intel in May 2004 to cancel its Tejas and Jayhawk processors, generally cited as the end of frequency scaling as the dominant paradigm of computer architecture.

Moore's law is the empirical observation that the transistor density in a microprocessor doubles every 18 to 24 months. Despite power consumption issues, and repeated predictions of its doom, Moore's law Moore is still going strong. With the end of the frequency increase, these additional transistors—no longer used for frequency increase—can be used to add additional hardware that enables parallel computing.

Amdahl's Law and Gustafson's Law

Ideally, the speedup from parallelization is linear, doubling the number of processing elements should halve the execution time, and doubling it a second time should again halve the time. However, very few parallel algorithms achieve optimal speedup. Most have a nearly linear speedup for a small number of render elements, and it becomes constant for a large number of render elements.

The potential acceleration of an algorithm on a parallel computing platform is given by Amdahl's law, originally formulated by Gene Amdahl in the 1960s. It points out that a small portion of the program that cannot be paralleled will limit the acceleration achieved with the parallelization. Programs that solve mathematical or naivel problems typically consist of several paralyzable parts and several non-parallelable (sequential). Yeah. α α {displaystyle alpha } is the fraction of time a program spends on non-sliding parts, then

- S=1α α =limP→ → ∞ ∞ 11− − α α P+α α {displaystyle S={frac {1}{alpha }}}{qquad qquad =lim _{Pto infty }{frac {1}{{{frac {1-alpha }{P}}} +alpha }}}}}}

is the maximum acceleration that can be achieved by parallelizing the program. If the sequential part of the program takes up 10% of the execution time, no more than 10x speedup can be achieved, regardless of how many processors are added. This puts an upper limit on the usefulness of adding more parallel execution units. “When a task cannot be split due to sequential constraints, applying more effort has no effect on the schedule. The gestation of a child takes nine months, no matter how many women are assigned to it."

Gustafson's law is another computer law that is closely related to Amdahl's law. It notes that the speed increase with P{displaystyle P} processors are

- S(P)=P− − α α (P− − 1)=α α +P(1− − α α ).{displaystyle S(P)=P-alpha (P-1)qquad =alpha +P(1-alpha).

Both laws assume that the running time of the sequential part of the program is independent of the number of processors. Amdahl's law assumes that the entire problem is of fixed size, so the total amount of work to be done in parallel is also independent of the number of processors, while Gustafson's law assumes that the total amount of work to be will do in parallel varies linearly with the number of processors.

Dependencies

Understanding data dependency is critical in implementing parallel algorithms. No program can execute faster than the longest chain of dependent calculations (known as the critical path), since calculations that depend on previous calculations in the chain must be executed in order. However, most algorithms do not just consist of a long chain of dependent computations; there are usually opportunities to run independent computations in parallel.

Let Pi and Pj be two segments of the program. Bernstein's conditions describe when the two segments are independent and can be executed in parallel. For Pi, let Ii all the input variables and Oi the output variables, and likewise for Pj. P i and Pj are independent if satisfy

- Ij Oi=∅ ∅ ,{displaystyle I_{j}cap O_{i}=varnothing,}

- Ii Oj=∅ ∅ ,{displaystyle I_{i}cap O_{j}=varnothing,}

- Oi Oj=∅ ∅ .{displaystyle O_{i}cap O_{j}=varnothing.

A violation of the first condition introduces a stream dependency, corresponding to the first segment producing a result used by the second segment. The second condition represents an anti-dependency, when the second segment (Pj) produces a variable that the first segment ( Pi). The third and last condition represents an output dependency: When two segments write to the same place, the result comes from the last executed segment.

Consider the following functions, which demonstrate various types of dependencies:

1: Dep(a, b) function 2: c: = a · b 3: d: = 3 · c 4: End function

Operation 3 in Dep(a, b) cannot be executed before—or even in parallel with—operation 2, since a result of operation 2 is used in operation 3. This violates condition 1, and thus introduces a stream dependency.

1: NoDep function (a, b) 2: c: = a · b 3: d: b = 3 · 4: e: = a + b 5: end function

In this example, there are no dependencies between the statements, so they can all be executed in parallel.

Bernstein's conditions do not allow memory to be shared between different processes. For this reason, some means are necessary to impose an ordering between the accesses, such as traffic lights, barriers or some other method of synchronization.

Race Conditions, Mutual Exclusion, Synchronization, and Parallel Deceleration

Subtasks in a parallel program are often called threads. Some parallel computing architectures use smaller, lighter versions of threads known as threads, while others use larger versions known as processes. However, "threads" is generally accepted as a generic term for subtasks. Threads will often have to update some variables that are shared between them. The instructions between the two programs can be interleaved in any order. For example, consider the following program:

| Hilo A | Hilo B |

| 1A: Read variable V | 1B: Read variable V |

| 2A: Add 1 to variable V | 2B: Add 1 to variable V |

| 3A: Write in variable V | 3B: Write in variable V |

If instruction 1B is executed between 1A and 3A, or if instruction 1A is executed between 1B and 3B, the program will produce incorrect data. This is known as a race condition. The programmer must use a lock (lock) to provide mutual exclusion. A lock is a programming language construct that allows a thread to take control of a variable and prevent other threads from reading or writing it, until the variable is unlocked. The thread holding the lock is free to execute its critical section—the section of a program that requires exclusive access to some variable—and unlock the data when done. Therefore, to ensure proper execution of the program, the above program can be rewritten using locks:

| Hilo A | Hilo B |

| 1A: Variable Block V | 1B: Variable Block V |

| 2A: Read variable V | 2B: Read variable V |

| 3A: Add 1 to variable V | 3B: Add 1 to variable V |

| 4A: Write in variable V | 4B: Write in variable V |

| 5A: Unlock variable V | 5B: Unlock variable V |

One thread will successfully lock the variable V, while the other thread will not be able to continue until V is unlocked. This guarantees the correct execution of the program. While locks are necessary to ensure proper program execution, they can greatly slow down a program.

Locking multiple variables using non-atomic locks introduces the possibility of the program reaching a deadlock (deadlock). An atomic lock locks multiple variables at once, if it can't lock all of them then none of them are locked. If there are two threads and they each need to lock the same two variables using non-atomic locks, it is possible for one thread to lock one of them and the other to lock the second variable. In such a case a deadlock occurs where no thread can complete the execution.

Many parallel programs require their subtasks to act in sync. This requires the use of a barrier. Barriers are typically implemented using a lock. A class of algorithms, known as lock-free and wait-free algorithms, avoid the use of locks and barriers. However, this approach is generally difficult to implement and requires properly designed data structures.

Not all parallelizations lead to speedup. Typically, as a task is divided into more and more threads, these threads spend an increasing portion of their time communicating with each other. Eventually, the communication overhead dominates the time taken to solve the problem, and additional parallelization—splitting the workload among even more threads—increases the amount of time required to finish. This is known as parallel deceleration.

Fine-grained, coarse-grained, and embarrassing parallelism

Apps are often categorized by how often their subtasks sync or communicate with each other. An application exhibits fine-grained parallelism if its subtasks need to communicate many times per second, coarse-grained parallelism if they don't communicate many times per second, and embarrassingly parallel if they never or rarely have to communicate. Embarrassingly parallel apps are considered the easiest to parallelize.

Consistency models

Parallel programming languages and parallel computers must have a data consistency model—also known as a memory model. The consistency model defines rules for operations on the computer's memory and how the results are produced.

One of the first consistency models was Leslie Lamport's sequential consistency model. Sequential consistency is the property of a program that its parallel execution produces the same results as a sequential program. Specifically, it is a consistent sequential program if "...the results of an execution are the same as those obtained if the operations of all processors are executed in a sequential order, and the operations of each individual processor appear in this sequence in the order specified by the program".

Transactional memory is a type of consistency model. Transactional memory borrows from database theory the concept of atomic transactions and applies it to memory accesses.

Mathematically, these models can be represented in several ways. Petri nets, which were introduced in 1962 as Carl Adam Petri's doctoral thesis, were an early attempt to codify the rules of consistency models. Later, data flow architectures were created to physically implement the ideas of data flow theory. In the early 1970s, process calculus such as Systems Communication and Sequential Process Communication were developed to allow algebraic reasoning about systems composed of interacting elements. More recent additions to the process calculus family, such as π-calculus, have added the ability to reason about dynamic topologies. Logics such as Lamport's TLA+, and mathematical models have been developed to describe the behavior of concurrent systems.

Flynn's Taxonomy

Michael J. Flynn created one of the first computer classification systems, parallel and sequential programs, now known as Flynn's taxonomy. Flynn classifies programs and computers according to whether they are operating on one or more sets of instructions and whether those instructions are used on one or more sets of data.

| Individual instruction | Multiple instruction | |

| Individual data | SISD | MISD |

| Multiple data | SIMD | MIMD |

The single-instruction-single-data (SISD) classification is equivalent to a fully sequential program. Single-instruction-multiple-data (SIMD) classification is analogous to doing the same operation multiple times on a large data set. This is commonly done in signal processing applications. Multiple-Instructions-Single-Data (MISD) is a classification that is rarely used. Although computer architectures in this category—such as systolic arrays—were designed, very few applications materialized. Multiple-instruction-multiple-data (MIMD) programs are the most common type of parallel programs.

According to David A. Patterson and John L. Hennessy, “Some machines are hybrids of these categories, of course, this classic model has survived because it is simple, easy to understand, and gives a good first approximation. Furthermore, it is, perhaps because of its comprehensibility, the most widely used scheme."

Types of parallelism

Bitwise parallelism

From the advent of large-scale integration (VLSI) as a computer chip manufacturing technology in the 1970s until around 1986, acceleration in computer architecture was largely achieved by doubling the size of the word in the computer, the amount of information the processor can handle per cycle. Increasing the word size reduces the number of instructions the processor must execute to perform an operation on variables whose sizes are greater than the length of the word. word. For example, when an 8-bit processor must add two 16-bit integers, the processor must first add the low-order 8 bits of each integer with the add instruction, then add the high-order 8 bits using the add instruction. carry-add instruction that takes into account the carry bit of the low-order addition, in this case an 8-bit processor requires two instructions to complete a single operation, whereas a 16-bit processor requires a single instruction to complete complete it.

Historically, 4-bit microprocessors were replaced by 8-bit ones, then 16-bit and 32-bit, this general trend came to an end with the introduction of 64-bit processors, which has been a standard in general purpose computing over the past decade.

Parallelism at the instructional level

A computer program is essentially a sequence of instructions executed by a processor. These instructions can be reordered and combined into groups that are then executed in parallel without changing the result of the program. This is known as instructional level parallelism. Advances in instruction-level parallelism dominated computer architecture from the mid-1980s to the mid-1990s.

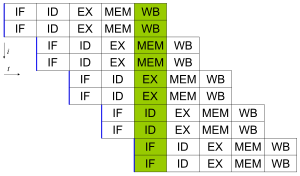

Modern processors have a ''pipeline'' multi-stage instructions. Each stage in the pipeline corresponds to a different action that the processor performs in the instruction corresponding to the stage; a processor with a pipeline of N stages can have up to n different instructions in different stages of completion. The canonical example of a pipelined processor is a RISC processor, with five stages: instruction request, decode, execute, memory access, and write. The Pentium 4 processor had a pipeline of 35 stages.

In addition to the instruction-level parallelism of pipelining, some processors can execute more than one instruction at a time. These are known as superscalar processors. Instructions can be grouped together only if there is no data dependency between them. Scoreboarding and Tomasulo's algorithm—which is similar to scoreboarding but makes use of record renaming—are two of the most common techniques for implementing out-of-order execution and instruction-level parallelization.

Data parallelism

Data parallelism is the parallelism inherent in programs with loops, which focuses on the distribution of data among the different computational nodes that must be treated in parallel. "Cycle parallelization often leads to similar—not necessarily identical—sequences of operations or functions being performed on elements of a large data structure." Many scientific and engineering applications exhibit data parallelism.

A loop termination dependency is the dependency of an iteration of a loop on the output of one or more previous iterations. Loop termination dependencies prevent parallelization of loops. For example, consider the following pseudocode that computes the first few Fibonacci numbers:

1: PREV1:= 0 2: PREV2:= 1 3: do: 4: CUR:= PREV1 + PREV2 5: PREV1:= PREV2 6: PREV2:= CUR 7: while (CUR 10)

This loop cannot be parallelized because CUR depends on itself (PREV2) and PREV1, which are computed on each iteration of the loop. Since each iteration depends on the result of the previous one, they cannot be performed in parallel. As the size of a problem gets larger, the available data parallelization generally does too.

Task parallelism

Task parallelism is the characteristic of a parallel program in which "entirely different computations can be performed on either the same or any different set of data". This is in contrast to data parallelism, where the same computation is performed on different or same data sets. Task parallelism typically does not scale with the size of a problem.

Hardware

Memory and communication

Main memory in a parallel computer can be shared—shared among all processing elements in a single address space—or distributed—each processing element has its own local address space. The term memory distributed refers to the fact that memory is logically distributed, but often implies that it is also physically distributed. Distributed-shared memory and memory virtualization combine the two approaches, where the processor has its own local memory and allows non-local processors access to memory. Accesses to local memory are usually faster than accesses to non-local memory.

Computer architectures in which every element of main memory can be accessed with equal latency and bandwidth are known as Uniform Memory Access (UMA) architectures. Typically, it can only be achieved with a shared memory system, where the memory is not physically distributed. A system that does not have this property is known as a non-uniform memory access (NUMA) architecture. Distributed memory systems have non-uniform access to memory.

Computer systems often make use of caches, small fast memories located near the processor that store temporary copies of memory values—close, both in the physical and logical sense. Parallel computing systems have difficulties with caches and the possibility of incorrect program execution because the same value may be stored in more than one place. These computers require system cache consistency, typically keeping track of cached values and strategically deleting them, ensuring proper program execution. Bus sniffing is one of the most common methods of keeping track of the values being accessed. The design of large, high-performance cache coherence systems is a very difficult problem in computer architecture. As a result, shared memory architectures are not as scalable as distributed memory systems.

Processor-processor and processor-memory communication can be implemented in hardware in several ways: via shared memory—either multiport or multiplexed—, a crossbar switch, or a crossbar switch, a shared bus or an interconnected network of a wide variety of topologies such as star, ring, tree, hypercube, coarse hypercube -a hypercube with more than one processor in a node-, or n-dimensional mesh.

Parallel computers based on interconnected networks must have some kind of routing to allow messages to pass between nodes that are not directly connected. The medium used for communication between the processors of large multiprocessor machines is likely to be hierarchical.

Parallel Computer Classes

Parallel computers can be classified according to the level at which the hardware supports parallelism. This classification is analogous to the distance between basic computing nodes. These are not mutually exclusive, for example symmetric multiprocessor clusters are relatively common.

Multicore Computing

A multicore processor is a processor that includes multiple execution units (cores) on the same chip. Superscalar processors can execute multiple instructions per cycle of an instruction stream (thread), unlike this, a multicore processor can execute multiple instructions per cycle of multiple instruction sequences. Each core in a multicore processor can potentially be superscalar, that is, in each cycle, each core can execute multiple instructions from an instruction stream.

The ''Multithreading'' Simultaneous—of which Intel HyperThreading is the best known—was a form of pseudo-multicore. A concurrent multithreading capable processor has a single execution unit (core), but when that execution unit is idle—for example, during a cache miss—it is used to process a second thread.. IBM's Cell microprocessor, designed for use in the Sony PlayStation 3 console, is another prominent multicore processor.

Symmetric Multiprocessing

A symmetric multiprocessor (SMP) is a computer system with multiple identical processors that share memory and are connected via a bus. Bus contention prevents scaling in this architecture. As a result, SMPs generally comprise no more than 32 processors. "Due to the small size of the processors and the significant reduction in bus bandwidth requirements, such symmetric multiprocessors are extremely cost-effective, provided there are a sufficient number of them." of bandwidth”.

Cluster Computing

A cluster is a group of loosely coupled computers that work closely together so that in some respects they can be thought of as a single computer. Clusters are made up of several independent machines connected by a network. While the machines in a cluster have to be symmetric, otherwise load balancing is more difficult to achieve. The most common type of cluster is the Beowulf cluster, which is a cluster implemented with multiple identical business computers connected to a TCP/IP Ethernet local area network. Beowulf technology was originally developed by Thomas Sterling and Donald Becker. The vast majority of TOP500 supercomputers are clusters.

Massively Parallel Processing

A massively parallel processor (MPP) is a single computer with multiple processors networked together. They have many of the features of clusters, but have specialized interconnection networks—whereas clusters use off-the-shelf hardware for networking. MPPs also tend to be larger than clusters, with "much more" than 100 processors. In an MPP, "each CPU has its own memory and a copy of the operating system and application. Each subsystem communicates with the others through a high-speed interconnect."

Distributed computing

Distributed computing is the most distributed form of parallel computing. Computers that communicate over the Internet are used to work on a given problem. Due to the low bandwidth and extremely high latency of the Internet, distributed computing is typically only concerned with embarrassingly parallel problems. Many distributed computing applications have been created, SETI@home and Folding@home being the best known examples.

Most distributed computing applications use middleware, software that sits between the operating system and the application to manage network resources and standardize the software interface. The most common is the Berkeley Open Infrastructure for Network Computing (BOINC). Distributed computing programs often make use of "spare cycles," performing calculations when a computer's processor is idle.

Specialized Parallel Computers

Within parallel computing, there are specialized parallel devices that generate interest. Although they are not domain specific, they tend to be applicable to only a few classes of parallel problems.

Reconfigurable computing with programmable gate arrays

Reconfigurable computing is the use of a programmable gate array (FPGA) as a coprocessor for a general purpose computer. An FPGA is essentially a computer chip that can be reconfigured for a given task.

FPGAs can be programmed with hardware description languages such as VHDL or Verilog. However, programming languages can be tedious. Several vendors have created "C to HDL" languages that try to emulate the syntax and/or semantics of the C programming language, with which most programmers are familiar. The most popular "C to HDL" languages are Mitrion-C, C Impulse, DIME C and C-Handel. Specific subsets of SystemC based on C++ can also be used for this purpose.

AMD's decision to open up HyperTransport to other vendors has made it the technology that enables high-performance reconfigurable computing. According to Michael D'Amour R., COO of DRC Computer Corporation, “When we entered AMD, they called us socket thieves. Now they call us partners."

General Purpose Computing on Graphics Processing Units (GPGPU)

General purpose computing on graphics processing units (GPGPUs) is a relatively recent trend in computer engineering research. GPUs are co-processors that have been heavily optimized for computer graphics processing. Computer graphics processing is a field dominated by operations on parallel data, particularly linear algebra and matrix operations.

In the beginning, GPGPU programs typically used the graphics API to run programs. However, several new programming languages and platforms have been built to perform general purpose computing on GPUs, both Nvidia and AMD have released programming environments with CUDA and the Stream SDK, respectively. Other GPU programming languages include: BrookGPU, PeakStream, and RapidMind. Nvidia has also released computing-specific products in its Tesla series. The Khronos Group technology consortium has released OpenCL, which is a framework for writing programs that run on different platforms made up of CPUs and GPUs. AMD, Apple, Intel, Nvidia and others are supporting OpenCL.

Application Specific Integrated Circuits

Several application-specific integrated circuits (ASICs) have been designed to deal with parallel applications.

Because an ASIC (by definition) is specific to a given application, it can be fully optimized for that application. As a result, for a given application, an ASIC tends to outperform a general purpose computer. However, ASICs are created using X-ray lithography. This process requires a mask, which can be extremely expensive. A mask can cost over a million dollars. The smaller the transistors needed for the chip, the more expensive the mask will be. Meanwhile, increased performance in general-purpose computers—as described by Moore's Law—tends to eliminate this difference in just one or two generations of chips. The high initial cost, and the tendency to be outpaced by Moore's law has made the use of ASICs unfeasible for most parallel applications. However, a few have been built, one example being the RIKEN MDGRAPE-3 peta-flop machine that uses ASICs for molecular dynamics simulation.

Vector processors

A vector processor is a CPU or computer system that can execute the same instruction on large data sets. «Vector processors have high-level operations that work on linear arrays of numbers or vectors. An example of an operation on vectors is: A = B × C, where A, B, and C are 64-element vectors, where each is a 64-bit floating-point number". They are closely related to Flynn's SIMD classification.

Cray computers became famous for their vector processing in the 1970s and 1980s. However, vector processors, both CPUs and computer systems, have disappeared. The instruction sets of modern processors include some vector processing instructions, for example: AltiVec and Streaming SIMD Extensions (SSE).

Software

Parallel Programming Languages

Concurrent programming languages, libraries, APIs, and parallel programming models have been created for programming parallel computers. These can generally be divided into classes based on the assumptions made about the underlying memory architecture: shared, distributed, or shared-distributed. Shared memory programming languages communicate by manipulating variables in shared memory. Message passing is used in the distributed memory architecture. POSIX Threads and OpenMP are two of the most widely used APIs with shared memory, while the Message Passing Interface (MPI) is the most widely used API in message passing systems. The concept "value" future" is widely used in parallel program programming, where one part of a program promises to provide required data to another part of the program at a future time.

The companies CAPS enterprise and Pathscale are trying to convert the HMPP (Hybrid Multicore Parallel Programming) directives into an open standard called OpenHMPP. The directive-based OpenHMPP programming model provides a syntax for efficiently offloading computations onto hardware accelerators and optimizing data movement to and from hardware memory. OpenHMPP directives describe remote procedure calls (RPC) on an accelerator device—for example, the GPU—or more generally a set of kernels. Directives allow you to annotate C or Fortran code to describe two groups of functionality: downloading procedures to a remote device and optimizing data transfers between CPU main memory and accelerator memory.

Automatic parallelization

Automatic parallelization of a sequential program by a compiler is the holy grail of parallel computing. Despite decades of work by researchers, automatic parallelization has had limited success.

Major parallel programming languages remain explicitly parallel or at best partially implicit, in which a programmer gives the compiler parallelization directives. Few fully implicit parallel programming languages exist: SISAL, Parallel Haskell, and (for FPGAs) Mitrion C.

Checkpoint

As a computer system grows in complexity, the mean time between failures typically decreases. An application checkpoint is a technique whereby the computer system takes a "snapshot" of the application, a record of all current resource allocations and variable states, similar to a memory dump, this information can be used to restore the program if the computer fails. Having a checkpoint means that the program can restart from it and not from the beginning. While checkpoints provide benefits in a variety of situations, they are especially useful in highly parallel systems with large numbers of processors that are used in high performance computing.

Parallel commands

The processes of a parallel command must be unconnected to any other command, this considering that no variable is mentioned to occur as a target variable in any other process. The subscripts are integer constants that serve as the name for the command list. So a process whose label subscripts include one or more ranges for a series of processes, each with the same label and command list each have a combination of different values that are substituted for the combined variables. A parallel command specifies the concurrent execution of its constituent processes. They all start simultaneously and the parallel command ends successfully only as long as they all have finished successfully.

Algorithmic methods

As parallel computers get bigger and faster, it becomes feasible to solve problems that used to take too long to run. Parallel computing is used in a wide range of fields, from bioinformatics (protein folding and sequence analysis) to economics (financial mathematics). The types of problems commonly encountered in parallel computing applications are:

- Linear algebra densa

- Linear algebra dispersed

- Spectral methods (such as Cooley-Tukey's quick Fourier transformation)

- N-bodies problems (such as the Barnes-Hut simulation)

- Problems grids Structures (Lattice Boltzmann Methods)

- Problems grids unstructured (such as those found in the analysis of finite elements)

- Monte Carlo Simulation

- Combination logic (e.g., brute force cryptographic techniques)

- Guided by graphs (e.g., system algorithms)

- Dynamic programming

- Ramification and pruning methods

- Graph models (such as the detection of hidden Markov models and the construction of bayesian networks)

- Simulation of finite automatons

History

The origins of true parallelism (MIMD) can be traced back to Federico Luigi, Menabrea Conte and their "Sketch of the Analytical Engine Invented by Charles Babbage". IBM introduced the IBM 704 in 1954, through a project at the that Gene Amdahl was one of the main architects. It became the first commercially available computer to use fully automatic floating-point arithmetic commands.

In April 1958, S. Gill (Ferranti) discussed parallel programming and the need for branching and waiting. Also in 1958, IBM researchers John Cocke and Daniel Slotnick first discussed the use of the parallelism in numerical computations. Burroughs Corporation introduced the D825 in 1962, a four-processor computer that accesses up to 16 memory modules via a crossbar switch. In 1967, Amdahl and Slotnick published a discussion on the feasibility of parallel processing at the American Federation of Information Processing Societies Conference. It was during this debate that Amdahl's Law was coined to define the speedup limits that can be achieved due to parallelism.

In 1969, the American company Honeywell introduced its first Multics system, a symmetrical multiprocessor system capable of running up to eight processors in parallel. In 1970, C.mmp, a project at Carnegie Mellon University with multiple processors, was launched. "one of the first multiprocessors with more than a few processors". "The first bus with multi-processor connection and spy cache was the Synapse N+1 in 1984".

SIMD parallel computers date back to the 1970s. The motivation behind the first SIMD computers was to amortize the gate delay of the processor control unit over multiple instructions. In 1964, Slotnick had proposed the construction of a massively parallel computer for the Lawrence Livermore National Laboratory. Its design was funded by the United States Air Force, which was the first effort to achieve SIMD parallel computing. The key to its design was fairly high parallelism, with up to 256 processors, allowing the machine to work on large data sets in what would later be known as vector processing. However, ILLIAC IV was called "the most infamous of supercomputers", as only a quarter of the project had been completed. It took 11 years, costing almost four times the original estimate. When it was ready to run a real application for the first time in 1976, it was surpassed by commercial supercomputers, such as the Cray-1.

Further reading

- Rodriguez, C.; Villagra, M.; Baran, B. (August 29, 2008). «Asynchronous team algorithms for Boolean Satisfiability». Bio-Inspired Models of Network, Information and Computing Systems, 2007. Bionetics 2007. 2nd (in English): 66-69. doi:10.1109/BIMNICS.2007.4610083.

Contenido relacionado

Aerospace engineering

European Organization for Space Research

Alternator