Optimization (mathematics)

In mathematics, statistics, empirical sciences, computer science or economics, optimization (also, mathematical optimization or mathematical programming) is the selection of the best item (with respect to some criterion) from a set of available items. Operations research is one of the fields of mathematics on whose bases optimization works.

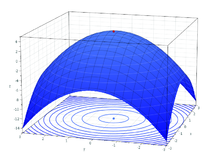

In the simplest case, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values (from an allowed set) and computing the value of the function. The generalization of optimization theory and techniques to other formulations comprises a large area of applied mathematics. In general, optimization includes discovering the "best values" of some objective function given a defined domain, including a variety of different types of objective functions and different types of domains.

Optimization refers to the action and effect of optimizing. In general terms, it refers to the ability to do or solve something in the most efficient way possible and, in the best of cases, using the least amount of resources.

In recent decades, the term optimization has been linked to the world of computing. However, it is a concept that is also used in mathematics, process management and economics.

Optimization issues

An optimization problem can be represented as follows:

- Dada: a function f: A → → {displaystyle to } R.

- Search: an element x0 in A such as f(x0) ≤ f(x) for everything x in A or such that f(x0≥ f(x) for everything x in A (“maximization”).

Such a formulation is called an optimization problem or a mathematical programming problem (a term not directly related to computer programming but still in use, for example in linear programming - see the History section). Many theoretical and real-world problems can be modeled using this general scheme. Problems formulated using this technique in the fields of physics and computer vision refer to the technique as energy minimization, speaking of the value of the function f representing the energy of the system being modeled.

Typically, A is some subset of the Euclidean space Rn, often bounded by a set of constraints, equalities or inequalities that the elements of A have to satisfy. The domain A of f is called the search space or the choice set, while the elements of A are called candidate solutions or feasible solutions.

The function f is variously called the objective function, cost function (minimization), utility function (maximization), indirect utility function (minimization), or, in certain fields, energy function, or functional energy. A feasible solution that minimizes (or maximizes, if this is the purpose) the objective function is called an optimal solution.

By convention, the standard format of an optimization problem is stated in terms of minimization. Generally, unless both the objective function and the feasible region are convex in a minimization problem, there can be several local minima, where a local minimum x* is defined as a point for which there exists some δ > 0, where for all x such that

- x− − x⋆ ⋆ ≤ ≤ δ δ {displaystyle Δmathbf {x} -mathbf {x} ^{star }{associatedleq delta ,}

expression

- f(x⋆ ⋆ )≤ ≤ f(x){displaystyle f(mathbf {x} ^{star })leq f(mathbf {x})}

is true; that is, in some region around x*, all values of the function are greater than or equal to the value at that point. The local maximum is defined in a similar way.

A large number of algorithms proposed to solve non-convex problems – including most of the commercially available solvers – are not able to make a distinction between local optimal solutions and rigorous optimal solutions, and treat the former as current solutions. of the original problem. The branch of applied mathematics and numerical analysis that is responsible for the development of deterministic algorithms that are capable of guaranteeing convergence in finite time to the real optimal solution of a non-convex problem is called global optimization.

Notation

Optimization problems are often expressed in a special notation. Here are some examples.

Minimum and Maximum value of a function

Consider the following notation:

- minx한 한 R(x2+1){displaystyle min _{xin mathbb {R} };(x^{2}+1)}

This denotes the minimum value of the objective function x2+1{displaystyle x^{2}+1}When x is selected from the set of real numbers R{displaystyle mathbb {R} }. The minimum value in this case is 1{displaystyle 1} and happens to x=0{displaystyle x=0}.

Similarly, the notation

- maxx한 한 R2x{displaystyle max _{xin mathbb {R} };2x}

expresses the maximum value of the objective function 2x, where x is any real number. In this case, there is no such maximum, therefore there is no bounded optimal value.

Optimal input arguments

Consider the following expression:

argminx한 한 (− − ∞ ∞ ,− − 1]x2+1,{displaystyle {underset {xin(-infty-1)}{operatorname {arg,min}} };x^{2}+1,}

or equivalently

argminxx2+1,subject to:x한 한 (− − ∞ ∞ ,− − 1].{displaystyle {underset {x}{operatorname {arg,min}}}};x^{2}+1,{;{text{subject to:};xin (-infty-1]. !

This represents the value (or values) of the argument x in the interval (− − ∞ ∞ ,− − 1]{displaystyle (-infty-1)} that minimize (or maximize) the objective function x2+ 1 (and not the minimum value that achieves the objective function for such values). In this case, the answer is x = -1, since x = 0 is not feasible, i.e. does not belong to the domain of the problem.

Similarly,

- argmaxx한 한 [chuckles]− − 5,5],and한 한 Rx# (and),{displaystyle {underset {xin [-5,5],;yin mathbb {R}}{operatorname {arg,max}}}{;xcos(y),}}

which equivalent to

- argmaxx,andx# (and),subject to:x한 한 [chuckles]− − 5,5],and한 한 R,{displaystyle {underset {x,;y}{operatorname {arg,max}}}};xcos(y),;{text{subject to:}};xin [-5,5],;yin mathbb {R}}}

represents the pair(s) (x,y) that minimize (or maximize) the value of the objective function xcos(y), with the constraint added that x is in the interval [-5,5] (again, the minimum value of the function doesn't matter). In this case, the solutions are pairs of the form (5, 2kπ) and (−5,(2k+1)π), where k loops through all integers.

Arg min and arg max are sometimes written as argmin and argmax, meaning argument from minimum and argument from maximum.

History

Pierre de Fermat and Joseph Louis Lagrange found calculus-based formulas to identify optimal values, while Isaac Newton and Carl Friedrich Gauss proposed iterative methods to approximate the optimum. Historically, the term linear programming to refer to certain optimization problems is due to George B. Dantzig, although much of the theory had been introduced by Leonid Kantorovich in 1939. Dantzig published the Simplex Algorithm in 1947 and John von Neumann developed the theory. of duality in the same year.

The term programming in this context does not refer to computer programming. Rather, the term comes from the US Army's use of programme when referring to proposed training and logistics planning, which was the problem studied by Dantzig at the time.

Other important researchers in the field of mathematical optimization were:[citation needed]

Main subfields

- Convex programming studies the case in which the objective function is convex (minimization) or concave (maximization) and the set of restrictions is convex. This can be seen as a particular case of non-linear programming or as the generalization of linear programming or quadratic convex.

- Linear programming (PL): is a type of convex programming, in which the objective function f is linear and the set of restrictions is specified using only linear equations and inecutions. This set is called polyhedron or polytopo if it is attached.

- Conical programming: is a general form of convex programming. PL, PCSO and PSD can all be seen as conical programs with the appropriate cone type.

- Second-order cone programming (PCSO): is a type of convex programming and includes certain types of quadratic programming problems.

- Semi-defined programme (PSD): is a convex optimization subfield where the fundamental variables are semi-defined matrices. It is a generalization of linear programming and convex quadratic programming.

- Geometric programme: it is a technique by which the objective and the restrictions of inequality expressed as polynomials and the restrictions of equality as mononomies can be transformed into a convex program.

- Programming with integers or Whole programme: studies linear programs in which some or all variables are obliged to take whole values. This is not convex and in general is much more complex than regular linear programming.

- Quad programme: allows the objective function to have quadratic terms, while the feasible set can be specified with linear equations and ineffects. For specific forms of the quadratic term, this is a type of convex programming.

- Fractionary programme: studies the optimization of reasons for two non-linear functions. The special class of concave fractional programs can be transformed into a convex optimization problem.

- Non-linear programme: studies the general case in which the objective function, or the restrictions, or both contain non-linear parts. This may or may not be a convex program. In general, if the program is convex it affects the difficulty of resolution.

- Stochastic programming u Stochastic optimization: studies the case in which any of the restrictions or parameters depends on random variables.

- Robust programming: like stochastic programming, it is an attempt to capture uncertainty in the fundamental data of the optimization problem. This is done by using random variables, but instead, the problem is solved by taking into account imprecisions in the input data.

- Combined optimization: cares about the problems where the set of feasible solutions is discreet or can be reduced to one.

- dimensional-infinite optimization: studies the case where the set of feasible solutions is a subset of an infinite dimension space, for example a space of functions.

- Heuristics and Metaheuristics: make assumptions about the problem being optimized. Usually, heuristics do not guarantee that any optimal solution is found. Then heuristics are used to find approximate solutions for many complicated optimization problems.

- Restriction satisfaction: studies the case in which the objective function f is constant (this is used in artificial intelligence, particularly in automated reasoning).

- Disjunctive programming: is used when at least one restriction can be satisfied but not all. This is of particular use in programming in a number of subfields. The techniques are designed primarily for optimization in dynamic contexts (i.e. decision-making over time).

- Variance calculation: seeks to optimize a defined goal on many points over time, considering how the objective function changes if the change is small on the path of choice. The optimal control technique is a generalization of this.

- Dynamic programming studies the case in which the optimization strategy is based on the division of the problem into smaller subproblems. The equation that describes the relationship between these subproblems is called Bellman's equation.

- Mathematical Programming with Balance Restrictions is where restrictions include variable or complementary inequalities.

Classification of critical and extreme points

Feasibility of the problem

The solvability of the problem, also called the feasibility of the problem, is the question of whether any feasible solution exists, regardless of its objective value. This can be considered as the special case of mathematical optimization where the objective value is the same for all solutions, and thus any solution is optimal.

Many optimization algorithms need to start from a feasible point. One way to obtain such a point is to relax the feasibility conditions using a slack variable; with enough slack, any starting point is feasible. Then, that slack variable is minimized until the slack is zero or negative.

Existence

Weierstrass's theorem states that a real and continuous function on a compact set reaches its maximum and minimum value. More generally, a lower semi-continuous function on a compact set reaches its minimum; an upper semi-continuous function in a compact set reaches its maximum.

Necessary conditions of optimality

One of Fermat's theorems ensures that the optimums of unrestricted problems are found at stationary points, where the first derivative of the objective function is zero (or its gradient zero). More generally, they can also be found at critical points where the first derivative or gradient of the objective function is undefined, or at the boundary of the choice set. An equation (or set of equations) stating that the first derivative(s) is(are) equal to zero at an interior optimum is called a first-order condition or a set of first-order conditions.

Optima of inequality-constrained problems are instead found using the Lagrange multipliers method. This method computes a system of inequalities called the Karush–Kuhn–Tucker conditions or complementary slack conditions, which are then used to compute the optimum.

Sufficient conditions for optimality

While the first derivative test identifies points that may be extreme, this test does not distinguish whether a point is minimum, maximum, or neither. When the objective function is twice differentiable, these cases can be distinguished by studying the second derivative or the matrix of second derivatives (called the Hessian matrix), in unrestricted problems, or the matrix of second derivatives of the objective function and the constraints called the bordered Hessian matrix, in restricted problems.

Conditions that distinguish maxima, or minima, from other stationary points are called second-order conditions. If a candidate solution satisfies the first-order conditions and the second-order conditions as well, it is sufficient to establish, at least, local optimality.

Sensitivity and continuity of the optimum

The Envelope Theorem describes how the value of an optimal solution changes when an underlying parameter changes. The process that computes this change is called comparative statics.

Claude Berge's (1963) maximum theorem describes the continuity of an optimal solution as a function of underlying parameters.

Optimization calculations

For unrestricted problems with twice differentiable functions, some critical points can be found by detecting the points where the gradient of the objective function is zero (ie, the stationary points). More generally, a zero subgradient certifies that a local minimum has been found for minimization problems with convex or other Lipschitz functions.

In addition, critical points can be classified using the definiteness of the Hessian matrix: if it is positive definite at a critical point, then the point is a local minimum; if it is negative definite, then the point is a local maximum; finally, if it is undefined, then the point is some kind of saddle point.

Restricted problems can often be transformed into unrestricted problems with the help of Lagrange multipliers. Lagrangian relaxation can also provide approximate solutions to difficult restricted problems.

When the objective function is convex, then any local minimum will also be a global minimum. There are efficient numerical techniques to minimize convex functions, for example the interior point methods.

Computational optimization techniques

To solve problems, researchers can use algorithms that end in a finite number of steps, or iterative methods that converge to a solution (in some specific class of problems), or heuristics that can provide approximate solutions to some problems (although their iterations do not necessarily converge.)

Optimization algorithms

- George Dantzig's Simplex Algorithm, designed for linear programming.

- Simplex algorithm extensions, designed for quadratic programming and for linear-fraction programming.

- Simplex algorithm variants that are especially suitable for networking optimization.

- Combinatory algorithms.

Iterative methods

The iterative methods used to solve nonlinear programming problems differ depending on what they evaluate: Hessians, gradients, or just function values. While evaluating Hessians (H) and gradients (G) improves the speed of convergence, such evaluations increase the computational complexity (or computational cost) of each iteration. In some cases, the computational complexity may be excessively high.

An important criterion for optimizers is just the number of function evaluations required, as this is often a large computational effort in itself, usually much more effort than the optimizer itself, since it mostly has to operate on N variables. Derivatives provide detailed information for optimizers, but are even more expensive to calculate, eg approximating the gradient takes at least N+1 function evaluations. For the approximation of the second derivatives (grouped in the Hessian matrix) the number of function evaluations is of order N². Newton's method requires second order derivatives, so for each iteration the number of function calls is of order N², but for the simplest pure gradient optimizer it is of order N. However, gradient optimizers they usually need more iterations than Newton's algorithm. Being better regarding the number of function calls depends on the problem itself.

- Methods that evaluate Hessianas (or approximate Hessianas, using finite differences):

- Newton Method.

- Quadratic sequential programming: a Newton method based on small-half-scale restrictive problems. Some versions can handle large-dimensional problems.

- Newton Method.

- Methods that evaluate gradients or approximate gradients using finite differences (or even subgradient):

- Quasi-Newton methods: iterative methods for medium-large problems (e.g. N.1000).

- conjugated gradient methods: iterative methods for big problems. (In theory, these methods end in a finite number of steps with quadratic objective functions, but this finite termination is not observed in practice in finite precision computers.)

- Inner point methods: this is a great kind of methods for restrictive optimization. Some inner point methods use only subgrade information, and others require Hessian evaluation.

- Descent of gradient (alternatively, steep descent or pronounced ascent): a slow method of theoretical and historical interest, which has been renewed to find approximate solutions of enormous problems.

- Subgradient method: an iterative method for large Lipschitz functions locally using generalized gradients.

- Methods that evaluate only functions values: if a problem is continually differentiated, then gradients can be approached using finite differences, in such case a gradient-based method can be used.

- Interpolation methods.

- Pattern search methods, which have better convergence properties than Nelder-Mead heuristics.

Global convergence

In general, if the objective function is not a quadratic function, then many optimization methods use other methods to ensure that some subsequence of iterations converges to an optimal solution. The first popular method that guarantees convergence relies on linear search, which optimizes a function in one dimension. A second and popular method of ensuring convergence uses trusted regions. Both linear searches and trust regions are used in modern non-differentiable optimization methods. Usually a global optimizer is much slower than advanced local optimizers (eg BFGS), so often an efficient global optimizer can be built by starting the local optimizer from different starting points.

Heuristics

In addition to algorithms (finite completion) and iterative methods (converging), there are heuristics that can provide approximate solutions to some optimization problems:

- Differential evolution.

- Differential search algorithm.

- Dynamic Relaxation.

- Genetic algorithms.

- Ascense of mountains.

- Nelder-Mead: a popular heuristic to approach minimisation (without calls to gradients).

- Optimization by particle swarm.

- Artificial optimization of bee colony.

Contenido relacionado

Karl Weierstrasse

Thousands separator

Eckmann–Hilton argument

![{displaystyle {underset {xin (-infty-1]}{operatorname {arg,min} }};x^{2}+1,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/39c071258fcecb43aaf25920b9833589bc35036c)

![{displaystyle {underset {x}{operatorname {arg,min} }};x^{2}+1,;{text{sujeto a:}};xin (-infty-1].}](https://wikimedia.org/api/rest_v1/media/math/render/svg/b24d2927492dd084a4cfbaf4bccacb3b78d8d167)

![{displaystyle (-infty-1]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/3159526ce3102ba58727dfc08cb732b19fdd0b96)

![{displaystyle {underset {xin [-5,5],;yin mathbb {R} }{operatorname {arg,max} }};xcos(y),}](https://wikimedia.org/api/rest_v1/media/math/render/svg/5a821b5f32ba0a33ed4f712423e44edd613e3696)

![{displaystyle {underset {x,;y}{operatorname {arg,max} }};xcos(y),;{text{sujeto a:}};xin [-5,5],;yin mathbb {R}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/7899c7b79016ffe7f2fac0b1404a6387af147a52)