Numerical analysis

The numerical analysis or numerical calculation is the branch of mathematics in charge of designing algorithms to simulate approximations of solutions to problems in mathematical analysis. It differs from symbolic computation in that it does not manipulate algebraic expressions, but numbers.

Numerical analysis became especially important with the advent of computers. Computers are useful for extremely complex mathematical calculations, but ultimately they deal with binary numbers and simple mathematical operations.

From this point of view, numerical analysis will provide all the scaffolding necessary to carry out all those mathematical procedures that can be expressed algorithmically, based on algorithms that allow their simulation or calculation in simpler processes. using numbers.

Once the error has been defined, along with the admissible error, we move on to the concept of algorithm stability. Many of the mathematical operations can be carried out through the generation of a series of numbers that in turn feed the algorithm again (feedback). This provides a very important calculation and refinement power to the machine that, as it completes a cycle, arrives at the solution. The problem occurs in determining how long the cycle should continue, or if we are moving away from solving the problem.

Finally, another concept parallel to numerical analysis is that of representation, both of numbers and of other mathematical concepts such as vectors, polynomials, etc. For example, for the computer representation of real numbers, the concept of floating point is used, which is far from that used by conventional mathematics.

In general, these methods are applied when a numerical value is needed as a solution to a mathematical problem, and "exact" or "analytical" procedures (algebraic manipulations, theory of differential equations, integration methods, etc.) are unable to give an answer. Due to this, they are frequently used procedures by physicists and engineers, and whose development has been favored by their need to obtain solutions, even if the precision is not complete. It should be remembered that experimental physics, for example, never yields exact values but rather intervals that encompass the vast majority of experimental results obtained, since it is not usual for two measurements of the same phenomenon to yield exactly the same values.

For example, ordinary differential equations appear in celestial mechanics for predicting the motions of planets, stars, and galaxies; numerical linear algebra is important for data analysis; Stochastic differential equations and Markovs chains are essential in living cell simulation for medicine and biology.

Before the advent of modern computers, numerical methods often relied on manual interpolation formulas applied to data from large printed tables. Since the middle of the XX century, computers calculate the necessary functions for them, but many of the same formulas are still used, not however, as part of the software algorithms.

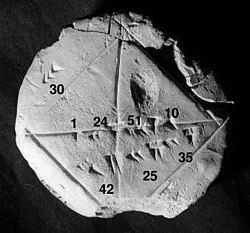

The numerical point of view dates back to the earliest mathematical writings. A tablet from the Yale Babylonian Collection (YBC 7289), gives a sexagesimal numerical approximation of the square root of 2, the length of the diagonal in a unit square.

Numerical analysis continues this long tradition: instead of exact symbolic answers, which can only be applied to real-world measurements by translation to digits, it gives approximate solutions within specified limits of error.

General introduction

The general objective of the field of numerical analysis is the design and analysis of techniques to give approximate but precise solutions to difficult problems, the variety of which is suggested in the following:

- Advanced numerical methods are essential to make numerical prediction of time viable.

- The calculation of the trajectory of a spacecraft requires the precise numerical solution of a system of ordinary differential equations.

- Automobile companies can improve the safety of their vehicles by computer simulations of traffic accidents. These simulations consist essentially of the numerical resolution of partial differential equations.

- Coverage funds (private investment funds) use tools from all fields of numerical analysis to try to calculate the value of shares and derivatives more accurately than other market participants.

- Airlines use sophisticated optimization algorithms to decide the price of tickets, the allocation of planes and crews and fuel needs. Historically, these algorithms have developed in the field of operational research.

- Insurance companies use numerical programs for actuarial analysis.

The remainder of this section outlines several important topics in numerical analysis.

History

The field of numerical analysis predates the invention of modern computers by many centuries. Linear interpolation was already used more than 2000 years ago. Many great mathematicians of the past were concerned with numerical analysis, as can be seen from the names of important algorithms such as Newton's method, Lagrange's interpolation polynomial, Gaussian elimination, or Euler's method.

To make calculations by hand easier, large books were produced with formulas and tables of data such as interpolation points and coefficients of functions. With these tables, often calculated to 16 decimal places or more for some functions, you could look up values to plug into the given formulas and get very good numerical estimates for some functions. The canonical work in this field is the NIST publication edited by Abramowitz and Stegun, a 1000+ page book with a large number of commonly used formulas and functions and their values at many points. Function values are no longer very useful when you have a computer, but the large list of formulas can still be very useful.

The mechanical calculator was also developed as a manual calculation tool. These calculators evolved into electronic computers in the 1940s, and it was then discovered that these computers were also useful for administrative purposes. But the invention of the computer also influenced the field of numerical analysis, since longer and more complicated calculations could now be made.

Direct and iterative methods

Let us consider the problem to solve

- 3x3 + 4 = 28

for the unknown quantity x.

| 3x3 + 4 = 28. | |

| Rest 4 | 3x3 = 24. |

| Split by 3 | x3 = 8. |

| Make the cubic root | x = 2. |

For the iterative method, let's apply the bisection method to f(x) = 3x3 − 24. The initial values are: a = 0, b = 3, f(a) = − 24, f(b) = 57.

| a | b | mid | f(mid) |

|---|---|---|---|

| 0 | 3 | 1.5 | −13.875 |

| 1.5 | 3 | 2.25 | 10.17... |

| 1.5 | 2.25 | 1.875 | −4.22... |

| 1.875 | 2.25 | 2.0625 | 2.32... |

From this table it can be concluded that the solution is between 1.875 and 2.0625. The algorithm could return any number in that range with an error of less than 0.2.

Discretization and numerical integration

In a two-hour race, the speed of the car is measured at three instants and is recorded in the following table:

| Time | 0:20 | 1:00 | 1:40 |

|---|---|---|---|

| km/h | 140 | 150 | 180 |

A discretization would be to say that the speed of the car was constant from 0:00 to 0:40, then from 0:40 to 1:20, and finally from 1:20 to 2:00. For example, the total distance traveled in the first 40 minutes is approximately (2/3 h × 140 km/h) = 93.3 kilometers. This would allow us to estimate the total distance traveled as 93.3 km +100 km +120 km =313.3 km, which is an example of numerical integration (see below) using a Riemann sum, because displacement is the integral of velocity.

Ill-conditioned problem: Take the function f(x) = 1/(x − 1). Note that a change in x of less than 0.1 becomes a change in f(1.1) = 10 and f(1.001) = 1000, out of nearly 1000. Evaluating f (x) near x = 1 is an ill-conditioned problem.

Well-conditioned problem: instead, evaluate the same function f(x) = 1/(x − 1) near x = 10 is a well-conditioned problem. For example, f(10) = 1/9 ≈ 0.111 and f(11) = 0.1, so a modest change in x leads to a modest change in f (x).

Direct methods compute the solution to a problem in a finite number of steps. These methods would give the precise answer if performed in Infinite Precision Arithmetic. Some examples are Gaussian elimination, the QR factorization method for solving systems of linear equations, and the simplex method of linear programming. In practice, finite precision is used and the result is an approximation of the true solution (assuming stability).

Unlike direct methods, iterative methods are not expected to terminate in a finite number of steps. Starting from an initial guess, iterative methods form successive approximations that converge to the exact solution only in the limit. A convergence test, often involving the remainder, is specified to decide when a sufficiently exact solution has been (hopefully) found. Even using infinite-precision arithmetic, these methods would not, in general, reach the solution in a finite number of steps. Some examples are Newton's method, bisection method, and Jacobi's method. In matrix computer algebra, iterative methods are generally necessary for large problems.

Iterative methods are more common than direct methods in numerical analysis. Some methods are direct in principle, but are often used as if they were not, eg GMRES and the conjugate gradient method. For these methods the number of steps required to obtain the exact solution is so great that an approximation is accepted in the same way as for an iterative method.

Problems

The problems of this discipline can be divided into two fundamental groups:

- finite dimension problems: those whose answer is a finite set of numbers, such as algebraic equations, determinants, problems of their own values, etc.

- Problems of infinite dimension: problems in whose solution or approach involve elements described by an infinite number of numbers, such as numerical integration and derivation, calculation of differential equations, interpolation, etc.

Classification according to its nature or motivation

Likewise, there is a subclassification of these two large sections into three categories of problems, according to their nature or motivation for the use of numerical calculus:

- Problems of such complexity that have no analytical solution.

- Problems in which there is an analytical solution, but this, for complexity or other reasons, cannot be exploited simply in practice.

- Problems for which there are simple methods but which, for elements that are used in practice, require an excessive amount of calculations; greater than that required for a numerical method.

Areas of study

Numerical analysis is divided into different disciplines according to the problem to be solved.

Calculating the values of a function

One of the simplest problems is the evaluation of a function at a given point. For polynomials, one of the most used methods is the Horner algorithm, since it reduces the number of operations to perform. In general, it is important to estimate and control round-off errors that occur from the use of floating-point arithmetic.

Extrapolation is very similar to interpolation, except now we want to find the value of the unknown function at a point that is not between the given points.

Regression is also similar, but it takes into account that the data is imprecise. Given some points, and a measure of the value of the function at them (with an error due to the measurement), we want to determine the unknown function. The method of least squares is a popular way to do this.

Solving Equations and Systems of Equations

Another fundamental problem is to calculate the solution of a given equation or equation system. Two cases are distinguished depending on whether the equation or equation system is or not linear. For example, the equation 2x+5=3{displaystyle 2x+5=3} is linear while the second degree equation 2x2+5=3{displaystyle 2x^{2}+5=3} It's not.

Much effort has been put into developing methods for solving systems of linear equations. Direct methods, i.e., methods that use some factorization of the matrix are the Gaussian elimination method, the LU decomposition, the Cholesky decomposition for positive definite symmetric (or Hermitian) matrices, and the QR decomposition. Iterative methods such as the Jacobi method, the Gauss-Seidel method, the method of successive approximations, and the conjugate gradient method are frequently used for large systems.

In the numerical resolution of nonlinear equations, some of the best known methods are the bisection, secant, and false position methods. If the function is also differentiable and the derivative is known, Newton's method is widely used. This method is a fixed-point iteration method. Linearization is another technique for solving nonlinear equations.

Polynomial algebraic equations have a large number of numerical methods for enumerating:

- Method of Gräeffe (or method of Lobachevsky or Lobachevsky-Dandelin-Gräeffe or the square of the roots)

- Method of Laguerre

- Bairstow Method (or Lin-Bairstow Method)

- Method of Bernoulli

- Horner method

- Householder Method

- Newton-Raphson method specialized for polynomials

- Richmond Method Specialized for Polynomials

- Richmond Modified Method

- Newton-Horner Method

- Richmond-Horner Method

- Method of Birge-Biète

- Jenkins-Traub Method

Singular value and spectral decomposition

Quite a few important problems can be expressed in terms of spectral decomposition (the computation of vectors and eigenvalues of a matrix) or singular value decomposition. For example, principal component analysis uses decomposition into vectors and eigenvalues.

Optimization

Optimization problems search for the point for which a given function reaches its maximum or minimum. Often the point also satisfies some constraint.

Examples of optimization problems are linear programming in which both the objective function and the constraints are linear. A famous linear programming method is the simplex method.

The Lagrange multipliers method can be used to reduce constrained optimization problems to unconstrained problems.

Evaluation of integrals

Numerical integration, also known as numerical quadrature, seeks to calculate the value of a definite integral. Popular methods use one of the Newton-Cotes formulas (such as the rectangle rule or Simpson's rule) or Gaussian quadrature. These methods are based on a "divide and conquer" strategy, dividing the integration interval into subintervals and calculating the integral as the sum of the integrals in each subinterval, being able to subsequently improve the value of the integral obtained using the Romberg method. For the calculation of multiple integrals these methods require too much computational effort, the Monte Carlo method being useful.

Differential Equations

Numerical analysis can also calculate approximate solutions of differential equations, either ordinary differential equations or partial differential equations. The methods used are usually based on discretizing the corresponding equation. It is useful to see the numerical derivation.

For the resolution of ordinary differential equations, the most used methods are the Euler method and the Runge-Kutta methods.

Partial differential equations are first solved by discretizing the equation, taking it into a finite-dimensional subspace. This can be done using a finite element method.

Sources of errors and their impact

Algorithms for numerical methods are often implemented by computers. These have some properties that cause failure when used to find the numerical solution of mathematical problems, among which are the following:

- Computers are able to store a finite number of digits, so they cannot store the set of real numbers as a whole to perform numerical operations with these. Instead, they have a subset of the actual numbers to which it is known as floating point numbers or machine numbers. The error to which this limiter carries is called round error.

- There are problems that involve many calculations for your solution. Sometimes solutions are sensitive to the accuracy of intermediate calculations, in which case it is said that solutions may have been disrupted by data.

- Increased number of operations will have a higher rounding error. The speed provided by computers for processing has significantly accelerated the speed with which operations are calculated. However, the spread of round errors by computing calculations may result in the instability of the results cast by the algorithms programmed in them.

Failures in the intermediate calculations performed by a computer to return a final result are often unknown to programmers and very difficult to detect: the addition and product of floating-point numbers are commutative operations, but they are not associative and not distributive. By not checking these two properties of real numbers, the handling of operations performed with floating point numbers is a complicated task. On the other hand, the order of operations can affect the precision of the results returned by the machine, since two expressions that are equivalent in an algebraic sense can give different results in the context of machine numbers.

Fortunately, there are some techniques to prevent and attack rounding error. Some of the implications of these strategies for the basic operations of addition, subtraction, multiplication, and division are discussed in. Also discussed are some IEEE floating point standards and the connections between floating point and computer system design.

Improving the precision of floating point numbers is still being studied today. In 2015, researchers at the University of Washington developed a computational tool they called Herbie that "automatically detects the transformations necessary for a program to improve its accuracy." Herbie evaluates the error of a floating-point expression and identifies which operations contribute most significantly to the accumulation of errors, then generates alternatives to perform these operations and does a comparison to finally determine the optimal equivalent expression (the one that minimizes the error) to correct the program.

The interest in ensuring a certain level of precision in the numerical results provided by a computer is due to its possible repercussions in practice. For example, in the academic field there have been cases of research articles in which rounding error has prevented the results from being reproducible and, on occasions, this has even been a reason for rejection for publication (y). This type of error has also permeated financial legal regulation in some countries and distorted stock market indices.

The limitation on the floating-point representation of real numbers also has repercussions on computer-generated graphs. When a number is less than what is known as the machine epsilon, the computer is unable to represent it. This can cause the graphs associated with numerical values smaller than the epsilon to exhibit false behavior and affect decision making based on them, with unsuspected consequences, for example, when making forecasts, an area in which precision plays a crucial role.

There are other types of error in the context of numerical methods that deserve equal attention and care. Truncation and conversion errors, among others, have given rise to multiple catastrophes: the failure of the Patriot missile, the explosion of the Ariane 5 rocket, the sinking of the Sleipner oil platform are just a few examples. Hence the importance of recognize these sources of error to anticipate them and, where appropriate, detect and correct them.

Other numerical analysis topics

- approximation error, absolute error and relative error

- convergence order

- round

- Numbering system

- truncation

Contenido relacionado

IEEE 1394

Single user

Datatype