MIDI

MIDI (an acronym for Musical Instrument Digital Interface) is a technology standard that describes a protocol, digital interface, and connectors that allow various electronic musical instruments, computers, and other related devices to connect and communicate with each other. A single MIDI connection can carry up to sixteen channels of information, each of which can be routed to a different device.

The MIDI system carries event messages that specify musical notation, pitch, and velocity (intensity); control signals for musical parameters such as dynamics, vibrato, and panning; cues; and clock signals that set and synchronize tempo between devices. These messages are sent over a MIDI cable to other devices that control sound generation or other features. The data can also be recorded by a hardware or software device called a sequencer, which allows the information to be edited and played back later.

MIDI technology was standardized in 1983 by a group of representatives of musical instrument manufacturers called the MIDI Manufacturers Association (MMA). All MIDI standards are jointly developed and published by the MMA in Los Angeles, California, United States, and, for Japan, by the MIDI committee of the Association of Musical Electronics Industry (AMEI) in Tokyo.

Advantages of using MIDI include small file sizes (a complete song can be encoded in a few hundred lines, that is, in a few kilobytes) and easy manipulation, modification, and selection of instruments.

History

Development of MIDI

Toward the end of the 1970s, electronic music devices became more common and less expensive in North America, Europe, and Japan. Early analog synthesizers were usually monophonic and controlled by the voltage produced by their keyboards. Manufacturers used this voltage to connect instruments together so that a single device could control one or more others; however, this system was not suitable for polyphonic and digital synthesizers. Some manufacturers created systems that allowed their own equipment to be interconnected, but, as was the case with other technologies, these systems were not compatible with one another: the equipment of one manufacturer could not be synchronized with that of another.

In June 1981, Roland founder Ikutaro Kakehashi pitched the idea of standardization to Oberheim Electronics founder Tom Oberheim, who in turn discussed it with Sequential Circuits president Dave Smith. In October 1981, Kakehashi, Oberheim, and Smith discussed the idea with representatives from Yamaha, Korg, and Kawai.

Sequential Circuits engineers and synth designers Dave Smith and Chet Wood came up with the idea of a universal synthesizer interface that would allow direct communication between equipment regardless of manufacturer. Smith proposed this standard to the Audio Engineering Society in November 1981. Over the next two years, the standard was discussed and modified by representatives of companies such as Roland, Yamaha, Korg, Kawai, Oberheim, and Sequential Circuits, and was renamed Musical Instrument Digital Interface. The development of MIDI was announced to the public by Robert Moog in the October 1982 issue of Keyboard magazine.

At the NAMM Show in January 1983, Smith demonstrated a MIDI connection between the Prophet 600 analog synthesizer (by Sequential Circuits) and the Jupiter-6 (by Roland). The MIDI 1.0 protocol was published in August 1983. The MIDI standard was unveiled by Ikutaro Kakehashi and Dave Smith, who in 2013 received a Technical Grammy Award for their role in the development of MIDI.

Impact of MIDI on the music industry

The use of MIDI was originally limited to those who wanted to use electronic instruments in the production of pop music. The standard allowed different instruments to communicate with each other and with computers, which led to a rapid expansion in the sales and production of electronic instruments and music software. This intercompatibility allowed one device to be controlled from another, helping musicians who needed to use different types of hardware. The introduction of MIDI coincided with the advent of personal computers, samplers (which play back pre-recorded sounds, bringing to live performance effects that were previously possible only in the studio), and digital synthesizers, which allowed sounds to be stored, pre-programmed, and then recalled at the press of a button. The creative possibilities afforded by MIDI technology helped revive the music industry during the 1980s.

MIDI introduced many capabilities that transformed the way musicians worked. MIDI sequencing made it possible for a user with no notation skills to develop complex arrangements. A musical act with one or two members, each operating a few MIDI devices, could produce a sound similar to that of a much larger group of musicians. The cost of hiring musicians for a project could be reduced or eliminated, and complex productions could be realized on a system as small as a single MIDI workstation: a synthesizer with a built-in keyboard and sequencer. Professional musicians could do this in a home recording environment, without the need to rent a professionally staffed recording studio. By doing pre-production work in such an environment, an artist can reduce recording costs by arriving at the studio with a work that is already partially complete. Rhythm and background parts can be sequenced in advance and then played back on stage. Performances require less hauling and set-up of equipment because fewer and simpler connections are needed to play a variety of sounds.[citation needed] MIDI-compatible educational technology has also transformed music education.

In 2013, Ikutaro Kakehashi and Dave Smith received a Technical Grammy Award for the development of MIDI.

Applications

Instrument control

MIDI was invented so that musical instruments could communicate with each other and so that one instrument could control another. Analog synthesizers that have no digital component and were built before the development of MIDI can be retrofitted with kits that convert MIDI messages into analog control voltages. When a note is played on a MIDI instrument, it generates a digital signal, which can be used to trigger the same note on another instrument. This remote control capability allows large instruments to be replaced with small sound modules, and allows musicians to combine instruments to achieve a fuller sound or to create combinations such as acoustic piano and strings. MIDI also allows other instrument parameters to be controlled remotely. Synthesizers and samplers have several tools for shaping a sound. The cutoff frequency of a filter and the attack of an envelope (the time it takes for a sound to reach its maximum level) are examples of synthesizer parameters that can be controlled remotely via MIDI. Effects devices have different parameters, such as reverb or delay time. When a MIDI controller number is assigned to one of these parameters, the device responds to any messages it receives for that controller. Controls such as knobs, switches, and pedals can be used to send these messages. A set of adjusted parameters can be saved in a device's memory as a patch, and these patches can be selected remotely by MIDI program changes. Standard MIDI allows a selection of 128 different programs, but many devices offer more by arranging their patches into banks of 128 programs each and combining a program change message with a bank select message.
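The note, controller, and program change messages described above are each only two or three bytes long. The following is a minimal sketch, not a reference implementation; it assumes the MIDI 1.0 status bytes 0x90 (note on), 0xB0 (control change), and 0xC0 (program change), and the convention that controllers 0 and 32 carry the bank select value. The function names are illustrative only.

```python
def note_on(channel, note, velocity):
    """Build a 3-byte Note On message; channel is 0-15, data bytes are 0-127."""
    return bytes([0x90 | (channel & 0x0F), note & 0x7F, velocity & 0x7F])


def control_change(channel, controller, value):
    """Build a 3-byte Control Change message for one controller number."""
    return bytes([0xB0 | (channel & 0x0F), controller & 0x7F, value & 0x7F])


def program_change(channel, program):
    """Build a 2-byte Program Change message selecting one of 128 programs."""
    return bytes([0xC0 | (channel & 0x0F), program & 0x7F])


def select_patch(channel, bank, program):
    """Bank Select (CC 0 = high 7 bits, CC 32 = low 7 bits), then Program Change."""
    msb = (bank >> 7) & 0x7F
    lsb = bank & 0x7F
    return (control_change(channel, 0, msb)
            + control_change(channel, 32, lsb)
            + program_change(channel, program))
```

For example, `select_patch(1, 129, 5)` produces eight bytes: two control changes that together address bank 129, followed by a program change to program 5 on the second channel.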

Composition

MIDI events can be sequenced with a MIDI editor or a dedicated workstation. Several DAWs are specifically designed to work with MIDI as an integral component, and many provide editing views in which recorded MIDI messages can be modified. These tools allow composers to audition and edit their work faster and more efficiently than older solutions such as multitrack recording, and make it possible to create complex arrangements without formal training.

Because MIDI is a set of commands that create sound, MIDI sequences can be manipulated in ways that pre-recorded audio cannot. It is possible to change the key, instrumentation, or tempo of a MIDI arrangement, and to rearrange its individual sections. The ability to compose ideas and hear them played back immediately allows composers to experiment. Algorithmic composition programs provide computer-generated performances that can be used as song ideas or accompaniment.
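Because a sequence is stored as note numbers and tick positions rather than as sound, changing key or tempo is simple arithmetic. The sketch below assumes a hypothetical in-memory representation in which each note is a `(tick, pitch, velocity)` tuple and timing resolution is given in ticks per quarter note (`ppq`); real sequencers use richer structures.

```python
def transpose(notes, semitones):
    """Shift every pitch by a number of semitones, clamped to the 0-127 range."""
    return [(tick, min(127, max(0, pitch + semitones)), velocity)
            for tick, pitch, velocity in notes]


def tick_to_seconds(tick, bpm, ppq=480):
    """Convert a tick position to seconds at a constant tempo.

    One quarter note lasts 60 / bpm seconds and spans `ppq` ticks.
    """
    return tick / ppq * 60.0 / bpm
```

Changing the tempo leaves the stored ticks untouched: a note at tick 480 falls at 0.5 seconds at 120 beats per minute and at 1.0 second at 60. Note that clamping at the ends of the range alters intervals for notes that would otherwise leave it.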

Some composers took advantage of MIDI 1.0 and General MIDI (GM) technology, which allowed musical data to be transferred between instruments using a standardized set of commands and parameters. Data composed in a MIDI sequencer can be saved as a Standard MIDI File (SMF), distributed digitally, and played back by any computer or electronic instrument that adheres to the same MIDI, GM, and SMF standards. MIDI data files are much smaller than the corresponding recorded audio files.

MIDI and computers

At the time MIDI was introduced, the computer industry was focused on mainframe computers, and personal computers were not yet common. The personal computer market stabilized at about the same time that MIDI appeared, and computers became a viable option for music production. In the years after the ratification of the MIDI specification, MIDI features were adapted to several early computing platforms, including the Apple II Plus, IIe, and Macintosh, the Commodore 64 and Amiga, the Atari ST, the Acorn Archimedes, and PC DOS. The Macintosh was a favorite among American musicians: it was competitively priced, and it was years before PCs matched its efficiency and graphical interface. The Atari ST was a favorite in Europe, where Macintosh machines were more expensive. Apple computers featured audio hardware that was more advanced than that of their competitors. The Apple IIGS used a digital sound chip designed for the Ensoniq Mirage synthesizer, and later models used a specialized audio system with improved processors, which led other companies to improve their products. The Atari ST was preferred because its MIDI connectors were built directly into the computer. Most music software released in MIDI's first decade was for Apple or Atari platforms. By the launch of Windows 3.0 in 1990, PCs had caught up in processing power and had acquired a graphical interface, and software titles began to appear on multiple platforms.

Standard MIDI Files

The Standard MIDI File (SMF) format provides a standardized way to store sequences, transport them, and open them on other systems. The compact size of these files has allowed them to be widely used on computers, in ringtones, on web pages, and in musical greeting cards. They were created for universal use and include information such as note values, timing, and track names. Lyrics can be included as metadata and displayed by karaoke machines. The SMF specification was developed by, and is maintained by, the MMA. SMFs are created as an export format by software sequencers and music workstations. They organize MIDI messages into one or more tracks and add timestamps so that the sequences can be played back. A header contains the number of tracks, timing information, and an indicator of which of the three SMF formats the file uses. A type 0 file contains an entire performance merged into a single track, while a type 1 file may contain any number of tracks that are played synchronously. Type 2 files are rarely used; they hold multiple arrangements, each with its own track, intended to be played in sequence. Microsoft Windows bundles SMFs together with Downloadable Sounds (DLS) in a Resource Interchange File Format (RIFF) wrapper, as RMID files with the extension .rmi. RIFF-RMID has been deprecated in favor of Extensible Music Files (XMF).
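The header described above can be illustrated in a few lines. This is a minimal sketch that assumes the commonly documented layout of the SMF header chunk: the ASCII tag "MThd", a 32-bit length of 6, and three 16-bit big-endian fields giving the format type, the track count, and the timing division (here taken as ticks per quarter note). The function names are illustrative.

```python
import struct


def build_smf_header(fmt, ntracks, division):
    """Pack an SMF header chunk: 'MThd', length 6, format, track count, division."""
    return b"MThd" + struct.pack(">IHHH", 6, fmt, ntracks, division)


def parse_smf_header(data):
    """Read the first 14 bytes of an SMF and return the header fields."""
    if data[:4] != b"MThd":
        raise ValueError("not a Standard MIDI File")
    length, fmt, ntracks, division = struct.unpack(">IHHH", data[4:14])
    if length != 6:
        raise ValueError("unexpected header length")
    if fmt == 0 and ntracks != 1:
        raise ValueError("a type 0 file holds exactly one track")
    return {"format": fmt, "tracks": ntracks, "division": division}
```

A type 1 file with three tracks and 480 ticks per quarter note would round-trip as `parse_smf_header(build_smf_header(1, 3, 480))`. Track chunks, which carry the timestamped events themselves, are outside the scope of this sketch.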

File Sharing

A MIDI file is not a recording of the music. Rather, it is a sequence of instructions, typically occupying on the order of 1,000 times less disk space than a recording. This made MIDI file arrangements an attractive way of sharing music before the advent of the internet and of devices with larger storage. Licensed MIDI files were sold on floppy disks in stores in Europe and Japan during the 1990s. The major drawback was the wide variation among users' audio cards, and in the actual samples or synthesized sounds on each card, which MIDI data refers to only symbolically. Even a sound card with high-quality samples can be inconsistent in quality from one instrument to another, and different card models offered no guarantee that the same instrument would sound the same. Early inexpensive cards, such as the AdLib and Sound Blaster, used a stripped-down version of Yamaha's FM synthesis technology played back through low-quality digital-to-analog converters. The low playback quality of these ubiquitous cards was often assumed to be a property of MIDI itself. This created the perception of MIDI as low-quality audio, while in reality MIDI contains no sound, and the quality of its playback depends entirely on the quality of the device playing it (and of the samples on that device).

MIDI Software

The main advantage of the personal computer in a MIDI system is that it can serve different purposes, depending on the software loaded. The multitasking capability of operating systems allows several programs to run simultaneously and share data with each other.

Sequencers

Sequencing software offers several benefits to a composer or arranger. It allows recorded MIDI to be manipulated using standard computer editing features such as cut, copy, and paste, or drag and drop. Keyboard shortcuts can be used to speed up the workflow, and editing functions can be selected via MIDI commands. The sequencer allows each channel to be set to play a different sound and gives a graphical overview of the arrangement. A variety of editing tools is available, including a display in musical notation. Tools such as looping, quantization, randomization, and transposition simplify the process of arranging. Beat creation is simplified, and the rhythmic feel of one track can be duplicated onto another. Realistic expression can be added through real-time controller manipulation. Mixing can be performed, and MIDI can be synchronized with recorded audio or video tracks. Work can be saved and transported to another computer or studio.
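Quantization, one of the tools mentioned above, snaps note start times to a rhythmic grid. A minimal sketch, assuming start times are integer tick positions and the grid is a fixed number of ticks (for example 120 ticks for a sixteenth note at 480 ticks per quarter note); the function name is illustrative:

```python
def quantize(ticks, grid):
    """Snap each tick to the nearest multiple of `grid`; exact ties move later."""
    return [((t + grid // 2) // grid) * grid for t in ticks]
```

Full-strength quantization like this removes all timing variation; sequencers commonly also offer partial quantization that moves notes only part of the way toward the grid.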

Sequencers can take different forms, such as drum pattern editors that allow the user to create beats by clicking on pattern grids, and loop sequencers such as ACID Pro, which allow MIDI to be combined with pre-recorded audio loops whose tempos and keys are matched to each other. Cue-list sequencing is used to trigger dialogue, sound effects, and music cues in stage and broadcast production.

Software for editing scores

With MIDI, notes played on a keyboard can be automatically transcribed into sheet music. Notation software usually lacks advanced sequencing tools and is optimized for producing clean, professional scores for live instrumentalists. These programs support dynamics and expression markings, chord and lyric display, and the layout of complex scores. Software is also available that can print scores in braille.

DoReMIR Music Research's ScoreCloud is one tool for real-time transcription from MIDI to sheet music.

SmartScore (formerly MIDIScan) reverses this process and can produce MIDI files from scanned sheet music.

Well-known notation programs include Finale by MakeMusic and Encore, originally created by Passport Designs Inc. and currently owned by GVOX. Sibelius was originally created for Acorn's RISC-based computers and was so well regarded that, before Windows and Macintosh versions were available, some composers purchased an Acorn computer just to use it.

Editors and librarians

Patch editors allow users to program their equipment through the computer interface. These became essential with the appearance of complex synthesizers such as the Yamaha FS1R, which had thousands of programmable parameters but an interface consisting of only fifteen small buttons, four knobs, and a small LCD. Digital instruments often discourage users from experimenting because they lack the feedback and direct control that knobs and switches provide, but patch editors give owners of hardware instruments and effects devices the same editing functionality that is available to users of software synthesizers. Some editors are designed for a specific instrument or effects device, while other, "universal" editors support a variety of equipment and ideally can control the parameters of every device in a setup.

Patch librarians have the specialized function of organizing the sounds in a collection of equipment and allowing entire banks of sounds to be transmitted between an instrument and a computer. This allows the user to augment the device's limited patch storage with a computer's much greater capacity and to share patches with other owners of the same instrument. Universal editor/librarians that combine the two functions were once common, and included "Galaxy" from Opcode Systems and SoundDiver from eMagic. These programs have largely fallen out of favor with the rise of computer-based synthesis, although Mark of the Unicorn's Unisyn and Sound Quest's Midi Quest remain available. Native Instruments' Kore was an attempt to bring the editor/librarian concept into the era of software synthesizers.

Auto-accompaniment programs

Programs that can dynamically generate backing tracks are called "auto-accompaniment" programs. They create a full band arrangement in a style the user selects and send the result to a MIDI sound device for playback. The generated tracks can be used as rehearsal or educational tools, as accompaniment for live performances, or as songwriting aids. Examples include Band-in-a-Box, which originated on the Atari platform in the 1980s, One Man Band, Busker, MiBAC Jazz, SoundTrek JAMMER, and DigiBand.

Synthesis and sampling

Computers can use software to generate sounds, which are passed through a digital-to-analog converter (DAC) to a loudspeaker system. Polyphony, the number of sounds that can be played simultaneously, depends on the power of the computer's CPU, as do the sample rate and bit depth of playback, which directly affect the quality of the sound. Synthesizers implemented in software are subject to timing problems that are not present in hardware instruments, whose dedicated operating systems are not interrupted by background tasks as desktop operating systems are. These timing problems can cause synchronization errors between tracks, and clicks and pops when sample playback is interrupted. Software synthesizers can also exhibit a noticeable latency in sound generation, because computers use audio buffers that delay playback relative to the incoming MIDI signal.

Software synthesis originated in the 1950s, when Max Mathews of Bell Laboratories wrote the MUSIC-N programming language, which was capable of generating sound, though not in real time. The first synthesizer to run directly on a host computer's CPU was Reality, by Dave Smith's Seer Systems, which achieved low latency through tight driver integration and therefore could run only on Creative Labs sound cards. Some systems use dedicated hardware to reduce the load on the host CPU, such as Symbolic Sound Corporation's Kyma System and Creamware/Sonic Core's Pulsar/SCOPE systems, which use several DSP chips on a Peripheral Component Interconnect (PCI) card to power all of the instruments, effects, and mixers.

The ability to build MIDI arrangements entirely on a computer allows the composer to later export the result as an audio file.

Video game music

Early computer games were distributed on floppy disks, and the small size of MIDI files made them a viable means of providing soundtracks. Games of the DOS and early Windows eras typically required support for AdLib or Sound Blaster sound cards. These cards used FM synthesis, which generates sound through the modulation of sine waves. John Chowning, the pioneer of the technique, theorized that it would be capable of accurately recreating any sound if enough sine waves were used, but inexpensive computer audio cards performed FM synthesis with only two sine waves. Combined with the cards' 8-bit audio, this resulted in a sound described as "artificial" and "primitive". Alternatives to FM synthesis were available; these were expensive, though they offered sounds taken from MIDI instruments such as the E-mu Proteus. In the mid-1990s the computer industry moved to wavetable-based sound cards with 16-bit playback, but standardized on 2 MB of ROM, too little space to hold good-quality samples of 128 instruments plus drum kits. Some manufacturers used 12-bit samples and padded them to 16 bits.
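Two-sine-wave FM synthesis of the kind described above can be sketched briefly. This is an illustration of the general technique only, not of any particular sound chip: one sine wave (the modulator) varies the phase of another (the carrier), and the result is reduced to unsigned 8-bit samples. All parameter names and default values are assumptions chosen for the example.

```python
import math


def fm_sample(t, carrier_hz, modulator_hz, index):
    """One sample of two-operator FM at time t (seconds), in the range -1..1."""
    modulator = math.sin(2 * math.pi * modulator_hz * t)
    return math.sin(2 * math.pi * carrier_hz * t + index * modulator)


def render(duration_s, sample_rate=8000, carrier_hz=440.0,
           modulator_hz=220.0, index=2.0):
    """Render a list of float samples for a fixed-pitch FM tone."""
    count = round(duration_s * sample_rate)
    return [fm_sample(i / sample_rate, carrier_hz, modulator_hz, index)
            for i in range(count)]


def to_8bit(samples):
    """Map floats in -1..1 to unsigned 8-bit values 0..255."""
    return [min(255, int((s + 1.0) * 127.5 + 0.5)) for s in samples]
```

Setting `index` to 0 leaves a plain sine wave; raising it adds sidebands around the carrier, which is how a pair of sine waves yields a richer timbre. Reducing the output to 256 levels adds quantization noise of the sort associated with 8-bit playback.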

Other applications

MIDI has also been adopted as a control protocol for non-musical applications. MIDI Show Control uses MIDI commands to direct lighting and trigger cued events in theatrical productions. VJs and turntablists use it to cue clips and synchronize equipment, and recording systems use it for synchronization and automation. Apple Motion allows animation parameters to be controlled via MIDI. The 1987 first-person shooter MIDI Maze (ported to the Game Boy in 1991, the Game Gear in 1992, and the SNES in 1993) and the 1990 Atari ST puzzle game Oxyd (ported to Windows in 1992) used MIDI to network computers together. Some kits for controlling lights or home applications also use MIDI.

Despite its association with musical devices, MIDI can control any device that can read and process MIDI commands. In principle, MIDI commands could be used to send a ship into space from Earth to another destination, to control home lighting, heating, and air conditioning, or to sequence traffic lights. The device or object receiving the MIDI signal will require a General MIDI processor; in this case, program changes would trigger a function on that device rather than notes on a MIDI instrument. Each function can be tied to a clock (also MIDI-controlled) or to another condition determined by the device's creator.

MIDI Devices

Connectors

Originally, MIDI cables terminated in a 180° five-pin DIN connector. Standard applications use only three of the five conductors: a ground wire and a balanced pair of conductors that carry a +5 V signal. This connector configuration can carry messages in only one direction, so a second cable is necessary for two-way communication. Some proprietary applications, such as phantom-powered controllers, use the spare pins for direct current (DC) power transmission.

Optocouplers keep MIDI devices electrically isolated from what they are connected to, which prevents ground loops and protects equipment from voltage spikes. MIDI has no error detection capability, so the maximum cable length is set at 15 meters (50 feet) to limit interference.

Most devices do not copy messages from their input port to their output port. A third type of port, the "thru" port, emits a copy of everything received at the "in" port, allowing data to be forwarded to another instrument in a "daisy chain" arrangement. Not all devices have a thru port, and devices that cannot generate MIDI data, such as effects units and sound modules, may not include an out port.

Device Management

Each device in a daisy chain adds delay to the system. This is avoided with a MIDI thru box, which has several outputs that each provide an exact copy of the box's input signal. A MIDI merger can combine the input from multiple devices into a single stream and allows multiple controllers to be connected to a single device. A MIDI switcher allows switching between multiple devices and eliminates the need to physically re-patch cables. MIDI patch bays combine all of these functions. They have multiple inputs and outputs and allow any combination of input channels to be routed to any combination of output channels. Routing setups can be created in software, stored in memory, and selected by MIDI program change commands. This enables the devices to function as standalone MIDI routers in situations where no computer is present. MIDI patch bays also clean up any skewing of MIDI data bits that occurs at the input stage.

MIDI data processors are used for utility tasks and special effects. They include MIDI filters, which remove unwanted MIDI data from the stream, and MIDI delays, effects that send a repeated copy of the input data after a set time.
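Both kinds of processor can be modeled as simple transformations of an event list. The sketch below assumes a hypothetical representation in which each event is a `(time_ms, message_bytes)` pair and the message type is identified by the upper four bits of the status byte, as it is for channel messages; system messages (status 0xF0 and above) would need separate handling.

```python
def midi_filter(events, blocked_status):
    """Drop every channel message of one type, e.g. 0xB0 for control changes."""
    return [(t, msg) for t, msg in events if (msg[0] & 0xF0) != blocked_status]


def midi_delay(events, delay_ms):
    """Return the original events plus one echoed copy of each, in time order."""
    echoed = [(t + delay_ms, msg) for t, msg in events]
    return sorted(events + echoed, key=lambda event: event[0])
```

For instance, `midi_filter(events, 0xB0)` strips controller data while leaving notes intact, and `midi_delay(events, 250)` repeats every message a quarter of a second later.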

Interfaces

The primary function of a computer MIDI interface is to synchronize communication between the MIDI device and the computer. Some computer sound cards include a standard MIDI connector, while others connect through a D-subminiature DA-15 game port, USB, FireWire, or Ethernet. The increasing use of USB connectors in the 2000s led to the availability of MIDI-to-USB interfaces that can transfer MIDI channels to USB-equipped computers. Some MIDI keyboard controllers are equipped with USB connectors and can be plugged directly into computers running music software.

Serial MIDI transmission leads to timing problems. Experienced musicians can detect time differences as small as 1/3 of a millisecond (ms)[citation needed] (about the time it takes sound to travel 4 inches), and a three-byte MIDI message requires nearly 1 ms to transmit. Because MIDI is serial, it can send only one event at a time. If an event is sent on two channels at once, the event on the higher-numbered channel cannot be transmitted until the first one has finished, and so is delayed by 1 ms. If an event is sent on all channels at the same time, the transmission on the highest-numbered channel is delayed by as much as 16 ms. This contributed to the rise of MIDI interfaces with multiple input and output ports, because timing improves when events are spread across several ports rather than across several channels on the same port. The term "MIDI slop" refers to audible timing errors that result from delayed MIDI transmission.
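The figures above follow from the speed of the serial link. As a rough check, assuming the MIDI 1.0 rate of 31,250 bits per second and ten bits on the wire per byte (eight data bits plus a start and a stop bit), neither of which is stated in the text above:

```python
BAUD = 31_250        # assumed MIDI 1.0 serial rate, bits per second
BITS_PER_BYTE = 10   # assumed framing: start bit + 8 data bits + stop bit


def transmit_ms(n_bytes):
    """Time in milliseconds to send n_bytes over one MIDI cable."""
    return n_bytes * BITS_PER_BYTE / BAUD * 1000.0


def queue_delay_ms(n_messages, bytes_per_message=3):
    """Delay before the last of n simultaneous messages starts transmitting."""
    return (n_messages - 1) * transmit_ms(bytes_per_message)
```

Under these assumptions a three-byte message takes about 0.96 ms, and the last of sixteen simultaneous messages waits about 14.4 ms before it starts and finishes roughly 15.4 ms after the first began, which is in line with the rounded 1 ms and 16 ms figures. Spreading the same sixteen messages across four ports would cut the queue on each port to four messages.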

Controllers

There are two types of MIDI controllers: performance controllers, which generate notes and are used to play music, and controllers that may not send notes but transmit other types of real-time events. Many devices combine the two types.

Controllers for performance

MIDI was designed with keyboards in mind, and any controller that is not a keyboard is considered an "alternative" controller. This was seen as a limitation by composers who were not interested in keyboard-based music, but the standard proved flexible, and MIDI compatibility was introduced to other types of controllers, including guitars, wind instruments, and drum machines.

Keyboards

Musical keyboards are the most common type of MIDI controller. They are available in sizes ranging from 25-key, two-octave models to full 88-key instruments. Some are keyboard-only controllers, while others include additional real-time controls such as knobs, sliders, and wheels. They usually also have connections for sustain and expression pedals. Most keyboard controllers allow the playing area to be divided into zones, which can be of any size and can overlap each other. Each zone corresponds to a different MIDI channel and a different set of controllers, and can be set to play any selected range of notes. This allows a single instrument to play several different sounds. MIDI capabilities can also be built into traditional keyboard instruments such as pianos and Rhodes pianos. Pedal keyboards can operate the pedal tones of a MIDI organ or drive a bass synthesizer such as the Moog Taurus.
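Zone splitting amounts to a lookup from note number to MIDI channel. In this sketch the zone table is hypothetical: a lower zone on the first channel and an upper zone on the second, overlapping between notes 48 and 59 so that keys in that range sound on both. The status byte 0x90 (note on) is assumed from MIDI 1.0.

```python
# (lowest note, highest note, channel) for each zone; values are illustrative
ZONES = [
    (0, 59, 0),     # lower zone, e.g. a bass sound on channel 1
    (48, 127, 1),   # upper zone, e.g. a piano sound on channel 2
]


def route_note(note, velocity, zones=ZONES):
    """Return one Note On message for every zone that contains the note."""
    return [bytes([0x90 | channel, note, velocity])
            for low, high, channel in zones
            if low <= note <= high]
```

A key at note 40 produces one message on the first channel, a key at note 72 produces one on the second, and a key at note 50 produces both, layering the two sounds.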

Wind Controllers

Wind controllers allow MIDI parts to be played with the same kind of expression and articulation that is available to players of conventional wind instruments. They allow breath and pitch control that give a more versatile kind of phrasing, particularly when playing sampled or physically modeled wind instrument parts. A typical wind controller has a sensor that converts breath pressure into volume information, and may also allow pitch to be controlled with a lip pressure sensor and a pitch-bend wheel. Some models can be configured to use the fingering systems of different instruments. Examples of such controllers include the Akai EWI and the Electronic Valve Instrument (EVI). The EWI uses a system of keypads and rollers modeled after a woodwind instrument, while the EVI is based on a brass instrument and has three switches that emulate the valves of a trumpet.

Drums and Percussion Controllers

Piano-style keyboards can be used to trigger drum sounds but are impractical for playing repeated patterns such as rolls because of the length of key travel. After keyboards, drum pads are the most significant MIDI performance controllers. Percussion controllers may be built into drum machines, may be stand-alone control surfaces, or may emulate the look and feel of acoustic percussion instruments. The pads built into drum machines are usually too small and fragile to be played with sticks, and are played with the fingers. Dedicated drum pads such as the Roland Octapad or the DrumKAT are playable with the hands or with sticks and are often built in the form of a drum kit. Other percussion controllers include the vibraphone-style MalletKAT and Don Buchla's Marimba Lumina. MIDI triggers can also be installed on acoustic drums and percussion instruments. Pads that trigger a MIDI device can even be homemade from a piezoelectric sensor and a practice pad.

String Controller Instruments

A guitar can be fitted with special pickups that digitize the instrument's output and allow it to play synthesizer sounds. Each string is assigned to a separate MIDI channel, giving the player the choice of triggering the same sound from all six strings or a different sound from each. Some models, such as the Yamaha G10, dispense with the traditional guitar body and replace it with electronics. Other systems, such as Roland's MIDI pickups, are built into or can be retrofitted to a standard instrument. Max Mathews designed a MIDI violin for Laurie Anderson in the mid-1980s, and MIDI-equipped violas, cellos, double basses, and mandolins also exist.

Specialized controllers for performance

DJ digital controllers may be stand-alone units, such as the Faderfox or the Allen & Heath Xone 3D, or may be integrated with specific software, such as Traktor or Scratch Live. These typically respond to MIDI clock sync and provide control over mixing, looping, effects, and sample playback.

MIDI triggers attached to shoes or clothing are sometimes worn by stage performers. The Kroonde Gamma wireless sensor can convert physical motion into MIDI signals. Sensors built into a dance floor at the University of Texas at Austin convert dancers' movements into MIDI messages, and David Rokeby's Very Nervous System installation creates music from the movements of passers-by. Software applications exist that allow iOS devices to be used as gesture controllers.

Numerous experimental controllers abandon traditional musical interfaces entirely. These include gesture-controlled devices such as the Buchla Thunder, the C-Thru Music Axis, which rearranges scale tones into an isometric layout, and the Haken Audio Continuum. Experimental MIDI controllers can be created from unusual objects, such as an ironing board fitted with heat sensors or a sofa equipped with pressure sensors.

Auxiliary Controllers

Software synthesizers offer great power and versatility, but some players feel that dividing their attention between a MIDI keyboard and a computer keyboard and mouse robs the playing experience of its immediacy. Devices dedicated to real-time MIDI control provide an ergonomic benefit and can give a greater sense of connection with the instrument than a mouse or an on-screen button. Controllers may be general-purpose devices designed to work with a variety of equipment, or they may be designed to work with a specific piece of software. Examples of the latter include Akai's APC40 controller for Ableton Live and Korg's MS-20ic controller, which is a reproduction of the MS-20 analog synthesizer. The MS-20ic includes patch cables that can be used to control signal routing in the virtual reproduction of the MS-20 synthesizer, and it can also control third-party devices.

Control surfaces

Control surfaces are hardware devices that provide a variety of controls that transmit real-time controller messages. They allow software instruments to be programmed without excessive mouse movement, and hardware devices to be adjusted without stepping through layered menus. Buttons, sliders, and knobs are the most common controls provided, but rotary encoders, transport controls, joysticks, ribbon controllers, vector touchpads in the style of Korg's Kaoss pad, and optical controllers such as Roland's D-Beam may also be present. Control surfaces may be used for mixing, sequencer automation, turntablism, and lighting control.

Specialized real-time controllers

Audio control surfaces often resemble mixing consoles in appearance and allow some level of control over parameters such as the sound level and effects applied to individual tracks of a multitrack recording or live performance.

MIDI footswitches are commonly used to send MIDI program changes to effects devices, but they can be combined with pedals to allow detailed programming of effects units. Foot controllers are available as on/off switches, as momentary switches, or as pedals whose position determines the MIDI value they send.

Drawbar controllers are used with MIDI and virtual organs. Along with a set of drawbars for timbre control, they provide controls for standard organ effects such as Leslie speaker rotation speed, vibrato, and chorus.

Instruments

A MIDI instrument has ports to send and receive MIDI signals, a CPU to process these signals, an interface that allows the user to program it, an audio circuit that generates sounds, and controllers. The operating system and factory sounds are usually stored in read-only memory (ROM).

A MIDI instrument can also be a stand-alone module (with no keyboard) built around a General MIDI sound generator (GM, GS, and XG) that can be edited on the unit itself, including transposition, pitch changes, MIDI instrument selection, and adjustment of volume, pan, reverb levels, and other MIDI controllers. The module normally includes a large screen that shows information relevant to the selected function. Other functions include displaying lyrics, which are usually embedded in a MIDI or Karaoke MIDI file, as well as track lists, a song library, and editing screens. Some MIDI modules include a harmonizer and the ability to play back and transpose MP3 files.

Synthesizers

Synthesizers can employ any of a variety of techniques to generate sound. They typically include a built-in keyboard, or may exist as "sound modules" that generate sounds when triggered by an external controller. Sound modules are typically designed to be mounted in a 19-inch rack. Manufacturers commonly produce a synthesizer in both stand-alone and rack-mount versions, and often offer the keyboard version in several sizes.

Samplers

A sampler can record and digitize audio, store it in random access memory (RAM), and play it back later. Samplers normally allow the user to edit a sample and save it to a hard drive, apply effects to it, and modify its sound through the same tools used on synthesizers. They may also have a keyboard or be rack-mounted. Instruments that generate sounds through playback but do not have recording capabilities are known as "ROMplers".

Samplers did not become viable MIDI instruments as quickly as synthesizers did due to the cost of memory and processing power required at the time. The first low-cost MIDI sampler was the Ensoniq Mirage, released in 1984. MIDI samplers are usually limited due to the small screens used to edit the sampled waveforms, although some can be connected to a computer monitor.

Drum machines

Drum machines are typically specialized devices that play drum samples and percussion sounds. They usually have a sequencer that allows rhythm patterns to be created and incorporated into the arrangement of a song. They commonly have multiple audio outputs so that each sound, or group of sounds, can be routed to a separate output. Individual drum sounds can also be played from another MIDI instrument or from a sequencer.

Workstations and hardware sequencers

Sequencer technology predates MIDI. Analog sequencers use voltage control signals to control pre-MIDI analog synthesizers. MIDI sequencers are typically operated through transport functions modeled on those of tape recorders. They can record MIDI performances and organize them into individual tracks, following the concept of multitrack recording. Workstations combine a controller keyboard with an internal sound generator and a sequencer. They can be used to create complete arrangements and play them back through the onboard sounds, functioning as small production studios. They usually include a storage unit and file transfer capabilities.

Effect devices

Audio effects such as reverb, delay, and chorus are frequently used on stage and in recording, and they can be adjusted remotely via MIDI signals. Some units allow only a limited number of parameters to be controlled in this way, but most respond to program change messages. Eventide's H3000 Ultra-harmonizer is an example of a unit that allows such extensive MIDI control that it can be played like a synthesizer.

Technical specifications

MIDI messages are made up of 8-bit words (commonly called bytes) that are transmitted serially at 31.25 kbit/s. This rate was chosen because it is an exact division of 1 MHz, the speed at which many early microprocessors operated. The first bit of each word identifies whether the word is a status byte or a data byte, and the remaining seven bits carry the information. A start bit and a stop bit are added to each byte for framing purposes, so each MIDI byte requires ten bits to transmit.
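The arithmetic behind these figures can be sketched in a few lines of Python. This is a minimal illustration and not part of any MIDI library: the function names are invented for this example, and the only added assumption is the standard convention that a status byte has its most significant bit set to 1 and a data byte has it set to 0.

```python
BIT_RATE = 31_250          # bits per second on a standard MIDI link
BITS_PER_BYTE_ON_WIRE = 10  # 1 start bit + 8 data bits + 1 stop bit


def transmission_time_ms(num_bytes: int) -> float:
    """Time needed to send num_bytes over the serial link, in milliseconds."""
    return num_bytes * BITS_PER_BYTE_ON_WIRE * 1000 / BIT_RATE


def is_status_byte(byte: int) -> bool:
    """A status byte has its first (most significant) bit set."""
    return bool(byte & 0x80)


# 1 MHz divides evenly by the MIDI bit rate.
clock_divider = 1_000_000 // BIT_RATE

# A three-byte message occupies the link for a little under one millisecond.
three_byte_time = transmission_time_ms(3)
```

A practical consequence is that a dense chord is never truly simultaneous on the wire: each additional three-byte message adds roughly a millisecond of delay.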

A MIDI connection can carry sixteen independent channels of information. The channels are numbered from 1 to 16, but they are encoded in binary as the values 0 to 15. A device can be configured to listen only to specific channels and ignore messages sent on the others ("Omni Off" mode), or it can listen to all channels regardless of their address ("Omni On"). A device can be monophonic (the start of a new MIDI note-on signal implies the end of the previous note) or polyphonic (multiple notes can sound at the same time until the limit of the instrument's polyphony is reached, the notes have finished their envelope, or a "note-off" command is received). Receiving devices can typically be set to any of the four combinations of "omni off/on" and "mono/poly" modes.
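The channel numbering and the four receive modes can be illustrated with a short Python sketch. The function names are hypothetical, and the mode numbers 1 to 4 follow the numbering commonly given for MIDI 1.0 (an assumption beyond the text above).

```python
# Mode numbers as commonly listed for MIDI 1.0: (omni_on, polyphonic) -> mode.
RECEIVE_MODES = {
    (True, True): 1,    # Omni On, Poly
    (True, False): 2,   # Omni On, Mono
    (False, True): 3,   # Omni Off, Poly
    (False, False): 4,  # Omni Off, Mono
}


def wire_channel(channel: int) -> int:
    """Convert a user-facing channel (1-16) to its encoded value (0-15)."""
    if not 1 <= channel <= 16:
        raise ValueError("MIDI channels are numbered 1 to 16")
    return channel - 1


def device_accepts(message_channel: int, device_channel: int, omni_on: bool) -> bool:
    """With Omni On every channel is accepted; otherwise only the device's own."""
    return omni_on or message_channel == device_channel
```

For example, `device_accepts(5, 1, omni_on=False)` is false, while the same message is accepted once Omni is switched on.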

General MIDI Instruments

The General MIDI specification, included in the "Complete MIDI 1.0 Detailed Specification", assigns a number to each of 128 instrument timbres.

Messages

A MIDI message is an instruction that controls some aspect of the receiving device. A MIDI message consists of a status byte, which indicates the type of the message, followed by up to two data bytes containing the parameters. MIDI messages can be "channel messages", which are sent on one of the sixteen channels and are heard only by devices listening on that channel, or "system messages", which are heard by all devices. Any data not relevant to a receiving device is ignored. There are five types of messages: "Channel Voice", "Channel Mode", "System Common", "System Real-Time" and "System Exclusive".

Channel Voice messages transmit real-time performance data over a single channel. Examples include "note-on" messages, which contain the MIDI note number that specifies the pitch of the note, a velocity value that indicates how forcefully the note was played, and the channel number; "note-off" messages, which indicate the end of a note; Program Change messages, which change the device's patch; and Control Change messages, which adjust the instrument's parameters. Channel Mode messages include the Omni/mono/poly mode on and off messages, as well as messages to reset all controllers to their initial state or to send "note-off" for all notes. System messages do not include channel numbers and are received by every connected MIDI device. MIDI time code is an example of a System Common message. System Real-Time messages contain data for synchronization and include MIDI clock and Active Sensing.
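As an illustration of how the status byte carries both the message type and the channel, the following Python sketch builds and decodes a channel message. It is a simplified example rather than a complete parser; the function names are invented here, and the status values in the table are the standard MIDI 1.0 assignments, which the text above does not list.

```python
# Upper four bits of the status byte -> channel message type (MIDI 1.0 values).
CHANNEL_MESSAGE_TYPES = {
    0x80: "note-off",
    0x90: "note-on",
    0xA0: "polyphonic aftertouch",
    0xB0: "control change",  # also carries Channel Mode messages
    0xC0: "program change",
    0xD0: "channel aftertouch",
    0xE0: "pitch bend",
}


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a three-byte note-on message for a user-facing channel (1-16)."""
    if not 1 <= channel <= 16:
        raise ValueError("channel must be 1-16")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("data bytes must be 0-127")
    return bytes([0x90 | (channel - 1), note, velocity])


def parse_channel_message(message: bytes) -> dict:
    """Split a channel message into its type, channel (1-16), and data bytes."""
    status = message[0]
    if status < 0x80 or status >= 0xF0:
        raise ValueError("not a channel message status byte")
    return {
        "type": CHANNEL_MESSAGE_TYPES[status & 0xF0],
        "channel": (status & 0x0F) + 1,
        "data": list(message[1:]),
    }
```

Status bytes from 0xF0 upward are rejected because they belong to system messages, which carry no channel number.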

System Exclusive messages

System Exclusive (SysEx) messages are a major reason for the flexibility and longevity of the MIDI standard. They allow manufacturers to create proprietary messages that control their equipment in ways not covered by standard MIDI messages. SysEx messages are addressed to a specific device in a system. Each manufacturer has a unique identifier that is included in its SysEx messages, which ensures that the messages are acted on only by the targeted device and ignored by all others. Many instruments also include a SysEx ID setting, which allows two devices of the same model to be addressed independently while connected to the same system. SysEx messages can include functionality beyond what the MIDI standard provides. Many SysEx messages are undocumented.
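The addressing scheme can be sketched in Python as follows. This is a simplified illustration under stated assumptions: the framing bytes 0xF0 and 0xF7 are the standard SysEx start and end markers, the example uses the single-byte identifier 0x7D (reserved for non-commercial use) rather than any real manufacturer's ID, and placing a device ID directly after the manufacturer ID is a common layout but is ultimately defined by each manufacturer.

```python
SYSEX_START = 0xF0
SYSEX_END = 0xF7
EXAMPLE_MANUFACTURER_ID = 0x7D  # reserved for non-commercial/educational use


def build_sysex(manufacturer_id: int, device_id: int, payload: bytes) -> bytes:
    """Frame a payload as a SysEx message; every inner byte must be 7-bit."""
    for value in (manufacturer_id, device_id, *payload):
        if not 0 <= value <= 0x7F:
            raise ValueError("SysEx data bytes must be in the range 0-127")
    return bytes([SYSEX_START, manufacturer_id, device_id, *payload, SYSEX_END])


def is_addressed_to(message: bytes, manufacturer_id: int, device_id: int) -> bool:
    """A device acts on a SysEx message only if both identifiers match."""
    return (
        len(message) >= 4
        and message[0] == SYSEX_START
        and message[-1] == SYSEX_END
        and message[1] == manufacturer_id
        and message[2] == device_id
    )
```

Two units of the same model would share the manufacturer ID but be given different device IDs, so a message built for device 1 is ignored by device 2.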

The MIDI Map Implementation

Not all MIDI devices respond to every message defined in the MIDI specification. For example, many real instruments, such as the trumpet, produce sounds that span fewer octaves than the reference (an 88-key piano). The MIDI implementation chart (MIDI map) was standardized by the MMA as a way for users to learn the capabilities of an instrument and how it responds to messages. The documentation for a MIDI device usually includes this chart, which lists the MIDI commands the device uses.

Extensions

MIDI's flexibility and wide acceptance have led to various refinements of the standard, as well as allowing it to be applied for purposes beyond those originally intended.

General MIDI

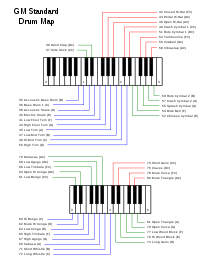

MIDI allows an instrument's sounds to be selected via program change messages, but there is no guarantee that two instruments will produce the same sound for the same program location. Program #0 might be a piano on one instrument and a flute on another. The General MIDI (GM) standard, established in 1991, defines a standardized sound bank that allows a Standard MIDI File created on one device to sound similar on another. GM specifies a bank of 128 sounds organized into 16 families of 8 related instruments, and assigns a specific program number to each instrument. Percussion instruments are placed on channel 10, and a specific MIDI note value is mapped to each percussion sound. GM-compliant devices must offer 24-note polyphony. Any given program change will select the same type of instrument sound on any GM-compatible instrument.
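Because the 128 sounds are grouped into 16 families of 8, the family of a program can be found with an integer division. The Python sketch below shows the idea; the function name is invented for this example, the family names are those commonly listed for General MIDI Level 1, and programs are taken as zero-based values (0 to 127) as they appear in the program change message.

```python
GM_FAMILIES = [
    "Piano", "Chromatic Percussion", "Organ", "Guitar",
    "Bass", "Strings", "Ensemble", "Brass",
    "Reed", "Pipe", "Synth Lead", "Synth Pad",
    "Synth Effects", "Ethnic", "Percussive", "Sound Effects",
]

GM_PERCUSSION_CHANNEL = 10  # user-facing channel number reserved for percussion


def gm_family(program: int) -> str:
    """Return the GM family for a zero-based program number (0-127)."""
    if not 0 <= program <= 127:
        raise ValueError("program must be 0-127")
    return GM_FAMILIES[program // 8]
```

Many manuals list programs as 1 to 128, so a displayed program number has to be reduced by one before it is passed to `gm_family`.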

The GM standard eliminates variations in note mapping. Some manufacturers had disagreed over which note number should represent Middle C, but GM specifies that note number 69 plays A 440, which in turn fixes Middle C as note number 60. GM-compatible devices are required to respond to velocity (intensity), aftertouch, and pitch bend, to be set to specified default values at startup, and to support certain controller numbers, such as those for the sustain pedal, as well as Registered Parameter Numbers. A simplified version of GM, called "GM Lite", is used in mobile phones and other devices with limited processing capacity.
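The relationship between note numbers and pitch can be worked through in Python. This sketch assumes equal-tempered tuning and takes note number 69 as A 440, which places Middle C nine semitones lower at note number 60; the function name is invented for this example.

```python
A4_NOTE_NUMBER = 69   # note number that plays A 440
A4_FREQUENCY_HZ = 440.0
MIDDLE_C = 60


def note_to_frequency(note: int) -> float:
    """Equal-tempered frequency in Hz for a MIDI note number (0-127)."""
    if not 0 <= note <= 127:
        raise ValueError("note must be 0-127")
    return A4_FREQUENCY_HZ * 2 ** ((note - A4_NOTE_NUMBER) / 12)


middle_c_hz = note_to_frequency(MIDDLE_C)  # approximately 261.63 Hz
```

Each step of 12 note numbers doubles or halves the frequency, so note 81 plays 880 Hz and note 57 plays 220 Hz.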

GS, XG and GM2

In practice, GM's 128 instruments proved insufficient, and both Roland and Yamaha developed extended sets. Roland's General Standard, or GS, includes additional sounds, drum kits, and effects, provides a "bank select" command for accessing them, and uses MIDI Non-Registered Parameter Numbers (NRPNs) to access its new features. Yamaha developed its own system, Extended General MIDI, or XG, in 1994. XG similarly offers additional sounds, drum kits, and effects, but uses standard controllers instead of NRPNs for editing and increases polyphony to 32 voices. Both the GS and XG standards are compatible with the GM specification, but they are not compatible with each other. Neither standard has been adopted by manufacturers other than its creator, but both are commonly supported by music software.

Member companies of Japan's Association of Musical Electronics Industry (AMEI) developed General MIDI Level 2 in 1999. GM2 maintains compatibility with GM but increases polyphony to 32 voices, standardizes several controller numbers, such as those for sostenuto and soft pedal (una corda), as well as RPNs and Universal System Exclusive messages, and incorporates the MIDI Tuning Standard. GM2 is the basis of the instrument selection mechanism in Scalable Polyphony MIDI (SP-MIDI), a MIDI variant for low-power devices that allows polyphony to scale according to the processing power of the device.

MIDI Tuning Standard

Most MIDI synthesizers use equal-tempered tuning. The MIDI Tuning Standard (MTS), created in 1992, also allows alternate tunings. MTS allows microtunings to be loaded from a bank of up to 128 patches and allows real-time adjustment of the pitch of notes. Manufacturers are not required to support the standard, and those who do are not required to implement all of its functions.

MIDI Time Code

A sequencer can drive a MIDI system with its internal clock, but when a system contains multiple sequencers, they need to be synchronized to a common clock. MIDI Time Code (MTC), developed by Digidesign, implements SysEx messages developed specifically for timing purposes, and it can be converted to and from the SMPTE time code standard. MIDI Clock is tempo-based, whereas SMPTE time code is based on frames per second and is independent of tempo. MTC, like SMPTE code, includes position information and can correct itself if a timing pulse is lost. MIDI interfaces such as Mark of the Unicorn's MIDI Timepiece can convert between SMPTE and MTC.
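The difference between tempo-based and frame-based timing can be shown numerically. The Python sketch below assumes two figures that are not stated above: MIDI Clock is sent 24 times per quarter note, and MTC sends four quarter-frame messages per frame. The function names are invented for this example.

```python
CLOCKS_PER_QUARTER_NOTE = 24   # MIDI Clock resolution (assumed from MIDI 1.0)
QUARTER_FRAMES_PER_FRAME = 4   # MTC quarter-frame messages per SMPTE frame


def midi_clock_interval_ms(tempo_bpm: float) -> float:
    """Spacing between MIDI Clock messages; it changes with the tempo."""
    return 60_000 / (tempo_bpm * CLOCKS_PER_QUARTER_NOTE)


def mtc_quarter_frame_interval_ms(frames_per_second: float) -> float:
    """Spacing between MTC quarter-frame messages; tempo plays no part."""
    return 1000 / (frames_per_second * QUARTER_FRAMES_PER_FRAME)


slow = midi_clock_interval_ms(60)    # clock ticks are further apart
fast = midi_clock_interval_ms(120)   # and closer together at a faster tempo
mtc = mtc_quarter_frame_interval_ms(25)
```

Doubling the tempo halves the MIDI Clock interval, while the MTC interval depends only on the frame rate.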

MIDI Machine Control

MIDI Machine Control (MMC) consists of a set of SysEx commands that operate the transport controls of hardware recording devices. MMC allows a sequencer to send "Start", "Stop" and "Record" commands to a connected tape recorder or hard disk recording system, and to fast-forward or rewind the device so that it starts playback at the same point as the sequencer. No synchronization data is involved, although the devices may synchronize via MTC.
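A transport command of this kind can be sketched in Python as a short SysEx message. The byte layout and command codes below follow the commonly documented MMC format (a Universal Real-Time SysEx header, a device ID, the MMC command sub-ID, and a one-byte command); they are not given in the text above and should be checked against the MMC specification before use. The function name is invented for this example.

```python
# Commonly documented MMC command codes (assumed; verify against the MMC spec).
MMC_COMMANDS = {
    "stop": 0x01,
    "play": 0x02,
    "fast_forward": 0x04,
    "rewind": 0x05,
    "record": 0x06,  # "record strobe"
}

ALL_DEVICES = 0x7F  # device ID that addresses every MMC-capable device


def mmc_message(command: str, device_id: int = ALL_DEVICES) -> bytes:
    """Build a six-byte MMC transport command as a SysEx message."""
    if not 0 <= device_id <= 0x7F:
        raise ValueError("device_id must be 0-127")
    return bytes([0xF0, 0x7F, device_id, 0x06, MMC_COMMANDS[command], 0xF7])
```

A sequencer would send `mmc_message("play")` to start every attached recorder, or pass a specific `device_id` to address just one.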

MIDI Show Control

MIDI Show Control (MSC) is a set of SysEx commands for remotely sequencing and cueing show control devices such as lighting, music and sound playback, and motion control systems. Applications include stage productions, museum exhibits, recording studio control systems, and amusement park attractions.

MIDI timestamping

One solution to MIDI timing problems is to mark MIDI events with the times at which they are to be played, and to buffer them in the MIDI interface ahead of time. Sending data early reduces the likelihood that a data-heavy passage will saturate the transmission link. Once stored in the interface, the data is no longer subject to timing issues associated with USB latency or operating system interrupts, and it can be transmitted with a high degree of accuracy. MIDI timestamping only works when the hardware and software both support it. MOTU's MTS, eMagic's AMT, and Steinberg's Midex 8 were implementations that were incompatible with each other, requiring users to own software and hardware made by the same company to gain the benefits. Timestamping is built into FireWire MIDI interfaces and into Core Audio in Mac OS X.

MIDI Sample Dump Standard

An unforeseen use of SysEx messages was to transport audio samples between instruments. SysEx is poorly suited to this purpose, since MIDI words are limited to seven bits of information, so an 8-bit sample requires two bytes to transmit instead of one. This led to the development of the Sample Dump Standard (SDS), which establishes a protocol for the transmission of samples. SDS was later extended with a pair of commands that allow sample loop point information to be transmitted without requiring the entire sample to be sent.
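The seven-bit limitation can be illustrated with a short Python sketch that splits an 8-bit sample across two data bytes and reassembles it. The left-justified layout used here is one plausible arrangement chosen for illustration; the exact packing used by the Sample Dump Standard should be taken from the specification, and the function names are invented for this example.

```python
def split_sample(sample: int) -> tuple[int, int]:
    """Split an 8-bit sample into two 7-bit data bytes (left-justified)."""
    if not 0 <= sample <= 0xFF:
        raise ValueError("sample must fit in 8 bits")
    first = sample >> 1            # upper seven bits
    second = (sample & 0x01) << 6  # remaining bit, placed at the top of 7 bits
    return first, second


def join_sample(first: int, second: int) -> int:
    """Rebuild the 8-bit sample from the two 7-bit data bytes."""
    return (first << 1) | (second >> 6)


first, second = split_sample(0xFF)
```

Both output bytes stay below 0x80, so neither can be mistaken for a status byte, but the transfer takes twice as many bytes as the raw sample data.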

Downloadable Sounds

The Downloadable Sounds (DLS) specification, created in 1997, allows mobile devices and computer sound cards to expand their wavetables with downloadable sound sets. The DLS Level 2 specification followed in 2006 and defined a standardized synthesizer architecture. The Mobile DLS standard calls for DLS banks to be combined with SP-MIDI as self-contained Mobile XMF files.

Alternative MIDI signal transport hardware

In addition to the original 31.25 kbit/s link carried on a five-pin DIN connector, other connectors have been used for the same electrical data, and MIDI streams are now commonly transmitted in different forms over USB, IEEE 1394 (FireWire), and Ethernet. Some samplers and hard disk recorders can also pass MIDI data to each other over SCSI.

USB and FireWire

In 1999, members of the USB-IF developed a standard for MIDI over USB, the "Universal Serial Bus Device Class Definition for MIDI Devices". MIDI over USB has become increasingly common as the other interfaces that had been used for MIDI connections (serial, joystick, etc.) have disappeared from personal computers. The Microsoft Windows, Macintosh OS X, and Apple iOS operating systems include drivers that support the "Universal Serial Bus Device Class Definition for MIDI Devices". Drivers are also available for Linux. Some manufacturers have chosen to implement a MIDI interface over USB that operates outside the class specification, using custom drivers.

Apple Computer developed the FireWire interface during the 1990s. It began appearing in digital video cameras towards the end of the decade and in models of the Apple G3 Macintosh computer in 1999. This proprietary interface was created for multimedia applications. Unlike USB, FireWire uses intelligent controllers that can handle its transmission without the attention of a main CPU. Like standardized MIDI devices, FireWire devices can communicate with each other without the intervention of a CPU or computer.

XLR Connectors

Octave-Plateau's Voyetra-8 synthesizer was the first to implement MIDI via XLR connectors instead of 5-pin DIN connectors. It was released before the advent of MIDI and was later retrofitted with a MIDI interface while keeping its XLR connector.

Serial, Parallel, and Joystick Ports

As the use of computers in studios grew, MIDI devices that could connect directly to a computer became available. These typically used the eight-pin Mini-DIN connector that Apple used for serial and printer ports before the introduction of the Power Macintosh G3 models. MIDI interfaces intended to be the centerpiece of a studio, such as the Mark of the Unicorn MIDI Time Piece, were made possible by a "fast" transmission mode that took advantage of these serial ports' ability to operate at 20 times the standard MIDI speed. Mini-DIN ports were built into some MIDI instruments in the late 1990s, allowing them to be connected directly to a computer. Some devices connected via the DB-25 parallel port, or through the joystick port found on some PC sound cards.

mLAN

Yamaha introduced the mLAN protocol in 1999. It was conceived as a local area network for musical instruments using FireWire as the transport, and was designed to carry multiple MIDI channels together with multichannel digital audio, data file transfers, and time code. mLAN was used in a number of Yamaha products, most notably digital mixers and the Yamaha Motif synthesizer, and in third-party products such as the PreSonus FIREStation and the Korg Triton Studio. No mLAN products have been released since 2007.

Ethernet

Implementing MIDI on a computer network provides network routing capabilities and the kind of high-bandwidth channel that earlier alternatives to MIDI, such as ZIPI, were intended to create. Proprietary implementations have existed since the 1980s, some of which use fiber optic cables for transmission. The Internet Engineering Task Force's open RTP MIDI specification is gaining industry support, because proprietary MIDI/IP protocols either require high licensing fees or offer no advantage over the original MIDI protocol other than speed. Apple has supported this protocol since Mac OS X 10.4, and a Windows driver based on Apple's implementation is available for Windows XP and newer versions.

OSC

The OpenSound Control (OSC) protocol was developed at the Center for New Music and Audio Technologies (CNMAT) at the University of California, Berkeley, and is supported by programs such as Reaktor, Max/MSP, and Csound, as well as by some controllers, including the Lemur Input Device. OSC can be transmitted over Ethernet connections, but it is not widely used as a studio solution and to date lacks broad hardware and software support. The larger size of OSC messages compared with MIDI messages makes it impractical for many mobile devices, and its speed advantages over MIDI are not perceptible when transmitting the same data. OSC is not proprietary, but it is not maintained by any standards organization.

Wireless MIDI

Systems for transmitting MIDI wirelessly have existed since the 1980s. Several commercial transmitters allow wireless transmission of MIDI and OSC signals via Wi-Fi and Bluetooth. iOS devices can function as MIDI control surfaces using Wi-Fi and OSC. An XBee radio can be used to build a wireless MIDI transmitter as a do-it-yourself project. Android devices can function as control surfaces over several different protocols via Wi-Fi and Bluetooth.

MIDI Versions

A new version of MIDI, tentatively named "HD Protocol" or "High-Definition Protocol", was announced as "HD-MIDI" in 2012. The proposed standard offers backward compatibility with MIDI 1.0 and is planned to support higher transmission speeds, allow plug-and-play device discovery and enumeration, and offer greater data range and resolution. The numbers of channels and controllers are to be increased, new kinds of events are to be added, and messages are to be simplified. Entirely new kinds of events, such as Note Update and Direct Pitch, are aimed at guitar controllers. Proposed physical layers include Ethernet-based protocols such as RTP MIDI and Audio Video Bridging. The HD Protocol and a User Datagram Protocol (UDP)-based transport are under review by the MMA's High-Definition Protocol Working Group (HDWG), which includes representatives from various companies. Prototype devices based on early drafts of the protocol have been shown privately at NAMM using both wired and wireless connections; however, it is uncertain whether the protocol will be adopted by the industry. As of 2015, the HD Protocol specifications were nearing completion and the MMA was developing licensing and product certification policies.

As the cost of data storage has fallen, MIDI music has been replaced by compressed audio in commercial products, making MIDI once again primarily a tool for music production. MIDI connectivity and a software synthesizer are still included in Windows, OS X, and iOS, but not in Android.

MIDI 2.0

The MIDI 2.0 standard was introduced at the Winter NAMM Show in Anaheim, California, on January 17, 2020, in a session titled "Strategic Overview and Introduction to MIDI 2.0" presented by representatives of Yamaha, Roli, Microsoft, Google, and the MIDI Association. This update adds two-way communication while maintaining compatibility with the previous standard.
