Graphics card

A graphics card or video card is an expansion card installed on a computer's motherboard that processes data coming from the processor and transforms it into information that an output device (for example a monitor, television or projector) can understand and display. These cards use a graphics processing unit, or GPU, a term that is often mistakenly used to refer to the graphics card itself. Graphics cards are also known as display adapters, video adapters, video cards, and graphics accelerator cards.

In general terms, a video card allows the user to play video games and to use photo-editing and filter applications such as:

- PhotoDirector.

- Canva.

- VSCO.

- Adobe Lightroom.

- Snapseed.

It is also used by video editing applications such as Adobe Premiere or DaVinci Resolve and by computer-aided design applications such as AutoCAD, Revit and ArchiCAD. Graphics cards are also used to mine cryptocurrencies.

Some graphics cards have offered added functionality such as television tuning, video capture, MPEG-2 and MPEG-4 decoding, or even IEEE 1394 (FireWire), mouse, light pen or joystick connectors.

The most common graphics cards are those available for computers compatible with the IBM PC, due to their enormous popularity, but other architectures also make use of this type of device.

Graphics cards are not the exclusive domain of IBM-compatible personal computers (PCs); they have also been used in devices such as the Commodore Amiga (connected via Zorro II and Zorro III slots), Apple II, Apple Macintosh, Spectravideo SVI-328 and MSX equipment, and in modern game consoles such as the Nintendo Switch, PlayStation 4 and Xbox One.

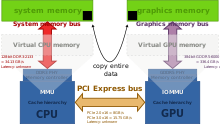

Dedicated graphics and integrated graphics

As an alternative to video cards, video hardware can be integrated into the motherboard or the central processing unit (CPU). Both approaches can be understood as integrated graphics. Almost all computers with integrated graphics on the motherboard allow the integrated graphics to be disabled in the BIOS, and have a PCI or PCI Express (PCIe) slot for adding a higher-performance card in place of the integrated graphics. The ability to disable integrated graphics sometimes also makes it possible to keep using a motherboard whose graphics component has failed. Sometimes both types of graphics (dedicated and integrated) can be used simultaneously for different functions. The main advantages of integrated graphics are cost, compactness, simplicity, and low power consumption. Their performance disadvantage is that they share resources with the CPU, such as system RAM. A graphics card has its own RAM, its own cooling system, and dedicated voltage regulators, with all components specifically designed to process video images. Upgrading to dedicated graphics takes work off the CPU and system RAM, so not only will graphics processing be faster, but overall computer performance might improve as well.

The two largest CPU makers, AMD and Intel, are investing more in processors with integrated graphics. One of the main reasons is that graphics processors are very good at parallel processing, and putting them on CPU chips allows their parallel processing ability to be used for different computing tasks in addition to graphics processing. AMD refers to these CPUs as APUs, and they are usually sufficient for casual gaming.

Components

GPUs

The GPU (short for "graphics processing unit") is a processor (like the CPU) dedicated to processing graphics; its purpose is to lighten the workload of the central processor, and it is therefore optimized for floating-point calculation, which predominates in 3D functions. Most of the information in a graphics card specification refers to the characteristics of the GPU, since it is the most important part of the card and the main determinant of its performance. Three of the most important characteristics are the core clock frequency, which can range from 825 MHz on low-end cards to 1600 MHz (and even higher) on high-end ones; the number of shaders; and the number of pipelines (vertex or fragment) in charge of translating a 3D image made up of vertices and lines into a 2D image made up of pixels.

General elements of a GPU:

- Shaders: the most notable element of a GPU's power. These unified shaders are called CUDA cores by NVIDIA and stream processors by AMD. They are a natural evolution of the older pixel shaders (in charge of rasterizing textures) and vertex shaders (in charge of the geometry of objects), which previously worked independently. The number of cores varies from one GPU model to another. Unified shaders can act as either vertex or pixel shaders depending on the GPU's workload; they appeared in 2006 and 2007 with NVIDIA's G80 chips (GeForce 8 series) and the R600 chips (Radeon HD 2000 series) from AMD, formerly ATi, drastically increasing power over the previous families.

- ROP (raster operations pipeline): these units output the data processed by the GPU to the screen, and are also responsible for filters such as anti-aliasing (edge smoothing).

VRAM

Video random-access memory (VRAM) consists of memory chips that store the data the GPU works with and transfer it to and from the GPU. They do not determine the maximum performance of the graphics card, but low specifications can limit the power of the GPU.

There are two types of graphics memory:

- Dedicated: the graphics card or GPU has this memory exclusively for itself; this is the most efficient arrangement and gives the best results.

- Shared: the graphics hardware uses part of the system's random-access memory (RAM), to the detriment of the memory available to the rest of the system.

The graphics memory of a card is described by the following characteristics:

- Capacity: determines the maximum amount of data and textures that can be held at once; insufficient capacity translates into delays while waiting for that data to be evicted. However, it is a very overrated value, recurrently used in marketing to suggest that the performance of a graphics card is measured by the capacity of its memory. It is an important metric at high resolutions (above 1440p) and with multiple monitors, since each image takes much more space in VRAM.

- Memory interface: also called the data bus, it is the product of the bit width of each chip and the number of chips. Together with the memory frequency, it is a decisive factor in the amount of data that can be transferred in a given time, called bandwidth. By analogy, the memory interface is the width of a highway, that is, the number of lanes and so the number of vehicles that can travel side by side. It is measured in bits.

- Memory frequency: the frequency at which the memory can transfer data; it complements the memory interface in determining the total bandwidth. Continuing the highway analogy, the memory frequency is the maximum speed of the vehicles, so a higher frequency moves more goods in the same period of time. Memory frequency is measured in hertz (its actual frequency), and memory technologies with ever higher frequencies keep being designed; the main ones are listed in the following table:

| Technology | Effective frequency (MHz) | Bandwidth (GB/s) |
|---|---|---|
| GDDR | 166 - 950 | 1.2 - 30.4 |
| GDDR2 | 533 - 1000 | 8.5 - 16 |
| GDDR3 | 700 - 1700 | 5.6 - 54.4 |
| GDDR4 | 1600 - 1800 | 64 - 86.4 |
| GDDR5 | 3200 - 7000 | 24 - 448 |
| GDDR6 | 12000 - 14000 | 48 - 846 |
| HBM | 500 | 512 |
| HBM2 | 500 - 1700 | 1.2 TB/s |

- Bandwidth (BW): the rate at which data can be transferred per unit of time. Insufficient bandwidth significantly limits the power of the GPU. It is usually measured in gigabytes per second (GB/s).

- Its general formula is the product of the memory interface (expressed in bits) and the effective memory frequency (expressed in gigahertz), divided by 8 to convert bits to bytes:

$$\mathrm{BW\,(GB/s)} = \frac{\mathrm{Bus\,(bits)} \times \mathrm{Frequency\,(GHz)}}{8}$$

- For example, for a graphics card with a 256-bit memory interface and an effective frequency of 4200 MHz (4.2 GHz), the bandwidth is:

$$\mathrm{BW} = \frac{256 \times 4.2}{8} = 134.4\ \mathrm{GB/s}$$
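
The bandwidth calculation can also be written as a small function. This is only a minimal sketch in Python; the function name `memory_bandwidth_gb_s` and its parameters are illustrative and do not come from any vendor tool.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, effective_freq_mhz: float) -> float:
    """Peak memory bandwidth in GB/s.

    Implements: bus width (bits) x effective frequency (GHz) / 8,
    where the division by 8 converts bits to bytes.
    """
    effective_freq_ghz = effective_freq_mhz / 1000.0  # MHz -> GHz
    return bus_width_bits * effective_freq_ghz / 8.0


if __name__ == "__main__":
    # The worked example from the text: 256-bit interface at 4200 MHz effective.
    print(f"{memory_bandwidth_gb_s(256, 4200):.1f} GB/s")  # prints "134.4 GB/s"
```

The result is a theoretical peak; the bandwidth actually achieved depends on the workload.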

An important part of a video adapter's memory is the z-buffer, which manages the depth coordinates of images in 3D graphics; that is, it is the memory space where the depth of each pixel is stored.
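
To illustrate how a z-buffer is used, the following sketch keeps only the fragment closest to the viewer for each pixel. It is a simplified software model, not how any particular GPU implements it; the function name, the `(x, y, depth, color)` fragment format and the convention that a smaller depth means "closer" are all assumptions made for the example.

```python
def render_with_z_buffer(width, height, fragments, far=float("inf")):
    """Resolve visibility per pixel with a depth (z) buffer.

    fragments: iterable of (x, y, depth, color) tuples.
    Assumed convention: a smaller depth value is closer to the viewer.
    """
    depth = [[far] * width for _ in range(height)]    # the z-buffer itself
    color = [[None] * width for _ in range(height)]   # the visible color per pixel
    for x, y, z, c in fragments:
        inside = 0 <= x < width and 0 <= y < height
        # Depth test: overwrite only if this fragment is closer than the stored one.
        if inside and z < depth[y][x]:
            depth[y][x] = z
            color[y][x] = c
    return color, depth


if __name__ == "__main__":
    frags = [(0, 0, 5.0, "red"), (0, 0, 2.0, "blue"), (0, 0, 9.0, "green")]
    colors, depths = render_with_z_buffer(2, 2, frags)
    print(colors[0][0], depths[0][0])
```

Whatever order the three fragments arrive in, the pixel ends up with the color of the one at depth 2.0.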

RAMDAC

The random-access memory digital-to-analog converter (RAMDAC) converts the digital signals produced by the computer into an analog signal that the monitor can interpret. Depending on the number of bits it handles at once and the speed at which it does so, the converter can support different monitor refresh rates (working at 75 Hz or more is recommended, and never below 60 Hz). Given the growing popularity of digital monitors, the RAMDAC is becoming obsolete, since analog conversion is no longer necessary, although many cards retain a VGA connection for compatibility.

Space occupied by stored textures

The space occupied by an image displayed on the monitor is given by its resolution and color depth; for example, an uncompressed image in standard Full HD format, with 1920 × 1080 pixels and 32-bit color depth, occupies 66,355,200 bits, that is, 8,294,400 bytes (approximately 7.91 MiB).
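
The same arithmetic can be checked with a short Python sketch. The function name `framebuffer_size` is hypothetical, and the calculation assumes an uncompressed image with no padding between rows.

```python
def framebuffer_size(width: int, height: int, bits_per_pixel: int):
    """Return (bits, bytes, MiB) for one uncompressed image.

    Assumes no row padding or compression; 1 MiB = 2**20 bytes.
    """
    bits = width * height * bits_per_pixel
    size_bytes = (bits + 7) // 8          # round up to whole bytes
    return bits, size_bytes, size_bytes / 2**20


if __name__ == "__main__":
    bits, size_bytes, mib = framebuffer_size(1920, 1080, 32)
    print(bits, size_bytes, round(mib, 2))  # prints "66355200 8294400 7.91"
```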

Outputs

The most common connection systems between the graphics card and the display device (e.g. a monitor or television) are:

- VGA: the Video Graphics Array (VGA), together with the Super Video Graphics Array (SVGA or Super VGA), was the analog standard of the 1990s. Designed for cathode-ray tube (CRT) displays, it suffers from electrical noise and distortion in the digital-to-analog conversion and from sampling errors when evaluating the pixels to send to the monitor. It uses a 15-pin D-sub connector (DE-15). It is still in widespread use, although it is clearly losing ground to DVI.

- DVI: the Digital Visual Interface (DVI) is the digital replacement for VGA, designed to obtain the highest display quality on digital displays or projectors. It connects by means of pins. It avoids distortion and noise by mapping each pixel to be displayed directly onto one pixel of the monitor at its native resolution. It is increasingly adopted, although it competes with HDMI, and it cannot transmit audio.

- HDMI: the High-Definition Multimedia Interface (HDMI) is a proprietary technology that transmits uncompressed high-definition digital video and audio over a single cable. It connects by means of contacts. It was initially designed for televisions rather than monitors, so it does not turn off the screen when the signal stops, and monitors may have to be switched off manually.

- DisplayPort: a graphics card port created by VESA and a rival of HDMI; it carries high-resolution video and audio. Its advantages are that it is royalty-free, so devices can incorporate it without paying licence fees, and that its connector has latches that prevent the cable from being disconnected accidentally. More and more graphics cards are adopting it, although it is still in minority use. A reduced version of the connector, Mini DisplayPort, is widely used on graphics cards with many simultaneous outputs (as many as five).

Other connection systems are less widespread, either because they are in minority use, were never widely implemented, or are obsolete:

- S-Video (separate video): implemented mostly on TV tuner cards or on chips with NTSC/PAL video support; it is becoming obsolete.

- Composite video: a very low-resolution analog signal carried over an RCA connector (Radio Corporation of America). Completely disused on graphics cards, although it is still used for TV.

- Component video: an analog system for transmitting high-definition video, also used for projectors; its quality is comparable to that of SVGA and it has three connectors (Y, Cb and Cr). Previously used on PCs and high-end workstations, it has been relegated to TVs and video game consoles.

- DA-15 with RGB connector: used mostly on old Apple Macintosh computers. It is no longer used.

- Digital TTL with DE-9 connector: used by early IBM cards (MDA, CGA and variants, EGA and a very few VGA cards). Completely obsolete.

Motherboard interfaces

The connection systems between the graphics card and the motherboard have been mainly (in chronological order):

- MSX Slot: 8-bit bus used on MSX computers.

- ISA: 16-bit bus architecture at 8 MHz, dominant during the 1980s; it was created in 1981 for the IBM PC.

- Zorro II: used in the Commodore Amiga 2000 and Commodore Amiga 1500.

- Zorro III: used in the Commodore Amiga 3000 and Commodore Amiga 4000.

- NuBus: used in Apple Macintosh computers.

- Processor Direct Slot: used in Apple Macintosh computers.

- MCA: introduced in 1987 as IBM's attempt to replace ISA. It was a 32-bit bus running at 10 MHz, but it was incompatible with earlier buses.

- EISA: introduced in 1988 as the competition's response to IBM; 32-bit, 8.33 MHz, and compatible with earlier boards.

- VESA: an extension of ISA that overcame the 16-bit restriction, doubling the bus width and running at 33 MHz.

- PCI: the bus that displaced the previous ones from 1993 onwards; with a width of 32 bits and a speed of 33 MHz, it allowed connected devices to be configured dynamically, without adjusting jumpers by hand. PCI-X was a version that increased the bus width to 64 bits and its speed to 133 MHz.

- AGP: a dedicated bus, 32 bits wide like PCI; introduced in 1997, its initial version increased the speed to 66 MHz.

- PCI Express (PCIe): a serial interface that began competing with AGP in 2004; by 2006 it offered double the bandwidth of AGP. It is revised regularly, with each revision multiplying its bandwidth: versions run from 1.0 to 6.0, the latter released in 2022. It should not be confused with PCI-X, a version of PCI.

The attached table shows the most relevant characteristics of some of these interfaces.

| Bus | Width (bits) | Frequency (MHz) | Bandwidth (MB/s) | Port type |
|---|---|---|---|---|
| ISA XT | 8 | 4.77 | 8 | Parallel |
| ISA AT | 16 | 8.33 | 16 | Parallel |
| MCA | 32 | 10 | 20 | Parallel |
| EISA | 32 | 8.33 | 32 | Parallel |
| VESA | 32 | 40 | 160 | Parallel |
| PCI | 32-64 | 33 to 100 | 132 to 800 | Parallel |
| AGP 1x | 32 | 66 | 264 | Parallel |
| AGP 2x | 32 | 133 | 528 | Parallel |
| AGP 4x | 32 | 266 | 1000 | Parallel |
| AGP 8x | 32 | 533 | 2000 | Parallel |
| PCIe x1 | 1*32 | 25/50 | 100/200 | Serial |
| PCIe x4 | 1*32 | 25/50 | 400/800 | Serial |
| PCIe x8 | 1*32 | 25/50 | 800/1600 | Serial |
| PCIe x16 | 1*32 | 25/50 | 1600/3200 | Serial |
| PCIe x16 2.0 | 1*32 | 25/50 | 3200/6400 | Serial |

Cooling devices

Because of the workloads they are subjected to, graphics cards reach very high temperatures. If this is not taken into account, the heat generated can cause the device to fail, freeze or even be damaged. To avoid this, cooling devices are fitted to remove excess heat from the card.

There are two types:

- Heat sink: a passive device (without moving parts and therefore silent); made of a metal that conducts heat very well, it draws heat away from the card. Its efficiency depends on its structure and total surface area, so the greater the cooling demand, the larger the surface of the heat sink must be.

- Fan: an active device (with moving parts); it removes the heat given off by the card by moving the surrounding air. On its own, a fan is less efficient than a heat sink, and its moving parts produce noise.

Although different, both types of devices are compatible with each other and are usually mounted together on graphics cards; a heatsink above the GPU extracts heat, and a fan above it moves hot air away from the assembly.

Liquid cooling is a technique that uses water instead of heat sinks and fans (inside the case), achieving excellent results in terms of temperature and offering enormous possibilities for overclocking. It is usually carried out with sealed water circuits.

Water, like any liquid coolant, has a higher heat capacity than air. Based on this principle, the idea is to extract the heat generated by the computer's components using water as the medium, cool the water once it is outside the case, and then reintroduce it.

Power supply

Until recently, powering graphics cards was not a big problem; however, the current trend is for new cards to consume more and more power. Although power supplies become more powerful every day, the shortfall lies in what the PCIe slot can provide, which is only 75 W by itself. For this reason, graphics cards that consume more than the PCIe slot can supply include a PCIe power connector that allows a direct connection between the power supply and the card, without going through the motherboard and, therefore, without going through the PCIe slot.

Even so, it is predicted that before long graphics cards may need their own power supply, turning the combination into an external device.

Historical overview

The history of graphics cards begins in the late 1960s, when computers moved from using printers as their display element to using monitors. The first cards could only display text at 40x25 or 80x25, but the appearance of the first graphics chips, such as the Motorola 6845, made it possible to start giving equipment based on the S-100 or Eurocard bus graphics capabilities. Together with the cards that added a television modulator, they were the first to be called video cards.

The success of home computers and the first game consoles meant that, to lower costs (mainly because they were closed designs), these chips were integrated into the motherboard. Even for computers that already came with a graphics chip, 80-column cards were marketed, which added a text mode of 80x24 or 80x25 characters, mainly to run CP/M programs (as with the Apple II and Spectravideo SVI-328).

Interestingly, the video card that came with the IBM PC, whose open design inherited from the Apple II would popularize the concept of the interchangeable graphics card, was a text-only card. The Monochrome Display Adapter (MDA), developed by IBM in 1981, worked in text mode and could display 25 lines of 80 characters on the screen. It had 4 KB of video memory, so it could only work with one page of memory. It was used with monochrome monitors, normally green.

From there, various graphics controllers followed, summarized in the attached table.

| Standard | Year | Text mode | Graphics mode | Colors | Memory |
|---|---|---|---|---|---|
| MDA | 1981 | 80×25 | - | 1 | 4 KiB |
| CGA | 1981 | 80×25 | 640×200 | 4 | 16 KiB |
| HGC | 1982 | 80×25 | 720×348 | 1 | 64 KiB |
| EGA | 1984 | 80×25 | 640×350 | 16 | 256 KiB |
| IBM 8514 | 1987 | 80×25 | 1024×768 | 256 | - |
| MCGA | 1987 | 80×25 | 320×200 | 256 | - |
| VGA | 1987 | 720×400 | 640×480 | 256 | 256 KiB |
| SVGA | 1989 | 80×25 | 800×600 | 256 | 1 MiB |
| XGA | 1990 | 80×25 | 1024×768 | 65,000 | 2 MiB |

The Video Graphics Array (VGA) was massively accepted, which led companies such as ATI, Cirrus Logic and S3 Graphics to build on it to improve the resolution and the number of colors. Thus the Super Video Graphics Array (SVGA) standard was born. With this standard, cards reached 2 MB of video memory and resolutions of 1024 × 768 pixels at 256 colors.

The PC's competitors, the Commodore Amiga 2000 and Apple Macintosh, instead reserved this possibility for professional expansions, almost always integrating the GPU (which easily outperformed the PC graphics cards of the time) on their motherboards. This situation persisted until the appearance of the Peripheral Component Interconnect (PCI) bus, which put PC cards on a par with the internal buses of their competitors by eliminating the bottleneck represented by the Industry Standard Architecture (ISA) bus. Although PCs still lagged in efficiency (with the same S3 ViRGE GPU, what was an advanced graphics card in a PC became a professional 3D accelerator in a Commodore Amiga with a Zorro III slot), mass manufacturing (which substantially lowered costs) and the adoption of the PCI bus by other platforms caused VGA graphics chips to begin spreading beyond the PC market.

In 1995, the evolution of graphics cards took a major turn with the appearance of the first 2D/3D cards, made by Matrox, Creative, S3, and ATI, among others. These cards complied with the SVGA standard, but incorporated 3D functions.

In 1997, 3dfx released the Voodoo graphics chip, with great computing power and new 3D effects (mip mapping, z-buffering, antialiasing, etc.). From that point on, a series of graphics card releases followed, such as the Voodoo2 from 3dfx and the TNT and TNT2 from NVIDIA. These cards became so powerful that the PCI port they were connected to ran short of bandwidth. Intel developed the Accelerated Graphics Port (AGP) to solve the bottleneck that was beginning to appear between the processor and the card.

From 1999 to 2002, NVIDIA dominated the graphics card market (even buying most of 3dfx's assets) with its GeForce range. In that period, improvements focused on 3D algorithms and the speed of graphics processors. However, memory also needed to become faster, so DDR memory was incorporated into graphics cards. Video memory capacities in that period went from 32 MB for the GeForce to 64 and 128 MB for the GeForce 4.

Most sixth-generation and subsequent video game consoles use graphics chips derived from the most powerful 3D accelerators of their time. Apple Macintoshes have had chips from NVIDIA and ATI since the first iMac, and PowerPC models with a PCI or AGP bus can use PC graphics cards with CPU-agnostic BIOSes.

In 2006 and thereafter, NVIDIA and ATI (that same year bought by AMD) shared the market leadership with their GeForce and Radeon series of graphics chips, respectively. GeForce and Radeon are examples of series of graphics processors.

Old Types of Graphics Cards

MDA Card

The Monochrome Display Adapter (MDA) was released by IBM with 4 KiB of memory, exclusively for TTL monitors (which displayed the classic amber or green characters). It had no graphics modes, and its only resolution was that of its text mode (80x25) with 14x9 dot characters, with no possibility of configuration.

Basically this card uses the video controller to read from the ROM the dot matrix to be displayed and it is sent to the monitor as serial data. The lack of graphics processing should not be surprising, since there were no applications on these early PCs that could really take advantage of a good video system. Virtually everything was limited to information in text mode.

This type of card is quickly identified as it includes (or included in its day) a communication port for the printer.
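
The relationship between the MDA's 4 KiB of memory and its single 80x25 text page can be checked with a small sketch. It assumes that each character cell takes two bytes (one for the character code and one for its attribute), which is an assumption added for illustration rather than something stated above; the function names are hypothetical.

```python
def text_page_bytes(columns: int, rows: int, bytes_per_cell: int = 2) -> int:
    """Memory needed for one text page.

    Assumption: 2 bytes per cell (character code + attribute byte).
    """
    return columns * rows * bytes_per_cell


def pages_that_fit(memory_bytes: int, columns: int, rows: int) -> int:
    """Number of complete text pages that fit in the given video memory."""
    return memory_bytes // text_page_bytes(columns, rows)


if __name__ == "__main__":
    print(text_page_bytes(80, 25))            # prints 4000
    print(pages_that_fit(4 * 1024, 80, 25))   # prints 1
```

Under that assumption, one 80x25 page needs 4000 bytes, so 4 KiB (4096 bytes) holds exactly one page.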

CGA Card

The Color Graphics Adapter (CGA; also expanded as color graphics array, depending on the source) was launched in 1981, also by IBM, and became very widespread. It used 8x8 dot character matrices on displays of 25 rows and 80 columns, although it only used 7×7 dots to draw the characters. This detail made it impossible to display underlined characters, so underlining was replaced by a different intensity for the character in question. In graphics mode it supported resolutions up to 640 × 200 pixels. It had 16 KiB of memory and was only compatible with RGB and composite monitors. Despite its superiority over MDA, many users preferred the latter, since the dot pitch of CGA monitors was larger. Color was handled digitally, with three bits plus one more for intensity. This gave 8 colors with two intensities each, that is, a total of 16 different tones, although not all of them were available at every resolution, as shown in the attached table.

This card had a fairly common glitch known as the snow effect. This issue was random in nature and consisted of snow appearing on the screen (bright, intermittent dots that distorted the image). So much so that some BIOSes of the time included the snow removal option in their settings.
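
The color scheme described for the CGA (three color bits plus one intensity bit) can be enumerated as follows. This is an illustrative sketch only: the bit order used here (intensity in bit 3, then red, green and blue) is an assumption, and the function name is hypothetical.

```python
def rgbi_palette():
    """Enumerate all combinations of 3 color bits (R, G, B) and 1 intensity bit.

    Assumed bit layout of each 4-bit value: I R G B (bit 3 down to bit 0).
    """
    palette = []
    for value in range(16):
        palette.append({
            "index": value,
            "i": (value >> 3) & 1,  # intensity bit
            "r": (value >> 2) & 1,  # red bit
            "g": (value >> 1) & 1,  # green bit
            "b": value & 1,         # blue bit
        })
    return palette


if __name__ == "__main__":
    colors = rgbi_palette()
    base = {(c["r"], c["g"], c["b"]) for c in colors}
    print(len(base), len(colors))  # prints "8 16"
```

The three color bits give 8 base colors, and the intensity bit doubles them to 16 tones.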

HGC Card

The Hercules Graphics Card (HGC), popularly known simply as Hercules (the name of the company that produced it), was launched in 1982 and was a great success, becoming a video standard despite lacking support from IBM's BIOS routines. Its resolution was 720 × 348 dots in monochrome, with 64 KiB of memory. As there is no color, the memory only has to store one bit for each dot on the screen, using 30.58 KiB for graphics mode (1 bit × 720 × 348) and the rest for text mode and other functions. The screen was read out at a frequency of 50 Hz, managed by the 6845 video controller. Characters were drawn in 14x9 dot matrices.

Designers, manufacturers and assemblers

| GPU designers | AMD | NVIDIA |
|---|---|---|
| Card assemblers | ASUS | ASUS |
| | CLUB3D | CLUB3D |
| | GIGABYTE | GIGABYTE |
| | MSI | MSI |
| | POWERCOLOR | EVGA |
| | GECUBE | POINT OF VIEW |
| | XFX | GAINWARD |
| | SAPPHIRE | ZOTAC |
| | HIS | ECS ELITEGROUP |
| | DIAMOND | PNY |
| | - | SPARKLE |
| | - | GALAXY |
| | - | PALIT |

In the graphics card market, three types of company must be distinguished:

- GPU designers: they exclusively design the GPU. The two most important are:

- AMD (Advanced Micro Devices), which acquired ATI Technologies (ATi);

- NVIDIA;

- Intel also stands out, alongside NVIDIA and AMD, for the GPUs integrated into motherboard chipsets.

- Other designers such as Matrox or S3 Graphics have a very small market share. All of them commission manufacturers to produce a certain number of chips from a design.

- GPU manufacturers: they manufacture the chips, cut from wafers, and supply them to the assemblers. TSMC and GlobalFoundries are clear examples.

- Assemblers: they integrate the GPUs supplied by the manufacturers with the rest of the card, which is of their own design. This is why cards with the same chip have different shapes or connections, or show slight differences in performance, especially in the case of modified or factory-overclocked graphics cards.

The attached table lists the two chip designers and some of the card assemblers they work with.

Graphics API

At the programmer level, working with a graphics card is complicated; thus, application programming interfaces (APIs) emerged that abstract the complexity and diversity of graphics cards. The two most important are:

- Direct3D: released by Microsoft in 1996, it is part of the DirectX library. It is proprietary and works only on Windows. It is used by most video games marketed for Windows. The current version is 12.

- OpenGL: created by Silicon Graphics in the early 1990s; it is free, open and cross-platform. It is used mainly in CAD, virtual reality and flight simulation applications. Version 4.3 is currently available.

OpenGL is being displaced from the video game market by Direct3D, although it has undergone many improvements in recent months.

Graphic effects

Some of the techniques or effects commonly used or generated by graphics cards are:

- Anti-aliasing: a technique to avoid aliasing, the staircase or sawtooth edges that appear when curves and sloping lines are drawn in a discrete, finite space such as the pixels of a monitor.

- Shader: processing of pixels and vertices for lighting effects, natural phenomena and multi-layered surfaces, among others.

- High dynamic range (HDR): a technique for representing the wide range of intensity levels of real scenes (from direct light to dark shadows). It is an evolution of the bloom effect, although unlike bloom it does not allow edge smoothing.

- Texture mapping: a technique that adds detail to the surfaces of models without increasing the complexity of the models.

- Motion blur: a blurring effect caused by the speed of a moving object.

- Depth of field blur: a blurring effect caused by the distance of an object.

- Lens flare: imitation of the flares that light sources produce on camera lenses.

- Fresnel effect (specular reflection): reflections on a material that depend on the angle between the surface normal and the viewing direction. The greater the angle, the more reflective the surface.

- Tessellation: consists of multiplying the number of polygons used to represent certain geometric figures so that they do not look completely flat. This feature was introduced in the DirectX 11 API.
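
As an illustration of how tessellation multiplies the number of polygons, the sketch below splits each triangle into four smaller ones through the midpoints of its edges. This is a deliberately simple scheme chosen for the example, with hypothetical function names; real GPU tessellation is configurable and usually also displaces the new vertices so that the surface stops being flat, which this sketch does not do.

```python
def midpoint(a, b):
    """Midpoint of two points given as coordinate tuples."""
    return tuple((p + q) / 2 for p, q in zip(a, b))


def subdivide(triangle):
    """Split one triangle into four using the midpoints of its edges."""
    a, b, c = triangle
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]


def tessellate(triangles, levels):
    """Apply the subdivision `levels` times; each level multiplies the count by 4."""
    for _ in range(levels):
        triangles = [small for tri in triangles for small in subdivide(tri)]
    return triangles


if __name__ == "__main__":
    tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
    print(len(tessellate([tri], 3)))  # prints 64
```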

Common mistakes

- Confusing the GPU with the graphics card. Although the GPU is very important, not every GPU or graphics adapter comes on a card, and the GPU is not the only factor that determines quality and performance. That is, the GPU does determine the maximum performance of the card, but that performance can be held back by other elements that are not up to its level (e.g. low bandwidth).

- Assuming that video cards are exclusive to IBM-compatible PCs. These cards are also used on computers that are not PCs, even ones without an Intel or AMD processor, and their chips are used in video game consoles.

- Confusing the GPU designer with the card brand. Currently, NVIDIA and AMD (which acquired ATi Technologies) are the largest designers of graphics chips for PCs. They only design the graphics chip (GPU). Companies such as TSMC or GlobalFoundries then manufacture the GPUs, and finally the GPUs are assembled onto a PCB with memory for sale to the public by ASUS, POV, XFX, Gigabyte, Sapphire and other assemblers (although the designer releases the reference version first, and the assemblers bring out their own versions later).

- Outside the PC world, in other devices such as smartphones, most GPUs are integrated into a system on a chip (SoC) together with the processor and the memory controller.
