Exponential distribution

In probability theory and statistics, the exponential distribution is a continuous distribution used to model waiting times until the occurrence of a certain event. Like the geometric distribution, it has the memorylessness property. The exponential distribution is a particular case of the gamma distribution.

Definition

Density Function

A continuous random variable $X$ is said to have an exponential distribution with parameter $\lambda > 0$, written $X \sim \operatorname{Exp}(\lambda)$, if its density function is

- $f_X(x) = \lambda e^{-\lambda x}$

for $x \geq 0$.

Distribution Function

Its cumulative distribution function is given by

- $F_X(x) = 1 - e^{-\lambda x}$

for $x \geq 0$.
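The density and distribution functions can be evaluated directly. The following is a minimal sketch using only the Python standard library; the function names `exp_pdf` and `exp_cdf` are illustrative and not part of any particular package, and both functions are assumed to return 0 for negative arguments.

```python
import math


def exp_pdf(x: float, lam: float) -> float:
    """Density of Exp(lam) at x: lam * exp(-lam * x) for x >= 0, else 0."""
    if lam <= 0:
        raise ValueError("lam must be positive")
    return lam * math.exp(-lam * x) if x >= 0 else 0.0


def exp_cdf(x: float, lam: float) -> float:
    """Distribution function of Exp(lam) at x: 1 - exp(-lam * x) for x >= 0, else 0."""
    if lam <= 0:
        raise ValueError("lam must be positive")
    # -expm1(-lam * x) equals 1 - exp(-lam * x) and stays accurate for small x.
    return -math.expm1(-lam * x) if x >= 0 else 0.0


if __name__ == "__main__":
    lam = 2.0
    print(exp_pdf(0.0, lam))                # density at the origin equals lam
    print(exp_cdf(math.log(2) / lam, lam))  # distribution function at the median
```

Using `math.expm1` rather than `1 - math.exp(...)` is a design choice that avoids loss of precision when $\lambda x$ is close to zero.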

Alternative Parameterization

The exponential distribution is sometimes parameterized in terms of the scale parameter $\beta = 1/\lambda$, in which case the density function is

- $f_X(x) = \frac{1}{\beta} e^{-x/\beta}$

for $x \geq 0$.

Survival Function

In addition, the distribution has a survival function $S$, which is the complement of the distribution function.

- $S(x) = \operatorname{P}[X > x] = \begin{cases} 1 & \text{for } x < 0 \\ e^{-\lambda x} & \text{for } x \geq 0 \end{cases}$

Properties

If $X$ is a random variable such that $X \sim \operatorname{Exp}(\lambda)$, then the following hold.

The mean of the random variable $X$ is

- $\operatorname{E}[X] = \frac{1}{\lambda}$

The variance of the random variable $X$ is

- $\operatorname{Var}[X] = \frac{1}{\lambda^{2}}$

The $n$-th moment of the random variable $X$ is

- $\operatorname{E}[X^{n}] = \frac{n!}{\lambda^{n}}$

The moment-generating function of $X$, for $t < \lambda$, is given by

- $M_X(t) = \left(1 - \frac{t}{\lambda}\right)^{-1} = \frac{\lambda}{\lambda - t}$
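The moment formula can be checked numerically. The sketch below compares the closed form $n!/\lambda^{n}$ with a midpoint-rule approximation of $\int_0^\infty x^{n} \lambda e^{-\lambda x}\,dx$. The function names, the truncation point `upper` and the step count are illustrative assumptions, not part of the article.

```python
import math


def exp_moment(n: int, lam: float) -> float:
    """Closed-form n-th raw moment of Exp(lam): n! / lam**n."""
    return math.factorial(n) / lam ** n


def numeric_moment(n: int, lam: float, upper: float = 60.0, steps: int = 100_000) -> float:
    """Midpoint-rule approximation of the n-th moment.

    The integral is truncated at upper / lam, where exp(-lam * x) is negligible.
    """
    b = upper / lam
    h = b / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * h  # midpoint of the i-th subinterval
        total += x ** n * lam * math.exp(-lam * x)
    return total * h


if __name__ == "__main__":
    lam = 2.0
    for n in (1, 2, 3):
        print(n, exp_moment(n, lam), numeric_moment(n, lam))
    # The variance follows from the first two moments: E[X^2] - E[X]^2 = 1 / lam**2.
    print(exp_moment(2, lam) - exp_moment(1, lam) ** 2)
```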

Scale

If $X$ is a random variable such that $X \sim \operatorname{Exp}(\lambda)$ and $c > 0$ is a constant, then

- $cX \sim \operatorname{Exp}\left(\frac{\lambda}{c}\right)$
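The scaling property can be illustrated by simulation. If $cX \sim \operatorname{Exp}(\lambda/c)$, then a sample of $cX$ should have mean close to $c/\lambda$ and variance close to $(c/\lambda)^{2}$. The sketch below uses `random.expovariate` from the Python standard library. The helper name, the seed and the sample size are arbitrary choices made for illustration.

```python
import random
import statistics


def scaled_exponential_sample(lam: float, c: float, size: int, seed: int = 0) -> list:
    """Draw `size` values of c * X with X ~ Exp(lam), using a seeded generator."""
    rng = random.Random(seed)
    return [c * rng.expovariate(lam) for _ in range(size)]


if __name__ == "__main__":
    lam, c = 2.0, 3.0
    sample = scaled_exponential_sample(lam, c, 100_000)
    # For Exp(lam / c) the mean is c / lam and the variance is (c / lam) ** 2.
    print("sample mean:", statistics.fmean(sample), "theory:", c / lam)
    print("sample variance:", statistics.pvariance(sample), "theory:", (c / lam) ** 2)
```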

Memorylessness

Let $X$ be a random variable such that $X \sim \operatorname{Exp}(\lambda)$. Then for any $x, y \geq 0$

- $\operatorname{P}[X > x + y \mid X > y] = \operatorname{P}[X > x]$

This can be easily shown as

- $\begin{aligned} \operatorname{P}[X > x + y \mid X > y] &= \frac{\operatorname{P}[X > x + y \cap X > y]}{\operatorname{P}[X > y]} \\ &= \frac{\operatorname{P}[X > x + y]}{\operatorname{P}[X > y]} \\ &= \frac{e^{-\lambda (x + y)}}{e^{-\lambda y}} \\ &= e^{-\lambda x} \\ &= \operatorname{P}[X > x] \end{aligned}$
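The memorylessness identity can also be checked numerically through the survival function $S(x) = e^{-\lambda x}$, since the conditional probability equals $S(x + y)/S(y)$. A minimal sketch follows, with illustrative function names.

```python
import math


def survival(x: float, lam: float) -> float:
    """Survival function of Exp(lam): P[X > x]."""
    return math.exp(-lam * x) if x >= 0 else 1.0


def conditional_survival(x: float, y: float, lam: float) -> float:
    """P[X > x + y | X > y], computed as S(x + y) / S(y) for x, y >= 0."""
    return survival(x + y, lam) / survival(y, lam)


if __name__ == "__main__":
    lam = 0.5
    for x, y in [(1.0, 0.0), (1.3, 2.7), (4.0, 10.0)]:
        # The two printed values agree up to floating-point rounding.
        print(conditional_survival(x, y, lam), survival(x, lam))
```

Having already waited $y$ units of time does not change the probability of waiting at least $x$ more, so the second column does not depend on $y$.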

Quantiles

The quantile function (the inverse of the cumulative distribution function) for a random variable $X \sim \operatorname{Exp}(\lambda)$ is given by

- $F^{-1}(p) = \frac{-\ln(1 - p)}{\lambda}, \qquad 0 \leq p < 1$

so the quartiles are:

The first quartile is

- $F^{-1}\left(\frac{1}{4}\right) = -\frac{\ln\left(\frac{3}{4}\right)}{\lambda} = \frac{1}{\lambda} \ln\left(\frac{4}{3}\right)$

The median is

- $F^{-1}\left(\frac{1}{2}\right) = -\frac{\ln\left(\frac{1}{2}\right)}{\lambda} = \frac{\ln(2)}{\lambda}$

And the third quartile is given by

- $F^{-1}\left(\frac{3}{4}\right) = -\frac{\ln\left(\frac{1}{4}\right)}{\lambda} = \frac{\ln(4)}{\lambda}$
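The quantile function translates directly into code. The sketch below reproduces the three quartiles; the function name `exp_quantile` is an illustrative choice.

```python
import math


def exp_quantile(p: float, lam: float) -> float:
    """Quantile function of Exp(lam): -ln(1 - p) / lam for 0 <= p < 1."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must satisfy 0 <= p < 1")
    if lam <= 0:
        raise ValueError("lam must be positive")
    # log1p(-p) equals ln(1 - p) and is accurate for p close to 0.
    return -math.log1p(-p) / lam


if __name__ == "__main__":
    lam = 2.0
    print("first quartile:", exp_quantile(0.25, lam), math.log(4 / 3) / lam)
    print("median:        ", exp_quantile(0.50, lam), math.log(2) / lam)
    print("third quartile:", exp_quantile(0.75, lam), math.log(4) / lam)
```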

Example

A typical example of the exponential distribution is the length of the interval of a continuous variable, such as time, that elapses between two consecutive events whose counts follow a Poisson distribution.

- The time spent in a call center until receiving the first call of the day could be modeled as an exponential random variable.

- The time interval between earthquakes (of a certain magnitude) follows an exponential distribution.

- For a machine that produces wire, the number of meters of wire produced until a defect is found could be modeled as an exponential random variable.

- In systems reliability, a device with constant failure rate follows an exponential distribution.

Related Distributions

- If $X \sim \operatorname{Laplace}(\mu, \beta^{-1})$, then $|X - \mu| \sim \operatorname{Exp}(\beta)$.

- If $X \sim \operatorname{Pareto}(1, \lambda)$, then $\ln(X) \sim \operatorname{Exp}(\lambda)$.

- If $X \sim \Gamma(1, \lambda)$, then $X \sim \operatorname{Exp}(\lambda)$.

- If $X_1, X_2, \dots, X_n$ are independent random variables such that $X_i \sim \operatorname{Exp}(\lambda)$, then $\sum_{i=1}^{n} X_i \sim \Gamma(n, \lambda)$, where $\Gamma(n, \lambda)$ is the Erlang distribution with parameters $n \in \mathbb{N}$ and $\lambda$, that is, $\sum_{i=1}^{n} X_i \sim \operatorname{Erlang}(n, \lambda)$. In other words, the sum of $n$ independent exponential random variables with parameter $\lambda$ is a random variable with an Erlang distribution.
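The last relationship can be illustrated by simulation. The sketch below compares the empirical distribution function of a sum of $n$ independent $\operatorname{Exp}(\lambda)$ draws with the Erlang distribution function $1 - e^{-\lambda x} \sum_{k=0}^{n-1} (\lambda x)^{k}/k!$. That closed form is a standard result not stated in this article. The function names, the seed and the number of trials are illustrative assumptions.

```python
import math
import random


def erlang_cdf(x: float, n: int, lam: float) -> float:
    """Distribution function of Erlang(n, lam), i.e. Gamma with integer shape n and rate lam."""
    if x < 0:
        return 0.0
    partial = sum((lam * x) ** k / math.factorial(k) for k in range(n))
    return 1.0 - math.exp(-lam * x) * partial


def empirical_cdf_of_sum(x: float, n: int, lam: float, trials: int = 50_000, seed: int = 0) -> float:
    """Fraction of simulated sums of n independent Exp(lam) draws that are <= x."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        total = sum(rng.expovariate(lam) for _ in range(n))
        if total <= x:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    n, lam, x = 3, 2.0, 1.5
    print("Erlang CDF:   ", erlang_cdf(x, n, lam))
    print("empirical CDF:", empirical_cdf_of_sum(x, n, lam))
```

With $n = 1$ the Erlang distribution function reduces to $1 - e^{-\lambda x}$, which matches the third item in the list.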

Statistical Inference

Suppose $X$ is a random variable such that $X \sim \operatorname{Exp}(\lambda)$ and $x_1, x_2, \dots, x_n$ is a sample from $X$.

Parameter Estimation

The maximum likelihood estimator of $\lambda$ is constructed as follows:

The likelihood function is given by

- $\mathcal{L}(\lambda) = \prod_{i=1}^{n} \lambda e^{-\lambda x_i} = \lambda^{n} \exp\left(-\lambda \sum_{i=1}^{n} x_i\right) = \lambda^{n} \exp\left(-\lambda n \bar{x}\right)$

where

- $\bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i$

is the sample mean.

Taking the logarithm of the likelihood function

- $\ln \mathcal{L}(\lambda) = \ln\left(\lambda^{n} \exp\left(-\lambda n \bar{x}\right)\right) = n \ln \lambda - \lambda n \bar{x}$

and differentiating with respect to $\lambda$, we get

- $\frac{d \ln \mathcal{L}}{d\lambda} = \frac{d}{d\lambda}\left(n \ln \lambda - \lambda n \bar{x}\right) = \frac{n}{\lambda} - n \bar{x}$

Setting this derivative equal to $0$, we get the estimator $\hat{\lambda}$ given by

- $\hat{\lambda} = \frac{1}{\bar{x}}$

The estimator $\hat{\lambda}$ is a biased estimator:

- $\operatorname{E}[\hat{\lambda}] \neq \lambda$
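The estimator and its bias can be illustrated with a small simulation. The sketch below computes $\hat{\lambda} = 1/\bar{x}$ and averages it over many samples of size $n$. The value $n\lambda/(n-1)$ used for comparison is a standard result for $n \geq 2$ that is not stated in this article, and the function names, seed and replication count are illustrative assumptions.

```python
import random
import statistics


def exp_mle(sample) -> float:
    """Maximum likelihood estimate of lambda: the reciprocal of the sample mean."""
    if len(sample) == 0:
        raise ValueError("sample must not be empty")
    return 1.0 / statistics.fmean(sample)


def mean_of_mle(lam: float, n: int, replications: int = 50_000, seed: int = 0) -> float:
    """Average of the MLE over many simulated samples of size n from Exp(lam)."""
    rng = random.Random(seed)
    estimates = [
        exp_mle([rng.expovariate(lam) for _ in range(n)])
        for _ in range(replications)
    ]
    return statistics.fmean(estimates)


if __name__ == "__main__":
    lam, n = 2.0, 5
    print("true lambda:        ", lam)
    print("average of the MLE: ", mean_of_mle(lam, n))
    print("n * lam / (n - 1):  ", n * lam / (n - 1))
```

For small $n$ the average of the estimates sits noticeably above the true $\lambda$, and the gap shrinks as $n$ grows.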

Application

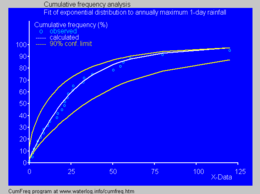

In hydrology, the exponential distribution is used to analyze extreme values of variables such as monthly and yearly maximums of daily precipitation.

- The blue figure illustrates an example of fitting the exponential distribution to ranked annual maximum daily rainfall, also showing the 90% confidence belt, based on the binomial distribution. The observations are shown with plotting positions, as part of the cumulative frequency analysis.

Computational methods

Pseudo-Random Number Generator

To obtain pseudo-random numbers from a random variable $X$ with exponential distribution and parameter $\lambda$, an algorithm based on the inverse transform method is used.

To generate a value of $X \sim \operatorname{Exp}(\lambda)$ from a random variable $U \sim \operatorname{U}(0,1)$, the following algorithm is used

- $X = -\frac{1}{\lambda} \ln(1 - U)$

using the fact that if $U \sim \operatorname{U}(0,1)$ then $1 - U \sim \operatorname{U}(0,1)$, so a more efficient version of the algorithm is

- $X = -\frac{1}{\lambda} \ln(U)$
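The inverse transform method can be sketched in a few lines of Python. The function names are illustrative. This sketch uses the $1 - U$ form because Python's `random.random()` returns values in $[0, 1)$, so $1 - U$ is never zero, whereas taking $\ln(U)$ directly could hit $\ln(0)$.

```python
import math
import random


def sample_exponential(lam: float, rng: random.Random) -> float:
    """Draw one value of X ~ Exp(lam) by the inverse transform method."""
    if lam <= 0:
        raise ValueError("lam must be positive")
    u = rng.random()                  # uniform on [0, 1)
    return -math.log(1.0 - u) / lam   # 1 - u lies in (0, 1], so the log is finite


def sample_many(lam: float, size: int, seed: int = 0) -> list:
    """Draw `size` independent values with a seeded generator for reproducibility."""
    rng = random.Random(seed)
    return [sample_exponential(lam, rng) for _ in range(size)]


if __name__ == "__main__":
    lam = 4.0
    draws = sample_many(lam, 100_000)
    print("sample mean:", sum(draws) / len(draws), "theory:", 1 / lam)
```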

Software

Computer software can be used to fit a probability distribution, including the exponential one, to a data series.
