Distributed computing

Distributed computing is a model for solving massive computing problems using a large number of computers organized in clusters embedded in a distributed telecommunications infrastructure.

In its volunteer form, distributed computing allows large calculations to be carried out using thousands of volunteer computers. Software previously downloaded by each user receives data over the Internet, performs the calculations locally and, once it has a result, sends it back to the server. Such projects, which are almost always run on a non-profit basis, spread the data to be processed among thousands of volunteer computers in order to achieve processing rates that are often higher than those of supercomputers, at a much lower cost.
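The cycle described above (the server splits the data, volunteers compute, results are sent back and combined) can be sketched as follows. This is a minimal illustration under stated assumptions, not the protocol of any real project: the function names are hypothetical, the workload (a sum of squares) is arbitrary, and threads stand in for volunteer machines that would normally be reached over the Internet.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_work_units(numbers, unit_size):
    """Server side: cut the full input into independent work units."""
    return [numbers[i:i + unit_size] for i in range(0, len(numbers), unit_size)]


def volunteer_compute(work_unit):
    """Volunteer side: process one unit locally and return a partial result."""
    return sum(x * x for x in work_unit)


def run_project(numbers, unit_size=4, volunteers=3):
    """Server side: hand out units, collect partial results, combine them."""
    units = split_into_work_units(numbers, unit_size)
    # Threads stand in for volunteer machines (an assumption of this sketch).
    with ThreadPoolExecutor(max_workers=volunteers) as pool:
        partial_results = list(pool.map(volunteer_compute, units))
    return sum(partial_results)


total = run_project(list(range(10)))   # sum of the squares of 0..9
```

The work units are independent of each other, which is what allows them to be processed in any order and on any machine.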

The benefit of distributed computing is that processing can be assigned to the location or locations where it can be performed most efficiently. Take distributed computing in a company as an example: each office can organize and manipulate its data to meet its specific needs, and share the result with the rest of the organization. It also makes better use of equipment and improves the balancing of processing within an application. The latter is of great importance, since in some applications there is simply no single machine capable of performing all the processing.

To describe this, we can talk about "processes". A process performs two types of operations: its initial statement and the external requests made by other processes.

• The initial statement and the external requests are executed one at a time, interleaved with each other. An operation continues until it terminates or waits for a condition to become true.

• Then another operation is started (as a result of an external request). When this operation, in turn, terminates or waits, the process will start another operation (requested by another process) or resume a previous operation (as a result of a condition becoming true).

• This interleaving of the initial statement and external requests goes on forever. Even if the initial statement terminates, the process continues to exist and continues to accept external requests.
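The interleaving described above can be sketched with Python generators. This is only an illustration under assumptions: the scheduler `run_process`, the convention that an operation yields a condition function when it has to wait, and the example operations are all hypothetical. Unlike the process described in the text, the sketch stops when no operations are left instead of waiting forever for new requests.

```python
from collections import deque


def run_process(initial_statement, external_requests):
    """Run operations one at a time; switch only when one terminates or waits.

    Each operation is a generator. It yields a zero-argument condition
    function when it must wait, and is resumed once the condition is true.
    """
    ready = deque([initial_statement] + list(external_requests))
    waiting = []                                   # (condition, operation) pairs
    while ready or waiting:
        # Resume previously started operations whose condition became true.
        for pair in list(waiting):
            if pair[0]():
                waiting.remove(pair)
                ready.append(pair[1])
        if not ready:
            raise RuntimeError("all operations are waiting")
        operation = ready.popleft()
        try:
            condition = next(operation)            # runs until it waits ...
            waiting.append((condition, operation))
        except StopIteration:
            pass                                   # ... or terminates


events = []
box = []


def initial_statement():
    events.append("initial starts")
    yield lambda: bool(box)                        # wait until the box is filled
    events.append("initial resumes with " + box[0])


def put_request(item):
    box.append(item)
    events.append("put done")
    yield from ()                                  # never waits; still a generator


run_process(initial_statement(), [put_request("x")])
```

In this run the initial statement starts, waits, lets the external request execute, and is resumed only after the request has made its condition true.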

Introduction

From the beginning of the modern computer age (1945), until about 1985, only centralized computing was known. From the middle of the 1980s, two fundamental technological advances appeared:

- Development of powerful and inexpensive microprocessors with architectures of 8, 16, 32 and 64 bits.

- Development of high-speed local area networks (LANs), with the possibility of connecting hundreds of machines at transfer rates of millions of bits per second (Mbit/s).

Distributed systems then appeared, in contrast to centralized systems.

A distributed system is a system in which hardware or software components are located on computers linked by a network.

Operating systems for distributed systems have seen important developments, but there is still a long way to go. Users can access a wide variety of computing resources:

- Hardware and software.

- Distributed among a large number of connected computer systems.

Characteristics of a distributed system:

- Concurrency. In a computer network, the concurrent execution of programs is the norm.

- No global clock. Coordination and synchronization therefore need their own notion of time.

- Independent failures.

- Network isolation (network)

- A computer stopping (hardware)

- Abnormal termination of a program (software)

Historical Evolution

The concept of message passing originated in the late 1960s. Although general-purpose multiprocessors and computer networks did not exist at the time, the idea arose of organizing an operating system as a collection of communicating processes, where each process has a specific function in which others cannot interfere (no shared variables). In the 1970s the first widespread distributed systems were born: local area networks (LANs) such as Ethernet.

This event immediately spawned a host of languages, algorithms, and applications, but it was not until LAN prices fell that client-server computing was developed. In the late 1960s, the Advanced Research Projects Agency Network (ARPANET) was created. This network was the backbone of the Internet until 1990, after completing the transition to the TCP/IP protocol, which began in 1983. In the early 1970s, ARPANET email was born, which is considered the first large-scale distributed application.

Research on distributed algorithms has been conducted over the last two decades and considerable progress has been made in the maturity of the field, especially during the 1980s. Originally the research was highly oriented towards applications of the algorithms in wide area networks (WANs), but nowadays mathematical models have been developed that allow the application of the results and methods to broader classes of distributed environments.

There are several journals and annual conferences that specialize in results relating to distributed algorithms and distributed computing. The first conference on the subject was the Symposium on Principles of Distributed Computing (PODC) in 1982, whose proceedings are published by the Association for Computing Machinery (ACM). The International Workshop on Distributed Algorithms (WDAG) was first held in Ottawa in 1985 and later in Amsterdam (1987) and Nice (1989). Since then, its proceedings have been published by Springer-Verlag in the series Lecture Notes in Computer Science. In 1998, the name of this conference changed to Distributed Computing (DISC). The annual Symposium on Theory of Computing (STOC) and Symposium on Foundations of Computer Science (FOCS) cover all fundamental areas of computing, and often include papers on distributed computing. The STOC proceedings are published by the ACM and those of FOCS by the IEEE. The Journal of Parallel and Distributed Computing (JPDC), Distributed Computing and Information Processing Letters (IPL) regularly publish papers on distributed algorithms.

This is how distributed systems were born.

Comparison of Parallel-Distributed Computing

As with distributed systems, there is no clear definition of parallel systems. The only obvious thing is that any system in which events can be partially ordered would be considered a parallel system, and this would therefore include all distributed systems and shared-memory systems with multiple threads of control. In this way, it could be said that distributed systems form a subclass of parallel systems, in which the state spaces of the processes do not overlap.

Some distinguish parallel systems from distributed systems based on their goals: parallel systems focus on increasing performance, while distributed systems focus on partial fault tolerance.

From another point of view, in parallel computing, all processors can have access to a shared memory to exchange information among themselves, and in distributed computing, each processor has its own private memory, where information is exchanged by passing messages between processors.
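The two styles of exchanging information can be contrasted in a small sketch. It is only an illustration: threads inside one Python program stand in for processors, and the names `shared`, `mailbox` and the two worker functions are hypothetical. In the first style all workers update one shared memory cell; in the second, each worker keeps its data private and communicates only by sending a message.

```python
import queue
import threading

chunks = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]

# Style 1: shared memory. Every worker updates the same cell under a lock.
shared = {"total": 0}
shared_lock = threading.Lock()


def add_to_shared(values):
    for value in values:
        with shared_lock:                 # protects the read-modify-write
            shared["total"] += value


# Style 2: message passing. Each worker keeps private state and sends a message.
mailbox = queue.Queue()


def send_partial_sum(values):
    private_sum = sum(values)             # private memory, invisible to others
    mailbox.put(private_sum)              # the only way to share it


for worker_body in (add_to_shared, send_partial_sum):
    workers = [threading.Thread(target=worker_body, args=(chunk,)) for chunk in chunks]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()

message_total = sum(mailbox.get() for _ in chunks)
```

Both styles arrive at the same total; what differs is whether coordination happens through a lock on common memory or through the messages themselves.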

You could then say that parallel computing is a particular form of tightly coupled distributed computing, and distributed computing is a loosely coupled form of parallel computing.

The figure to the right illustrates the difference between distributed and parallel systems. Figure (a) is a schematic of a distributed system, represented as a network topology in which each node is a computer and each line connecting the nodes is a communication link. Figure (b) shows the same distributed system in more detail: each computer has its own local memory, and information can only be exchanged by passing messages from one node to another using the available communication links. Figure (c) shows a parallel system in which each processor has direct access to a shared memory.

Architectures

Before going further, it is necessary to define some terms: concurrency, parallel computing and distributed computing. Concurrency refers to the use of different resources at the same time.

Parallel computing aims to solve a problem quickly using many processors at the same time, while a distributed system is a collection of independent computers that are connected and interact by sharing resources. In parallel computing, an application is divided into subtasks that are processed at the same time. One application is processed at a time, and the goal is to speed up its execution. Such programs usually run on homogeneous architectures and may share memory.

Distributed computing, on the other hand, faces challenges in managing access to shared resources, in the heterogeneity of hardware, operating systems and programming languages, and in security. It is characterized by many computers, possibly in remote locations, running many applications at the same time, often for many users. Although a distributed system tries to appear to its users as a single machine, its computers do not share memory (in hardware).

Physical Models: Capture the hardware composition of a system in terms of computers and interconnection networks.

Architectural models: These describe the system in terms of the computational and communication tasks performed by the elements.

Fundamental models: Describe an abstract perspective for examining an individual aspect of a distributed system: the interaction model, the failure model and the security model.

Various hardware and software architectures are used in distributed computing. At low levels, the interconnection of multiple CPUs with some kind of network is necessary. At a higher level, it is necessary to interconnect the processes running on those CPUs with the help of some kind of communication system.

Distributed programming is usually framed in one of the following basic architectures: client-server, three-tier, n-tier or peer-to-peer; or in one of these categories: loose coupling or tight coupling.

- Client-server: Architectures in which smart clients contact the server to obtain data, then format it and show it to users. Data entered at the client is sent to the server when it represents a permanent change.

- Three-tier: Architectures that move the client intelligence to an intermediate tier so that stateless clients can be used. This simplifies the deployment of applications. Most web applications are three-tier.

- n-tier: Architectures that typically refer to web applications that forward their requests to other enterprise services. This type of application is largely responsible for the success of application servers.

- Peer-to-peer: Architectures in which there are no special machines that provide a service or manage the resources of the network. Instead, all responsibilities are evenly distributed among all machines, known as peers. Examples of this architecture include BitTorrent and the bitcoin network.

Another basic aspect of distributed computing architecture is the method of communication and coordination of work between concurrent processes. Through various message-passing protocols, processes can communicate directly with each other, typically in a master/slave relationship. Alternatively, a "database-centric" architecture can allow distributed computing to be done without any form of direct communication between processes, by using a shared database. The database-centric architecture, in particular, provides relational processing analytics in a schematic architecture that allows the live environment to be relayed. This enables distributed computing functions both inside and outside the parameters of a networked database.

Applications and Examples

There are numerous examples of distributed systems being used in everyday life in a variety of applications. Most systems are structured as client-server systems, in which the server machine is the one that stores the data or resources, and provides service to several geographically distributed clients. However, some applications do not depend on a central server, that is, they are peer-to-peer systems, whose popularity is increasing. Here are some examples of distributed systems:

- World Wide Web: a popular service that runs on the Internet. It allows documents on one computer to refer to textual or non-textual information stored on others. These references appear on the user's monitor, and when the user selects the one they want, the system fetches the item from a remote server using the appropriate protocols and presents the information on the client machine.

- Network file server: a local area network consists of a number of independent computers connected through high-speed links. In many local area networks, a separate machine on the network serves as a file server. Thus, when a user accesses a file, the operating system directs the request from the local machine to the file server, which checks the authenticity of the request and decides whether access can be granted.

- Bank network

- Peer-to-peer networks

- Process control systems: Industrial plants use controller networks to inspect production and maintenance.

- Sensor Networks: The reduction of the cost of equipment and the growth of wireless technology have given rise to new opportunities in the design of specific purpose distributed systems, such as sensor networks, where each node is a processor equipped with some sensors, and is able to communicate wirelessly with others. These networks can be used in a wide range of problems: the monitoring of the battlefield, the detection of biological and chemical attacks, home automation, etc.

- Grid computing: a form of distributed computing that supports parallel programming on a network of computers of variable size. At the low end, a grid may use only a fraction of the available computing resources, while at the high end it can combine millions of computers worldwide to work on extremely large projects. The objective is to solve difficult computing problems faster and at lower cost than conventional methods.

- Cluster: A cluster integrates two or more computers so that they work simultaneously on a particular task. Clusters began to be used in 1960 by IBM (International Business Machines Corporation) with the aim of increasing the efficiency of its processors. As already noted, a cluster is the connection of two or more computers in order to improve system performance during the execution of different tasks. In a cluster, each computer is called a "node", and there is no limit on how many nodes can be interconnected. The computers then act as a single system, working together in the processing, analysis and interpretation of data and information, and/or performing tasks simultaneously.

Models

Systems intended for use in real-world environments must be designed to function properly in the widest possible range of circumstances and in the face of potential difficulties and threats. The properties and design problems of distributed systems can be captured and discussed through the use of descriptive models. Each model is intended to provide an abstract and simplified but consistent description of a relevant aspect of distributed system design.

Some relevant aspects may be: the type of node and network, the number of nodes and their responsibility, and possible failures both in communication and between nodes. You can define as many models as characteristics you want to consider in the system, but this classification is usually followed:

Physical models

They represent the most explicit way to describe a system: they identify the physical composition of the system in computational terms, mainly considering heterogeneity and scale. We can identify three generations of distributed systems:

- First distributed systems: These emerged in the 1970s and 1980s in response to the first local networks (Ethernet). The objective was to provide quality of service (coordination and synchronization) from the outset, and they became the basic starting point for later systems.

- Scalable distributed systems on the Internet: These were born out of the great growth of the Internet in the 1990s. An environment of interconnected networks, better known as the Internet, began to be deployed, leading to a significant increase in the number of nodes and in the level of heterogeneity. Open standards such as CORBA and web services were defined.

- Contemporary distributed systems: Distributed systems have acquired new features that must also be taken into account when designing and implementing a system (extensibility, security, concurrency, transparency, etc.). The appearance of mobile computing, ubiquitous computing, cloud computing and cluster architectures makes it necessary to implement more complex computational elements subject to centralized control, offering a wide variety of applications and services on demand (thousands of nodes).

Architectural models

The general objective of this type of model is to guarantee the distribution of responsibilities between components of the distributed system and the location of these components. The main concerns are to determine the relationship between processes and to make the system reliable, adaptable and cost-effective.

- Client-Server: A software design model in which tasks are divided between providers of a resource or service, called servers, and requesters, called clients. Clients send requests to the server, which is another program, and the server returns a response. A server can in turn be a client of other servers. A good example is a web server, which is itself a client of a DNS server.

- A good practice is replication, better known as mirroring, to increase performance and availability. Another option is the use of proxy servers, which cache the most recently requested data.

- Derivatives: mobile code, mobile agents, network computers, thin clients and cloud computing.

- Peer-to-peer: Systems of equals, that is, all interconnected elements play the same role. This is a fully decentralized and self-organized service, which facilitates dynamic balancing of load (storage and processing) among the computers of the system.
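The proxy-server variant of the client-server model mentioned in the list above can be sketched as follows. The classes `OriginServer` and `CachingProxy` are hypothetical and run in a single program; a real proxy would sit on the network and would normally limit or expire its cache, which this sketch does not do.

```python
class OriginServer:
    """Hypothetical server that owns the data and counts the requests it serves."""

    def __init__(self, documents):
        self.documents = documents
        self.requests_served = 0

    def get(self, name):
        self.requests_served += 1
        return self.documents[name]


class CachingProxy:
    """A server towards its clients and, at the same time, a client of the origin."""

    def __init__(self, origin):
        self.origin = origin
        self.cache = {}

    def get(self, name):
        if name not in self.cache:            # cache miss: forward the request
            self.cache[name] = self.origin.get(name)
        return self.cache[name]               # cache hit: answer locally


origin = OriginServer({"index.html": "<h1>Hello</h1>"})
proxy = CachingProxy(origin)
first = proxy.get("index.html")
second = proxy.get("index.html")
```

The second request is answered from the cache, so the origin server is contacted only once. The proxy also shows how one program can play both roles, server and client.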

Layered architecture: This makes it possible to take advantage of the concept of abstraction. In this model, a complex system is divided into an arbitrary number of layers, where the upper layers make use of the services provided by the lower layers. In this way, one layer offers a service without the layers above or below being aware of the implementation details. A distributed service can be provided by one or more server processes, which interact with each other and with client processes to maintain a view of the entire system. Services are organized in layers because of the complexity of distributed systems.

A common structure of the layered architecture model is divided into four layers: the application and service layer, the middleware layer, the operating system layer, and the network and computer hardware layer.

The platform for distributed systems and applications is made up of the lowest-level hardware and software layers, including network hardware, computers, and the operating system of the distributed system. This layer provides services to the layers above, which are implemented independently on each computer.

Middleware is all software that is intended to mask the distributed system, providing a homogeneous appearance of the system.

The upper layer, intended for applications and services, contains the functionality provided to users; these are known as distributed applications.

Fundamental Models

All of the above models share a design and a set of requirements necessary to provide reliability and security of system resources. A fundamental model takes an abstract perspective, according to the analysis of individual aspects of the distributed system; it should contain only the essentials to be taken into account to understand and reason about some aspects of a system's behavior.

Interaction models

- They analyze the structure and sequence of communication between the elements of the system. The performance characteristics of the communication channel (latency, bandwidth, jitter) are important, and they make it impossible to predict the delay with which a message will arrive. In other words, there is no global time for the entire system, and execution is non-deterministic. Each computer has its own internal clock, so the local clocks of all the machines that make up the distributed system have to be synchronized. There are different mechanisms for this (NTP, GPS receivers, event management mechanisms).

- There are two types of interaction models:

- Synchronous: There are known bounds on the execution time of each process step, on the time needed to transmit a message and on clock drift rates. That is, bounds can be set to approximate the actual behavior of the system; in practice this is rarely possible, and estimates (timeouts) are used instead.

- Asynchronous: None of the bounds of the synchronous model are assumed. Most distributed systems are asynchronous.
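Since there is no global time, events can instead be ordered with logical clocks. The sketch below uses Lamport logical clocks, a well-known technique that the text above does not name; it is offered only as one possible illustration, and the class and variable names are hypothetical. Each process keeps a counter, attaches it to every message, and on receipt moves its counter past the sender's timestamp.

```python
class LamportClock:
    """Logical clock: one counter per process, no physical time involved."""

    def __init__(self):
        self.time = 0

    def local_event(self):
        self.time += 1
        return self.time

    def send(self):
        # Sending is an event; the timestamp travels with the message.
        self.time += 1
        return self.time

    def receive(self, message_time):
        # Move past the sender's timestamp so the receive is ordered after the send.
        self.time = max(self.time, message_time) + 1
        return self.time


p, q = LamportClock(), LamportClock()
p.local_event()                     # p's clock becomes 1
stamp = p.send()                    # p's clock becomes 2
q.local_event()                     # q's clock becomes 1
received_at = q.receive(stamp)      # q's clock becomes max(1, 2) + 1
```

The receive event always carries a larger timestamp than the matching send, which gives a consistent ordering of causally related events even though the two processes never compare physical clocks.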

Failure models

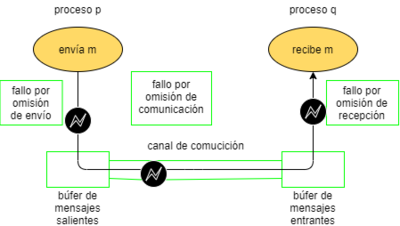

- Study and identification of the possible causes of failure. Failures may be classified according to the entity affected, giving process failures or communication failures, or according to the nature of the problem, giving omission failures or arbitrary failures:

- Process omission failures: the process crashes. In a fail-stop failure the process stops and remains stopped; with timeout-based detection the process is considered failed when it does not respond (only in synchronous models).

- Omission failures in communication: failures in sending (the message is not placed in the buffer) or in receiving (the process does not receive the message).

- Arbitrary or Byzantine failures: in a process (steps are omitted, incorrect processing steps are taken or responses to messages are arbitrarily omitted) or in communication channels (corruption of messages, delivery of non-existent or duplicated messages).

- Failure masking: Some detected failures can be hidden or made less severe. For example, a checksum turns an arbitrary failure into an omission failure.
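The checksum example of failure masking can be sketched as follows. The frame layout (payload followed by a 4-byte CRC-32) and the function names are assumptions made for illustration. A corrupted frame is discarded, so the receiver sees a missing message (omission) rather than a wrong one (arbitrary).

```python
import zlib


def send(payload: bytes) -> bytes:
    """Append a CRC-32 checksum (4 bytes, big-endian) to the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def receive(frame: bytes):
    """Return the payload, or None if the frame is corrupted.

    Dropping a corrupted frame turns an arbitrary failure (wrong content)
    into an omission failure (no message).
    """
    payload, checksum = frame[:-4], frame[-4:]
    if zlib.crc32(payload).to_bytes(4, "big") != checksum:
        return None
    return payload


frame = send(b"transfer 100")
corrupted = b"transfer 900" + frame[-4:]    # the channel altered one byte
intact_result = receive(frame)
corrupted_result = receive(corrupted)
```

An omission can then be handled by ordinary means such as a timeout and retransmission. A checksum of this kind protects against accidental corruption only, not against a deliberate attacker.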

Security models

- The security of a distributed system can be achieved by securing the processes and the channels used for their interactions, and by protecting the objects they encapsulate against unauthorized access. These models provide the basis for building a secure system out of resources of all kinds. To do this, it is key to postulate an enemy that is able to send any message to any process and to read or copy any message sent between a pair of processes.

Therefore, before communication between two processes can be called reliable, its integrity and validity must be ensured.
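One common way to check the integrity of a message in the presence of such an enemy is a message authentication code. The sketch below uses HMAC with SHA-256 from the Python standard library; the shared key and the function names are hypothetical, and how the two processes agree on the key is outside the scope of the sketch.

```python
import hashlib
import hmac

SHARED_KEY = b"demo-key-known-only-to-both-processes"   # hypothetical key


def sign(message: bytes) -> bytes:
    """Compute an authentication tag for the message."""
    return hmac.new(SHARED_KEY, message, hashlib.sha256).digest()


def verify(message: bytes, tag: bytes) -> bool:
    """Accept the message only if the tag matches (constant-time comparison)."""
    return hmac.compare_digest(sign(message), tag)


message = b"pay 10 to alice"
tag = sign(message)
tampered = b"pay 99 to alice"

genuine_ok = verify(message, tag)
tampered_ok = verify(tampered, tag)
```

This protects against messages that are forged or altered in transit. It does not stop the enemy from reading messages or from replaying an old one; those threats need encryption and freshness checks respectively.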

- Classification based on the structure of the network and memory.

When we talk about models in a distributed system, we refer mainly to using a computer to automate tasks of the question-answer type: when we ask the computer a question, it must give us an appropriate answer. In theoretical computer science, such tasks are known as computational problems.

Formally, a computational problem consists of instances together with a solution to each of them. The instances can be understood as the questions that we ask the computer, and the solutions as its answers to our questions.

Theoretical computer science seeks to determine which problems can be solved by a computer (computability theory) and how efficiently they can be solved (computational complexity theory).

Commonly, there are three points of view:

- Parallel algorithms in the shared memory model.

A parallel algorithm defines multiple operations to be executed at each step. This includes communication/coordination between processing units.

A clear example for this type of model would be the Parallel Random Access Machine (PRAM) model.

- - Parallel RAM

- - Shared central memory

- - Processing Unit Set (PUs)

- - The number of processing units and the size of the memory is unlimited.

PRAM model details

- - Lock-step execution

- Each step is a 3-phase cycle:

- Shared memory cells are read.

- Local computations are performed.

- Results are written to shared memory.

- All processing units run these phases synchronously.

- There is no need for explicit synchronization.

- The input of a PRAM program consists of n elements stored in M[0], ..., M[n−1].

- The output of a PRAM program consists of n′ elements stored in the memory cells that follow the input, M[n], ..., M[n+n′−1].

- An instruction is executed in a 3-phase cycle, any phase of which can be skipped if it is not needed:

- Read (from shared memory)

- Compute

- Write (to shared memory)

- About simultaneous access to memory:

- PRAM models:

- CREW: Simultaneous reading, exclusive writing

- CRCW: Simultaneous reading, simultaneous writing

- EREW: Exclusive Reading, Exclusive Writing

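The lock-step read, compute and write cycle can be illustrated by simulating a PRAM sequentially. The sketch below sums n values in about log2(n) rounds. The function name and the pairing scheme are choices made for this example; within a round, no two simulated processors read or write the same cell, so the program also satisfies the EREW restriction. For simplicity it overwrites the input cells instead of writing to separate output cells, and it assumes a non-empty input.

```python
def pram_sum(values):
    """Simulate a PRAM summing n values in lock-step rounds.

    Returns the total and the number of rounds used.
    """
    memory = list(values)                  # shared memory M[0..n-1]
    n = len(memory)
    rounds = 0
    stride = 1
    while stride < n:
        # One active processor per pair of cells that are `stride` apart.
        active = range(0, n - stride, 2 * stride)
        # Phase 1: every active processor reads its two cells.
        reads = [(i, memory[i], memory[i + stride]) for i in active]
        # Phase 2: local computation.
        results = [(i, a + b) for i, a, b in reads]
        # Phase 3: every processor writes to its own cell (no conflicts).
        for i, value in results:
            memory[i] = value
        stride *= 2
        rounds += 1
    return memory[0], rounds


total, rounds = pram_sum([1, 2, 3, 4, 5, 6, 7, 8])
```

Separating the three phases inside each round mirrors the model: all reads of a round see the memory as it was before any write of that round.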

There is much more information about this type of algorithm in a more summarized form in the following books.

- Parallel algorithms in the message-passing model.

- - In this model the programmer imagines several processors, each with its own memory space, and writes a program to run on each processor. So far so good, but parallel programming by definition requires cooperation among processors to solve a task, which requires some means of communication. The main point of the message-passing paradigm is that processes communicate by sending messages to one another. Therefore, the message-passing model has no concept of a shared memory space or of processors directly accessing each other's memory; anything other than message passing is outside the scope of the paradigm.

- - As far as the programs running on the individual processors are concerned, message-passing operations are just subroutine calls.

- - Models such as Boolean circuits and sorting networks are used. A Boolean circuit can be seen as a computer network: each gate is a computer that runs an extremely simple computer program. Similarly, a sorting network can be seen as a computer network: each comparator is a computer.

- Distributed algorithms in the message-passing model.

- The algorithm designer only chooses the computer program. All computers run the same program. The system must work properly regardless of the network structure.

- A commonly used model is a graph with a finite state machine per node.
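The idea that every node runs the same program, whatever the shape of the network, can be sketched with a simple flooding algorithm in which all nodes learn the largest value held by any node. The function name, the synchronous rounds and the assumption that the number of nodes is known (so that the loop can stop) are choices of this illustration.

```python
def flood_max(neighbors, initial_values):
    """Run the same program on every node in synchronous rounds.

    neighbors: dict mapping each node to a list of adjacent nodes.
    initial_values: dict mapping each node to its starting value.
    Returns the final state of every node.
    """
    state = dict(initial_values)               # one private state per node
    for _ in range(len(neighbors)):            # n rounds suffice if the graph is connected
        # Send phase: each node sends its current state to all its neighbours.
        inbox = {node: [] for node in neighbors}
        for node, adjacent in neighbors.items():
            for other in adjacent:
                inbox[other].append(state[node])
        # Receive phase: each node updates its state using only its own inbox.
        for node in neighbors:
            state[node] = max([state[node]] + inbox[node])
    return state


# A path network a - b - c - d; the node program never inspects this shape.
network = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
result = flood_max(network, {"a": 3, "b": 9, "c": 1, "d": 4})
```

Each node acts only on its own state and on the messages it receives, which is what allows the same code to work on a ring, a tree or any other connected topology.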

Advantages and disadvantages

Advantages

Geographically Distributed Environment: First, in many situations, the computing environment itself is geographically distributed. As an example, let's consider a banking network. Every bank is supposed to maintain the accounts of its customers. In addition, banks communicate with each other to monitor interbank transactions, or record fund transfers from geographically dispersed ATMs. Another common example of a geographically distributed computing environment is the Internet, which has profoundly influenced our way of life. User mobility has added a new dimension to geographic distribution.

Speed up: Secondly, there is a need to speed up the computations. Computing speed on traditional uniprocessors is rapidly approaching the physical limit. While VLIW and superscalar processors stretch the limit by introducing parallelism at the architectural level (instruction issue), the techniques do not scale much beyond a certain level. An alternative technique to obtain more computing power is to use multiple processors. Splitting an entire problem into smaller subproblems and assigning these subproblems to separate physical processors that can run simultaneously is a potentially attractive method of increasing computational speed. Furthermore, this approach promotes better scalability, where users can progressively increase computing power by purchasing additional processing elements or resources. This is often easier and cheaper than investing in a single super-fast uniprocessor.

Share resources: Third, there is a need to share resources. The user of computer A may want to use a laser printer attached to computer B, or the user of computer B may need some extra disk space available on computer C to store a large file. In a workstation network, Workstation A might want to use the idle computing power of Workstations B and C to increase the speed of a certain calculation. Distributed databases are good examples of software resource sharing, where a large database can be stored on multiple host machines and systematically updated or retrieved by a series of agent processes.

Fault tolerance: Finally, powerful uniprocessors, or computing systems built around a single central node, are prone to complete collapse when the processor fails. Many users consider this to be risky. However, they are willing to accept a partial degradation of system performance when a failure brings to a standstill only a fraction of the many processing elements or links in a distributed system. This is the essence of graceful degradation. The flip side of this approach is that by incorporating redundant processing elements into a distributed system, one can potentially increase the reliability or availability of the system. For example, in a system that has triple modular redundancy (TMR), three identical functional units are used to perform the same computation, and the correct result is determined by majority vote. In other fault-tolerant distributed systems, processors check each other at predefined checkpoints, allowing for automatic fault detection, diagnosis, and eventual recovery. Thus, a distributed system offers an excellent opportunity to incorporate fault tolerance and graceful degradation.
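The majority vote used in triple modular redundancy can be sketched in a few lines. The functions `healthy` and `faulty` are hypothetical stand-ins for the three functional units, and the voter here is ordinary software; in a real system the voter itself also has to be made reliable.

```python
from collections import Counter


def majority_vote(outputs):
    """Return the value produced by a strict majority of units, else raise."""
    value, count = Counter(outputs).most_common(1)[0]
    if count * 2 <= len(outputs):
        raise ValueError("no majority: too many units disagree")
    return value


def run_with_tmr(units, argument):
    """Run the redundant units on the same input and vote on the result."""
    return majority_vote([unit(argument) for unit in units])


def healthy(x):
    return x * x


def faulty(x):
    return x * x + 1                  # hypothetical unit with a fault


result = run_with_tmr([healthy, faulty, healthy], 7)
```

With three units, one faulty unit is outvoted by the other two. If two units fail in different ways there is no majority, and the sketch reports an error instead of guessing.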

Modularity: The client/server architecture is built on the basis of pluggable modules. Both the client and the server are independent system modules from each other and can be replaced without affecting each other. Functions are added to the system either by creating new modules or by improving existing ones.

Portability: Currently processing power can be found in various sizes: super servers, servers, desktop, notebooks, portable machines. Distributed computing solutions allow applications to be located where it is most advantageous or optimal.

Drawbacks

Scalability: The system should be designed in such a way that the capacity can be increased with the increasing demand on the system.

Heterogeneity: The communications infrastructure consists of channels of different capacities.

Resource management: In distributed systems, resources are located in different places. Routing is a problem at the network layer and at the application layer.

Security and privacy: Since distributed systems deal with sensitive data and information, strong security and privacy measures must be in place. Protection of distributed system assets as well as higher level composites of these resources are important issues in the distributed system.

Transparency: Transparency means to what extent the distributed system should appear to the user as a single system. The distributed system should be designed to further hide the complexity of the system.

Openness: Openness means the extent to which a system is designed using standard protocols to support interoperability. To achieve this, the distributed system must have well-defined interfaces.

Synchronization: One of the main problems is the synchronization of calculations consisting of thousands of components. Current synchronization methods such as semaphores, monitors, barriers, remote procedure calls, object method invocations, and message passing do not scale well.

Deadlock and race conditions: Deadlocks and race conditions are other major issues in distributed systems, especially in the context of testing. They become even more important in shared-memory multiprocessor environments.
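One standard way to avoid a deadlock between operations that each need two locks is to always acquire the locks in the same global order. The sketch below applies this to transfers between two accounts; the `Account` class and the ordering by account identifier are assumptions of the example. The same locks also prevent the race condition of two threads updating a balance at once.

```python
import threading


class Account:
    def __init__(self, account_id, balance):
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()


def transfer(source, target, amount):
    """Move money between accounts, locking both in a fixed global order."""
    # Always lock the lower id first, so two opposite transfers can never
    # each hold one lock while waiting for the other.
    first, second = sorted((source, target), key=lambda acc: acc.account_id)
    with first.lock, second.lock:
        source.balance -= amount
        target.balance += amount


a, b = Account(1, 100), Account(2, 100)
threads = [threading.Thread(target=transfer, args=(a, b, 1)) for _ in range(50)]
threads += [threading.Thread(target=transfer, args=(b, a, 1)) for _ in range(50)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

If each transfer locked its source account first, a transfer from a to b and a transfer from b to a could each take one lock and then wait forever for the other.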

Supplementary reading

- Books
