Differential equation

A differential equation is a mathematical equation that relates a function to its derivatives. In applied mathematics, functions usually represent physical quantities, derivatives represent their rates of change, and the equation defines the relationship between them. Because these relationships are so common, differential equations play a central role in many disciplines, including engineering, physics, chemistry, economics, and biology.

In applications of mathematics, problems often arise in which the dependence of one quantity on another is unknown, but it is possible to write an expression for the rate of change of one quantity with respect to the other (the derivative). In this case, the problem reduces to finding a function from a relation between its derivative and other known expressions.

In pure mathematics, differential equations are studied from several perspectives, most of them concerned with the set of functions that satisfy the equation. Only the simplest differential equations can be solved by explicit formulas; however, some properties of the solutions of a given differential equation can be determined without finding their exact form.

If the exact solution cannot be found, it can be obtained numerically, by approximation using computers. Dynamical systems theory emphasizes the qualitative analysis of systems described by differential equations, while many numerical methods have been developed to determine solutions with some degree of accuracy.

History

Differential equations first appeared in the calculus works of Newton and Leibniz. In 1671, in Chapter 2 of his work Method of Fluxions and Infinite Series, Isaac Newton listed three classes of differential equations:

- $\frac{dy}{dx} = f(x)$

- $\frac{dy}{dx} = f(x, y)$

- $x_{1}\frac{\partial y}{\partial x_{1}} + x_{2}\frac{\partial y}{\partial x_{2}} = y$

He solved these equations and others using infinite series and discussed the non-uniqueness of the solutions.

Jakob Bernoulli proposed Bernoulli's differential equation in 1695. This is an ordinary differential equation of the form

- $y' + P(x)y = Q(x)y^{n},$

for which, in the following years, Leibniz obtained solutions by simplifying it.

Historically, the problem of a vibrating string, such as that of a musical instrument, was studied by Jean le Rond d'Alembert, Leonhard Euler, Daniel Bernoulli, and Joseph-Louis Lagrange. In 1746, d'Alembert discovered the one-dimensional wave equation, and within ten years Euler discovered the three-dimensional wave equation.

The Euler-Lagrange equations were developed in the 1750s by Euler and Lagrange in connection with their studies of the tautochrone problem. This is the problem of determining a curve along which a weighted particle will fall to a fixed point in a fixed amount of time, independent of the starting point.

Lagrange solved this problem in 1755 and sent the solution to Euler. Both developed Lagrange's method and applied it to mechanics, which led to Lagrangian mechanics.

In 1822 Fourier published his work on heat transfer in Théorie analytique de la chaleur (Analytic Theory of Heat), in which he based his reasoning on Newton's law of cooling, that is, that the heat transfer between two adjacent molecules is proportional to the extremely small difference in their temperatures. In this book Fourier sets out the heat equation for the conductive diffusion of heat. This partial differential equation is still an object of study in mathematical physics.

Stochastic differential equations, which extend both the theory of differential equations and the theory of probability, were introduced with rigorous treatment by Kiyoshi Itō and Ruslan Stratonovich during the 1940s and 1950s.

Types

Differential equations can be divided into several types. Apart from describing the properties of the equation itself, these classes can help guide the choice of approach to a solution. Commonly used distinctions include whether the equation is ordinary or partial, linear or nonlinear, and homogeneous or inhomogeneous. This list is far from exhaustive; there are many other properties and subclasses of differential equations that can be very useful in specific contexts.

Ordinary Differential Equations

An ordinary differential equation (ODE) is an equation that contains a function of one independent variable and its derivatives. The term ordinary is used in contrast to partial differential equations, which may involve derivatives with respect to more than one independent variable.

Linear differential equations, whose solutions can be added together and multiplied by coefficients, are well defined and well understood, and exact solutions can be found for them. In contrast, ODEs whose solutions cannot be added are nonlinear; solving them is more intricate, and their solutions can only rarely be expressed in closed form in terms of elementary functions. Instead, the solutions are usually obtained as series or in integral form. Numerical and graphical methods for ODEs, carried out by hand or by computer, can approximate the solutions, and the results are often useful enough to dispense with an exact analytical solution.
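As a rough illustration of how a numerical method approximates the solution of an ODE, the following minimal sketch applies the forward Euler method to the test problem $y' = y$, $y(0) = 1$, whose exact solution is $e^{x}$. The function name `euler`, the test problem, and the step count are illustrative assumptions, not something prescribed by the text; forward Euler is only one of many possible methods.

```python
import math

def euler(f, x0, y0, x_end, steps):
    """Approximate y(x_end) for y' = f(x, y), y(x0) = y0, using forward Euler."""
    h = (x_end - x0) / steps          # fixed step size
    x, y = x0, y0
    for _ in range(steps):
        y += h * f(x, y)              # follow the tangent line for one step
        x += h
    return y

# Test problem (an assumption for illustration): y' = y, y(0) = 1, exact y(1) = e.
approx = euler(lambda x, y: y, 0.0, 1.0, 1.0, 1000)
error = abs(approx - math.e)
print(approx, error)
```

Halving the step size roughly halves the error for this method, which is why higher-order schemes are usually preferred in practice.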

Partial Differential Equations

A partial differential equation (PDE) is a differential equation that contains a multivariable function and its partial derivatives. These equations are used to formulate problems involving functions of several variables, and are either solved by hand or used to create a computer simulation.

PDEs can be used to describe a wide variety of phenomena such as sound, heat, electrostatics, electrodynamics, fluid dynamics, elasticity, or quantum mechanics. These different physical phenomena can all be formalized in terms of PDEs. Just as ordinary differential equations are commonly used to build one-dimensional models of dynamical systems, partial differential equations are used to model multidimensional systems. PDEs have a generalization in stochastic partial differential equations.

Linear Differential Equations

A differential equation is linear when its solutions can be obtained from linear combinations of other solutions. In a linear equation, the unknown function and its derivatives appear only to the first power, and there are no products of the unknown function and/or its derivatives. The characteristic property of linear equations is that their solutions form an affine subspace of an appropriate function space, a result developed in the theory of linear differential equations.

Homogeneous linear differential equations are a subclass of linear differential equations for which the solution space is a linear subspace, that is, any sum of solutions or multiple of a solution is also a solution. The coefficients of the unknown function and its derivatives in a linear differential equation can be functions of the independent variable or variables; if these coefficients are constant, we speak of linear differential equations with constant coefficients.

An equation is said to be linear if it has the form:

$$a_{n}(x)y^{(n)} + a_{n-1}(x)y^{(n-1)} + \dots + a_{1}(x)y' + a_{0}(x)y = g(x)$$

That is:

- Neither the function nor its derivatives are raised to any power other than one or zero.

- Each coefficient depends only on the independent variable.

- A linear combination of solutions is also a solution of the equation (for the homogeneous equation, $g(x) = 0$).

Examples:

- $y' - y = 0$ is a first-order linear ordinary differential equation, with solutions $y = f(x) = k \cdot e^{x}$, where $k$ is any real number.

- $y'' + y = 0$ is a second-order linear ordinary differential equation, with solutions $y = f(x) = a\cos(x) + b\sin(x)$, where $a$ and $b$ are real.

- $y'' - y = 0$ is a second-order linear ordinary differential equation, with solutions $a \cdot e^{x} + b \cdot e^{-x}$, where $a$ and $b$ are real.
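As a quick check of the second example, the claimed family of solutions can be substituted directly into the equation. This is only a verification by differentiation, not a derivation of the solutions:

```latex
\begin{aligned}
y   &= a\cos x + b\sin x, \\
y'  &= -a\sin x + b\cos x, \\
y'' &= -a\cos x - b\sin x = -y, \\
y'' + y &= 0 \quad \text{for all real } a, b .
\end{aligned}
```

The same substitution shows the linearity property at work: each of $\cos x$ and $\sin x$ is a solution on its own, and so is every linear combination of them.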

Nonlinear Differential Equations

Very few methods exist for solving nonlinear differential equations exactly; those that are known typically depend on the equation having particular symmetries. Nonlinear differential equations can exhibit very complicated behavior over long time intervals, characteristic of chaos. Even the fundamental questions of existence, uniqueness, and extendability of solutions for nonlinear differential equations, and the well-posedness of initial and boundary value problems for nonlinear PDEs, are hard problems, and their resolution in special cases is considered a significant advance in the mathematical theory (e.g. Navier-Stokes existence and smoothness). However, if the differential equation is a correctly formulated representation of a meaningful physical process, then a solution is expected to exist.

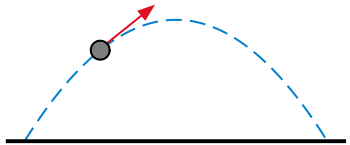

Linear differential equations frequently appear as approximations to nonlinear equations. These approximations are valid only under restricted conditions. For example, the harmonic oscillator equation is an approximation of the nonlinear pendulum equation that is valid for small oscillation amplitudes (see below).
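The small-amplitude claim can be explored numerically. The sketch below integrates the nonlinear pendulum equation $L\,u'' + g \sin u = 0$ with a classical fourth-order Runge-Kutta scheme and compares it with the exact harmonic-oscillator solution $u_0 \cos(\sqrt{g/L}\,t)$, starting from rest. The values of `G` and `L`, the amplitudes, the time span, and all function names are illustrative assumptions.

```python
import math

G, L = 9.81, 1.0  # assumed gravitational acceleration (m/s^2) and pendulum length (m)

def pendulum_rhs(state):
    """First-order form of L*u'' + g*sin(u) = 0: state = (angle, angular velocity)."""
    u, v = state
    return (v, -(G / L) * math.sin(u))

def rk4_step(state, h):
    """One classical Runge-Kutta (RK4) step of size h."""
    def shifted(s, k, c):
        return (s[0] + c * k[0], s[1] + c * k[1])
    k1 = pendulum_rhs(state)
    k2 = pendulum_rhs(shifted(state, k1, h / 2))
    k3 = pendulum_rhs(shifted(state, k2, h / 2))
    k4 = pendulum_rhs(shifted(state, k3, h))
    return tuple(
        s + h / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )

def max_relative_gap(u0, t_end=5.0, steps=5000):
    """Largest |pendulum - harmonic oscillator| / u0 over [0, t_end], released from rest."""
    h = t_end / steps
    omega = math.sqrt(G / L)
    state = (u0, 0.0)
    worst = 0.0
    for i in range(1, steps + 1):
        state = rk4_step(state, h)
        linear = u0 * math.cos(omega * i * h)   # exact solution of the linearized equation
        worst = max(worst, abs(state[0] - linear) / u0)
    return worst

small = max_relative_gap(0.05)  # about 3 degrees
large = max_relative_gap(2.0)   # about 115 degrees
print(small, large)
```

For the small release angle the two models stay close over the whole interval, while for the large angle the pendulum's longer period makes the linear approximation drift far out of phase.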

Semilinear and Quasilinear Equations

There is no general procedure for solving nonlinear differential equations. However, some particular cases of nonlinearity can be solved. Of interest are the semilinear case and the quasilinear case.

An ordinary differential equation of order n is called quasilinear if it is "linear" in the derivative of order n. More specifically, suppose the ordinary differential equation for the function $y(x)$ can be written in the form:

$$f(y^{(n)}, y^{(n-1)}, \dots, y'', y', y, x) = 0, \qquad f_{1}(z) := f(z, \alpha_{n-1}, \dots, \alpha_{2}, \alpha_{1}, \alpha_{0}, \beta_{0})$$

This equation is said to be quasilinear if $f_{1}(\cdot)$ is an affine function, that is, $f_{1}(z) = az + b$.

An ordinary differential equation of order n is called semilinear if it can be written as the sum of a "linear" function of the derivative of order n plus an arbitrary function of the remaining derivatives. Formally, suppose the ordinary differential equation for the function $y(x)$ can be written in the form:

$$f(y^{(n)}, y^{(n-1)}, \dots, y'', y', y, x) = \hat{f}(y^{(n)}, x) + g(y^{(n-1)}, \dots, y'', y', y, x), \qquad f_{2}(z) := \hat{f}(z, \beta_{0})$$

This equation is said to be semilinear if $f_{2}(\cdot)$ is a linear function.

Equation Order

Differential equations are described by their order, determined by the term with the highest-order derivative. An equation containing only first derivatives is a first-order differential equation, an equation containing derivatives up to the second is a second-order differential equation, and so on.

Examples of order in equations:

- First-order differential equation: $y' + y(x) = f(x)$

- Second-order differential equation: $y'' + 4y = 0$

- Third-order differential equation: $xy''' - 2xy'' + 4y' = 0$

- Second-order equation with variable coefficients: $y'' + 2xy' + 4y = 0$

Equation Degree

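The degree of a differential equation is the power to which the highest-order derivative appearing in the equation is raised, provided the equation is in polynomial form in the unknown function and its derivatives; otherwise the equation is considered to have no degree.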
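Two small examples, chosen here purely for illustration, may help separate order from degree:

```latex
\begin{aligned}
(y'')^{3} + (y')^{5} + y &= x
  &&\text{order } 2,\ \text{degree } 3 \ \text{(power of } y'' \text{, the highest-order derivative)}, \\
\sin(y') + y &= 0
  &&\text{order } 1,\ \text{no degree (not polynomial in } y' \text{)}.
\end{aligned}
```

Note that in the first equation the larger exponent 5 is irrelevant, since it belongs to a lower-order derivative.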

Exact Differential Equations

Throughout this section, differential equations are written either as $P(x,y)\,dx + Q(x,y)\,dy = 0$ or as $y' = -\frac{P(x,y)}{Q(x,y)}$, without distinguishing between the two forms.

It is assumed that P and Q are functions defined on an open rectangle $R$ in $\mathbb{R}^{2}$ that do not vanish simultaneously.

The importance of exactness is that $F(x,y) = c$ is then a family of integral curves. Differentiating implicitly with respect to x gives $F_{x} + F_{y}y' = 0$, so $F(x,y) = c$ satisfies $y' = -\frac{P(x,y)}{Q(x,y)}$.

A criterion for deciding when an equation is exact assumes that P and Q are continuous and have continuous partial derivatives in R. If the equation $P(x,y)\,dx + Q(x,y)\,dy = 0$ is exact, then $P_{y} = Q_{x}$ in R, because $P_{y} = F_{xy} = F_{yx} = Q_{x}$.

Conversely, assume that $P_{y} = Q_{x}$ in R and look for F such that $F_{x} = P$ and $F_{y} = Q$. Integrating the equation $F_{x} = P$ with respect to x gives $F(x,y) = \int P(x,y)\,dx + C(y)$, where C(y) is the constant of integration, which may depend on y.

Imposing the condition $F_{y} = Q$ gives $C'(y) = Q(x,y) - \frac{\partial}{\partial y}\int P(x,y)\,dx$, where, by $P_{y} = Q_{x}$, the right-hand side is a function of y alone, independent of x.

Finally:

$$F(x,y) = \int P(x,y)\,dx + \int \left(Q(x,y) - \frac{\partial}{\partial y}\int P(x,y)\,dx\right)dy$$

This gives a method for computing the integral curves $F(x,y) = c$ of an exact differential equation, and proves the criterion stated above.
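The procedure can be followed step by step on a concrete equation. The equation below is a made-up example chosen so that the integrals are elementary; it is not taken from the text.

```latex
\begin{aligned}
&(2xy + 1)\,dx + (x^{2} + 3y^{2})\,dy = 0,
  \qquad P = 2xy + 1,\quad Q = x^{2} + 3y^{2}, \\
&P_{y} = 2x = Q_{x} \quad\Rightarrow\quad \text{the equation is exact}, \\
&\int P\,dx = x^{2}y + x, \qquad
  C'(y) = Q - \frac{\partial}{\partial y}\left(x^{2}y + x\right) = 3y^{2}
  \quad\Rightarrow\quad C(y) = y^{3}, \\
&F(x,y) = x^{2}y + x + y^{3} = c .
\end{aligned}
```

As expected, $C'(y)$ came out independent of $x$, and differentiating the result recovers $F_{x} = 2xy + 1 = P$ and $F_{y} = x^{2} + 3y^{2} = Q$.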

Examples

In the first set of examples, let u be an unknown function that depends on x, and c and ω are known constants. Note that both ordinary and partial differential equations can be classified as linear and nonlinear.

- First-order linear ordinary differential equation with constant coefficients:

  $$\frac{du}{dx} = cu + x^{2}.$$

- Second-order linear ordinary differential equation:

  $$\frac{d^{2}u}{dx^{2}} - x\frac{du}{dx} + u = 0.$$

  A solution is given by $u = Ax$, with $A$ any constant.

- Homogeneous second-order linear ordinary differential equation with constant coefficients, describing a harmonic oscillator:

  $$\frac{d^{2}u}{dx^{2}} + \omega^{2}u = 0.$$

- First-order nonlinear inhomogeneous ordinary differential equation:

  $$\frac{du}{dx} = u^{2} + 4.$$

- Second-order ordinary differential equation, nonlinear because of the sine function, which describes the motion of a pendulum of length L:

  $$L\frac{d^{2}u}{dx^{2}} + g\sin u = 0.$$

In the following set of examples, the unknown function u depends on two variables, x and t or x and y.

- Homogeneous first-order linear partial differential equation:

  $$\frac{\partial u}{\partial t} + t\frac{\partial u}{\partial x} = 0.$$

- Homogeneous second-order linear partial differential equation with constant coefficients, of elliptic type, the Laplace equation:

  $$\frac{\partial^{2}u}{\partial x^{2}} + \frac{\partial^{2}u}{\partial y^{2}} = 0.$$

- Third-order nonlinear partial differential equation, the Korteweg-de Vries equation:

  $$\frac{\partial u}{\partial t} = 6u\frac{\partial u}{\partial x} - \frac{\partial^{3}u}{\partial x^{3}}.$$

Exact Differential Equation Example

Let $P(x,y)\,dx + Q(x,y)\,dy = 0$, $\forall (x,y) \in B$, be a first-order differential equation, where P and Q are two continuous functions on the open set $B \subset \mathbb{R}^{2}$.

The equation $P(x,y)\,dx + Q(x,y)\,dy = 0$ is said to be an exact differential equation if there is a potential function $F(x,y)$ defined on B such that:

$$\frac{\partial F}{\partial x}(x,y) = P(x,y), \qquad \frac{\partial F}{\partial y}(x,y) = Q(x,y), \qquad \forall (x,y) \in B$$

Moreover, let $P(x,y)\,dx + Q(x,y)\,dy = 0$ be an exact differential equation on the open set $B \subset \mathbb{R}^{2}$, and let $F(x,y)$ be a potential function for it. Then every solution $y = \phi(x)$ of the equation whose graph lies in B satisfies the equation $F(x, \phi(x)) = c$ for some constant $c$.

Solution of a differential equation

Existence of solutions

Solving differential equations is not like solving algebraic equations. Not only are their solutions often unclear, but whether solutions exist at all and whether they are unique are also questions of interest.

For first-order initial value problems, Peano's existence theorem gives one set of conditions under which a solution exists. Given any point $(a,b)$ in the xy-plane, define a rectangular region $Z$ such that $Z = [l,m] \times [n,p]$ and $(a,b)$ is in the interior of $Z$. If we are given a differential equation $\frac{\mathrm{d}y}{\mathrm{d}x} = g(x,y)$ and the condition that $y = b$ when $x = a$, then there is a local solution to this problem if $g(x,y)$ and $\frac{\partial g}{\partial x}$ are both continuous on $Z$. The solution exists on some interval centered at $a$. The solution may not be unique. (See Ordinary differential equation for other results.)

However, this only helps us with first-order initial value problems. Suppose we have a linear initial value problem of nth order:

- $f_{n}(x)\frac{\mathrm{d}^{n}y}{\mathrm{d}x^{n}} + \cdots + f_{1}(x)\frac{\mathrm{d}y}{\mathrm{d}x} + f_{0}(x)y = g(x)$

such that

- $y(x_{0}) = y_{0},\ y'(x_{0}) = y'_{0},\ y''(x_{0}) = y''_{0},\ \cdots$

For any nonzero $f_{n}(x)$, if $\{f_{0}, f_{1}, \cdots\}$ and $g$ are continuous on some interval containing $x_{0}$, then $y$ exists and is unique.
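Returning to the first-order case, the remark that a solution may exist without being unique can be illustrated with a standard textbook example (chosen here for illustration; it does not appear in the text above):

```latex
\begin{aligned}
&\frac{\mathrm{d}y}{\mathrm{d}x} = 3\,y^{2/3}, \qquad y(0) = 0, \\
&y_{1}(x) = 0: \qquad y_{1}' = 0 = 3\,y_{1}^{2/3}, \\
&y_{2}(x) = x^{3}: \qquad y_{2}' = 3x^{2} = 3\,(x^{3})^{2/3} = 3\,y_{2}^{2/3}.
\end{aligned}
```

Both functions pass through $(0,0)$ and satisfy the equation, so the initial value problem has at least two solutions. Here $g(x,y) = 3y^{2/3}$ is continuous and $\frac{\partial g}{\partial x} = 0$, so the existence conditions quoted above hold, but $\frac{\partial g}{\partial y}$ is unbounded near $y = 0$, which is what allows uniqueness to fail.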

Types of solutions

A solution of a differential equation is a function that, when substituted for the unknown function together with the corresponding derivatives, satisfies the equation, that is, turns it into an identity. There are three types of solutions:

General solution

The general solution is a generic solution expressed with one or more arbitrary constants. It represents a family of curves. Its order of infinity matches its number of constants (one constant corresponds to a singly infinite family, two constants to a doubly infinite family, and so on). If the equation is linear, the general solution is obtained as a linear combination of solutions of the homogeneous equation (as many as the order of the equation) plus a particular solution of the complete equation. The homogeneous equation is the one that results from setting the term that does not depend on $y(x)$ or its derivatives equal to 0.

Particular solution

If a point $P(X_{0}, Y_{0})$ is fixed through which the solution of the differential equation must pass, there is a single value of C, and therefore a single integral curve, that satisfies the equation. This curve is called the particular solution of the equation at the point $P(X_{0}, Y_{0})$, and the point is called the initial condition.

It is a particular case of the general solution, in which the constant (or constants) takes a specific value.

Singular solution

The singular solution is a function that satisfies the equation but cannot be obtained by assigning particular values to the constants of the general solution. In other words, this solution does not belong to the general solution but still satisfies the differential equation.

Notes on Solutions

Given the ordinary differential equation of order n $f(y^{(n)}, y^{(n-1)}, \dots, y'', y', y, x) = 0$, it is easy to verify whether a function y = f(x) is a solution. It is enough to calculate the derivatives of f(x), substitute them together with f(x) into the equation, and check that an identity in x is obtained.

The solutions of ODEs are often presented as implicitly defined functions, which are sometimes impossible to express explicitly. For example,

$xy = \ln y + c$ is a solution of $\frac{\mathrm{d}y}{\mathrm{d}x} = \frac{y^{2}}{1 - xy}$.

The simplest of all differential equations is $\mathrm{d}y/\mathrm{d}x = f(x)$, whose solution is $y = \int f(x)\,\mathrm{d}x + c$. In some cases it can be solved by elementary methods of calculus. In other cases, however, the analytical solution requires complex-variable or more sophisticated techniques, as with the integrals

$y = \int \exp(x^{2})\,\mathrm{d}x$ and $y = \int \frac{\sin x}{x}\,\mathrm{d}x$,

which cannot be expressed by a finite number of elementary functions.
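Even when no elementary antiderivative exists, a definite integral of this kind can still be evaluated numerically. The sketch below uses composite Simpson's rule to approximate $\int_{0}^{\pi} \frac{\sin t}{t}\,\mathrm{d}t$; the helper names `sinc` and `simpson`, the interval, and the number of subintervals are assumptions made for illustration.

```python
import math

def sinc(t):
    """sin(t)/t, extended by its limit value 1 at t = 0."""
    return 1.0 if t == 0.0 else math.sin(t) / t

def simpson(f, a, b, n=1000):
    """Composite Simpson's rule on [a, b] with n subintervals (n is made even)."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)  # weights 4, 2, 4, ... on interior nodes
    return total * h / 3

si_pi = simpson(sinc, 0.0, math.pi)
print(si_pi)
```

The result approximates the sine integral at $\pi$, a value that is tabulated but cannot be written with finitely many elementary functions.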

Applications

The study of differential equations is a vast field in pure and applied mathematics, physics, and engineering. All of these disciplines are interested in the properties of differential equations of various kinds. Pure mathematics focuses on the existence and uniqueness of solutions, while applied mathematics emphasizes the rigorous justification of methods for approximating solutions. Differential equations play a very important role in modeling virtually every physical, technical, or biological process, for example celestial motion, the design of a bridge, or the interaction between neurons. The differential equations posed to solve real-life problems are not necessarily directly solvable, that is, their solutions may not have a closed-form expression. When this happens, the solutions can be approximated using numerical methods.

Many laws of physics and chemistry are formalized with differential equations. In biology and economics, differential equations are used to model the behavior of complex systems. The mathematical theory of differential equations was initially developed together with the sciences where the equations originated and where the results found application. However, problems arising in quite different scientific fields sometimes lead to identical differential equations. When this happens, the mathematical theory behind the equations can be seen as a unifying principle behind the phenomena. For example, consider the propagation of light and sound in the atmosphere, and of waves on the surface of a pond. All of these phenomena can be described by the same second-order partial differential equation, the wave equation, which allows us to think of light and sound as waves, much like waves in water. Heat conduction, the theory of which was developed by Joseph Fourier, is governed by another second-order partial differential equation, the heat equation. It turns out that many diffusion processes, although they appear to be different, are described by the same equation. The Black-Scholes equation in finance, for example, is related to the heat equation.

Physics

- Euler-Lagrange equations in classical mechanics

- Hamilton's equations in classical mechanics

- Radioactive decay in nuclear physics

- Newton's law of cooling in thermodynamics

- Wave equation

- Heat equation in thermodynamics

- Laplace equation, which defines harmonic functions

- Poisson equation

- Geodesic equation

- Navier-Stokes equations in fluid dynamics

- Diffusion equation in stochastic processes

- Convection-diffusion equation in fluid dynamics

- Cauchy-Riemann equations in complex analysis

- Poisson-Boltzmann equation in molecular dynamics

- Saint-Venant equations

- Universal differential equation

- Lorenz equations, whose solutions exhibit chaotic flow

Classical mechanics

As long as the force acting on a particle is known, Newton's Second Law is sufficient to describe the motion of a particle. Once the independent relations for each force acting on a particle are available, they can be substituted into Newton's second law to obtain an ordinary differential equation, which is called the equation of motion.

Electrodynamics

Maxwell's equations are a set of partial differential equations that, together with the Lorentz force law, form the foundations of classical electrodynamics, classical optics, and the theory of electrical circuits. These fields in turn underlie modern electrical, electronic, and communication technologies. Maxwell's equations describe how electric and magnetic fields are generated and altered by each other and by electric charges and currents. These equations owe their name to the Scottish mathematical physicist James Clerk Maxwell, who published his work on these equations between 1861 and 1862.

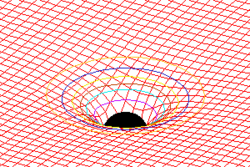

General relativity

Einstein's field equations (also known as "Einstein's equations") are a set of ten partial differential equations of general relativity describing the fundamental interaction of gravitation as a result of spacetime being curved by matter and energy. First published by Einstein in 1915 as a tensor equation, the equations equate a local spacetime curvature (expressed by the Einstein tensor) with the local energy and momentum within spacetime (expressed by the energy-momentum tensor).

Quantum Mechanics

In quantum mechanics, the analogue to Newton's law is the Schrödinger Equation (a partial differential equation) for a quantized system (usually atoms, molecules, and subatomic particles that may be free, bound, or localized). It is not a simple algebraic equation, but it is, in general, a linear partial differential equation, describing the evolution in time of a wave function (also called a "state function").

Biology

- Verhulst equation – biological population growth.

- von Bertalanffy model – individual biological growth.

- Replicator dynamics – in theoretical biology.

- Hodgkin and Huxley model – neuronal action potentials.

Predator-Prey Equations

The Lotka–Volterra equations, also known as the predator-prey equations, are a pair of first-order nonlinear differential equations frequently used to describe the dynamics of biological systems in which two species interact, one as the predator and the other as the prey.
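The text does not write the equations out; in their usual textbook form they read $\frac{dx}{dt} = \alpha x - \beta x y$ and $\frac{dy}{dt} = \delta x y - \gamma y$, with $x$ the prey and $y$ the predator population. The following sketch integrates this system with a classical Runge-Kutta scheme. The parameter values, initial populations, and function names are illustrative assumptions only, and the symbols here are unrelated to the $\gamma$ used in the population growth model later in this section.

```python
import math

# Illustrative parameter values (assumptions, not taken from the text).
ALPHA, BETA, DELTA, GAMMA = 1.0, 0.1, 0.075, 1.5

def rhs(x, y):
    """Right-hand side of the usual Lotka-Volterra system (x = prey, y = predator)."""
    return ALPHA * x - BETA * x * y, DELTA * x * y - GAMMA * y

def rk4_step(x, y, h):
    """One classical Runge-Kutta (RK4) step of size h."""
    k1x, k1y = rhs(x, y)
    k2x, k2y = rhs(x + h / 2 * k1x, y + h / 2 * k1y)
    k3x, k3y = rhs(x + h / 2 * k2x, y + h / 2 * k2y)
    k4x, k4y = rhs(x + h * k3x, y + h * k3y)
    return (x + h / 6 * (k1x + 2 * k2x + 2 * k3x + k4x),
            y + h / 6 * (k1y + 2 * k2y + 2 * k3y + k4y))

def invariant(x, y):
    """Quantity that stays constant along exact solutions of the system."""
    return DELTA * x - GAMMA * math.log(x) + BETA * y - ALPHA * math.log(y)

def simulate(x0, y0, t_end=10.0, steps=10000):
    """Return the list of (prey, predator) states from t = 0 to t_end."""
    h = t_end / steps
    x, y = x0, y0
    path = [(x, y)]
    for _ in range(steps):
        x, y = rk4_step(x, y, h)
        path.append((x, y))
    return path

path = simulate(10.0, 5.0)
drift = abs(invariant(*path[-1]) - invariant(*path[0]))
print(path[-1], drift)
```

Because the exact solutions conserve the quantity computed by `invariant`, its drift over the run gives a simple check on the accuracy of the integration; the populations themselves cycle around the equilibrium rather than settling down.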

Population growth

Around the 18th century there was interest in knowing how the population varied in order to predict possible changes. To this end, the economist T. Malthus proposed the following:

It is assumed that $\text{births} = \alpha\,\Delta t\,N(t)$ and $\text{deaths} = \beta\,\Delta t\,N(t)$, with $N(t)$ the size of the population at time t.

The variation of the population over a time interval $\Delta t$ is $\Delta N(t) = \gamma N(t)\Delta t$, with $\gamma = \alpha - \beta$.

Taking the limit as $\Delta t \rightarrow 0$ gives the mathematical model: $\frac{dN}{dt} = \gamma N$

Solving this separable equation, with the condition that at $t = 0$ the size of the population is $N_{0}$, gives the population at time t as $N(t) = N_{0}e^{\gamma t}$. Accordingly, if $\gamma < 0$ the population dies out, if $\gamma = 0$ the population remains constant, and if $\gamma > 0$ the population grows exponentially.

The values $N_{0}$ and $\gamma$ are calculated for each population from two measurements taken at two separate moments in time. It is important to note that a small error in the data leads to different values of the constants $\gamma$ or $N_{0}$, which can significantly change the results of the model for large times.

Taking a real example, for the population of the USA we obtain $N(t) = 3.9 \times 10^{6} e^{0.307t}$ (the fitted values correspond to $t$ measured in decades from 1790). The recorded population was:

| Year | Population USA |
|---|---|
| 1790 | $3.9 \times 10^{6}$ |
| 1800 | $5.3 \times 10^{6}$ |
| 1810 | $7.2 \times 10^{6}$ |
| 1820 | $9.6 \times 10^{6}$ |
| 1830 | $12.9 \times 10^{6}$ |
| 1840 | $17.1 \times 10^{6}$ |
| 1850 | $23.2 \times 10^{6}$ |
| 1860 | $31.4 \times 10^{6}$ |
| 1870 | $38.6 \times 10^{6}$ |
| 1880 | $50.2 \times 10^{6}$ |
| 1890 | $62.9 \times 10^{6}$ |
| 1900 | $76 \times 10^{6}$ |
| 1910 | $92 \times 10^{6}$ |
| 1920 | $106.5 \times 10^{6}$ |
| 1930 | $123.2 \times 10^{6}$ |
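The way $\gamma$ is obtained from two measurements, and the sensitivity of the model at large times, can be sketched as follows. The code assumes that $t$ is measured in decades since 1790 (an interpretation suggested by the numbers, not stated in the text) and uses only the 1790, 1800, and 1930 figures from the table; the function names are illustrative.

```python
import math

def malthus(n0, gamma, t):
    """Malthus model N(t) = N0 * exp(gamma * t)."""
    return n0 * math.exp(gamma * t)

def fit_gamma(n_a, n_b, dt):
    """Growth rate from two measurements taken dt time units apart."""
    return math.log(n_b / n_a) / dt

# Population figures from the table above.
census = {1790: 3.9e6, 1800: 5.3e6, 1930: 123.2e6}

# Assumption: one time unit is one decade, with t = 0 in 1790.
gamma = fit_gamma(census[1790], census[1800], 1.0)

# Extrapolate the quoted model 14 decades ahead, to 1930.
predicted_1930 = malthus(3.9e6, 0.307, 14)
print(gamma, predicted_1930, census[1930])
```

The rate fitted from the first two rows agrees with the quoted 0.307 to about three decimal places, yet the extrapolation to 1930 exceeds the recorded figure by more than a factor of two, which illustrates why the pure exponential model breaks down for large times.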

Verhulst proposes modifications to the Malthus model:

Therefore, the mathematical model becomes $\frac{dN}{dt} = \gamma N\left(1 - \frac{N}{N_{\infty}}\right)$ and, after integrating this model, the size of the population is:

$$N(t) = \frac{N_{0}N_{\infty}}{N_{0} + (N_{\infty} - N_{0})e^{-\gamma t}}$$

For the US population, the Verhulst values are calculated approximately:

$N_{0} = 3.9 \times 10^{6}$, $N_{\infty} = 197 \times 10^{6}$ and $\gamma = 0.3134$.

Comparing the values obtained with the Verhulst model with the real population shows that the real population slightly exceeds the amount predicted by the model. Some modification of the model may therefore be needed in order to predict the size of the population appropriately for future times.
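The comparison can be reproduced with a few lines of code. The sketch below evaluates the Verhulst (logistic) solution with an initial population of $3.9 \times 10^{6}$, a limiting population of $197 \times 10^{6}$, and growth rate 0.3134, again assuming that time is counted in decades since 1790 (an assumption, as are the function and constant names).

```python
import math

N0 = 3.9e6        # initial population, taken as the 1790 figure
N_INF = 197e6     # limiting population in the Verhulst model
GAMMA = 0.3134    # growth rate, assumed to be per decade

def verhulst(t):
    """Logistic solution N(t) = N0*N_inf / (N0 + (N_inf - N0)*exp(-gamma*t))."""
    return N0 * N_INF / (N0 + (N_INF - N0) * math.exp(-GAMMA * t))

# Assumption: t counts decades since 1790, so 1930 corresponds to t = 14.
model_1930 = verhulst(14)
recorded_1930 = 123.2e6
print(model_1930, recorded_1930)
```

Under these assumptions the model value for 1930 falls slightly below the recorded population, in line with the remark above, and for large $t$ the model levels off at the limiting population instead of growing without bound.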

Software

- ExpressionsinBar: desolve(y'=k*y,y)

- Maple: dsolve

- SageMath: desolve()

- Xcas: desolve(y'=k*y,y)
