Kernel (computing)

In computing, a kernel (from the Germanic root Kern, meaning nucleus or core) is the software that forms the fundamental part of an operating system, and is defined as the part that runs in privileged mode (also known as kernel mode). It is primarily responsible for giving programs secure access to the computer's hardware; put simply, it manages resources through system call services. Since there are many programs and access to the hardware is limited, the kernel also decides which program may use a hardware device and for how long, which is known as multiprogramming. Accessing hardware directly can be very complex, so kernels often implement a number of hardware abstractions. These hide the complexity and provide a clean, uniform interface to the underlying hardware, which makes it easier for programmers to use.

Some operating systems have no kernel as such (this is common in embedded systems), because certain architectures do not have distinct execution modes.

Technique

When voltage is applied to the processor of an electronic device, it executes a small piece of assembly-language code located at a specific address in ROM (the reset address). This reset code in turn runs a routine that initializes the hardware that accompanies the processor. The interrupt handler is usually initialized in this phase as well. Once this phase is finished, the startup code runs; it is also written in assembly language, and its most important task is to call the main program (main()) of the application software.

General information

In computing, the kernel is the core of the operating system, and it ensures:

- Communication between the programs requesting resources and the hardware.

- Management of the machine's various software tasks.

- Management of the hardware (memory, processor, peripherals, storage, etc.).

Most operating systems are built around the concept of the kernel. The existence of a kernel, that is, of a single program responsible for communication between the hardware and other programs, results from complex trade-offs between performance, security and processor architecture. The kernel has broad powers over the use of hardware resources, memory in particular.

Functions generally performed by a kernel

The basic functions of a kernel are to load and execute processes, to handle input/output, and to provide an interface between kernel space and user-space programs.

Apart from these basic functions, the functions described in the following sections (including hardware drivers, networking and file system functions or services) are not necessarily provided by an operating system kernel. They can be implemented either in user space or in the kernel itself. Placing them in the kernel is done solely to improve performance. Depending on the kernel's design, the same function has a clearly different time cost when called from user space than when called from kernel space. If a function is called frequently, it may be worth integrating it into the kernel to improve performance.

Unix

A Unix kernel is a program written almost entirely in the C language, with the exception of part of the interrupt handling, which is written in the assembly language of the processor on which it runs. The kernel's function is to provide an environment that can serve several users and multiple tasks concurrently, sharing the processor among all of them while trying to keep the attention given to each at an optimum level.

The kernel operates as a resource allocator for any process that needs to use the computing facilities. Its main functions are:

- Process creation, allocation of attention times and synchronization.

- Allocation of the processor's attention to the processes that require it.

- Space management in the file system, which includes access, protection and user administration; communication between users and between processes; and I/O handling and peripheral management.

- Monitoring data transmission between main memory and peripheral devices.

It always resides in main memory and has control over the computer, so no other process can interrupt it; processes can only call it to request one of the services listed above. A process calls the kernel through special routines known as system calls.
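As a rough illustration of a process asking the kernel for services, the sketch below uses Python's standard os module, whose pipe, write, read and close functions are thin wrappers over the Unix system calls of the same names. The helper name echo_through_pipe is hypothetical and chosen only for this example, and the message is assumed to be small enough to fit in the kernel's pipe buffer.

```python
import os


def echo_through_pipe(message: bytes) -> bytes:
    """Send bytes through a kernel-managed pipe and read them back.

    Assumption: `message` is small (well under the pipe buffer size),
    so the single write below neither blocks nor writes partially.
    """
    # pipe(): the kernel creates a buffer and returns two file descriptors.
    read_fd, write_fd = os.pipe()
    try:
        # write(): ask the kernel to copy our bytes into its pipe buffer.
        os.write(write_fd, message)
        # close(): closing the write end lets the reader see end-of-file.
        os.close(write_fd)
        write_fd = -1

        chunks = []
        while True:
            # read(): ask the kernel to copy bytes back into our process.
            chunk = os.read(read_fd, 4096)
            if not chunk:  # empty result means end-of-file
                break
            chunks.append(chunk)
        return b"".join(chunks)
    finally:
        if write_fd != -1:
            os.close(write_fd)
        os.close(read_fd)


if __name__ == "__main__":
    print(echo_through_pipe(b"hello kernel"))
```

The process never touches the pipe buffer directly; every step goes through a call into the kernel, which is the only way to obtain the service.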

It consists of two main parts: the process control section and the device control section. The first allocates resources, schedules processes and attends to their service requests; the second supervises the transfer of data between main memory and the computer's devices. In general terms, every time a user presses a key on a terminal, or information must be read from or written to the magnetic disk, the central processor is interrupted and the kernel carries out the transfer.

When the computer starts operating, a copy of the kernel, which resides on the magnetic disk, must be loaded into memory (an operation called bootstrapping). To do this, some basic hardware interfaces must be initialized, among them the clock that provides periodic interrupts. The kernel also prepares some data structures, including a temporary storage section for transferring information between terminals and processes, a section for storing file descriptors, and a variable indicating the amount of main memory.

The kernel then initializes a special process, called process 0. On Unix, processes are created by calling a system routine (fork), which works by duplicating an existing process. However, this is not enough to create the first process, so the kernel allocates a data structure and sets pointers to a special section of memory, called the process table, which will contain the descriptors of every process existing in the system.
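The duplication mechanism behind fork can be observed from user space. The following is a minimal sketch for Unix-like systems only, using os.fork and os.waitpid from Python's standard library; the function name fork_demo and the counter variable are illustrative and not taken from any Unix source.

```python
import os


def fork_demo():
    """Fork once and show that the child works on its own copy of the data."""
    counter = 10
    pid = os.fork()  # the kernel duplicates the calling process
    if pid == 0:
        # Child: fork returned 0. This changes only the child's copy.
        counter += 1
        # Report the child's value through its exit status (0-255 only).
        os._exit(counter)
    # Parent: fork returned the child's process ID. Wait for it to finish.
    _, status = os.waitpid(pid, 0)
    child_value = os.WEXITSTATUS(status)
    return counter, child_value


if __name__ == "__main__":
    parent_value, child_value = fork_demo()
    print("parent sees", parent_value, "child saw", child_value)
```

The parent's counter is unchanged after the child increments its own, which is what duplication (rather than sharing) implies.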

After process zero has been created, a copy of it is made, creating process one; this process soon takes charge of bringing the whole system to life by activating other processes that are also part of the kernel. In other words, a chain of process activations begins. Notable among them is the dispatcher or scheduler, which decides which process will run and which processes will enter or leave main memory. From then on, process one is known as the system initialization process, init.
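To make the scheduler's role concrete, here is a small simulation of one possible policy, round-robin time slicing. This is an assumption made for illustration: the text does not say which policy a given Unix scheduler uses, and real schedulers also weigh priorities and memory residency. The function name round_robin and its parameters are hypothetical.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Return the order in which processes receive the CPU.

    burst_times: dict mapping a process name to the CPU time it still needs.
    quantum: the fixed time slice granted on each turn (must be positive).
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    # The ready queue holds (name, remaining time) pairs in arrival order.
    ready = deque((name, t) for name, t in burst_times.items() if t > 0)
    order = []
    while ready:
        name, remaining = ready.popleft()
        order.append(name)          # this process runs for one time slice
        remaining -= quantum
        if remaining > 0:
            # Not finished: go to the back of the queue and wait again.
            ready.append((name, remaining))
    return order


if __name__ == "__main__":
    print(round_robin({"A": 3, "B": 1, "C": 2}, quantum=2))
```

With the sample input, A needs two turns while B and C finish in one, so A is scheduled again after the others have had their slice.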

The init process is responsible for establishing the process structure in Unix. Typically, it is capable of creating at least two different process structures: single-user mode and multi-user mode. It begins by activating the Unix shell control language interpreter on the main terminal, or system console, giving it superuser privileges. In single user mode, the console allows a first session to be started, with special privileges, and prevents the other lines of communication from accepting the start of new sessions. This mode is often used to check and repair file systems, test basic system functions, and for other activities that require exclusive use of the computer.

Init creates another process, which waits for someone to log in on some communication line. When this happens, it makes adjustments to the line protocol and runs the login program, which is responsible for initially serving new users. If the user name and password provided are correct, then the Shell program starts operating, which from now on will be in charge of the normal attention of the user who registered in that terminal.

From that moment on, the shell interpreter is responsible for serving the user at that terminal. To end the session, the user disconnects from the shell (and therefore from Unix) with a special key sequence (usually Ctrl-D). The terminal is then available to serve a new user.

Types of systems

A kernel is not strictly necessary to use a computer. Programs can be loaded and run directly on a bare machine, provided their authors are willing to develop them without any hardware abstraction or any help from an operating system. This was the normal way to use many early computers: to use different programs, the computer had to be restarted and reconfigured each time. Over time, small helper programs, such as the loader and the debugger, began to be left in memory (even between executions) or loaded from read-only memory. As they were developed, they became the foundation of what would become the first operating system kernels.

There are four main types of kernels:

- Monolithic kernels provide powerful and varied built-in hardware abstractions.

- Microkernels provide a small set of simple hardware abstractions, and use applications called servers to provide greater functionality.

- Hybrid kernels (modified microkernels) are very similar to pure microkernels, except that they include additional code in kernel space so that it runs faster.

- Exokernels provide no abstractions, but allow the use of libraries that provide greater functionality through direct or almost direct access to the hardware.

Microkernels

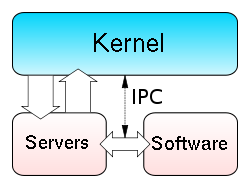

The microkernel approach consists of defining a very simple abstraction on the hardware, with a set of primitives or system calls that implement minimal operating system services, such as thread management, address space, and interprocess communication.

The main objective is to separate the implementation of basic services from the system's operating policy. For example, the I/O blocking process can be implemented with a user-space server running on top of the microkernel. These user servers, used to manage the high-level parts of the system, are highly modular and simplify the structure and design of the kernel. If one of these servers fails, the whole system does not hang, and the module can be restarted independently of the rest. However, having separate, independent modules introduces communication delays, because data must be copied whenever modules communicate.
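The trade-off described above can be sketched as a toy model, not a real microkernel. In this sketch the kernel only routes messages to user-space servers, copies each message to model the communication overhead, and restarts a server that fails without touching the others. All names (Microkernel, register, send, make_file_server) are hypothetical and chosen for this example.

```python
import copy


class Microkernel:
    """Toy kernel: it only delivers messages to registered servers."""

    def __init__(self):
        self._factories = {}  # how to (re)create each server
        self._servers = {}    # the currently running server instances
        self.restarts = {}    # restart count per server

    def register(self, name, factory):
        self._factories[name] = factory
        self._servers[name] = factory()
        self.restarts[name] = 0

    def send(self, name, message):
        # Copying the message models the cost of crossing address spaces.
        request = copy.deepcopy(message)
        try:
            return self._servers[name](request)
        except Exception:
            # Only the failed server is restarted; the others keep running.
            self._servers[name] = self._factories[name]()
            self.restarts[name] += 1
            return None


def make_file_server():
    """Create a user-space 'file server' holding its own private state."""
    files = {}

    def handle(msg):
        if msg["op"] == "write":
            files[msg["path"]] = msg["data"]
            return "ok"
        if msg["op"] == "read":
            return files.get(msg["path"])
        raise ValueError("unknown operation")

    return handle


if __name__ == "__main__":
    kernel = Microkernel()
    kernel.register("fs", make_file_server)
    kernel.send("fs", {"op": "write", "path": "/a", "data": "x"})
    print(kernel.send("fs", {"op": "read", "path": "/a"}))
    kernel.send("fs", {"op": "bogus"})  # the server fails and is restarted
    print(kernel.restarts["fs"])
```

In this model a restarted server loses its in-memory state, which is one reason real designs keep persistent state outside the server or rebuild it on restart.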

Some examples of microkernels:

- AIX

- The L4 microkernel family

- The Mach microkernel, used in GNU Hurd and in Mac OS X

- BeOS

- Minix

- MorphOS

- QNX

- RadiOS

- VSTa

- Hurd

Monolithic kernels versus microkernels

Monolithic kernels are often preferred over microkernels because of the lower level of complexity involved in keeping all system control code in a single address space. For example, XNU, the kernel of Mac OS X, combines the Mach 3.0 kernel and FreeBSD code in the same address space to reduce the latency associated with conventional microkernel design.

In the early 1990s, monolithic kernels were considered obsolete. The design of Linux as a monolithic kernel rather than a microkernel was the subject of a famous dispute between Linus Torvalds and Andrew Tanenbaum. The arguments on both sides in this discussion present some interesting motivations.

Monolithic kernels are usually easier to design correctly, and therefore can grow faster than a microkernel-based system, but there are successes on both sides. Microkernels are often used in embedded robotics or medical computers, since most operating system components reside in their own private, protected memory space. This would not be possible with monolithic kernels, even modern ones that allow loading of kernel modules.

Although Mach is the best known generalist microkernel, other microkernels have been developed for more specific purposes. L3 was created to show that microkernels are not necessarily slow. The L4 family of microkernels is the descendant of L3, and one of its latest implementations, called Pistachio, allows Linux to run concurrently with other processes, in separate address spaces.

QNX is an operating system that has been around since the early 1980s, and it has a very minimalist microkernel design. This system has managed to reach the goals of the microkernel paradigm much more successfully than Mach. It is used in situations where software glitches cannot be allowed, including everything from robotic arms on spaceships to glass grinding machines where a small mistake could cost a lot of money.

Many people believe that because Mach basically failed to solve the set of problems that microkernels were trying to fix, all microkernel technology is useless. Mach's supporters claim that this is a narrow-minded attitude that has become popular enough for many people to accept it as true.

Hybrid kernels (modified microkernels)

Hybrid kernels are essentially microkernels that have some "non-essential" code in kernel space so that it runs faster than it would if it were in user space. This was a compromise that many developers of early microkernel-based architecture operating systems made before it was shown that microkernels can perform very well. Most modern operating systems fall into this category, with the most popular being Microsoft Windows. XNU, the kernel of Mac OS X, is also a modified microkernel, due to the inclusion of FreeBSD kernel code in the Mach-based kernel. DragonFlyBSD is the first BSD system to adopt a hybrid kernel architecture without relying on Mach.

Some examples of hybrid kernels:

- Microsoft Windows NT, used in all systems that use Windows NT base code.

- XNU (used on Mac OS X)

- DragonFlyBSD

- ReactOS

Some people confuse the term hybrid kernel with monolithic kernels that can load modules after boot, which is a mistake. Hybrid implies that the kernel in question uses architectural concepts or mechanisms from both the monolithic and microkernel designs, specifically message passing and the migration of non-essential code into user space, while keeping some non-essential code in the kernel itself for performance reasons.

Exokernels

Exokernels, also known as vertically structured operating systems, represent a radically new approach to operating system design.

The underlying idea is to let the developer make all decisions regarding the performance of the hardware. Exokernels are extremely small, since they expressly limit their functionality to protection and multiplexing of resources. They are so called because the functionality no longer resides in the kernel but outside it, in dynamic libraries.

Classic kernel designs (both monolithic and microkernel) abstract the hardware, hiding resources under a hardware abstraction layer, or behind device drivers. In classical systems, if physical memory is allocated, no one can be sure of its actual location, for example.

The purpose of an exokernel is to allow an application to request a specific region of memory, a specific disk block, and so on, and simply to ensure that the requested resources are available and that the program has the right to access them.
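The division of labour described here can be sketched as a toy model in which the kernel tracks only who owns each disk block and checks access rights, leaving any file-like abstraction to the application or its library. The class name Exokernel and its methods are hypothetical and are not modelled on any real exokernel interface.

```python
class Exokernel:
    """Toy exokernel: protection and multiplexing only, no abstractions."""

    def __init__(self, num_blocks):
        self._owner = [None] * num_blocks  # which application owns each block
        self._disk = [b""] * num_blocks    # raw block contents

    def allocate(self, app, block):
        """Grant a specific block if it exists and is free."""
        if not 0 <= block < len(self._owner):
            raise IndexError("no such block")
        if self._owner[block] is not None:
            return False  # already owned by some application
        self._owner[block] = app
        return True

    def _check(self, app, block):
        # The only policy the kernel enforces: does this app own the block?
        if not 0 <= block < len(self._owner) or self._owner[block] != app:
            raise PermissionError(f"{app} may not access block {block}")

    def write(self, app, block, data):
        self._check(app, block)
        self._disk[block] = data

    def read(self, app, block):
        self._check(app, block)
        return self._disk[block]


if __name__ == "__main__":
    kernel = Exokernel(num_blocks=8)
    print(kernel.allocate("editor", 3))    # editor asks for block 3 by number
    print(kernel.allocate("database", 3))  # the same block is no longer free
    kernel.write("editor", 3, b"raw bytes")
    print(kernel.read("editor", 3))
```

Unlike a classical kernel, the application names the exact block it wants; the kernel neither chooses the location nor interprets the contents.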

Because the exokernel only provides an interface to very low-level hardware, lacking all the high-level functionality of other operating systems, it is supplemented by an operating system library. This library communicates with the underlying exokernel, and provides application developers with functionality that is common to other operating systems.

Some of the theoretical implications of an exokernel system are that it is possible to have different types of operating systems (e.g. Windows, Unix) running on a single exokernel, and that developers can choose to drop or add functionality for performance reasons.

Currently, exokernel designs are mostly at the research stage and are not used in any popular systems. One such operating system concept is Nemesis, created by the University of Cambridge, the University of Glasgow, Citrix Systems, and the Swedish Institute of Computer Science. MIT has also designed some exokernel-based systems. Exokernels are structured differently from other kernels because they also fulfil different functions.
