Automatic translation

Machine translation (abbreviated TA in Spanish, or MT from the English machine translation) is an area of computational linguistics that investigates the use of software to translate text or speech from one natural language to another. At its most basic, MT simply substitutes the words of one language for those of another, but this procedure rarely produces a good translation, since there is no one-to-one correspondence between the lexicons of different languages.

The use of corpus linguistics together with statistical and neural techniques is a fast-growing field that yields higher-quality translations; these approaches take into account differences in linguistic typology, the translation of idiomatic expressions and the isolation of anomalies.

Today, machine translation software often allows adjustments for a specialized field (for example, weather forecasts or press releases), which produces better results. This technique is especially effective in environments where formulaic language is used. In other words, translating legal or administrative documents by computer tends to be more productive than translating conversations or other non-standardized texts.

Some systems achieve higher quality by offering specific avenues of human intervention; for example, they give the user the ability to identify proper nouns included in the text. With the help of these techniques, machine translation is a very useful tool for translators, and in certain cases it can even produce usable results without the need for modification.

Statistical techniques

In recent decades, there has been a strong push toward statistical techniques in the development of machine translation systems. Applying these techniques to a given language pair requires a parallel corpus for that pair. From this corpus, the parameters of statistical models are estimated; these models establish the probability with which certain words are translated by others, as well as the most probable positions that target-language words tend to occupy given the corresponding words of the source sentence. The appeal of these techniques is that a system for a given language pair can be developed largely automatically, with very little need for expert work by linguists.
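As a minimal sketch of how word-translation probabilities can be estimated from a parallel corpus, the following uses the classic IBM Model 1 expectation-maximization procedure. The text does not name a specific model, so this choice is an assumption made for illustration, and the three-sentence Spanish-English corpus is invented. The sketch covers only the lexical probabilities, not the word-position component mentioned above.

```python
from collections import defaultdict

def train_ibm_model1(parallel_corpus, iterations=10):
    """Estimate t(target_word | source_word) with EM (IBM Model 1, no NULL word)."""
    target_vocab = {w for _, tgt in parallel_corpus for w in tgt}
    uniform = 1.0 / len(target_vocab)
    t = defaultdict(lambda: uniform)  # keyed by (target_word, source_word)

    for _ in range(iterations):
        count = defaultdict(float)  # expected counts for each word pair
        total = defaultdict(float)  # expected counts for each source word
        # E-step: distribute each target word over the source words of its sentence.
        for src, tgt in parallel_corpus:
            for tw in tgt:
                norm = sum(t[(tw, sw)] for sw in src)
                for sw in src:
                    frac = t[(tw, sw)] / norm
                    count[(tw, sw)] += frac
                    total[sw] += frac
        # M-step: re-estimate the probabilities from the expected counts.
        for (tw, sw), c in count.items():
            t[(tw, sw)] = c / total[sw]
    return t

# Toy parallel corpus, invented for illustration (Spanish -> English).
corpus = [
    ("la casa".split(), "the house".split()),
    ("la casa verde".split(), "the green house".split()),
    ("la flor".split(), "the flower".split()),
]
t = train_ibm_model1(corpus)

def best_translation(source_word):
    """Return the target word with the highest estimated probability."""
    candidates = {tw: p for (tw, sw), p in t.items() if sw == source_word}
    return max(candidates, key=candidates.get)

print(best_translation("casa"))
```

No linguistic rules are written by hand here: the association between "casa" and "house" emerges only from the co-occurrence statistics of the corpus, which is the appeal described above.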

Human intervention can improve the quality of the output: for example, some systems translate more accurately if the user has previously identified the words that are proper nouns. With the help of these techniques, computer translation has proved to be a useful aid to human translators. However, even though in some cases they can produce results that are usable "as is", current systems cannot match the quality of a human translator, particularly when the text to be translated uses colloquial or familiar language. On the other hand, human translations also contain errors. In response, there have been recent developments in automatic translation correction, such as the SmartCheck functionality of the translation company Unbabel, which is based on machine learning.

Interactive-predictive statistical techniques

Along these lines, statistical computer-assisted translation techniques based on an interactive-predictive approach, in which the computer and the human translator work in close collaboration, have recently attracted special interest. Based on the source text to be translated, the system offers suggestions for possible translations into the target language. If one of these suggestions is acceptable, the user selects it; otherwise, the user corrects whatever is necessary until a correct fragment is obtained. From this validated fragment, the system produces better predictions. The process continues in this way until a translation fully acceptable to the user is obtained. According to evaluations carried out with real users in the TransType-2 project, this process significantly reduces the time and effort required to obtain quality translations.
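The interaction loop described above can be sketched as follows. This is a simplified illustration, not the TransType-2 implementation: it assumes the system already holds a scored list of candidate translations (the hypothetical `hypotheses` below), and it simulates a user who accepts the correct part of each suggestion and types one word to fix the first error.

```python
def suggest_completion(hypotheses, prefix):
    """Return the rest of the best-scoring hypothesis that starts with the prefix."""
    compatible = [(words, score) for words, score in hypotheses
                  if words[:len(prefix)] == prefix]
    if not compatible:
        return None
    best_words, _ = max(compatible, key=lambda pair: pair[1])
    return best_words[len(prefix):]

def interactive_session(hypotheses, reference):
    """Simulate the loop and count how many words the user has to type."""
    prefix, corrections = [], 0
    while prefix != reference:
        suggestion = suggest_completion(hypotheses, prefix) or []
        # The user accepts the part of the suggestion that is already correct...
        for word in suggestion:
            if len(prefix) < len(reference) and word == reference[len(prefix)]:
                prefix.append(word)
            else:
                break
        # ...and types one word to correct the first error, if any remains.
        if prefix != reference:
            prefix.append(reference[len(prefix)])
            corrections += 1
    return corrections

# Hypothetical candidate translations with scores, invented for illustration.
hypotheses = [
    ("the green house is big".split(), 0.5),
    ("the green house is large".split(), 0.3),
    ("the house green is big".split(), 0.2),
]
reference = "the green house is large".split()
print(interactive_session(hypotheses, reference))
```

In this toy session the user types a single word instead of five, which is the kind of saving in effort the approach aims for.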

Translation as a problem

Translation is today the main bottleneck of the information society, and its mechanization represents an important advance against the problem of information overload and the need for communication across languages.

The first noteworthy computer developments were made on the famous ENIAC computer in 1946. Among the pioneering researchers we must mention Warren Weaver, of the Rockefeller Foundation. It was he who made the discipline publicly known, anticipating possible scientific methods for approaching it: the use of cryptographic techniques, the application of Shannon's theorems and the utility of statistics, as well as the possibility of exploiting the underlying logic of human language and its apparent universal properties.

Current state

Currently, high levels of quality are obtained for translations between Romance languages (Spanish, Portuguese, Catalan, Galician and others). However, the results worsen notably the more typologically distant the languages are from each other, as is the case for translations between Spanish and English or German. This situation is not static: translation technology is improving day by day.

Another highly influential factor in quality is the degree of specialization of the translation systems, which improve as they adapt to the type of text and vocabulary to be translated. A system that specializes in the translation of weather forecasts will achieve an acceptable quality even for translating texts between languages that are typologically very different, but it will be useless for addressing, for example, sports or financial reports. A production system using machine translation will also incorporate technologies such as language detection, domain/subject detection, and automatic vocabulary generation.

Traditional translation

Translating has traditionally been an art and a craft that requires talent and dedication. A common criticism of the shift in the translation paradigm rests on the belief that computers merely substitute each word with its equivalent in another language. However, MT systems in production integrate different linguistic technologies that go far beyond translating word for word. A linguistic analysis of a text yields information about morphology (the way words are built from small units carrying meaning), syntax (the structure of a sentence) and semantics (meaning), which is certainly useful for translation tasks. There are also issues of style and of discourse or pragmatics to consider.

Ambiguity and disambiguation

Ambiguity is not always resolved correctly by humans either: a human translator can misunderstand an ambiguous phrase or word. In favor of the computational approach, one can point to disambiguation algorithms such as those that, for example, Wikipedia uses to differentiate pages with the same or very similar titles.

Phrase-based statistical methods

The best machine translation results come from phrase-based statistical methods, which translate without regard to grammatical considerations. Currently, the trend is to integrate all kinds of methodologies (linguistic, rule-based, post-editing and so on), but the main component, as in most technologies that use large amounts of data (Big Data), is machine learning.

History of machine translation

17th century: Descartes

The idea of machine translation can be traced back to the 17th century. In 1629, René Descartes proposed a universal language, with equivalent ideas in different languages sharing the same symbol.

1950s: Georgetown Experiment

In the 1950s, the Georgetown experiment (1954) involved a fully automatic translation of more than sixty sentences from Russian into English. The experiment was a complete success and marked the beginning of an era of significant funding for research into technologies that would enable machine translation. The authors claimed that within three to five years machine translation would be a solved problem.

World War

The world was coming out of a world war that, on a scientific level, had encouraged the development of computational methods for deciphering coded messages. Weaver is credited with saying: "When I see an article written in Russian, I say to myself: this is actually in English, albeit encoded with strange symbols. Let's decode it right now!" (cited by Barr and Feigenbaum, 1981). It goes without saying that both the computers and the programming techniques of those years were very rudimentary (programming was done by wiring boards in machine language), so the real possibilities of testing the methods were minimal.

1960-1980: ALPAC report and statistical machine translation

Actual progress was much slower. Funding for research was cut considerably after the ALPAC report (1966), which found that ten years of research had not lived up to expectations. Starting in the late 1980s, computing power increased and became less expensive, and interest in statistical models for machine translation grew.

A. D. Booth, Birkbeck College and texts in Braille

The idea of using digital computers for the translation of natural languages was proposed as early as 1946 by A. D. Booth, and possibly by others as well. The Georgetown experiment was by no means the first such application. A demonstration of a rudimentary translation from English to French was given in 1954 on the APEXC machine at Birkbeck College (University of London). At that time, several research papers on the subject were published, including articles in popular magazines (see, for example, Wireless World, September 1955, Cleave and Zacharov). A similar application, also pioneered at Birkbeck College at the time, was the computer reading and composition of Braille text.

John Hutchins

An essential reference for learning more about the evolution of machine translation is the British academic John Hutchins, whose bibliography can be freely consulted on the Internet. The main article follows Jonathan Slocum's simplified scheme, which covers the history of machine translation decade by decade.

Types of machine translation

Given enough information, machine translation can work quite well, allowing speakers of one language to get an idea of what someone else has written in another. The main problem lies in obtaining the appropriate information for each of the translation methods.

According to their approach, machine translation systems can be classified into two large groups: those based on linguistic rules on the one hand, and those that use textual corpora on the other.

Rule-based machine translation

Rule-based machine translation consists of performing transformations on the original, replacing words with their most appropriate equivalents. The set of transformations of this type applied to the original text is called pre-editing.

For example, some common rules for English are:

- Use short sentences (no more than 20 words).

- Avoid coordinating multiple sentences.

- Insert determiners whenever possible.

- Insert that, which, in order to in subordinate clauses whenever possible.

- Avoid pronouns or anaphoric expressions (it, them...).

- Rewrite when, while, before and after followed by -ing.

- Rewrite if, where, when followed by a past participle.

- Avoid the use of phrasal verbs.

- Repeat the noun when it is modified by two or more adjectives.

- Repeat prepositions when coordinating prepositional phrases.

- Rewrite noun compounds of more than three nouns.
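Some of the rules above lend themselves to automatic checking before a text is sent to the translation system. The following is a minimal sketch covering only two of them (sentence length and pronouns); the function name, the word pattern and the pronoun set are assumptions made for illustration, not part of any particular pre-editing tool.

```python
import re

MAX_WORDS = 20              # limit taken from the first rule in the list
PRONOUNS = {"it", "them"}   # illustrative set; a real checker would need more

def check_sentence(sentence):
    """Return a list of pre-editing warnings for one English sentence."""
    words = re.findall(r"[A-Za-z'-]+", sentence)
    warnings = []
    if len(words) > MAX_WORDS:
        warnings.append(f"too long: {len(words)} words (limit {MAX_WORDS})")
    found = sorted({w.lower() for w in words} & PRONOUNS)
    if found:
        warnings.append("pronouns to replace: " + ", ".join(found))
    return warnings

print(check_sentence("Remove it before cleaning them."))
print(check_sentence("Remove the filter before cleaning the blades."))
```

The second sentence passes both checks because the pronouns have been replaced by the nouns they refer to, which is the kind of rewriting the rules ask for.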

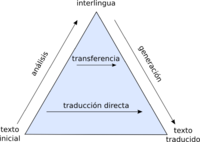

In general, a text is first analyzed, usually producing an internal symbolic representation. Depending on how abstract this representation is, different approaches can be distinguished: from direct systems, which basically translate word for word, to interlingua systems, which use a complete intermediate representation.

Transfer

In transfer-based translation, the analysis of the original plays a more important role and yields an internal representation that serves as a link for translating between different languages.

Intermediate Language

Machine translation from an intermediate language is a particular case of rule-based machine translation. The source text to be translated is transformed into an intermediate language whose structure is independent of both the source language and the target language. The text in the target language is then obtained from the representation of the text in the intermediate language. In general, this intermediate language is called an "interlingua".
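The two steps described above (analysis into an intermediate representation, then generation from it) can be sketched with a toy example. Everything here is hypothetical: the concept labels, the tiny lexicons and the assumption that every sentence has the form determiner, noun, verb, noun. A real interlingua would need a far richer representation.

```python
# Hypothetical lexicons mapping words to language-neutral concept labels.
LEXICON = {
    "es": {"gato": "CAT", "come": "EAT", "pescado": "FISH"},
    "en": {"cat": "CAT", "eats": "EAT", "fish": "FISH"},
}
DETERMINER = {"es": "el", "en": "the"}

def analyze(sentence, lang):
    """Source text -> intermediate representation (assumes 'det noun verb noun')."""
    _, subject, verb, obj = sentence.lower().split()
    lexicon = LEXICON[lang]
    return {"predicate": lexicon[verb], "agent": lexicon[subject], "patient": lexicon[obj]}

def generate(meaning, lang):
    """Intermediate representation -> target text."""
    words = {concept: word for word, concept in LEXICON[lang].items()}
    parts = [DETERMINER[lang], words[meaning["agent"]],
             words[meaning["predicate"]], words[meaning["patient"]]]
    return " ".join(parts)

meaning = analyze("El gato come pescado", "es")
print(meaning)
print(generate(meaning, "en"))
```

Because `generate` never looks at the source sentence, adding a new target language in this sketch only requires a new lexicon and generator, not a new module for each language pair.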

Corpus-based machine translation

Machine translation from a linguistic corpus is based on the analysis of real samples together with their respective translations. Approaches that use corpora include statistical and example-based methods.

Statistical

The objective of statistical machine translation is to generate translations using statistical methods derived from corpora of bilingual texts, such as the proceedings of the European Parliament, which are translated into all the official languages of the EU. As multilingual text corpora are generated and analyzed, results improve iteratively when translating texts from similar domains.

The first statistical machine translation program was Candide, developed by IBM. Google used SYSTRAN services for a few years, but since October 2007 it has been using its own statistics-based machine translation technology. In 2005, Google improved its translation capabilities by analyzing 200 billion words of United Nations documents.

The progress of machine translation is not an isolated phenomenon. Information technologies as a whole are advancing exponentially, thanks in large part to disciplines such as machine learning, artificial intelligence and statistics, which, nourished by Big Data and Big Language, have given remarkable results in language recognition, text-to-speech synthesis and real-time speech translation.

Example-based

Example-based machine translation is characterized by the use of a bilingual corpus as the main source of knowledge at run time. It is essentially translation by analogy and can be interpreted as an implementation of the case-based reasoning used in machine learning, which consists of solving a problem on the basis of the solutions to similar problems.
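The retrieval step of translation by analogy can be sketched as a nearest-neighbor search over a bilingual example base. This is only an illustration: the example pairs are invented, the similarity measure (word-level `difflib.SequenceMatcher`) is an arbitrary choice, and a full example-based system would go on to adapt the retrieved translation rather than return it unchanged.

```python
from difflib import SequenceMatcher

# Tiny bilingual example base, invented for illustration (English -> Spanish).
EXAMPLES = [
    ("how much is that red umbrella", "cuánto cuesta ese paraguas rojo"),
    ("where is the station", "dónde está la estación"),
    ("i would like a coffee", "quisiera un café"),
]

def retrieve_closest_example(sentence, examples=EXAMPLES):
    """Return the stored (source, target) pair most similar to the sentence."""
    def similarity(pair):
        # Compare word sequences rather than characters.
        return SequenceMatcher(None, sentence.split(), pair[0].split()).ratio()
    best = max(examples, key=similarity)
    return best, similarity(best)

best, score = retrieve_closest_example("where is the airport")
print(best, score)
```

For the query "where is the airport", the closest stored case is "where is the station"; an adaptation step would then only need to replace the translation of the one word that differs.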

Context-based machine translation

Context-based machine translation looks for the best translation of a word by examining the words that surround it. Basically, the method treats the text in units of between 4 and 8 words: each word in a unit is replaced by its translation into the target language, and candidate translations that produce a meaningless "phrase" are discarded. The window is then moved one position (one word), most of the words are retranslated and the candidates are filtered again, leaving only the coherent phrases. This step is repeated for the entire text, and the results of the windows are then concatenated to obtain a single translation of the text.

The filter that decides whether a phrase is meaningful uses a corpus of the target language, in which the number of occurrences of the candidate phrase is counted.

It is, therefore, a method based on fairly simple ideas that offers very good results compared to other methods.
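The sliding-window procedure and its corpus-based filter can be sketched as follows. This is a simplified illustration under strong assumptions: the dictionary and the monolingual corpus are invented, translation is strictly word for word with the same word order in both languages, and the candidate with the highest corpus count is kept (a fuller system would discard candidates that never occur and handle reordering).

```python
from itertools import product

def count_occurrences(phrase, corpus_tokens):
    """Count how many times the word sequence appears in the target corpus."""
    n = len(phrase)
    return sum(1 for i in range(len(corpus_tokens) - n + 1)
               if corpus_tokens[i:i + n] == phrase)

def translate(source_words, dictionary, corpus_tokens, window=4):
    """Slide a window over the text, keeping the most frequent candidate phrase."""
    window = min(window, len(source_words))
    output = []
    for start in range(len(source_words) - window + 1):
        options = [dictionary[w] for w in source_words[start:start + window]]
        candidates = [list(c) for c in product(*options)]
        # Keep only candidates that agree with the words chosen by earlier windows.
        overlap = output[start:]
        candidates = [c for c in candidates if c[:len(overlap)] == overlap]
        best = max(candidates, key=lambda c: count_occurrences(c, corpus_tokens))
        output = output[:start] + best
    return output

# Hypothetical bilingual dictionary and monolingual target corpus.
DICTIONARY = {
    "mi": ["my"], "banco": ["bank", "bench"], "abre": ["opens"],
    "muy": ["very"], "temprano": ["early", "soon"],
}
CORPUS = "my bank opens very late on mondays but the bank opens very early on fridays".split()

print(" ".join(translate("mi banco abre muy temprano".split(), DICTIONARY, CORPUS)))
```

Here the ambiguous word "banco" is resolved to "bank" rather than "bench" only because "my bank opens very" occurs in the target-language corpus and "my bench opens very" does not; no translated text is needed for the filter.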

A further advantage is the ease of adding new languages, since all that is needed is:

- a good dictionary, which can be any commercial version adapted with grammatical rules so that it includes conjugated verbs and nouns/adjectives with their variations in number and gender, and

- a corpus in the target language, which can be taken from the Internet, for example, and need not be translated in any part, unlike the corpora used in statistical methods.

Machine translation in Spain

Research in Spain has gone through three important stages. It began in 1985 with a sudden surge of interest, a year before Spain's entry into the European Community, when three transnational companies (IBM, Siemens and Fujitsu) financed the creation of various research groups. Paradoxically, 1992, the year of the fifth centenary of the discovery of America and of the Olympic Games in Barcelona, was also the year in which this first period drew to a close.

First IBM and then Siemens formed R&D groups in their laboratories in Madrid and Barcelona in 1985, led by Luis de Sopeña and Montserrat Meya, respectively. IBM used the Artificial Intelligence Research Center of the Autonomous University of Madrid as the headquarters of a team specializing in natural language. This team first took part in the design of the Mentor prototype, together with another IBM center in Israel, and later in the Spanish adaptation of LMT, a system designed at the T.J. Watson Research Center in the United States. According to the group's publications in the journal Natural Language Processing, between 1985 and 1992 at least the following specialists worked on IBM projects: Teo Redondo, Pilar Rodríguez, Isabel Zapata, Celia Villar, Alfonso Alcalá, Carmen Valladares, Enrique Torrejón, Begoña Carranza, Gerardo Arrarte and Chelo Rodríguez.

For its part, Siemens decided to move the development of the Spanish module of its prestigious Metal system to Barcelona. Montserrat Meya, who until then had worked at the Siemens central laboratories in Munich, contacted the philologist and engineer Juan Alberto Alonso, and together they formed the nucleus of a team in which a long list of collaborators would later participate: Xavier Gómez Guinovart, Juan Bosco Camón, Begoña Navarrete, Ramón Fanlo, Clair Corbishley, Begoña Vázquez and others. After 1992 the group dedicated to linguistic projects became an independent company, Incyta. Following an agreement with the Generalitat of Catalonia and the Autonomous University of Barcelona, the Catalan module was developed, which is now its main line of activity.

At the end of 1986, two new groups were created in Barcelona and Madrid, between which the development of the modules of the EUROTRA system, financed by the European Commission, was divided. Ramón Cerdá brought together a large group of specialists at the University of Barcelona, made up of, among others, Jesús Vidal, Juan Carlos Ruiz, Toni Badia, Sergi Balari, Marta Carulla and Nuria Bel. While this group dealt with syntax and semantics, another group in Madrid, led by Francisco Marcos Marín, was in charge of morphology and lexicography. His collaborators included, among others, Antonio Moreno, Pilar Salamanca and Fernando Sánchez-León.

A year later, in 1987, a fifth group was formed in the R&D laboratories of the Fujitsu company in Barcelona to develop the Spanish translation modules of the Japanese Atlas system. This group was led by the engineer Jorge Vivaldi and the philologists José Soler, from Eurotra, and Joseba Abaitua. Together they formed the embryo of a team that Elisabet Cayuelas, Lluis Hernández, Xavier Lloré and Ana de Aguilar-Amat later joined. The company discontinued this line of research in 1992.

Another group dedicated to machine translation in those years was the one formed by Isabel Herrero and Elisabeth Nebot at the University of Barcelona. This group, supervised by Juan Alberto Alonso, created an Arabic-Spanish translation prototype in collaboration with the University of Tunis.

It is clear that machine translation was the main catalyst for the birth of computational linguistics in Spain. It is no coincidence that the Spanish Society for Natural Language Processing (SEPLN) was established in 1983. Together with Felisa Verdejo, two other people stood out in its foundation: the aforementioned Montserrat Meya and Luis de Sopeña, who at that time led machine translation groups, as mentioned above. The third congress of the association (then still known as "jornadas técnicas") was held in July 1987 at the Polytechnic University of Catalonia, with two highlights on machine translation: a lecture by Sergei Nirenburg, then attached to the Center for Machine Translation at Carnegie Mellon University, and a round table with the participation of Jesús Vidal and Juan Carlos Ruiz (from Eurotra), Luis de Sopeña (from IBM), Juan Alberto Alonso (from Siemens), and Nirenburg himself.

Some statistics confirm the relevance of machine translation in the SEPLN between 1987 and 1991. During those years, of the 60 articles published in the association's journal, Natural Language Processing, 23 (more than a third) dealt with machine translation. The level of participation reflects the relevance of the groups: eight describe Eurotra, seven IBM research, four Metal (Siemens) and three Atlas (Fujitsu). Only one of the 23 published articles was unrelated to the four star projects. This was the one presented at the 1990 conference by Gabriel Amores, currently a researcher in the area of machine translation, with the results of his research at the UMIST Centre for Computational Linguistics. Some 35 people have been named here, a figure that gives an idea of the level of activity. As a rough estimate, in 1989 machine translation research in Spain had an annual budget of about 200 million pesetas, a figure that, however modest it may seem, is several times the amount handled in the country today, a decade later.

Since 1998, the Department of Computer Languages and Systems of the University of Alicante has been developing automatic translation systems between Romance languages: interNostrum, between Spanish and Catalan; Universia Translator, between Spanish and Portuguese, and, more recently, Apertium, an open source machine translation system developed in collaboration with a consortium of Spanish companies and universities, which currently translates between the languages of the Spanish State and other Romance languages.

Since 1994, ATLS has developed its corporate language platform that incorporates high-performance hybrid machine translation engines. The platform is completed by a set of components necessary to solve the multilingual and multiformat problems of large organizations.

In 2010, Pangeanic became the first company in the world to apply the Moses statistical translator in a commercial environment, developing a platform with self-learning, corpus cleanup and retraining together with the Instituto Técnico de Informática de Valencia (ITI) and the Pattern Recognition and Human Language Technology research group of the Universitat Politècnica de València. A founding member of TAUS, Pangeanic won the largest machine translation infrastructure contract for the European Commission with its iADAATPA project in 2017.

Machine translation resources

- Language Corpus

- Dictionaries

- Grammar

- Language industry

- Translation memories
